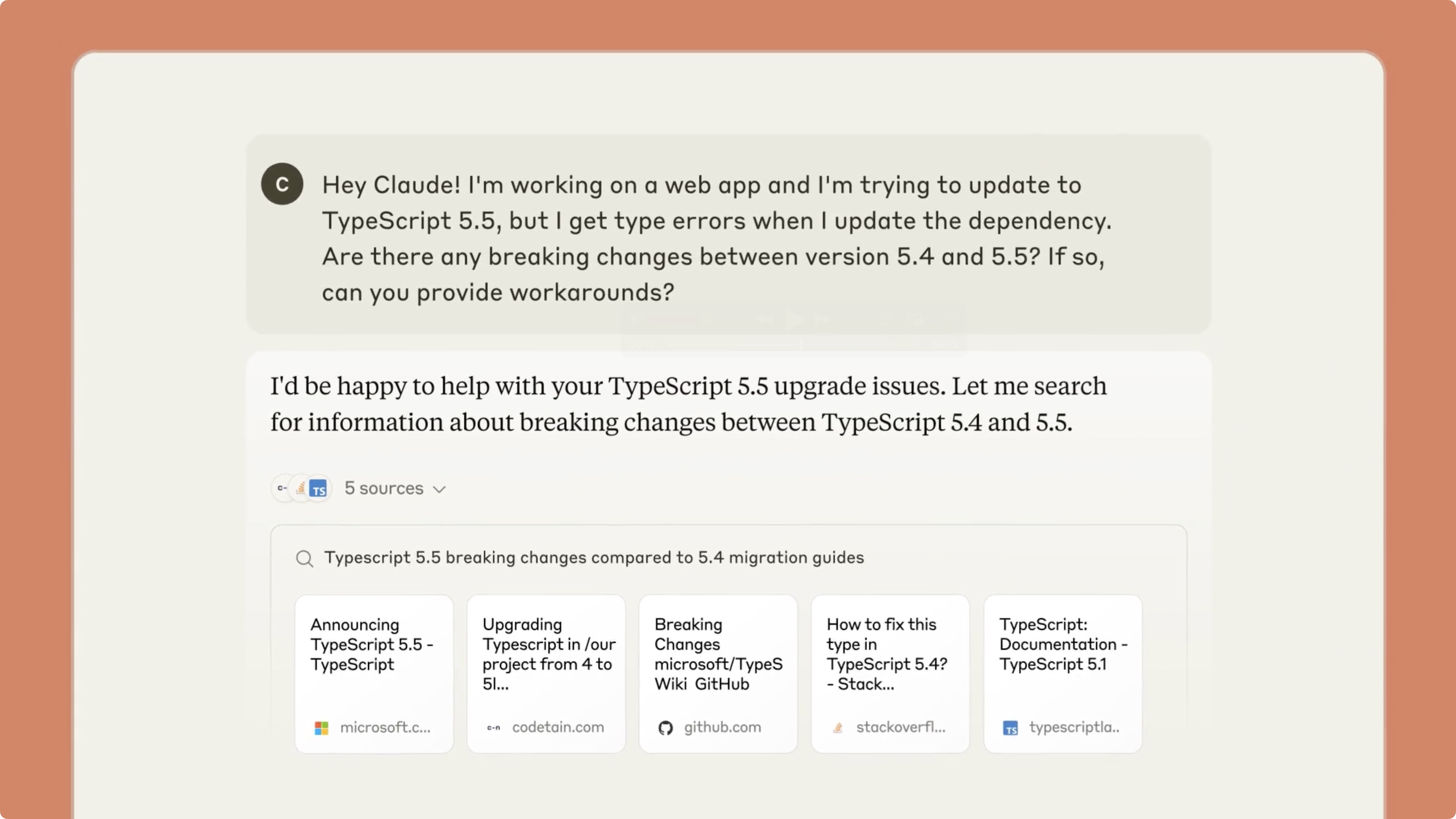

Anthropic has finally added web search capabilities to Claude 3.7 Sonnet, allowing the AI chatbot to access up-to-date information beyond its knowledge cutoff date of October 2024.

Announced on Thursday, the new feature lets Claude search the internet for current events and information, which should make it considerably more accurate when answering questions about recent developments.

When Claude draws on web search for a response, the interface displays clickable citations so you can verify sources and fact-check information without running separate searches.

The feature is currently available only to paying subscribers in the United States. Anthropic says it plans to roll out web search to free users and additional countries "soon," but the company gave no specific timeline.

Web search functionality has become a standard feature among leading AI chatbots. OpenAI began introducing ChatGPT Search to paying subscribers last fall and eventually made the feature available to all users – including those without a ChatGPT account – early last month.

Anthropic is pitching the web search function as particularly valuable for professionals across various fields. The company suggests sales teams can use it to analyze industry trends, financial analysts can assess current market data, and researchers can build stronger literature reviews by searching across primary sources.

For everyday users, the feature also promises to simplify comparison shopping by evaluating product features, prices, and reviews from multiple sources simultaneously.

Paying Claude users in the US can access web search by enabling the feature through their profile settings menu. The functionality is currently limited to Claude 3.7 Sonnet, which is Anthropic's first "hybrid reasoning model" capable of both quick responses and step-by-step problem solving.

Article Link: Anthropic's Claude Finally Gets Web Search, Months After ChatGPT