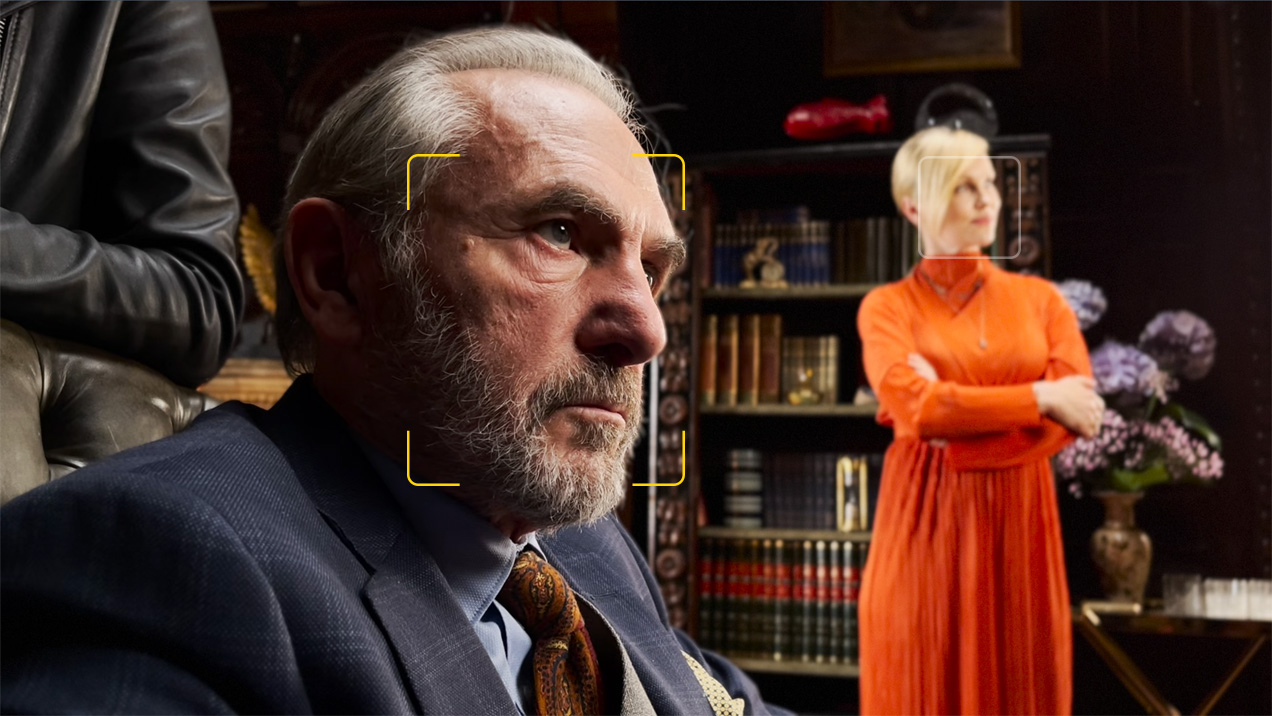

All four iPhone 13 models feature a new Cinematic mode that lets users record video with a shallow depth of field and automatic focus changes between subjects. TechCrunch's Matthew Panzarino spoke with Apple marketing executive Kaiann Drance and designer Johnnie Manzari to learn more about how the feature was developed.

Drance said Cinematic mode was more challenging to implement than Portrait mode for photos, given that rendering autofocus changes in real time is a heavy computational workload. The feature is powered by the A15 Bionic chip and the Neural Engine.

"We knew that bringing a high quality depth of field to video would be magnitudes more challenging [than Portrait Mode]. Unlike photos, video is designed to move as the person filming, including hand shake. And that meant we would need even higher quality depth data so Cinematic Mode could work across subjects, people, pets, and objects, and we needed that depth data continuously to keep up with every frame. Rendering these autofocus changes in real time is a heavy computational workload."

Manzari added that Apple's design team spent time researching the history of filmmaking and cinematography techniques for realistic focus transitions.

"When you look at the design process, we begin with a deep reverence and respect for image and filmmaking through history. We're fascinated with questions like what principles of image and filmmaking are timeless? What craft has endured culturally and why?"

Manzari said Apple observed directors of photography, camera operators, and other filmmaking professionals on sets to learn about the purpose of shallow depth of field in storytelling, which led Apple to realize the importance of guiding the viewer's attention.

The full interview goes into more detail about the work that went into Cinematic mode and highlights Panzarino's testing of the feature at Disneyland.
