Apple's new speech-to-text transcription APIs in iOS 26 and macOS Tahoe are delivering dramatically faster speeds compared to rival tools, including OpenAI's Whisper, based on beta testing conducted by MacStories' John Voorhees.

Call recording and transcription in iOS 18.1

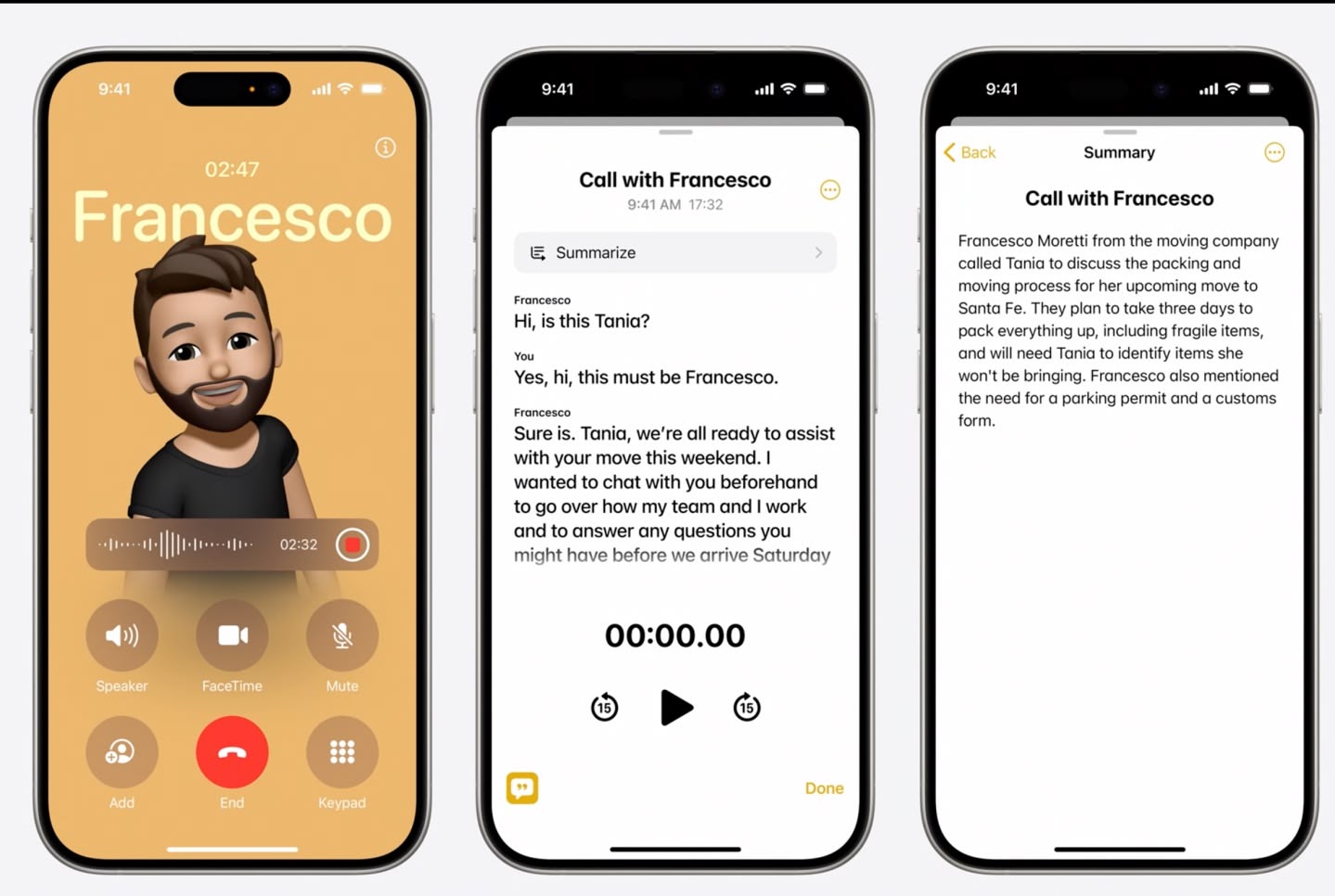

Apple uses its own native speech frameworks to power live transcription features in apps like Notes and Voice Memos, as well as phone call transcription in iOS 18.1. To improve efficiency in iOS 26 and macOS Tahoe, Apple has introduced a new SpeechAnalyzer class and SpeechTranscriber module that handle similar requests.

According to Voorhees, the new models processed a 34-minute, 7GB video file in just 45 seconds using a command line tool called Yap (developed by Voorhees' son, Finn). That's about 55% less time than MacWhisper's Large V3 Turbo model, which took 1 minute and 41 seconds for the same file, making Apple's models roughly 2.2 times as fast.

Other Whisper-based tools were slower still, with VidCap taking 1:55 and MacWhisper's Large V2 model requiring 3:55 to complete the same transcription task. Voorhees also reported no noticeable difference in transcription quality across models.
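For readers who want to check the arithmetic, the short Python sketch below recomputes the comparison from the four timings reported above. The dictionary labels, the `compare` function, and the "real-time factor" metric (video length divided by processing time) are illustrative choices made here, not anything taken from Voorhees' tests. Note that the 55% figure corresponds to time saved, which is a different number from the speed ratio.

```python
# Timings reported in the article, converted to seconds.
# The labels are illustrative; only the durations come from the article.
TIMINGS = {
    "Yap (Apple speech models)": 45,
    "MacWhisper Large V3 Turbo": 1 * 60 + 41,
    "VidCap": 1 * 60 + 55,
    "MacWhisper Large V2": 3 * 60 + 55,
}

VIDEO_SECONDS = 34 * 60  # length of the 34-minute test video


def compare(timings, baseline):
    """Compare every tool's processing time against the baseline tool."""
    base = timings[baseline]
    results = {}
    for tool, seconds in timings.items():
        results[tool] = {
            # How many times longer this tool took than the baseline.
            "times_slower": seconds / base,
            # Share of this tool's time that the baseline saves.
            "time_saved_pct": (1 - base / seconds) * 100,
            # Seconds of video processed per second of wall-clock time.
            "realtime_factor": VIDEO_SECONDS / seconds,
        }
    return results


results = compare(TIMINGS, "Yap (Apple speech models)")

for tool, row in results.items():
    print(
        f"{tool}: {row['times_slower']:.2f}x the baseline time, "
        f"baseline saves {row['time_saved_pct']:.0f}%, "
        f"{row['realtime_factor']:.1f}x real time"
    )
```

On these numbers, Apple's models finish in a bit under half the time of the fastest Whisper-based option tested, and the gap widens against the older Large V2 model.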

The speed advantage comes from Apple's on-device processing approach, which avoids the network overhead that typically slows cloud-based transcription services.

While the time difference might seem modest for individual files, Voorhees notes that the savings add up quickly when processing multiple videos or longer content. For anyone generating subtitles or transcribing lectures regularly, the efficiency boost could save hours.

The Speech framework components are available across iPhone, iPad, Mac, and Vision Pro platforms in the current beta releases. Voorhees expects Apple's transcription technology to eventually replace Whisper as the go-to solution for Mac transcription apps.

