Do you think a theoretical M5 Extreme's GPU could match the RTX 5090?

- Thread starter ProQuiz

There is no Extreme CPU from Apple.

Let's do some conjecturing.

If the 40-GPU-core M4 Max is close to an RTX 3090, and we assume the M5 Max keeps the same 40-core count with a 30% improvement, we're looking at a 4080-class, maybe a 4090-class, GPU.

The as-yet-unannounced M5 Ultra will conceivably have 2x the GPU cores, so we should see nearly double the performance, which does put us in the 5090 range.

This is just fast-and-loose napkin math, so don't hold me to it, given that we don't even have an M5 Pro, never mind a Max or Ultra.

Thanks.

You said the M5 Ultra could match the RTX 5090 but would that also be in Mac native games?

Yes, natively running apps or games, not using CrossOver. If you take the benchmarks for the existing Apple silicon chips, you can extrapolate a possible scenario where the M5 Ultra is on par with a 5090. Again, it's conjecture, since we don't have an M4 Ultra, never mind an M5 Ultra.

According to ChatGPT, an RTX 5080 (not RTX 5090) gets around double the fps at 4K native ultra settings in Resident Evil 4 or Village (can't remember which) when compared to an M3 Ultra. Does this bode well for the M5 Ultra to match the RTX 5090? ChatGPT could be wrong about the fps numbers though.

I would say it depends. For a lot of gaming titles, even native ones, I suspect an M5 Ultra might struggle to get close to a 5090's performance. But to give you a concrete example of one where it is very likely to get close: Blender (which is ray tracing + raster). Here are some illustrative scores to underpin a back-of-the-envelope calculation:

| Device | Blender GPU score |
| --- | --- |
| Apple M3 (GPU - 10 cores) | 919.06 |
| Apple M5 (GPU - 10 cores) | 1734.16 |
| Apple M3 Ultra (GPU - 80 cores) | 7493.24 |
| NVIDIA GeForce RTX 5090 | 14933.08 |

Based on this, scaling the M3 Ultra score by the M3-to-M5 ratio, the projected M5 Ultra score would be ~14138, roughly within 5% of a 5090. Now we don't know if the M5 Ultra will hit that (or do better, depending on Apple's design for the M5 Max/Ultra), but the Blender GPU benchmark ranks amongst Apple's best-performing GPU benchmarks. So again, in a lot of games, even native ones, an M5 Ultra may not hit this level of relative performance. But it is still likely to be closer than Apple has ever gotten before to a (near) contemporaneous top Nvidia GPU. It's also unknown when the M5 Ultra will launch; it could be a while, hopefully less time than the M3 Ultra took.
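For anyone who wants to redo the napkin math, here is a minimal Python sketch of that projection. It uses only the four scores quoted above, and the key assumption (mine, and labeled in the code) is that the Ultra tier scales from M3 to M5 by the same factor as the base chip did. That is a guess, not a known property of any unreleased chip.

```python
# Blender GPU scores quoted above (higher is better).
m3 = 919.06
m5 = 1734.16
m3_ultra = 7493.24
rtx_5090 = 14933.08

# ASSUMPTION: the Ultra tier gains the same generational factor as the base chip.
gen_uplift = m5 / m3
projected_m5_ultra = m3_ultra * gen_uplift

# How close the projection lands to the desktop 5090 score.
ratio_to_5090 = projected_m5_ultra / rtx_5090

print(f"M3 -> M5 uplift:       {gen_uplift:.2f}x")
print(f"Projected M5 Ultra:    {projected_m5_ultra:.0f}")
print(f"Relative to RTX 5090:  {ratio_to_5090:.1%}")
```

Swap in different scores (or a different uplift) to see how sensitive the "within 5%" conclusion is to the scaling assumption.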

Doubtful.

One is a dedicated GPU that costs thousands of dollars. The other is primarily a CPU, with integrated GPU cores for far less money.

Edit: I was just laughing after noticing that an RTX 5090 costs MORE than my MBP.

According to this article (https://tzakharko.github.io/apple-neural-accelerators-benchmark/), a hypothetical M5 Max GPU's theoretical performance is 18 FP32 TFLOPS. This assumes a GPU clock speed for the M5 GPU cores (which Apple does not publish) of 1750 MHz.

As "Extreme" is typically used, it means 2x Ultra = 4x Max.

This would give 4 x 18 FP32 TFLOPS = 72 FP32 TFLOPS for a hypothetical M5 Extreme. By comparison, a 5090 desktop is 105 FP32 TFLOPS (https://www.techpowerup.com/gpu-specs/geforce-rtx-5090.c4216).

So it appears the raw theoretical performance of a hypothetical M5 Extreme would be only ≈70% of a 5090 desktop.
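The arithmetic behind that ≈70% can be sketched in a few lines of Python. The 18 and 105 TFLOPS inputs are the figures cited above (the 18 itself rests on an assumed 1750 MHz clock); the perfect 2x/4x scaling across dies is an idealization for illustration, not something any shipping part guarantees.

```python
# Theoretical peak figures cited in this post; neither is a measurement.
m5_max_tflops = 18.0     # hypothetical M5 Max, assumes a 1750 MHz GPU clock
rtx_5090_tflops = 105.0  # desktop RTX 5090 (TechPowerUp listing)

# "Extreme" as commonly used: 2x Ultra = 4x Max.
# ASSUMPTION: peak FP32 scales perfectly with the number of Max-sized dies.
dies_per_tier = {"Max": 1, "Ultra": 2, "Extreme": 4}

tier_tflops = {tier: n * m5_max_tflops for tier, n in dies_per_tier.items()}
extreme_ratio = tier_tflops["Extreme"] / rtx_5090_tflops

for tier, tflops in tier_tflops.items():
    share = tflops / rtx_5090_tflops
    print(f"M5 {tier:<8} {tflops:5.0f} FP32 TFLOPS  ({share:.0%} of a 5090)")
```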

But even if so, such a difference wouldn't necessarily be reflected in their relative real-world performance scores. That depends on the particular optimizations used by Apple vs. NVIDIA, as well as the optimizations used by the software developers whose applications you are using for the comparison.

For instance, over the past few years it's been found that AAA games that are written natively for both AS and NVIDIA tend to run better on NVIDIA when evaluated using comparable GPUs. That's likely because developers have a much longer history optimizing AAA games for NVIDIA.

Unfortunately, you can't really compare raw FP32 TFLOPS across different architectures and come up with a relative theoretical performance that is meaningful. I don't even mean from the perspective that drivers, software optimization, etc. matter. Rather, even at a fundamental hardware level, the way different architectures access their FP32 units differs enough that this alone skews results past the point of utility.

I've done multiple posts about this, at least one of which I think was posted here and more at the other place. But the long and short of it is that for (pseudo-)"dual-issue" designs you have to effectively renormalize the TFLOPS. For 3000- and 4000-series Nvidia GPUs this meant dividing the TFLOPS by 2 and then multiplying by ~1.15-1.3 to get the actual effective TFLOPS improvement from the dual-issue design. Blackwell improved on this quite a bit thanks to its new architecture (which may not really be "dual-issue" anymore, but retains some characteristics of it): the uplift went to 1.2-1.5x over the older Nvidia designs which did not have double the FP32 pipes per core. Assuming a 40% uplift on average, a desktop 5090 would actually have an effective throughput of ... ~73 TFLOPS, about the same as a theoretical M5 Extreme.
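As a rough sketch of that renormalization in Python: halve the paper TFLOPS, then multiply by the dual-issue uplift. The function name is just for illustration, and the uplift values are the ranges quoted above rather than anything measured here.

```python
def effective_tflops(paper_tflops: float, uplift: float) -> float:
    """Renormalize a (pseudo-)dual-issue FP32 figure.

    Halve the advertised number (it counts both FP32 pipes), then apply the
    observed gain from the second pipe.
    """
    return paper_tflops / 2 * uplift


rtx_5090_paper = 105.0    # advertised FP32 TFLOPS, desktop 5090
m5_extreme_paper = 72.0   # 4 x 18, the hypothetical figure discussed in this thread

# Blackwell uplift range quoted above, plus the 40% midpoint assumption.
for uplift in (1.2, 1.4, 1.5):
    eff = effective_tflops(rtx_5090_paper, uplift)
    print(f"uplift {uplift:.1f}x -> {eff:5.1f} effective TFLOPS "
          f"(hypothetical M5 Extreme: {m5_extreme_paper:.0f})")
```

At the low end of the range the 5090 would land below the hypothetical Extreme figure and at the high end above it, which is part of why this sort of comparison is so loose.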

Then there are things that are more of a grey area, like FP32 vs. FP16 performance, where Apple chose to vastly increase the latter in the M5 (or even integer performance). I recognize that the point at which such issues switch from hardware capabilities to software optimizations is a matter of debate, but I would argue these are still in the realm of theoretical hardware performance. Historically, people focused solely on FP32 for simplicity (in fairness, because it is typically the most important) and didn't usually even consider FP16 and Int32, but we probably should've. Chips and Cheese, in its GPU hardware reviews, typically tries to measure ops on all of these in microbenchmarks (i.e. as close to theoretical performance as possible).

And now of course there are different hardware blocks like ray tracing, which are sadly harder to reason about in a theoretical sense and not always applicable depending on the software in question, and so unfortunately can't even be considered in the kind of quick comparison that TFLOPS are trying to provide.

However, again to give a real-world example: if the M5 Ultra (never mind an Extreme) scales relative to the M3 Ultra the same way the M5 did relative to the M3, then in Blender it should get within roughly 5% of the performance of the desktop 5090.

And again, that's not an Extreme; that's an M5 Ultra coming close to matching the 5090 (in theory). Of course this relies on the M5 Ultra scaling the same and, as both you and I have mentioned, this is not expected to be the case in gaming for lots of reasons.

We'll have to see what performance gains we get with TSMC's new packaging tech that the M5 Max and Pro are set to use.

TSMC's 'new' packaging tech is generally just the same as the packaging tech used for UltraFusion. The pads/connections are smaller, which means you can conceptually get more of them between dies. However, the primary performance impact you are going to get is that the two (or more) dies act like a monolithic die. It isn't going to bring performance in and of itself. The construction of something bigger than one Max-sized die is where the performance is coming from (not the connection).

Folks keep waving at this new tech as magical sprinkle power. The horizontal packaging variant simply allows Apple to do 'better' versions of the Ultras they have been doing. If Apple switches to smaller building blocks (chiplets), then the connectivity is just getting back to the performance that the monolithic "Max"-sized chips could deliver. It might end up more economical (if defect savings outweigh increased packaging costs), but that is more about 'costs' than 'performance'. The more expensive fab process might be more easily paid for that way. But again, the fab process is the core source of the performance enablement, not the connection.

Doubtful.

One is a dedicated GPU that costs thousands of dollars. The other is primarily a CPU, with integrated GPU cores for far less money.

Two disconnects from reality here (and 'dedicated' is an oddball adjective here).

The plain Mn or Mn Pro is perhaps a more even split. However, the Max is far closer to a GPU with some relatively modest CPU cores attached to it. The die area is dominated by the memory controller + system cache (SLC) + GPU cores (never mind the relatively large display controllers and fixed-function A/V logic, which a GUI operating system pragmatically needs and which is part of the GPU system in common terminology). The bandwidth demands of the system are baselined on the GPU core requirements, so leaving memory out is very dubious. Same issue with the SLC. And how would GPU results that no one can see 'work' for Mac users sitting in front of the system (display of graphics is a GUI requirement)?

Just 'eyeballing' that picture: start at the bottom of the die and go halfway up. At the halfway point imagine a horizontal line. That line slices through what?

But let us go with the percentages in the legend on the side:

35% ('GPU'), 10% (LPDDR5), 10% (SLC), 4% (Display Engines) => 59% of die (and we haven't even gotten to the fixed-function A/V stuff).

[ NOTE: the 'unattributed' 22% is almost twice as large as the CPU core total. ]

The Ultra is 2x a Max, so even more area is allocated to the GPU, in disproportionate measure.

Second, the nominal entry Mac Studio with a Max is $1,999. To step up to an Ultra is $3,999 (a gap of $2K). To get to the top-end Ultra (which would be the likely 5090 comparator) it is at least $1,500 more (the "top end" Ultra BTO add-on price). So now at least $3.5K more. The notion that Apple isn't selling 'thousands of dollars' GPU options just isn't true. Most Mac users don't buy them, but Apple has been selling them for the last 4 years and is extremely likely to continue doing so.

What Apple has not done is sell something like the "Extreme" (4x Max) as a $5-10K GPU BTO up-charge (the more exotic the packaging, the more non-linear the price increase is going to be). It's unlikely they would get many buyers for that, especially from the 5090 fanboy crowd, who are fixated on the $2K price and the legacy PCIe format at least as much as on performance.

Apple probably needs an effective memory bandwidth uplift more than it needs a huge amount of silicon area to compete with the 5090: a bigger cache (e.g. 3D cache) so that it gets closer to its theoretical max than the 5090 will, and a wider 'poor man's HBM' with more LPDDR throughput (they need to push, rather than trail, the bandwidth evolution across LPDDR generations). Bleeding-edge LPDDR is going to push up costs also (in addition to the capacity being far higher than the 5090's).

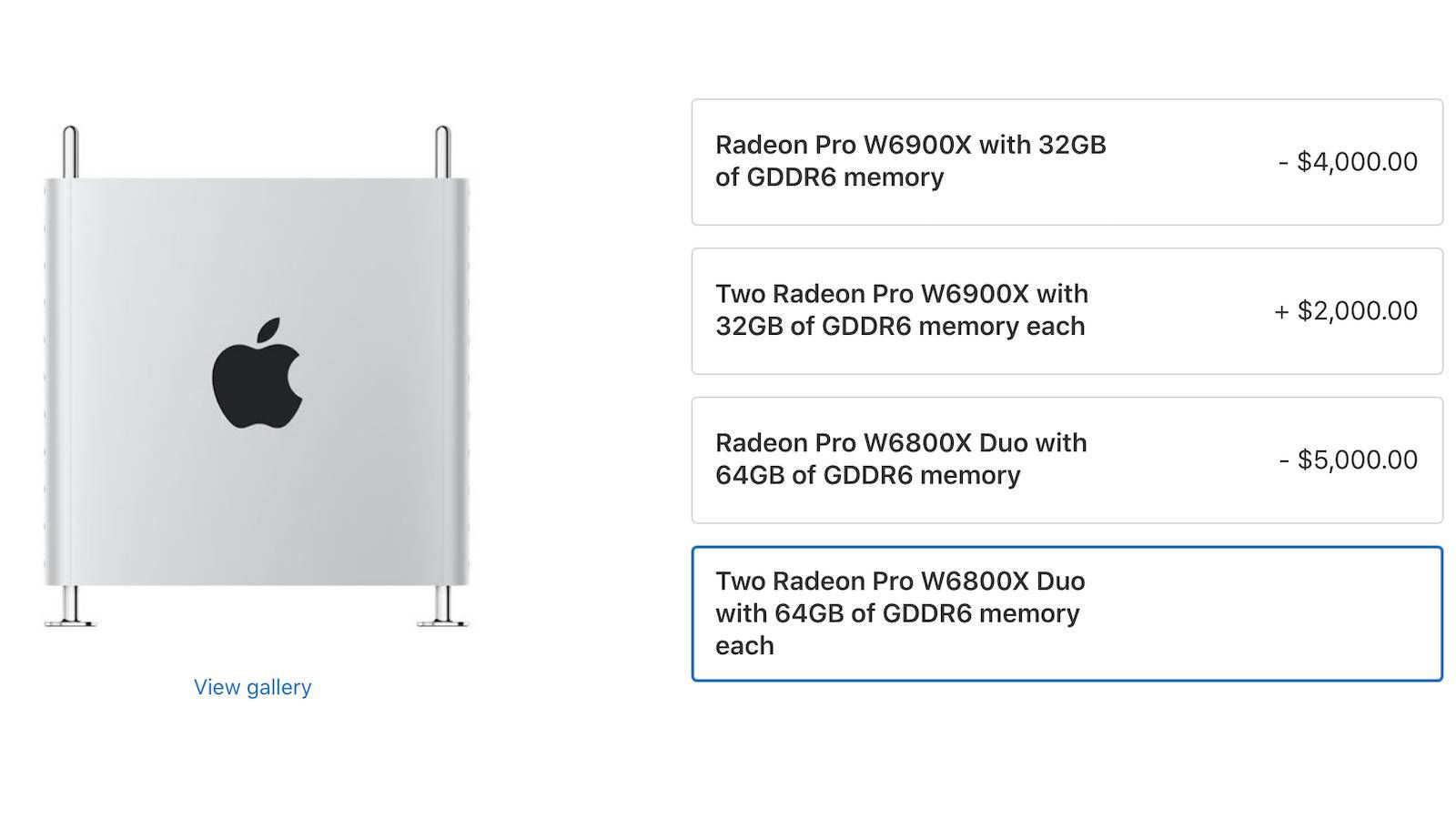

The top end of Apple's GPU-skewed lineup is expensive. Apple is not some kind of 'low cost' leader here with 'cheap' GPUs. They were not back in the Intel era either. MPX modules went for how much? Thousands...

Apple Introduces New High-End Graphics Options for Mac Pro

Apple really hasn't changed positions.

Edit: I was just laughing after noticing that an RTX 5090 costs MORE than my MBP.

Likely so does an Ultra Mac Studio.

P.S. The GPU cores in the Max are no less 'dedicated' to the GPU function than those in a discrete 'GPU'. The tasks they are assigned are effectively the same. A discrete card's die isn't 100% assigned to GPU cores either. No PCIe controller and it would be pragmatically useless. No display output... not particularly useful for a GUI operating system userbase. No audio, compressed video, DRM... oops... not usable by a normal end user.

P.P.S. The more tensor / matrix-multiply / 'FP extra small' / etc. support that Nvidia adds to its next-gen GPUs, the more die area is pragmatically taken away from 'pure' raster. Just about all of the GPU implementors are moving away from the baseline definition of a GPU from 20 years ago.

And again, that's not an Extreme, that's an M5 Ultra coming close to matching the 5090 (in theory). Of course this relies on the M5 Ultra scaling the same and, as aforementioned both by you and myself, this is not expected to be the case in gaming for lots of reasons.

The M5 likely will scale better than the M3. The 80 core version of the M3 Ultra didn't scale as a system. Likely runs out of memory.

| Device | Blender GPU score | Cores vs. base M3 |
| --- | --- | --- |
| Apple M3 Ultra (GPU - 80 cores) | 7494.03 | 8x |
| Apple M3 Ultra (GPU - 60 cores) | 6505.79 | 6x |
| Apple M3 Max (GPU - 40 cores) | 4238.72 | 4x |
| Apple M3 Max (GPU - 30 cores) | 3449.17 | 3x |
| Apple M3 Pro (GPU - 18 cores) | 1767.1 | 1.8x |
| Apple M3 (GPU - 10 cores) | 919.06 | 1x |

1.8 * 919 = 1654 ( versus 1767 ) (LPDDR5 clocked higher also)

3 * 919 = 2757 ( versus 3449 )

4 * 919 = 3676 ( versus 4238 )

6 * 919 = 5514 ( versus 6505 ) [ 2 * 2757 = 5514 , 2 * 3449 = 6898 ]

8 * 919 = 7352 ( versus 7494; undershoot compared to others. ) [ 2 * 4238 = 8476 ]

Indications are that the 80-core variant is bandwidth starved. The raw compute is there, but it just can't get data to the units fast enough. (The plain M3 has slower LPDDR5 memory, so it also isn't being fed fast enough. Also, some of this is UltraFusion getting 'old' relative to memory increases.)
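The same comparison can be tabulated with a short Python sketch. It takes the M3-family Blender scores listed above and computes two ratios: actual score versus a straight per-core extrapolation from the base M3, and each Ultra versus twice the Max it is built from. The variable names are mine; the numbers are only the ones from the table.

```python
# Blender GPU scores from the table above, keyed by GPU core count.
scores = {10: 919.06, 18: 1767.1, 30: 3449.17, 40: 4238.72, 60: 6505.79, 80: 7494.03}

per_core_base = scores[10] / 10  # score per core on the base M3

# Actual score relative to a straight per-core extrapolation from the base M3.
vs_linear = {cores: score / (per_core_base * cores) for cores, score in scores.items()}

# Each Ultra relative to twice the Max it is built from (1.0 = perfect doubling).
ultra_vs_2x_max = {
    60: scores[60] / (2 * scores[30]),
    80: scores[80] / (2 * scores[40]),
}

for cores, ratio in vs_linear.items():
    linear = per_core_base * cores
    print(f"{cores:>2} cores: actual {scores[cores]:8.2f}  linear {linear:8.2f}  ratio {ratio:.2f}")

for cores, ratio in ultra_vs_2x_max.items():
    print(f"{cores}-core Ultra vs 2x {cores // 2}-core Max: {ratio:.2f}")
```

On these numbers the 80-core part sits closest to the base-M3 line and furthest below a clean doubling of its Max, which is the undershoot being described.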

The M5 comes with faster LPDDR5 also. On the M3 iteration Apple wasn't that aggressive in following the bleeding edge of LPDDR (it could have traded off the 'unknown', more expensive fab process against more affordable LPDDR). The base M4 and M5 both came with uplifts in LPDDR clocks vs. the M3 (e.g., Samsung has some custom LPDDR5X-10700). If Apple also cranks up the 2.5D connection between dies ("UltraFusion 2"), then there should be a substantive bandwidth jump from the M3 Ultra era. There is also a possible issue if they didn't order/reserve enough memory far enough in advance, before the AI hype train bought it out. Skipping the M4 Ultra means there's a good chance they were substantially planning ahead for the M5 Ultra's needs.
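To put a rough number on what faster LPDDR could mean, here is a small Python sketch of the standard peak-bandwidth formula (transfer rate times bus width). Heavy caveats: the 1024-bit bus and the 6400 MT/s baseline are my understanding of the M3 Ultra's configuration, and reusing that bus width for a future Ultra is purely an assumption for illustration. Nothing here is a known M5 Ultra spec.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: transfers per second x bytes per transfer."""
    return transfer_rate_mts * 1e6 * (bus_width_bits / 8) / 1e9


# ASSUMPTION: 1024-bit bus, carried over from the M3 Ultra; a future Ultra may differ.
BUS_WIDTH_BITS = 1024

memory_speeds = [
    ("LPDDR5-6400 (assumed M3 Ultra baseline)", 6400),
    ("LPDDR5X-10700 (the custom Samsung part mentioned above)", 10700),
]

baseline = peak_bandwidth_gbs(6400, BUS_WIDTH_BITS)
for label, rate in memory_speeds:
    bw = peak_bandwidth_gbs(rate, BUS_WIDTH_BITS)
    print(f"{label}: {bw:7.1f} GB/s  ({bw / baseline:.2f}x baseline)")
```

This is peak paper bandwidth only; what the GPU can actually pull through the die-to-die link and the SLC is a separate question.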

Oh, I agree it's also possible that the M5 Ultra will scale even better depending on what Apple does, and in my original post I noted the M5 Ultra could indeed do so. But yes, I highlighted the inverse more strongly: since we don't have M5 Ultras in front of us, I wanted to make sure it was understood that this was a prognostication based on several assumptions which could be violated by unknowns, and that the M5 Ultra may not reach this level (and yes, could be better).

Also, the pattern you noted with the M3 Ultra is actually repeated in the M4 line as well. I looked pretty closely at the binned/full Pro/Max using the 3DMark benchmarks (and Blender too, actually); sometimes it's seemingly related to bandwidth issues, other times less clear (well, one time anyway: one data point on the Steel Nomad Light benchmark makes no sense to me, but everything else, including the full Steel Nomad bench results, tracks okay). The clearest result was that the binned M4 Pro has the best bandwidth/TFLOPS ratio of the Pro/Max M4 lineup and the best scores per TFLOPS amongst those devices. Again, that's why I was being careful to make sure people understood this is just a rough estimation.

Good points. Though what about 3D wafer-on-wafer configurations? When will we see them, and could those enhance performance by allowing shorter communication paths? E.g., suppose a processing unit on a monolithic chip most often talks to other units that, because of other constraints, can't be located near it on the monolithic chip. With a wafer-on-wafer approach, if those other units could be positioned vertically above it, thus shortening the path length, could that enhance performance?

Of course, a big tradeoff with 3D designs is their reduced surface area and thus reduced heat dissipation. Then again, with shorter average path lengths, would thermal energy generation be reduced? I'm not sure about the relative contribution to thermals of electron flow between processing units vs. logic gate operation.

Separately, with 2.5D, the additional modularity might enable Apple to more easily offer Mac Studio/Pro variants with high GPU core counts.

The "Extreme" meme has always pragmatically meant more expensive. That could end up meaning more expensive subcomponent parts rather than more dies (Max-sized or otherwise). At some point, Apple would need to shift from "poor man's HBM" to "not quite as poor man's" HBM: more SLC (e.g. attached 3D) and/or faster LPDDR than the 'poor man's' version, but still less than conventional HBM.

Apple seems to be regularly soaking up as much bandwidth as gets added. But they are also unlikely to waste die area on boosting TFLOPS significantly higher than what they can actually use, just for some spec bragging rights (even if the CPU cores were not there, grossly outstripping the memory system would probably get passed over in favor of a more affordable die size). In terms of "keeping up with the Joneses", a lot of the doubt about steady improvements versus "competitor X"'s improvements seems unjustified.

It is indicative, though, that Apple's primary design criterion is not the extreme "lunatic fringe" market. The biggest 'bang for buck' is in the more affordable part of the range. They can get a reasonably good top-end model without obsessing about being 'king of the hill' on every benchmark. Make most of the apps that folks use faster, and end up with substantively better offerings each generation. It isn't about stalking a specific implementation from a competitor (a 'beat Nvidia x090 or bust' objective).

The 4090->5090 raster improvements below 4K were smaller (~1/2) than the improvements at 4K. Some of the gen-to-gen raster improvement comes just from the VRAM subsystem evolving to the next generation. GDDR and LPDDR don't move in 100% lock step (e.g., LPDDR6 recently passed its standards vote while GDDR7 passed last year), so the x090 is a goofy stalking horse for that reason also.

Good points. Though what about 3D wafer-on-wafer configurations? When will we see them, and could those enhance performance by allowing shorter communication paths?

Apple is already using wafer-on-wafer with UltraFusion.

Purely vertical wafer-on-wafer isn't 'free'. Stacking vertically brings thermal constraints. Additionally, the horizontal bridge bonding path isn't all that much longer (versus just dealing with an above-average-sized die; 'distant' parts of a very large die are 'far apart' also).

E.g., suppose a processing unit on a monolithic chip most often talks to other units that, because of other constraints, can't be located near it on the monolithic chip. With a wafer-on-wafer approach, if those other units could be positioned vertically above it, thus shortening the path length, could that enhance performance?

If both are 'intense' processing units, that likely does not work. Thermally it won't work out. Similarly, CPU cores stacked on top of each other have problems. Stacking SRAM cache layers on top of each other is different from stacking relatively high-thermal cores on top of each other. One of the constraints that kept them apart in the first place was thermal, and going vertical doesn't alleviate that constraint. And if you have a high number of CPU/GPU cores, the constraint keeping them apart is the high number itself. Even if you come up with a thermal mitigation for stacking 2 deep, that doesn't mean that 3, 4, etc. deep is going to work (mitigation is likely quite limited).

More vertical 3D wafer-on-wafer is likely going to be applied to stuff that is not shrinking as well (e.g. I/O and SRAM): take it off the die (which may get smaller) and reconnect. The other constraint that vertical doesn't remove is that future 'smaller' fab processes are likely more expensive also. The split is likely to be driven more by each function's affinity for a wafer process than by sub-unit to sub-unit coupling.

Of course, a big tradeoff with 3D designs is their reduced surface area and thus reduced heat dissipation. Then again, with shorter average path lengths, would thermal energy generation be reduced (not sure about the relative contribution of electron flow between processing units vs. logic gate operation to thermals)?

Going off-wafer is always going to cost more than staying on-chip. The new techniques are lowering the penalty, but it isn't going to zero. Closer to 'easier to tolerate' than zero.

Separately, with 2.5D, the additional modularity might enable Apple to more easily offer Mac Studio/Pro variants with high GPU core counts.

Apple already offers high GPU core counts ( relative to NPU/CPU core counts).

GPUs don't cobble together as easily as CPU cores do, in part due to the core count targets and additional latency constraints with things like display engines and other entanglements.

There are also real constraints that you aren't going to be able to get around. The logic board area for an MBP or Mac Studio with a Max (or Ultra) package is fixed. If 2.5D grows the aggregate package bigger than what the area budget permits, then it won't work.

Chopping a Pro- or Max-sized die up into smaller pieces would just get you a very slightly slower 'Max' once you put them back together (the more interconnect you use to patch it back together, the more incremental losses you'll get). [For example, the M1 Pro and M1 Max were high-overlap designs, with mostly just "more GPU/memory" tacked onto the Max.] Even if you tack on additional GPU or CPU cores without the other, you probably wouldn't get a higher GPU core count within the 2D surface area of an Ultra (which is a constraint for the Studio). There's a pretty good chance the [GPU + memory] chiplet chunk is larger than the [CPU + rest] chunk. More likely is enabling configurations where the GPU/CPU core ratio is higher: a package where GPU cores can go up and CPU cores stay constant.

A higher number of SoC SKUs isn't necessarily a win. If you end up with more chiplet die variants than you have now in monolithic die variants, the costs don't necessarily go down (for Apple or consumers). If you shift to chiplets and the die count goes up, that is an odd decomposition. There are a bunch of folks who project what Intel/AMD do onto what Apple should do: Intel has gobs of processor SKUs, so Apple needs to have gobs of processor SKUs. I think those thought tracks are suspect.

The M-series dies have a built-in SSD controller. Does Apple really need an A20 (marketing '2nm') fab process for an SSD controller? For USB? For PCIe? They don't have to wait for A20 to start decomposing I/O away from the bleeding-edge processes.

The odd part is, the one and only time Apple bandwidth was tested to its max, pardon the pun, on the Max is to my knowledge Andrei with the M1 Max and try as he might, he could not saturate it or even get close to doing so despite running both CPU and GPU workloads simultaneously. Now that was the M1 Max, I haven't gone back to see its compute to bandwidth ratio, and I haven't seen any similar test done by anyone since (and unfortunately all the old Anandtech articles are now completely gone, unless you have a local version or can find them on the Internet archive). However, bandwidth is the cleanest explanation for what I see*.The "Extreme" meme has always pragmatically meant more expensive. That could end up more expensive subcomponent parts rather than more dies (Max sized or otherwise). At some point, Apple would need to shift from "poor man's HBM" to"not quite as poor man's" HBM.More SLC (e.g. attached 3D) and/or faster LPDDR than the 'poor man's' version, but still less than conventional HBM.

Apple seems to be regularly soaking up as much bandwidth as gets added. But they are also likely not to waste die area on boosting TFLOPS significantly higher than what they can actually get, just for some spec bragging rights (even if the CPU cores were not there, grossly outstripping the memory system would probably be passed over for a more affordable die size). In terms of "keeping up with the Joneses", a lot of the doubt about Apple's steady improvements versus "competitor X"'s improvements seems unjustified.

It is indicative, though, that Apple's primary design criterion is not out on the extreme "lunatic fringe" of the market. The biggest 'bang for buck' is in the more affordable part of the range. They can get a reasonably good top-end model without obsessing about being 'king of the hill' on every benchmark. Make most of the apps that folks use faster, and end up with substantively better offerings each generation. It isn't about stalking a specific implementation from a competitor (a 'beat Nvidia x090 or bust' objective).

The 4090->5090 raster improvements below 4K were roughly half the size of the 4K improvements. Some of the gen-to-gen raster improvement comes simply from the VRAM subsystem evolving to the next generation. GDDR and LPDDR don't move in 100% lock step (e.g., LPDDR6 recently passed its standards vote while GDDR7 passed last year), so the x090 is a goofy stalking horse for that reason also.

*Again, except for that one niggling Steel Nomad Light data point where, for some reason, the full M4 Max lost performance points per TFLOPS despite having the same (slightly better, in fact) bandwidth per compute as the binned M4 Max, but didn't do so on any other benchmark - to be fair, I did not look at Blender - but still, multiple other 3DMark benchmarks showed a very clear relationship between bandwidth and compute. Basically, the binned Pro had the best ratio of bandwidth to compute and the best ratio of performance per compute, while every other device had roughly the same bandwidth per compute and the same performance per compute. It's possible that the SNL data point is just noise; I might've pulled these values from a single run rather than the median. When I get more time I'll have to double check. (EDIT: after a quick look, about half of the decrease in the full M4 Max relative to the binned does look like noise, but not all, so a 5% decrease in performance per TFLOPS, but only in SNL. All other tests show the expected relationship, i.e. the binned and full M4 Max show the same performance per TFLOPS since they have almost the same bandwidth per TFLOPS, with the full M4 Max being even slightly better.)

Ah heck, here's the data (apologies for the picture format and the split header/body; I can't type it all out right now and the chart is too wide anyway):

And Blender, where for some reason I did not do the 32-core score:

One other interesting thing is, of course, that a similar pattern emerges with Nvidia chips (no time to post it all). So it has occurred to me that at least some of the pattern I saw with the "dual-issue" design of the 3000 and 4000 series GPUs that I mentioned earlier was exacerbated by their lack of bandwidth per compute relative to the 2000 series of GPUs, which was partially but not totally improved by the 5000 series. That said, ChipsandCheese also mentioned that they saw a similar pattern and attributed it at least in part to core occupancy issues in their tests. They may also have been testing compute more directly, avoiding any memory system bottlenecks; I'm not sure, so this particular relationship could be slightly coincidental. However, even if so, modern Nvidia chips have pretty bad compute to bandwidth ratios relative both to Apple and to their own older Turing RTX 20XX cards, which had very nearly the same bandwidth to compute as Apple and much more similar performance to compute as well.
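For anyone who wants to redo this kind of comparison, here is a minimal sketch of the two ratios being discussed: bandwidth per TFLOPS and benchmark points per TFLOPS. The `Gpu` class and the three entries are hypothetical placeholders, not the measurements from the charts, so swap in real nominal TFLOPS, memory bandwidth, and median scores before reading anything into the output.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    """One device in the comparison (all field values below are placeholders)."""
    name: str
    tflops: float         # nominal FP32 TFLOPS
    bandwidth_gbs: float  # memory bandwidth in GB/s
    score: float          # benchmark score, e.g. a median over several runs

    @property
    def bandwidth_per_tflops(self) -> float:
        # GB/s of memory bandwidth available per nominal TFLOPS
        return self.bandwidth_gbs / self.tflops

    @property
    def score_per_tflops(self) -> float:
        # benchmark points delivered per nominal TFLOPS
        return self.score / self.tflops


# Hypothetical numbers for illustration only, NOT real measurements.
devices = [
    Gpu("device_a", tflops=4.0, bandwidth_gbs=120.0, score=2000.0),
    Gpu("device_b", tflops=8.0, bandwidth_gbs=240.0, score=4000.0),
    Gpu("device_c", tflops=8.0, bandwidth_gbs=320.0, score=4400.0),
]

# Sort by bandwidth per TFLOPS to see whether points per TFLOPS follows it.
for d in sorted(devices, key=lambda g: g.bandwidth_per_tflops):
    print(f"{d.name}: {d.bandwidth_per_tflops:.1f} GB/s per TFLOPS, "
          f"{d.score_per_tflops:.1f} pts per TFLOPS")
```

If points per TFLOPS rises and falls with GB/s per TFLOPS across devices, that is consistent with the bandwidth explanation, though it does not rule out other causes such as occupancy.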

One issue is that the M5 doubled FP16 compute. I haven't been tracking that, but if an engine does use FP16, I'm not sure whether that affects bandwidth per performance more than FP32 does: on one hand, FP16 compute doubled, which will be hard for the bandwidth increases to make up for; on the other, properly coded, the bandwidth needed for FP16 should be half that of FP32.
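A rough way to see how those two FP16 effects pull against each other is the idealized sketch below. It assumes a purely streaming workload with a fixed number of FLOPs per element and no cache reuse; the function name, the 4 TFLOPS figure, and the 8 FLOPs per element are all hypothetical values chosen for the arithmetic, not M5 specifications.

```python
# Storage size of one element, in bytes.
BYTES_PER_ELEMENT = {"fp32": 4, "fp16": 2}


def required_bandwidth_gbs(tflops: float, flops_per_element: float, dtype: str) -> float:
    """GB/s needed to keep the ALUs fed in an idealized streaming workload.

    Assumes every element is read from memory exactly once (no cache reuse).
    """
    elements_per_second = tflops * 1e12 / flops_per_element
    return elements_per_second * BYTES_PER_ELEMENT[dtype] / 1e9


# Hypothetical figures for illustration only.
fp32_tflops = 4.0
fp16_tflops = 2 * fp32_tflops  # the "doubled FP16 compute" case
flops_per_element = 8.0

fp32_need = required_bandwidth_gbs(fp32_tflops, flops_per_element, "fp32")
fp16_need = required_bandwidth_gbs(fp16_tflops, flops_per_element, "fp16")

print(f"FP32: {fp32_need:.0f} GB/s, FP16 at 2x rate: {fp16_need:.0f} GB/s")
```

In this simplified model the doubled rate and the halved element size cancel, so the bandwidth needed to stay fed is unchanged. Real engines mix precisions and reuse cached data, so treat this as a starting point only.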


Optimization takes time and money. It makes more sense to invest that for major platforms than for minor ones. On average, it's reasonable to expect that computer/console games will be better optimized for Nvidia/AMD hardware than for Apple hardware, and the other way around for mobile-first games.

For instance, over the past few years it's been found that AAA games that are written natively for both AS and NVIDIA tend to run better on NVIDIA when evaluated using comparable GPUs. That's likely because developers have a much longer history optimizing AAA games for NVIDIA.

Internaut

macrumors 65816

I don't see parity happening anytime soon, unless Apple introduces an interface for eGPUs. That said, the Apple Silicon setup isn't without its advantages, bearing in mind that every last byte of RAM is also GPU memory, which is great for running local LLMs. Unless Apple prioritizes games, I don't see the nature of the competition changing.

Wafer-on-wafer means vertical die stacking, and UltraFusion doesn't stack the two dies vertically. [As the name indicates: It's one die on (top of) another, not one die beside another.]

Apple is already using wafer on wafer with UltraFusion.


M4 pro only being 6 tflops is surprising

That is the binned version, but think of it this way: the binned Pro is only 60% bigger than the base, and the base is meant for the iPad Pro and 13" Air as much as, if not arguably more so than, the mini/MBP/15" Air. Apple likes to double the GPU each tier, which constrains what the binned Pro can reasonably be. Even if Apple leaves the relationship between Pro and Max the same, Apple would have to more than double the full Pro's core count over the base to change this. That would be nice, but so far Apple seems pretty set on their current structure.
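The tier arithmetic here can be checked with a quick sketch. It assumes the publicly listed M4-family GPU core counts (10 for the base, 16/20 for the Pro, 32/40 for the Max), which are not stated in the post itself, and the dictionary names are just illustrative.

```python
# Assumed GPU core counts for the M4 family (not taken from the post).
full_cores = {"M4": 10, "M4 Pro": 20, "M4 Max": 40}
binned_cores = {"M4 Pro": 16, "M4 Max": 32}

# How much bigger the binned Pro GPU is than the full base GPU.
binned_pro_vs_base = binned_cores["M4 Pro"] / full_cores["M4"] - 1.0

# Ratio between the full configuration of each tier and the one below it.
pro_over_base = full_cores["M4 Pro"] / full_cores["M4"]
max_over_pro = full_cores["M4 Max"] / full_cores["M4 Pro"]

print(f"binned Pro vs base: +{binned_pro_vs_base:.0%}")
print(f"full Pro / base: {pro_over_base:.1f}x, full Max / full Pro: {max_over_pro:.1f}x")
```

With a strict doubling per tier and the same binning fraction, the binned Pro stays at about 1.6x the base, which is why only a more-than-2x step from base to full Pro would move it.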

Edit: the base M6 could itself see a big-ish TFLOPS upgrade in the next generation with the new node of course


M4 pro only being 6 tflops is surprising

M5 is only around 5 TFLOPs nominally, yet it performs close to the

Edit: fixed my mistake comparing the M5 to 4060 - was looking at a wrong set of numbers, sorry. M5 is good, but not that good

