Starting with iOS 17, iPadOS 17, and macOS Sonoma, Apple is making Communication Safety available worldwide. The previously opt-in feature will now be turned on by default for children under the age of 13 who are signed in to their Apple ID and part of a Family Sharing group. Parents can turn it off in the Settings app under Screen Time.

Communication Safety first launched in the U.S. with iOS 15.2 in December 2021, and has since expanded to Australia, Belgium, Brazil, Canada, France, Germany, Italy, Japan, the Netherlands, New Zealand, South Korea, Spain, Sweden, and the U.K. With the software updates coming later this year, Apple is making the feature available globally.

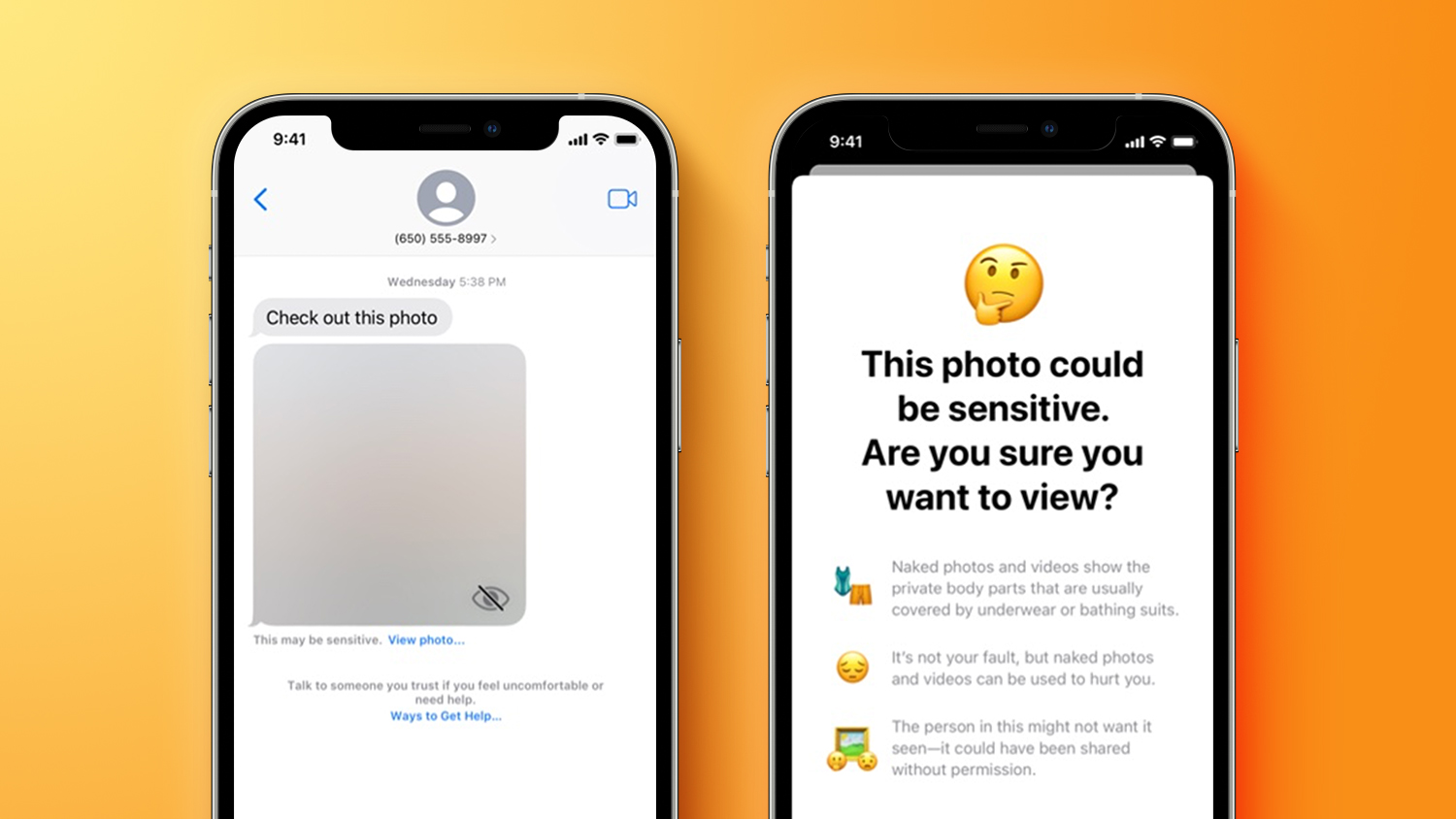

Communication Safety is designed to warn children when they receive or send photos that contain nudity in the Messages app. On iOS 17, iPadOS 17, and macOS Sonoma, Apple is expanding the feature to cover video content. It will also work for AirDrop content, FaceTime video messages, and Contact Posters in the Phone app.

When the feature is enabled, photos and videos containing nudity are automatically blurred in supported apps, and the child is warned before viewing the sensitive content. The warning also gives children ways to get help. Apple is also making a new API available that will allow developers to support Communication Safety in their App Store apps.

Apple says Communication Safety uses on-device processing to detect photos and videos containing nudity. As a result, neither Apple nor third parties can access the content, and end-to-end encryption in the Messages app is preserved.

iOS 17, iPadOS 17, and macOS Sonoma will be released later this year. The updates are currently available in beta for users with an Apple developer account.

Article Link: iOS 17 Expands Communication Safety Worldwide, Turned On by Default