Apple has announced a major Visual Intelligence update at WWDC 2025, enabling users to search and take action on anything displayed across their iPhone apps.

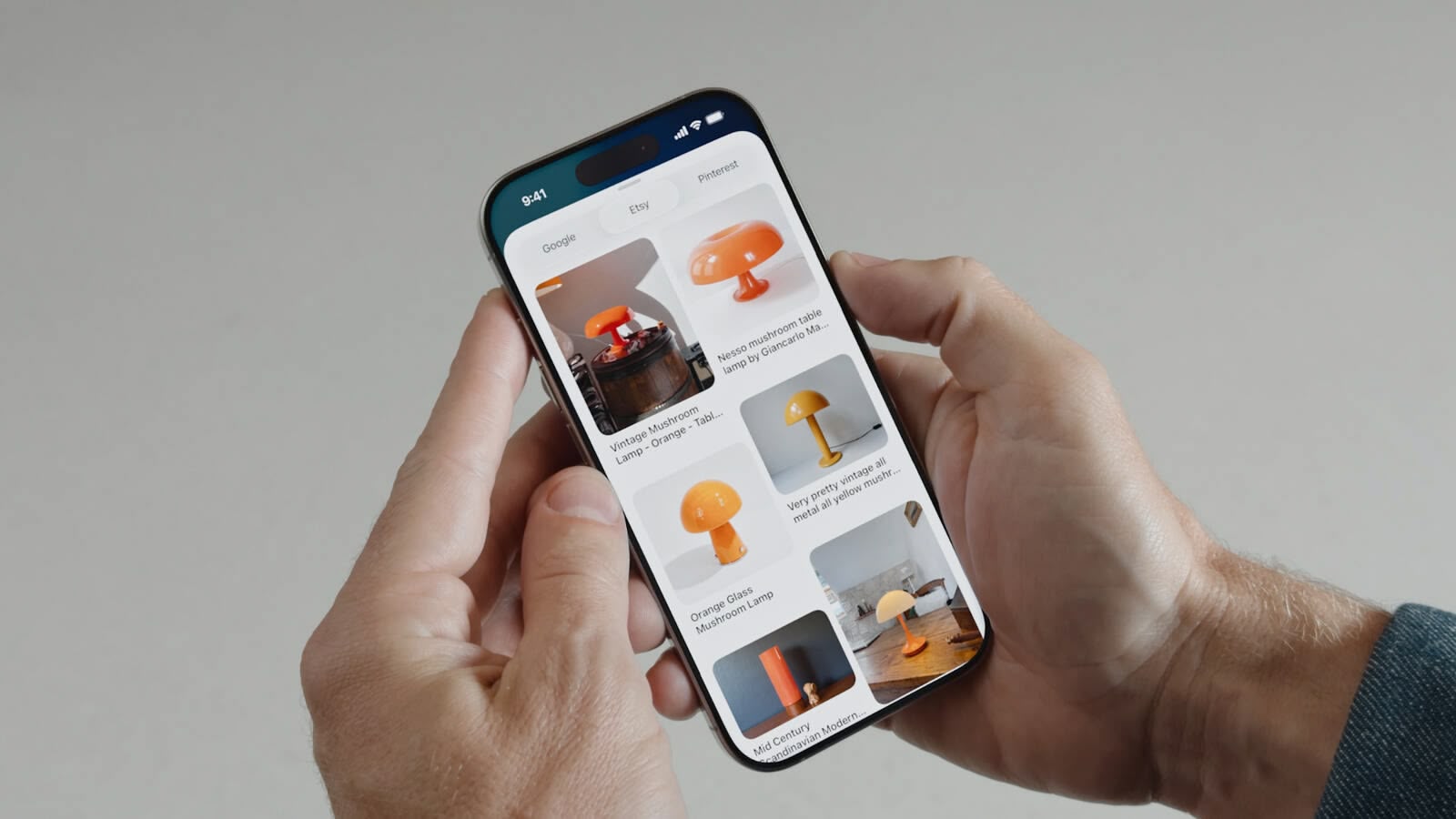

The feature, which previously worked only with the camera to identify real-world objects, now analyzes on-screen content. Users can ask ChatGPT questions about what they're viewing or search Google, Etsy, and other supported apps for similar items and products.

Visual Intelligence recognizes specific objects within apps: a user can highlight a lamp, for example, to find similar items online. The system also detects events on screen and suggests adding them to Calendar, automatically extracting dates, times, and locations.

Accessing the feature appears straightforward: users press the same button combination used for screenshots. They can then choose to save or share the screenshot, or explore further with Visual Intelligence.

The update effectively turns Visual Intelligence into a search and action tool that works across the entire iPhone experience. Apple says the feature builds on Apple Intelligence's on-device processing approach, maintaining user privacy while delivering contextual assistance across apps.

Article Link: iOS 26: Visual Intelligence Now Searches On-Screen Content