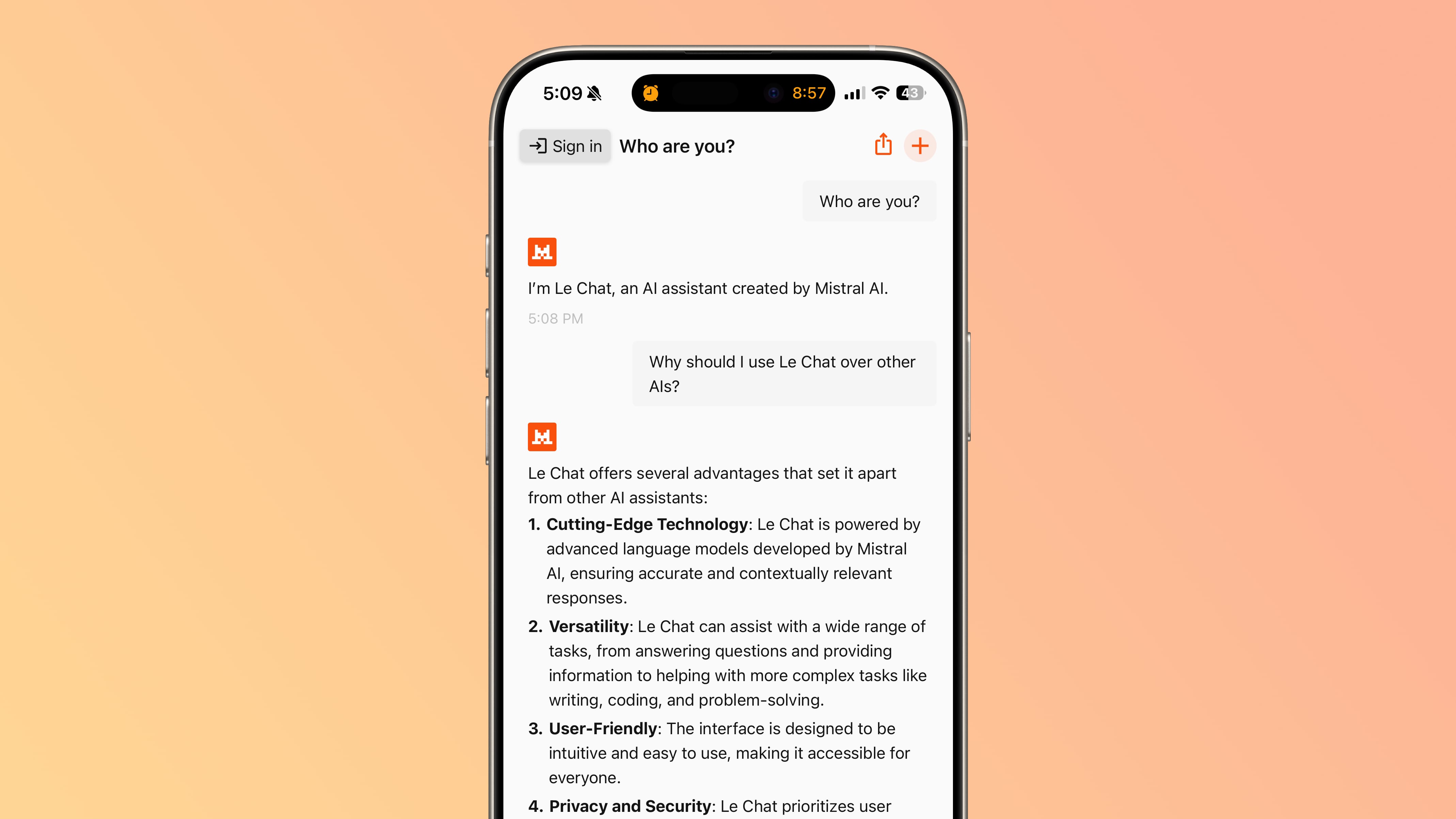

If you haven't had enough of AI apps, there's yet another to download and try out as of today. European company Mistral's Le Chat chatbot can now be used through a dedicated iOS app.

Mistral is a French AI company founded by engineers from Google and Meta. It creates its own open-weight large language models and aims to compete with OpenAI. Le Chat has been available on the web, but a dedicated app should help it compete more directly with ChatGPT, DeepSeek, Gemini, and other options.

Like many competing chatbots, Le Chat supports natural language conversations, real-time web search, document analysis, and image generation.

Le Chat is free to use, but access to the highest-performing models is limited. A $14.99-per-month Pro tier unlocks unlimited web browsing, extended access to news, and unlimited messaging.

Le Chat can be downloaded from the App Store for free.

Article Link: Mistral AI's 'Le Chat' Chatbot Now Available on iPhone