Apple is believed to be developing several technological innovations to mark the 20th anniversary of the iPhone, and one key technology it's considering is mobile High Bandwidth Memory (HBM), according to a report from ETNews.

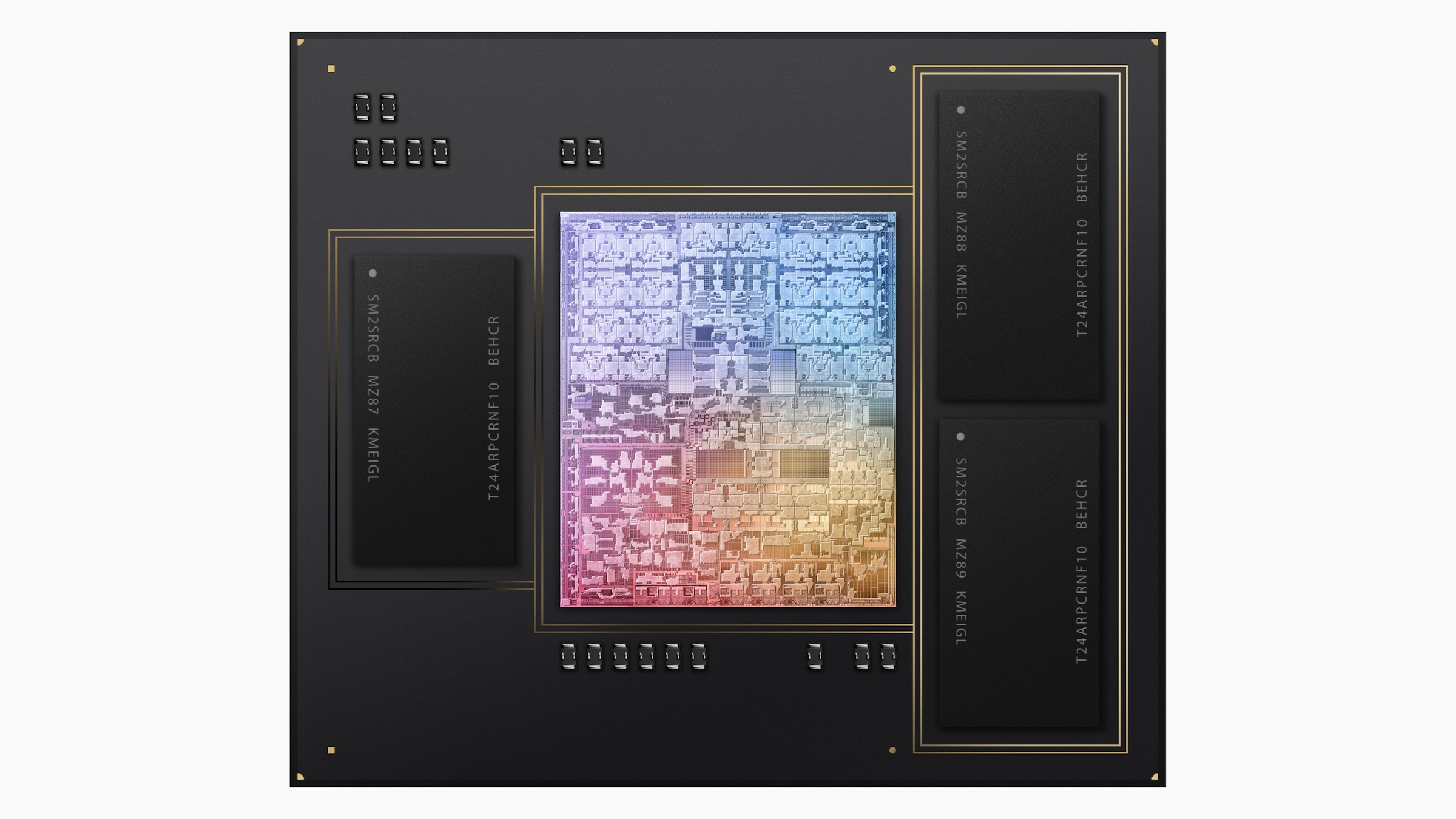

HBM is a type of DRAM that stacks memory chips vertically and connects them via tiny vertical interconnects called Through-Silicon Vias (TSVs) to dramatically boost signal transmission speeds. It's primarily used in AI servers today, and is often referred to as AI memory due to its ability to support AI processing alongside GPUs.

Mobile HBM is what the term suggests – a variant of the technology for mobile devices that is designed to deliver very high data throughput while minimizing power consumption and the physical footprint of the RAM dies. Apple is looking to enhance on-device AI capabilities, and ETNews reports that connecting mobile HBM to the iPhone's GPU is considered a strong candidate for achieving this goal.

The technology could be key for running massive AI models on-device, such as large language model inference or advanced vision tasks, without draining the battery or increasing latency.

The report indicates that Apple may have already discussed its plans with major memory suppliers like Samsung Electronics and SK hynix, both of which are developing their own versions of mobile HBM.

Samsung is reportedly using a packaging approach called VCS (Vertical Cu-post Stack), while SK hynix is working on a method called VFO (Vertical wire Fan-Out). Both companies aim for mass production sometime after 2026.

As always, though, there are manufacturing challenges. Mobile HBM is a lot more expensive to manufacture than current LPDDR memory. It could also face thermal constraints in slim devices like iPhones, and the 3D stacking and TSVs require highly sophisticated packaging and yield management.

If Apple does adopt this technology for its 2027 iPhone lineup, it would be yet another example of the company pushing the envelope for its 20th anniversary iPhone, which is rumored to feature a completely bezel-less display that curves around all four edges of the device.
