
Report: 2027 iPhones Could Adopt Advanced AI Memory Technology

- Thread starter MacRumors



AI-memory - really?! 🤣

This is simply FASTER memory, as usual. That AI improves with memory speed doesn't make this "AI-"memory! Every memory driven technology will advance with this! This is just putting the AI-sticker on normal technology progress.

Faster memory is and was always better, that is nothing new. Remember when your video cards had memory chips at least twice/triple/quadruple as fast as the memory in the computer housing that same video card? Now the main memory has that same technology. It's always the same: faster = better in every use case regarding memory!

It's AI in the sense that while there have been use-cases before, the thing that has really driven the adoption is AI workloads.

Pretty sure that's not what Apple has in mind for this...

Just use any of the third party apps.


The DRAM market is just continuing to fragment. Video is now GDDR6, main memory is DDR5 and MRDIMM. HBM utilising TSV has taken off with AI, and now that's migrating to consumer products...

Faster is not always better. It depends on the use-case. There are always tradeoffs. In the case of HBM, all that bandwidth uses lots of power, even in the mobile variant... maybe this will be aimed at a pro device.

Nothing is exciting about this, we want less AI on our phones, not more.

This is exciting as the memory bandwidth is the bottleneck today!

Wow!!! That last line is very disturbing. A display that curves around all four edges of the device is a monumentally stupid idea. Let’s hope it doesn’t come to pass.

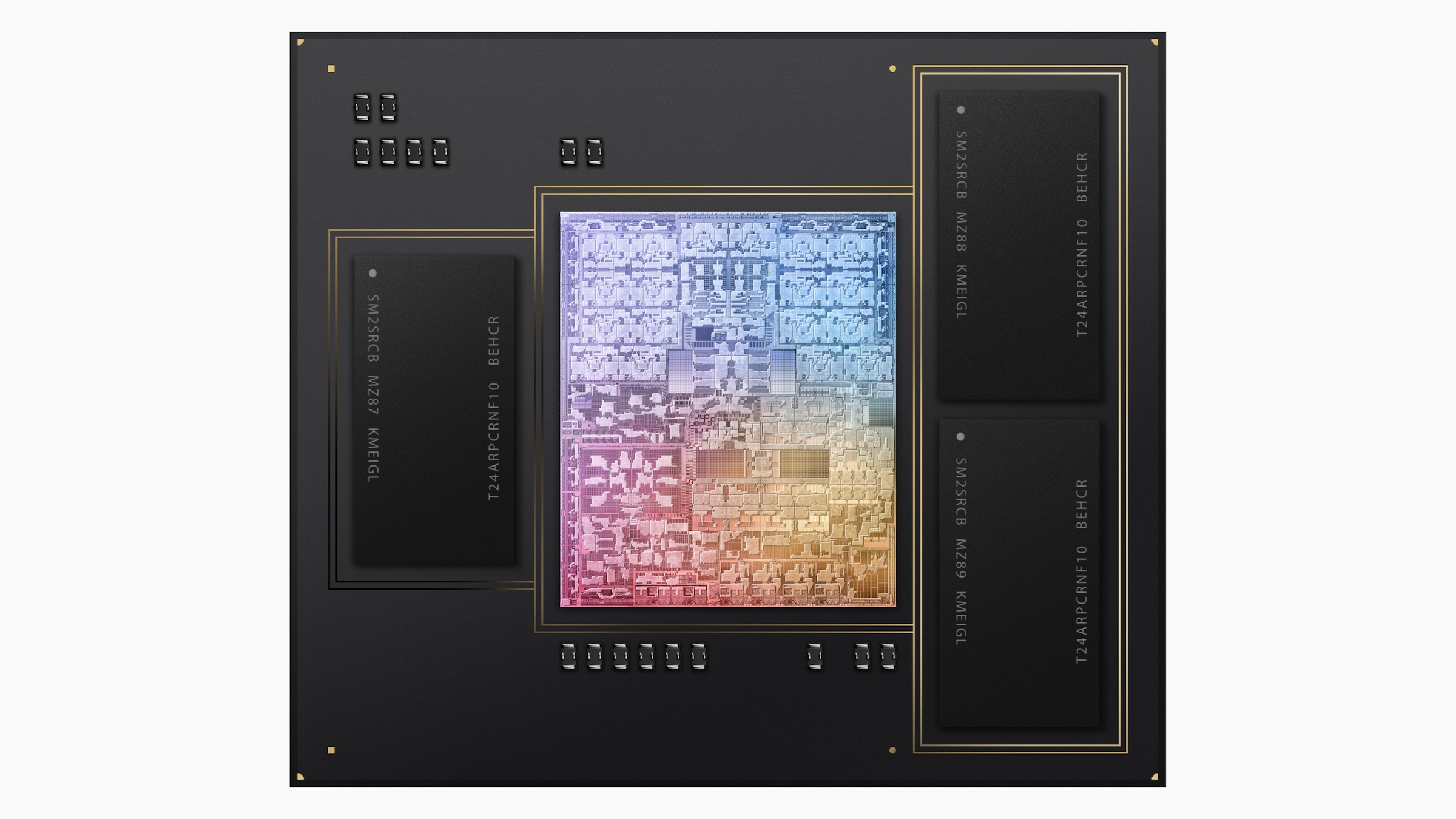

Apple is believed to be developing several technological innovations to mark the 20th anniversary of the iPhone, and one key technology it's considering is Mobile High Bandwidth Memory (HBM), according to a report from ETNews.

HBM is a type of DRAM that stacks memory chips vertically and connects them via tiny vertical interconnects called Through-Silicon Vias (TSVs) to dramatically boost signal transmission speeds. It's primarily used in AI servers today, and is often referred to as AI memory due to its ability to support AI processing alongside GPUs.

Mobile HBM is what the term suggests – a variant of the technology for mobile devices that is designed to deliver very high data throughput while minimizing power consumption and the physical footprint of the RAM dies. Apple is looking to enhance on-device AI capabilities, and ETNews reports that connecting mobile HBM to the iPhone's GPU units is being considered as a strong candidate for achieving this goal.

The technology could be key for running massive AI models on-device, such as for large language model inference or advanced vision tasks, without draining battery or increasing latency.

The report indicates that Apple may have already discussed its plans with major memory suppliers like Samsung Electronics and SK hynix, both of which are developing their own versions of mobile HBM.

Samsung is reportedly using a packaging approach called VCS (Vertical Cu-post Stack), while SK hynix is working on a method called VFO (Vertical wire Fan-Out). Both companies aim for mass production sometime after 2026.

As always though, there are manufacturing challenges. Mobile HBM is a lot more expensive to manufacture than current LPDDR memory. It could also face thermal constraints in slim devices like iPhones, and the 3D stacking and TSVs require highly sophisticated packaging and yield management.

If Apple does adopt this technology for its 2027 iPhone lineup, it would be yet another example of the company pushing the envelope for its 20th anniversary iPhone, which is rumored to feature a completely bezel-less display that curves around all four edges of the device.


HBM existed for many years before modern AI; the R9 Fury had it. If it's referred to as AI memory at all in the industry (which I'm in, and I've never heard it called that), it's just catching the trend lol.

Also more interested in HBM on Macs if they're expanding its use. (Some people think Macs already have it, but that's a typographical confusion: it's just LPDDR. Apple said it's high-bandwidth memory, not High Bandwidth Memory.)

HBM is just about as much about a 'wider memory path' as it is about stacked dies. Lots of DDR and LPDDR packages are stacked memory dies. The vias in HBM are there to get 'wider': they make more paths viable in a limited amount of space.
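To put rough numbers on the 'wider' point, here is a minimal sketch, assuming theoretical peak bandwidth is simply bus width times transfer rate. The two configurations are illustrative assumptions (a 64-bit bus at 6400 MT/s, roughly LPDDR5-class, and a 1024-bit bus at 2000 MT/s, roughly one HBM2-class stack). They are not figures from the report or from Apple, and the function name is hypothetical.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Theoretical peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second / 1e9


# Assumed, illustrative configurations (not taken from the article):
narrow_fast = peak_bandwidth_gb_s(64, 6.4e9)    # narrow bus, high per-pin rate
wide_slow = peak_bandwidth_gb_s(1024, 2.0e9)    # wide bus, lower per-pin rate

print(f"narrow/fast: {narrow_fast:.1f} GB/s")
print(f"wide/slow:   {wide_slow:.1f} GB/s")
```

Under these assumptions the wide bus comes out well ahead even though each pin runs slower. That is the sense in which the extra paths matter more than raw clock speed.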

Apple's implementation of memory on M-series is closer to "Poor Man's HBM" than just plain LPDDR. Pragmatically, what Apple does is wider than most LPDDR implementations. And the memory packages are semicustom. Apple throws more LPDDR memory controllers at memory than most other CPU vendors do to get the 'wider' effect.

This report possibly means that it is still just LPDDR as the base technology, but the die stacking might get a bit less proprietary. Same LPDDR standard widths, but with the channels in the die stack routed more through the 'middle' than 'around the edges'. Apple is already stacking RAM dies; that isn't new or "AI".

If this gets more standardized (more than just Apple or 2-3 vendors buying it), then the economies of scale will push the price down a bit. 'AI' is mainly being spun around HBM here because currently 'AI' is synonymous with "spend money like a drunken sailor on leave". R9 Fury would have done better if performance/$ didn't mean as much when it was released.

You're not far off though. For all Apple's aspirations, the other day I was in the shower listening to music via HomePod. I wanted to skip a song but my watch was wet so I couldn't press the skip button, so I asked Siri to skip to the next song. She couldn't do it!

Does this mean that Siri will fail faster?

(I'm mostly kidding... maybe)
