At its annual MAX conference, Adobe today provided insight into its plans for generative AI technology in Photoshop, Illustrator, and other popular design apps.

Adobe has iterated on Firefly, its AI image generation model, with the Firefly Image 2 Model. Improved Text to Image capabilities are available in the Firefly web app, and a Generative Match option lets users generate content in styles they specify. Photo Settings allows for photography-style image adjustments, and Prompt Guidance helps users refine their prompts for better results.

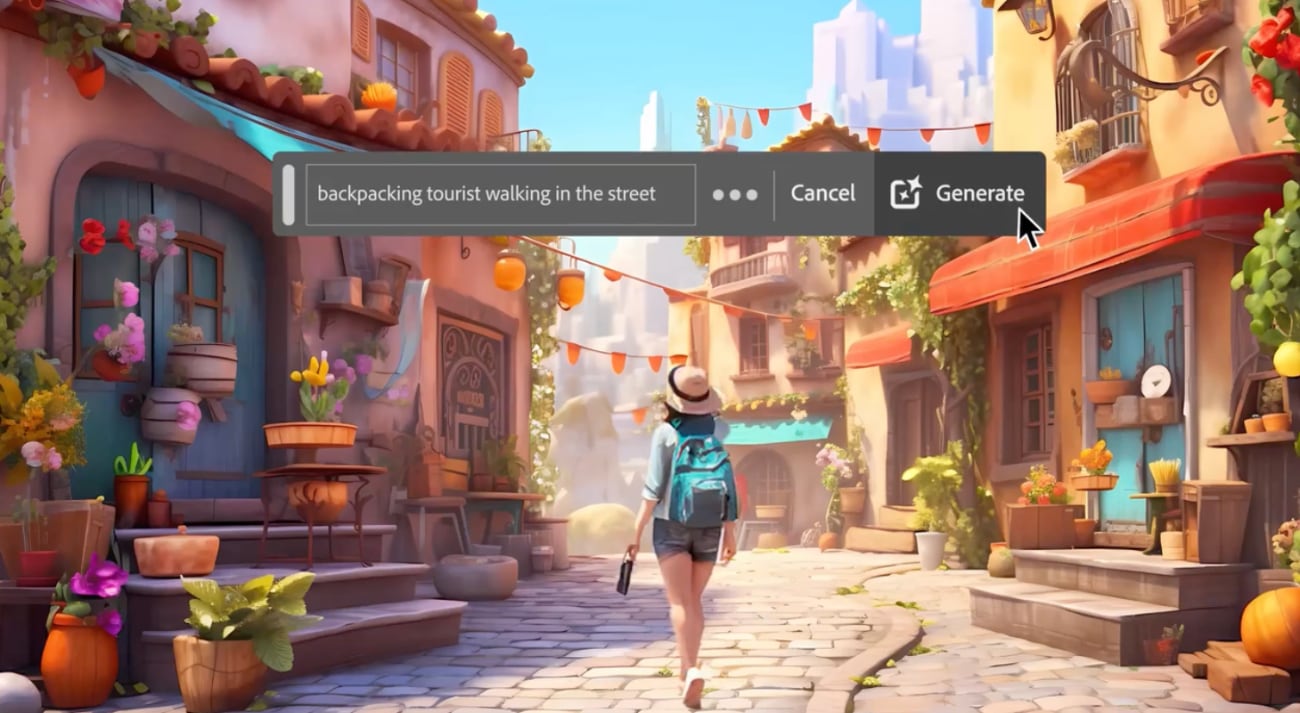

As for quality, Firefly Image 2 renders better skin, hair, eyes, hands, and body structure, and it offers better colors and improved dynamic range. The generative AI model is used for Generative Fill in Adobe Photoshop, allowing users to add, expand, and remove content with text prompts.

There's now a Firefly Vector Model, which Adobe says is the world's first generative AI model for creating vector graphics. Integrated into Adobe Illustrator, the Firefly Vector Model can be used to create all manner of vector graphics, including logos, website designs, and product packaging.

According to Adobe, the Firefly Vector Model can create "human quality" vector and pattern outputs with a Text to Vector Graphic feature. Generated graphics are organized into groups and are fully editable, with options to match an artboard's existing style.

Adobe Express also has a new Firefly Design Model for generating templates for social media posts and marketing assets. The Firefly Design Model can generate templates in popular aspect ratios, and those templates are editable in Express.

More information about Adobe's new AI features can be found on the Adobe website.

Article Link: Adobe Highlights New Generative AI Features for Photoshop and More