Anthropic announced today that it is changing its Consumer Terms and Privacy Policy, with plans to train its AI chatbot Claude with user data.

New users will be able to opt out at signup. Existing users will receive a popup that allows them to opt out of Anthropic using their data for AI training purposes.

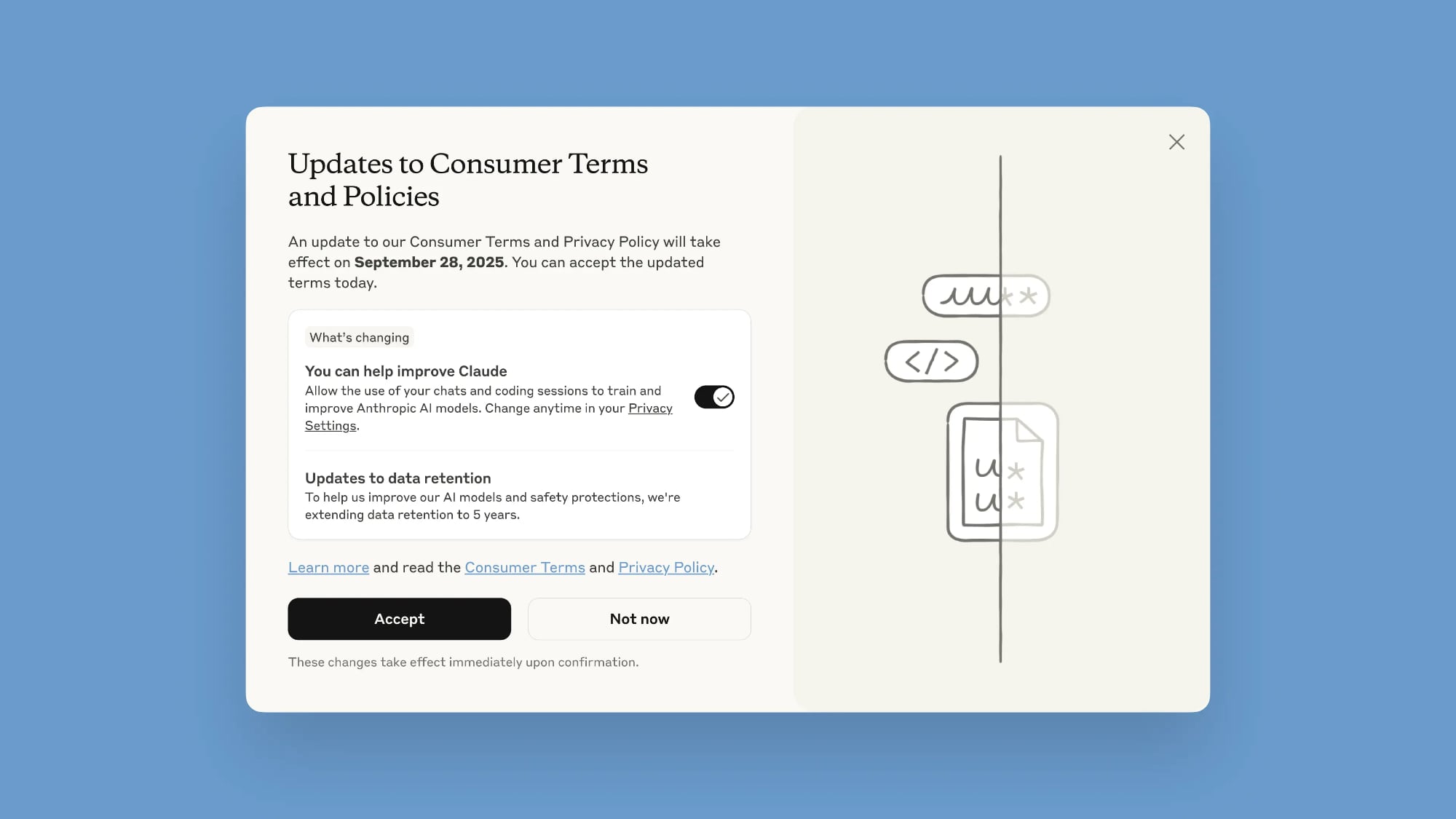

The popup is labeled "Updates to Consumer Terms and Policies." When it appears, switching off the "You can help improve Claude" toggle prevents Anthropic from using your chats for training. Choosing to accept the policy now will allow all new or resumed chats to be used by Anthropic. Users will need to opt in or opt out by September 28, 2025, to continue using Claude.

Opting out can also be done by going to Claude's Settings, selecting the Privacy option, and toggling off "Help improve Claude."

Anthropic says that the new training policy will allow it to deliver "even more capable, useful AI models" and strengthen safeguards against harmful usage like scams and abuse. The updated terms apply to all users on Claude Free, Pro, and Max plans, but not to services under commercial terms like Claude for Work or Claude for Education.

In addition to using chat transcripts to train Claude, Anthropic is extending data retention to five years. If you opt in to allowing Claude to be trained on your data, Anthropic will keep your information for a five-year period. Deleted conversations will not be used for future model training, and for those who do not opt in to sharing data for training, Anthropic will continue keeping information for 30 days as it does now.

Anthropic says that a "combination of tools and automated processes" will be used to filter sensitive data, with no information provided to third parties.

Prior to today, Anthropic did not use conversations and data from users to train or improve Claude, unless users submitted feedback.
