Apple is today launching a new Apple Security Research Device Program, which is designed to provide security researchers with special iPhones dedicated to security research. The devices come with unique code execution and containment policies.

Apple last year said it would be providing security researchers with access to "special" iPhones that would make it easier for them to find security vulnerabilities and weaknesses to make iOS devices more secure, which appears to be the program that's rolling out now.

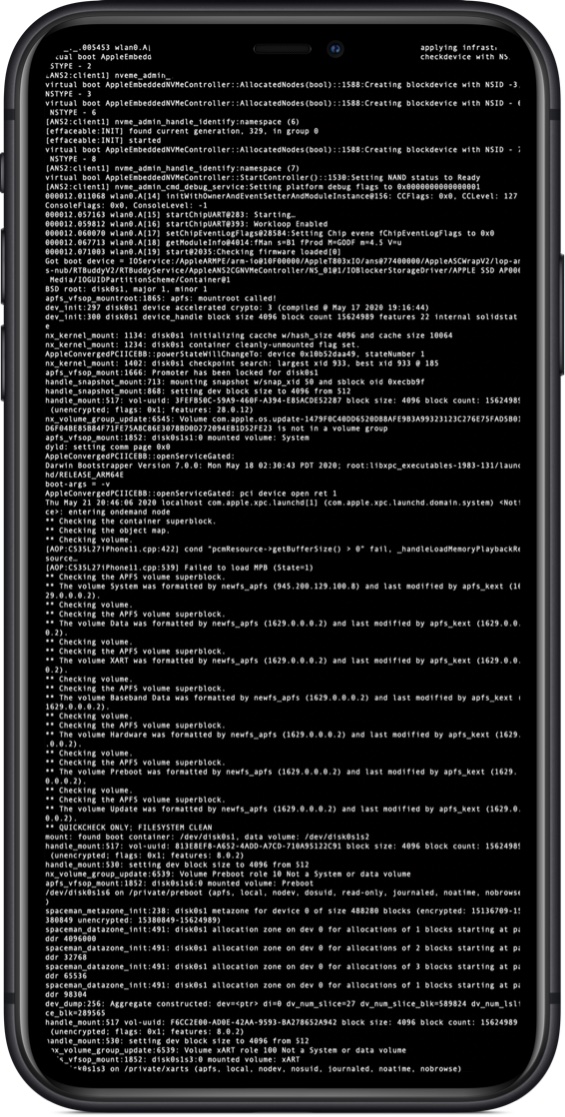

The iPhones that Apple is providing to security researchers are less locked down than consumer devices and will make it easier to find serious security vulnerabilities.

Apple says the Security Research Device (SRD) offers shell access and lets researchers run any tools and choose their entitlements, but otherwise it behaves much like a standard iPhone. SRDs are provided to security researchers on a 12-month renewable basis and remain Apple property. Bugs discovered with the SRD must be "promptly" reported to Apple or a relevant third party.

Apple is accepting applications for the Security Research Device Program. Requirements include being in the Apple Developer Program and having a track record of finding security issues on Apple platforms.

If you use the SRD to find, test, validate, verify, or confirm a vulnerability, you must promptly report it to Apple and, if the bug is in third-party code, to the appropriate third party. If you didn't use the SRD for any aspect of your work with a vulnerability, Apple strongly encourages you to report it (and rewards such reports through the Apple Security Bounty), but you are not required to do so.

If you report a vulnerability affecting Apple products, Apple will provide you with a publication date (usually the date on which Apple releases the update to resolve the issue). Apple will work in good faith to resolve each vulnerability as soon as practical. Until the publication date, you cannot discuss the vulnerability with others.

Those who participate in the program will have access to extensive documentation and a dedicated forum with Apple engineers. Apple told TechCrunch that it wants the program to be a collaboration.

The Security Research Device Program will run alongside the bug bounty program. Hackers can file bug reports with Apple and receive payouts of up to $1 million, with bonuses possible for the worst vulnerabilities.
