Apple today previewed a wide range of new accessibility features for the iPhone, iPad, and Mac that are set to arrive later this year.

Apple says that the "new software features for cognitive, speech, and vision accessibility are coming later this year," which strongly suggests that they will be part of iOS 17, iPadOS 17, and macOS 14. The new operating systems are expected to be previewed at WWDC in early June before launching in the fall.

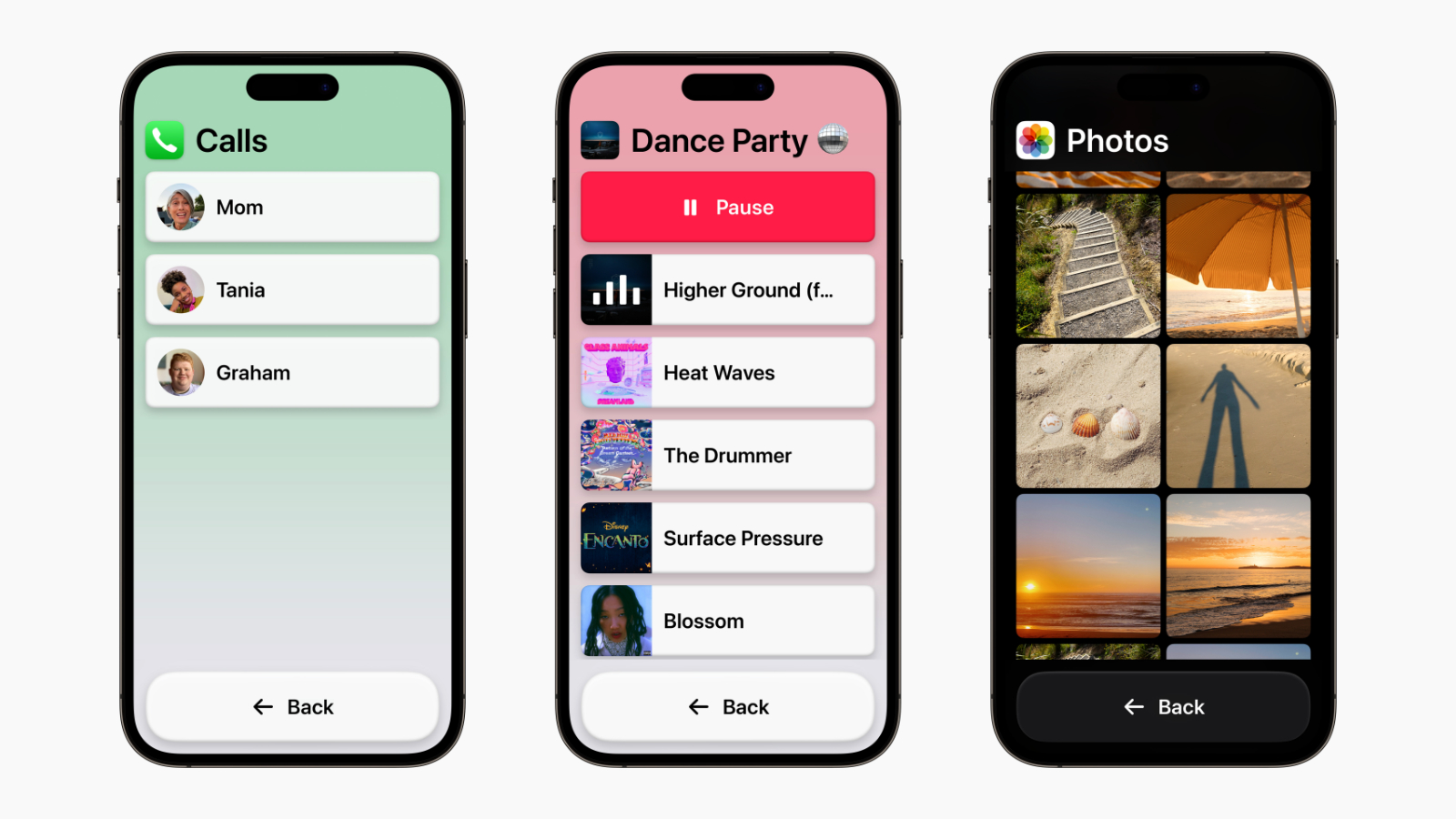

Assistive Access

Assistive Access distills iPhone and iPad apps and experiences to their core features. The mode includes a customized experience for Phone and FaceTime, which are combined into a single Calls app, as well as for Messages, Camera, Photos, and Music. The feature offers a simplified interface with high-contrast buttons and large text labels, as well as tools to help tailor the experience. For example, users can choose between a more visual, grid-based layout for their Home Screen and apps, or a row-based layout for users who prefer text.

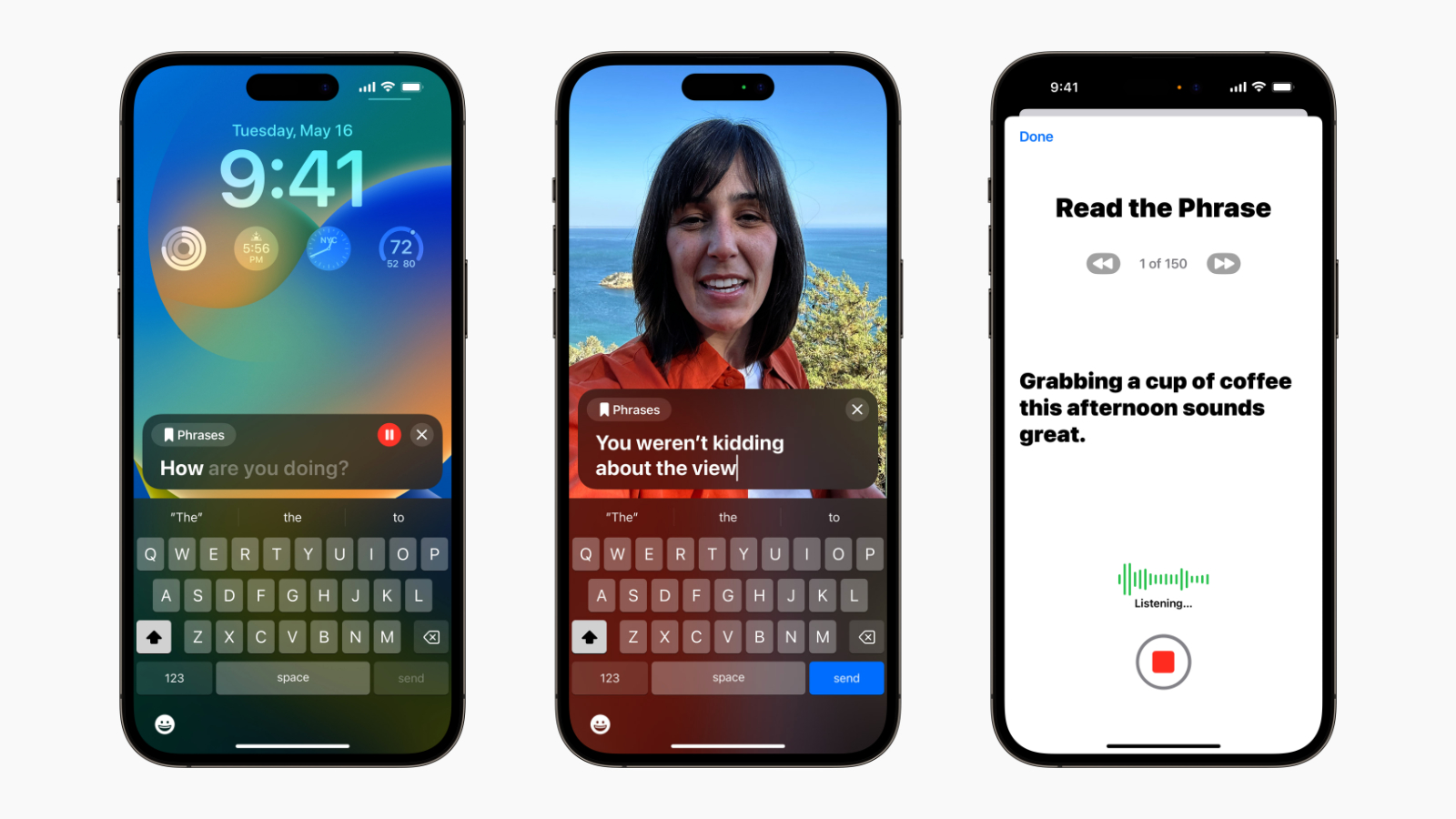

Live Speech and Personal Voice Advance Speech Accessibility

Live Speech on the iPhone, iPad, and Mac allows users to type what they want to say and have it spoken out loud during phone and FaceTime calls, as well as in-person conversations. Users can also save commonly used phrases to chime into conversations quickly.

Users at risk of losing their ability to speak, such as those with a recent diagnosis of amyotrophic lateral sclerosis (ALS), can use Personal Voice to create a digital voice that sounds like them. Users simply need to read along with a randomized set of text prompts to record 15 minutes of audio on an iPhone or iPad. The feature uses on-device machine learning to keep users' information secure and private, and integrates with Live Speech so users can speak with their Personal Voice.

Detection Mode in Magnifier and Point and Speak

In the Magnifier app, Point and Speak helps users interact with physical objects that have several text labels. For example, while using a household appliance, Point and Speak combines input from the Camera app, the LiDAR Scanner, and on-device machine learning to announce the text on buttons as users move their finger across the keypad.

Point and Speak is built into the Magnifier app on iPhone and iPad, works with VoiceOver, and can be used with other Magnifier features such as People Detection, Door Detection, and Image Descriptions to help users navigate their physical environment more effectively.

Other Features

- Deaf or hard-of-hearing users can pair Made for iPhone hearing devices directly to a Mac with specific customization options.

- Voice Control gains phonetic suggestions for text editing so users who type with their voice can choose the right word out of several that might sound similar, like "do," "due," and "dew."

- Voice Control Guide helps users learn tips and tricks about using voice commands as an alternative to touch and typing.

- Switch Control gains an option to turn any switch into a virtual video game controller.

- Text Size is now easier to adjust across Mac apps, including Finder, Messages, Mail, Calendar, and Notes.

- Users who are sensitive to rapid animations can automatically pause images with moving elements, such as GIFs, in Messages and Safari.

- Users can customize the speed at which Siri speaks to them, with options ranging from 0.8x to 2x.

- Shortcuts gains a new "Remember This" action, helping users with cognitive disabilities create a visual diary in the Notes app.

Article Link: Apple Previews iOS 17 Accessibility Features Ahead of WWDC
