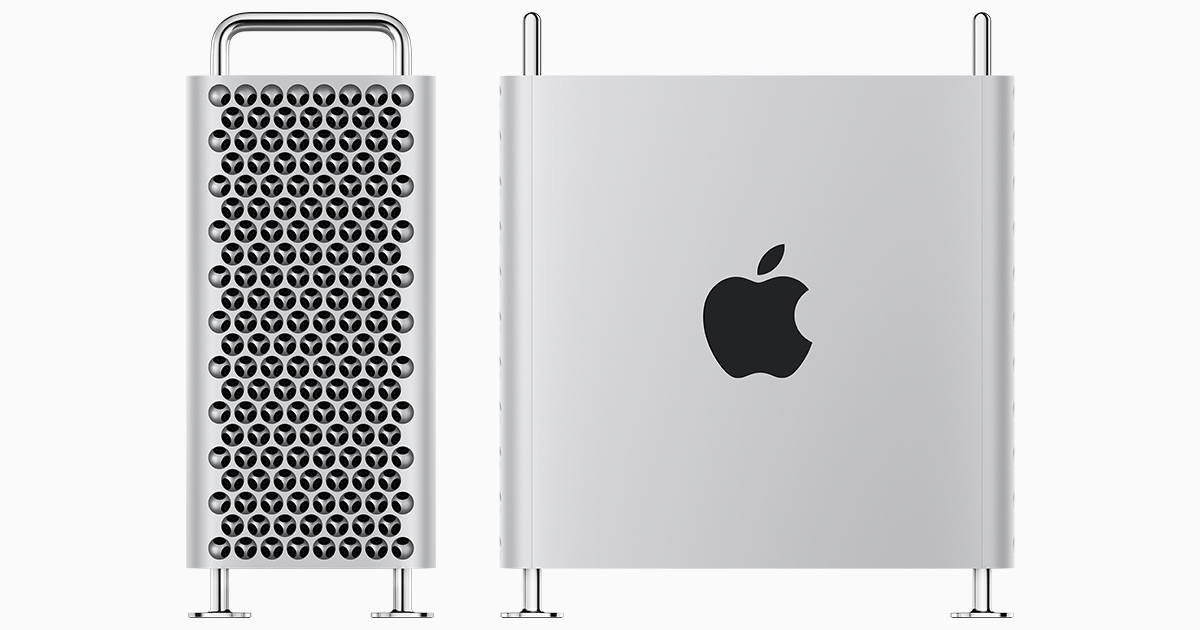

Selling a big tower computer with decreased GPU performance compared to the 2019 model, and no ability to upgrade the GPU despite having expansion slots and lots of empty space? That just seems absurd.

The Intel Mac Pro with W6900X AMD GPU that they’ll sell you new today in 2023 must continue to be supported into many future generations of MacOS drivers. Is it so unthinkable that they’d also include AMD driver support for Apple Silicon MacOS as well as Intel MacOS?

There is a software issue that you are not really covering. First, Apple has sold the M-series as a way to run some designated iPhone/iPadOS apps natively with little to no changes. So what happens when a non-universal app that has no code to handle AMD GPU details, and loads of implicit assumptions about the universality of unified memory, hits this AMD GPU? What if the user starts a native iPhone app on a screen connected to an Apple GPU and then, after it is running, drags it to a screen powered by an AMD GPU? What happens? (The app refuses to go, violating user interface guidelines? Implodes? Or what?)

Second, a standard off-the-shelf AMD card presumes there is a UEFI layer to talk to. There is no such layer at the raw boot level on an Apple Silicon Mac. Apple doesn't do UEFI. This is not at all like the Intel Mac Pro, where the T2 chip just validated the UEFI firmware and handed it to the Intel CPU to execute. UEFI has been banished here. The card isn't going to work in the boot environment. Possibly it could be hacked into running after the system boots with the iGPU.

The secure boot login screen of the Mac, though, requires an Apple iGPU. So if the drive is encrypted, what do you get if you don't have a monitor hooked to the iGPU?

There are work-arounds for most of these issues. Trap iPhone apps before launch and abort them if an AMD GPU is present and might cause problems. Use a custom boot ROM just for Apple-only GPU cards (which was more problematic in the past than most folks want to admit: lots of ROM copying without paying, which leads to a corrosive funding structure for the proprietary work over the long term).

Linux and Windows virtual machines on augmented versions of Apple's hypervisor framework do have access to a virtual UEFI implementation and don't have any native phone app assumptions. If there was IOMMU pass-thru to the VM, with the card assigned exclusively to that environment, those would work more easily.

Similarly, if you just used the GPU card as a "compute" GPGPU accelerator, you dodge the need to be present at boot and any GUI monitor work.

But the expectation is that it will work exactly like it did on an old-school UEFI machine because it is a general PC box with slots. It isn't a general PC. The legacy, much bigger ecosystem that Apple Silicon is hooked to is iOS/iPadOS, which have zero third-party display GPUs. It is not all that absurd that the Mac, riding on that baseline infrastructure, would pick up the same constraint. When the Mac was riding on Intel, it got lots of ancient BIOS and UEFI quirks that were there for the much larger ecosystem.

The other hidden presumption here is that more future discrete GPUs from AMD would get covered with drivers. (It magically happens by default on the general PC market side, but it is not necessarily going to happen on the Mac side.)

As Apple's iGPUs cover more and more of the performance range of AMD's GPU product line, what is going to be the large motivator to cover the parts that Apple already covers (even if you extend that out to eGPU deployments)?

This first AS Mac Pro is probably going to have a lot of compromises. It likely won’t be able to support as many lanes of PCIe as the Intel Xeon can. (To be fair, we haven’t seen the M2 Max yet, which will be the foundation for the M2 Ultra presumably.)

...

I have doubts the M2 Max in the laptop is going to be the sole basic building block for the M2 Ultra. Especially if the Ultra is picking up substantially enhanced PCI-e lane provisioning. Pretty good chance the PCI-e provisioning is off on another chiplet that just won't be present at all in the laptop deployment.

Admittedly I don’t understand the engineering of the CPU / SoC architecture, and some others here clearly do. If they do continue to offer an Intel Mac Pro along with an M2, then maybe only the Intel model gets the MPX GPUs. Maybe they do hand-wavy graphics benchmarks based on the Intel config and also talk about Apple Silicon benefits in the same breath.

Apple wouldn't have to "hand-wave" all that much. The M1 Max and Ultra beat the W5700 in the Mac Pro. They also performed better than the 16-core Mac Pro 2019. If the configurations of the MP 2019 stay 100% still (no new CPUs or GPUs), then the M2 Ultra will still beat those. Those two are the most common CPU/GPU components bought from Apple. If the M2 Ultra gets decently close to the W6800, they could add that to the "beat that" list also.

They probably will just stay away from any notion of being an Nvidia 4000 series or AMD 7900 "killer". That is more ducking the issue than "hand waving".

But still. Gurman said graphics. GRAPHICS!

*(Really unlikely he meant M.2 PCIe slots for SSDs and graphics cards, as Apple has never used M.2. They’ve put SSD blades on a variety of proprietary slots but never for any other purpose. They’ve used mini PCI slots for iMac GPUs, AirPort and bluetooth cards a long time ago, but not in many years. And space is hardly an issue in a Mac Pro tower to necessitate such tiny slots for expansion cards.)

Apple's new native boot environment has support for generic NVMe SSDs, so why should Apple pretend that M.2 devices do not commonly exist? In 2023, the number of user workstation motherboards in the general market that have zero M.2 slots on them is about the same as the number of boards that have zero SATA sockets on them. In 2019, Apple put a SATA socket on the motherboard. Three to four years later, M.2 is at about the same ubiquity.

This is the one that actually seems more appropriately labeled absurd. The firmware support for this is already present. OS support is present and working. It is fully enabled out of the box if you put an adapter in a PCI slot. But it is the end of the world if the connector is put on the logic board directly?

If the new system has a relatively puny PCI-e backhaul that is already excessively oversubscribed, then yeah, leaving M.2 off would make sense. But if they do any decent job of providing decent overall system backhaul on the logic board, this is kind of loopy. APFS is mainly about getting more folks onto SSDs, and leaving M.2 off would block the path to more SSD usage.