Maybe the author used AI to summarize the news from the AWS re:Invent conference…

I read that as a typo for “AWS and Apple”


Apple Uses Amazon's Custom AI Chips for Search Services

- Thread starter MacRumors



Go Amazon, ask for 30% of every dollar a$$le earns thanks to your processors!

Apple uses custom Inferentia and Graviton artificial intelligence chips from Amazon Web Services for search services, Apple machine learning and AI director Benoit Dupin said today at the AWS re:Invent conference (via CNBC).

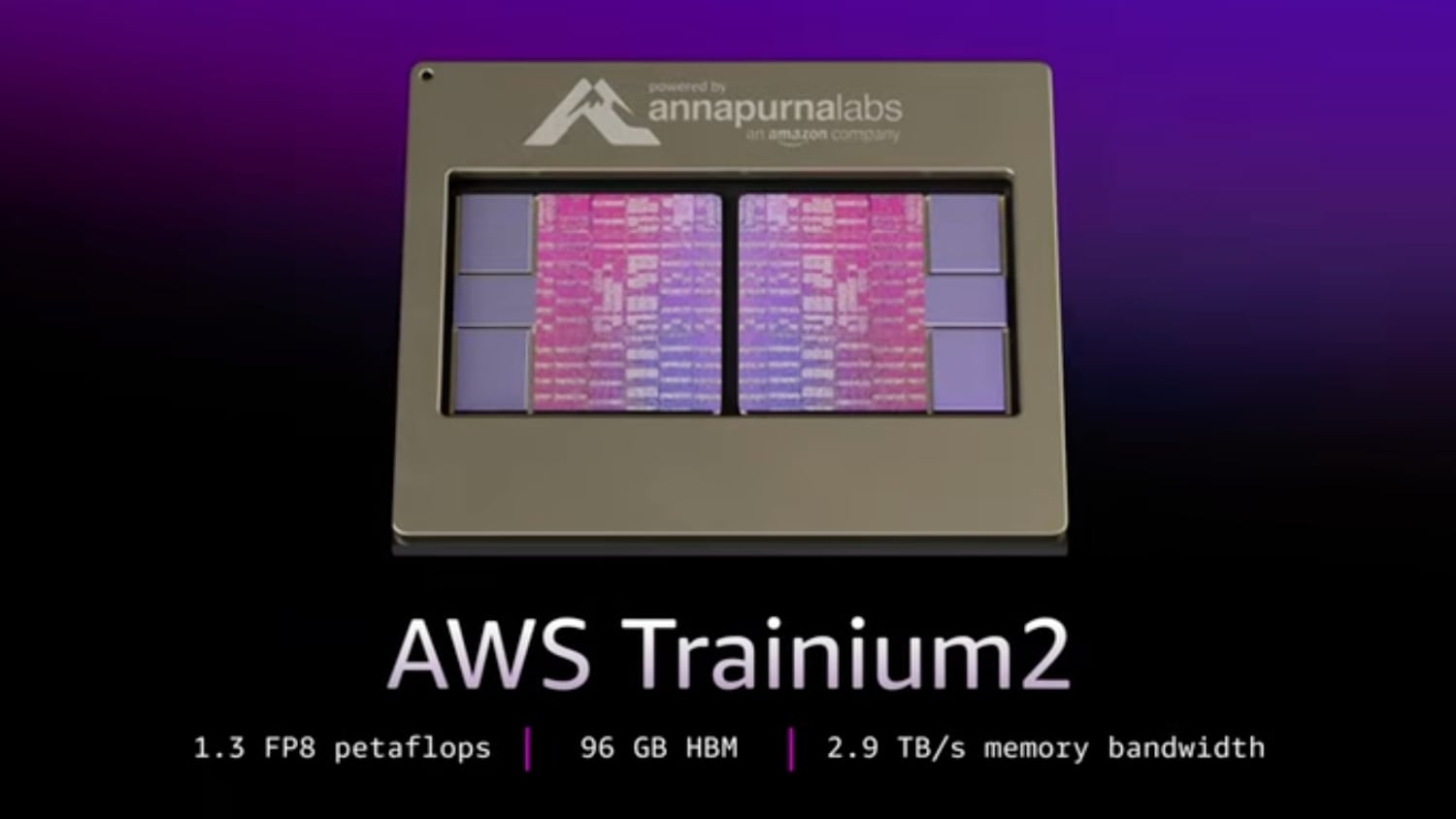

Dupin said that Amazon's AI chips are "reliable, definite, and able to serve [Apple] customers worldwide." AWS and Amazon have a "strong relationship," and Apple plans to test whether Amazon's Trainium2 chip can be used for pre-training Apple Intelligence and other AI models. Amazon announced rental opportunities for the Trainium2 chip today.

Apple has used AWS for more than 10 years for Siri, Apple Maps, and Apple Music. With Amazon's Inferentia and Graviton chips, Apple has seen a 40 percent efficiency gain, and with Trainium2, Dupin said Apple expects up to a 50 percent improvement in efficiency with pre-training.

Nvidia is the market leader when it comes to GPUs for AI training, but companies like Amazon are aiming to compete with lower-cost options.

Article Link: Apple Uses Amazon's Custom AI Chips for Search Services

I don't get it. Is it really more efficient to have an R&D department design your own chip and then order huge numbers of them to be manufactured, rather than just buying off the shelf or asking a chip manufacturer like Nvidia to build a specific one that every other company can also use?

tbf this still kind of exists, and in a previous era it used to be the norm. I doubt there was much between Apple and Microsoft in 1995 except maybe Office. In modern tech everything overlaps, so that's why they all work together now.

I use a YouTube video summarizer. It works really well and has saved me a lot of time. Maybe NovaGamer wants a shorter "rewrite" that has to be done by a human, but I think most people are just hoping to get the basic points.

There's marketing, and then there's business. Apple does significant business with Google and Microsoft, too. As I'm sure Microsoft does business with Oracle, and Google with Meta, and Amazon with Apple, and on down the line, every possible combination.

The "my favorite company is more pious and pure than your favorite company" routine is sportsball-style chum for ad impressions on sites like this one. The reality is, they all work together, as it suits them, because that's how business works. Efficiency first.


Wish they used AMD so ROCm wouldn’t be such a pile of hot garbage.

It’s not just you: GenAI cannot summarize – it’s a key area they not only struggle with but functionally cannot do well.

I invite anyone who wants to argue with me to please reply with research papers. I’ve asked before and I’ll ask again in the future… I’m deeply involved in this area and would love for someone to figure out how to crack that problem, or to point me to newer research that even attempts to do so with measurable success.

Until then, it is puzzling why it was one of the first features they rolled out. It intuitively seems like it can summarize, but it just cannot. Statistics and regression aren’t the same thing as critical human thought and novel synthesis.

I don’t hate GenAI, but I wish there were a more responsible rollout of the tooling and more understanding of, and openness about, its limitations.

You're going to have to give us a little bit more than, "I'm deeply involved in this area," for us to take you seriously. I've found that generative AI's ability to summarize content is one of its strengths. You don't have to believe me. Just believe Amazon. They apparently think it's good enough to put their retail business at risk using AI to summarize user reviews.

I mean, seriously. Asking us to "reply with research papers" proving GenAI is good at summarization is kinda like asking for research showing that water is wet. It's so obvious that nobody's going to research a broad generalization like that.

If you want to provide us research showing specific cases where GenAI doesn't summarize content with 100% accuracy, go for it. Let's pick it apart and debate it. I'm sure they exist. But does something need to be perfectly accurate to be useful? In most cases, no.



I mean... yes? Or Amazon wouldn't do it. Just business 101. It's either spend the money on your own project or pay profits to Nvidia. Also, if Amazon doesn't do this, it will be perceived as falling behind and its stock will suffer.

Absolutely, happy to discuss.

Here's one from October: https://arxiv.org/html/2410.13961v1

Another one: https://arxiv.org/html/2410.20021

Here's a good one (this is a pdf link because the HTML beta formatting isn't good for this paper) from this past summer that talks about "limitations in terms of the alignment with human evaluation" which is precisely what I'm getting at: https://arxiv.org/pdf/2305.14239v3

I agree with you that, in the abstract, GenAI and LLMs are "ok" for low-criticality review, and maybe in some cases even good enough. But on a device I think there's a different benchmark – I shouldn't have to double-check whether my email or message summary is correct, and right now it effectively requires double the work.

I beta tested the Apple Intelligence summaries from inception and thought they'd improve by launch, but they did not. I turned them off because I got tired of checking things twice and getting misinformation. In one instance, a summary made me stop what I was doing completely because it said that something I owned had been flagged by administration (it turned out I was not the owner of the issue).

I also think it's crucial that more people know about these limitations, because these models are being deployed in the medical field prematurely. Transcribing text is one thing, but they are being used to summarize it too, and they will absolutely miss things, which should disqualify them from being used in such a critical area.

Scale alone will not solve this problem; there need to be some novel developments in the field itself. I base that opinion on years of experience working with experts in these and closely adjacent areas.

I use LLMs myself. They are especially useful to me for brainstorming and for getting a wide swath of information from a dense field – I've discovered corners of Computer Science I was unaware of, and just last week I found a new method for cooking something kind of obscure that I never would have come across without the LLM.

...

Adding some more context: because there are fiefdoms forming around Generative AI, I want to be explicit that I am not dogmatically against the technology, far from it. I have worked with many researchers directly, not just 'coders' using existing tools but the people doing the hard science work behind the scenes, and not just at one company but across academia, on dozens of research projects that either made the cut or didn't.

I believe reducing these models to stochastic parrots, as many critics have done over the last few years, is not useful. There absolutely is emergent behavior in some of the most complex models, but that behavior isn't controlled well and may not be truly controllable at all.

Without the capability to synthesize novel insight, you cannot summarize like a human. There is a lot of marketing going on in the industry where claims are being made that are not backed up by research. I am specifically interested in this area on a personal level; it's a bit of a hobby project for me outside of work.

The cognitive science fascinates me, and I want to see a future where this technology does what it promises. But precisely because it is almost good enough, it is going to be widely adopted, which could ultimately doom it and cause a bubble that will pop unless these problems are solved before that happens. When executives have the same experience I did and drop everything to respond to a 'hallucination' (I hate this term of art), they will kill funding for these projects.

I have been part of selection committees, executive review boards, etc., and I have seen promising technology killed because it crossed over into a certain category of "these people are trying to get funding." I think a significant amount of what is called "AI" now falls into that exact category. I've seen funding refused just because a project mentioned NLP, which was en vogue in the late aughts but became an also-ran. There is certainly more utility in GenAI in some cases, but the fact that it reached escape velocity and was deployed to a general public who don't have those insights means it's almost a Pandora's box for hard-science research: every 2nd, 3rd, and 4th paper you find that would otherwise have been discussing formulas and science is instead discussing model evaluation, just to secure some modest amount of funding, because the investors haven't caught on to the grift.

Sorry for being long-winded about this, but I think it's important to understand why I make the claims I do and take these strong positions. I know posting in a forum isn't going to change anything, but someone might eventually stumble on it and go "hmm..." and do something about it, and that will have been worth it.


Great...

On one side OpenAI, on the other Amazon.

What's next, Meta?

But isn't that why Apple is unique in the tech landscape? Because they offer the hardware and an operating system upon which multiple vendors can compete? Why should Apple compete with all of them directly rather than leverage the best-of-breed that's available?

Diversification is smart. It doesn't mean Apple won't excel one day with its own server chips. We already know that's coming, but until then, ride the coattails of great technology that's already available.

Wait, wasn’t Apple rumored to be using Google’s chips at one point?

And more importantly, is this just a stopgap to Apple using its own silicon down the road?

It feels increasingly plausible that the AI boom caught Apple with its pants all the way down.

Apple Silicon in the data center is coming. It's likely already deployed and being actively tested.

The confusion is coming from the fact that "AI" is being used so broadly these days. When they talk about features like Search and Siri being powered by AI chips, they are really powered by a subset of AI called Machine Learning (ML). That's been around for decades.

Generative AI (OpenAI, Summarize, etc.) is just the new-kid-on-the-block of the broader AI field of technologies.

The chipsets Apple has been using have been "ML" chipsets, not necessarily "GenAI" chipsets. Two different things, but both are being called "AI" these days.

How about it, Apple: let your customers install AI accelerators in their Pro desktops, OK? AMD Instinct or these Amazon AI chips? Tim, Craig, anyone…? Hello?!

I've got to clear a bunch of things off my plate right now, but I just wanted to quickly say that this was a fantastic response. Thank you for being "long-winded," because I now understand much better where you're coming from.

What’s the problem?

…tell me where you can buy an Amazon chip 🙄

All the hyperscalers are doing their own training- and inference-oriented chips at this point, and given NVIDIA's premiums, Apple would be wise to be trying all of them.

Apple is, reportedly, already using their own silicon for inference. Training has a different set of requirements, and Apple may not find it worthwhile to build their own chips for training, nor should they, because, as I said above, all the hyperscalers are making their own training-oriented chips.

Apple has been shipping "AI" accelerators in every iPhone since 2017 and in every Apple Silicon Mac. Their use of large language models is a bit behind, but then Google, who invented a key enabling technology, was caught entirely flat-footed by both OpenAI's and Meta's efforts.

Microsoft started incorporating LLMs into their products well before Apple, and I have to say, in my experience, Copilot (for "information work") isn't great. It takes a lot of experiments to get it to do anything useful. It hasn't saved me much time, and to the extent it has, it's been a drag on quality.

You think statements Apple's director of AI made at an industry conference weren't vetted and approved at the highest levels?

Pretty sure that guy violated his NDA. Apple doesn't like partners to reveal what tech they use.

Oh, no, you think that statements made by Apple's director of AI at an industry conference were instead made by someone who works for AWS. My mistake.

I think these are two different types of chips. Apple is working on AI server chips. Those are designed to run already-trained AI models, whereas the Amazon Trainium chips are "AI training chips" that are specifically optimized for the computationally intensive process of training new AI models, which often requires more raw power and memory bandwidth to handle large datasets.

Always has been.

Good to see that there is more than Nvidia for AI...

Apple TRAINED their current models on Google TPUs.
