Holy hell, when did Federighi turn into such a hunk?! You see that sultry gaze he’s giving in the still of the video??

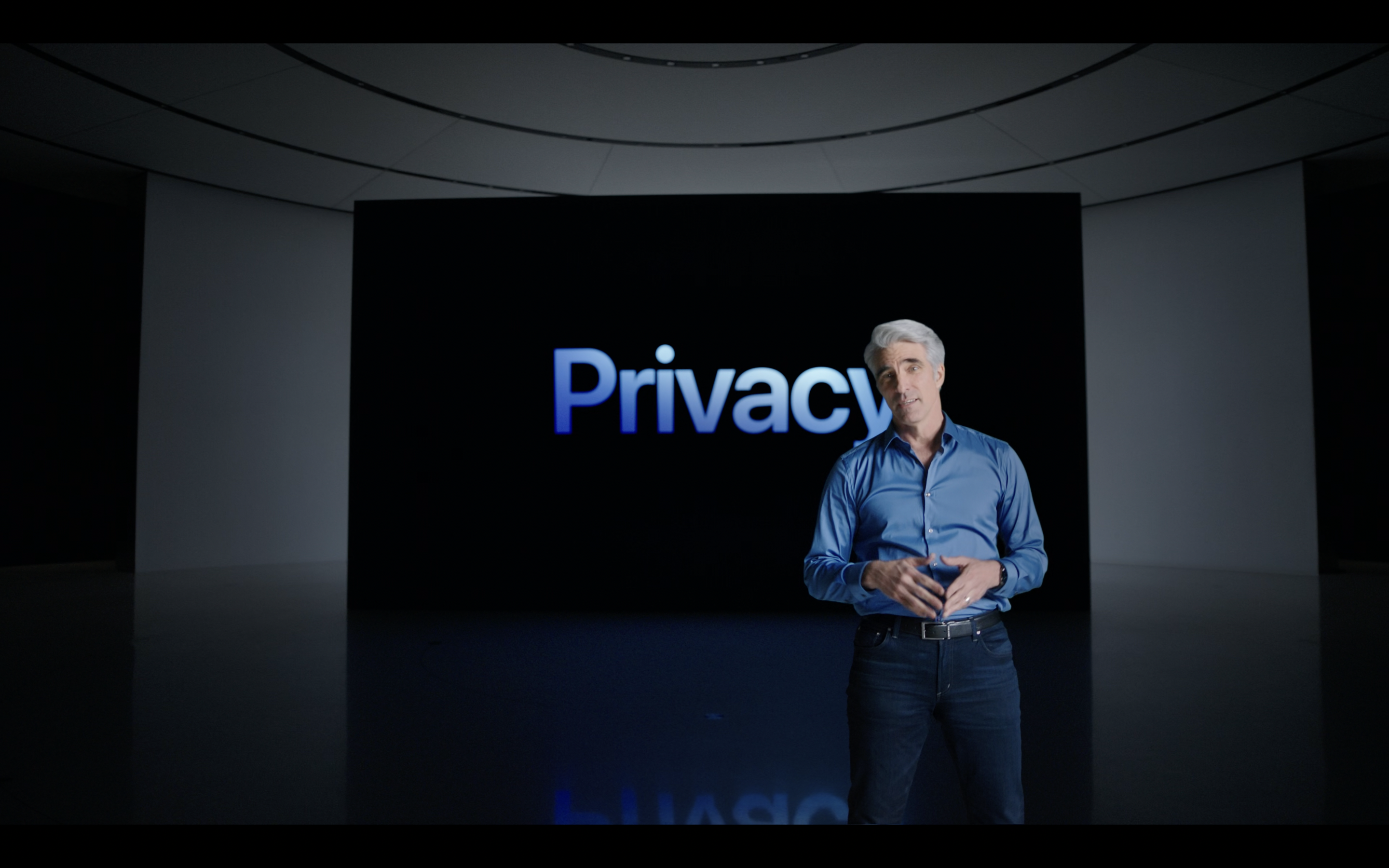

Craig Federighi Acknowledges Confusion Around Apple Child Safety Features and Explains New Details About Safeguards

Not at the cost of everybody's privacy.

My privacy is not being breached.

The comparison will take place on my phone and at no point will the results of that comparison leave my phone.

You can choose to live in the extreme examples of how it may go wrong, or amplify the rage machine, but 99.999999997% of the time the system will work as intended and your privacy will not be breached.

For the time when the 30,000,000,000 photos in your iCloud library see enough to flag a report, a human will double check it.

Good luck getting clarity on that you can trust.

Actually, my wish came true, after today's reports.

Imagine an Apple worker looking at your personal photos because their algorithm failed. We are talking about Apple, a company where everything it releases is full of bugs (macOS, iOS, etc.). Ya, I feel better now.

My privacy is not being breached.

The comparison will take place on my phone and at no point will the results of that comparison leave my phone.

You can choose to live in the extreme examples of how it may go wrong, or amplify the rage machine, but 99.999999997% of the time the system will work as intended and your privacy will not be breached.

For the time when the 30,000,000,000 photos in your iCloud library see enough to flag a report, a human will double check it.

Let me remind you that you don't have access to their code or their server side; you are just believing their bs.

No, but I wasn't making the claim that Apple was doing anything, therefore nothing for me to cite. As I said, other posters are making claims that Apple does not do this or that but cannot back those statements up using anything other than Apple marketing.

Well so far what I see discussed in this forum can be broadly categorised into two categories:

1. one based on published information from Apple

2. conspiracy theories of a back door

Well so far what I see discussed in this forum can be broadly categorised into two categories:

1. one based on published information from Apple

2. conspiracy theories of a back door

Wrong. More like:

1. People that believe the bs from a company.

2. People that truly care about privacy.

I go to a ballgame and they search me. That's OK. I don't let them come to my house and search me before the game.

I'm glad you understand the need for security at the game.

Apple should use a similar method:

Don't do the security check in your home (monitor what you do while you are doing it)

Do the security check at the gates, just before you enter their property.

Oh....

Everyone who would get caught by this will just switch to Android, rendering this useless anyway.

Even more reason to hate those green iMessage bubbles.

So what I'm hearing is that once I have 30 pictures of my baby, Apple will start looking at them taking baths? No thanks.

There it is...

You will do what Apple tells you and like it.

Thank you, Craig! I wonder if Craig woke up and read my comment from the previous article haha!

Craig Federighi Acknowledges Confusion Around Apple Child Safety Features and Explains New Details About Safeguards

Apple's senior vice president of software engineering, Craig Federighi, has today defended the company's controversial planned child safety... (www.macrumors.com)

All jokes aside! Let's talk business, Craig: you do not know what you are talking about when all you did was praise how important privacy was. Stay away from my PRIVACY, please. It is my HUMAN RIGHT. Craig, please give us an opt-out option from CSAM detection. Let our voices be heard. I will not appreciate Apple scanning my iCloud photos, whether it's through AI or hashing.

It sounds like Apple is using "Protecting Children" as an excuse to spy on consumers.

STOP this mass surveillance from being launched. This needs to be SHUT DOWN.

Apple, you are not a law enforcement organization. Stop acting like one. Apple, how are you not getting the point? You are violating our PRIVACY rights. Over 7000 signatures were collected. Stop playing with our PRIVACY and HUMAN rights.

appleprivacyletter.com

Releasing the source code won't do anything with the biggest problem some of these people have with Apple: trust.

There are several other areas of iOS which could be misused to an even larger degree and no one is demanding open sourcing those areas.

The CSAM detection is, as far as I can tell, the first component that has no benefit to the user at all. In the best case it consumes just some device resources, in the worst case it gets the user into serious trouble. It is nothing a rational user would want on their phone. Open-sourcing it would at least allow users to see just how bad it is.

Apple does not trust its customers; it treats all of them as potential sex predators. I do not think Apple should expect trust in return.

We must be reading different comments. I'm on page 12 of 25 and haven't read a single comment about kids' bath pics yet. I did read many of those on previous articles on this subject, but not in the comments on this article.

Warrantless searches, back door in iOS? What did Apple do to have this thrust upon us? It must have been epic; wish they would release that info. Does Apple understand we buy these products and WE own them? We expect privacy and they promised it, vehemently. So I think they should deliver or buy them back at full retail value! Let me know if that’s confusing, Craig; I can say it slowly if need be.

Anyone know when Vegas will start telling everyone what happened in Vegas? 😉

This all reminds me of 9/11. Scare people into giving up their privacy for the “greater good.”

That Federighi WSJ interview is a messaging disaster. Very slimy indeed.

If they wanted to catch CP in content uploaded to iCloud, then why not just add that to their privacy policy when using iCloud: "...every photo uploaded to iCloud will be cross referenced to the CSAM image database, and after 30 matches will trigger an internal human audit of the CSAM matches." I think most people would be totally comfortable with that; I would be, and I'm a staunch "my data is my data" person. That is very reasonable: their servers, they can scan what they want.

Now they want to scan on the phone what they can easily do (or still do) in the cloud. This stinks of something most foul, and they know it, but there's something in their pipeline that makes this new feature good for the share price long term... but what is that??

Imagine an Apple worker looking at your personal photos because their algorithm failed. We are talking about Apple, a company where everything it releases is full of bugs (macOS, iOS, etc.). Ya, I feel better now.

Let me remind you that you don't have access to their code or their server side; you are just believing their bs.

Yes. Yes, I believe their BS because their words match their actions; the foundation of trust.

Apple are clearly signalling what they are about to do.

They have told you how it will work.

They are attempting to clarify disinformation.

They have provided ways to opt out.

Regardless of how badly they have rolled this out - they are repeatedly being transparent with us - you are angry because they are being trustworthy.

Most people who buy the next iPhone, or upgrade to iOS 15 will be well informed.

So yes, I believe their bs.

You can choose to opt out.

There is mega-corporation One which is open and transparent or mega-corporation Two who is not open and transparent... But you suspect Two is kind of evil anyway so the lack of transparency is okay.

Yours and my minds are probably already made up, and neither of us will convince the other... But neither of us can plead ignorance because of Apple's actions.

The CSAM detection is, as far as I can tell, the first component that has no benefit to the user at all. In the best case it consumes just some device resources, in the worst case it gets the user into serious trouble. It is nothing a rational user would want on their phone. Open-sourcing it would at least allow users to see just how bad it is.

Apple does not trust its customers; it treats all of them as potential sex predators. I do not think Apple should expect trust in return.

I think it helps to see this CSAM detection feature in totality with the rest of the Apple ecosystem.

You are right in that on its own, there doesn’t seem to be any benefit to the end user. At best, I am proven innocent and am no better off than before the implementation of said feature. However, think about how other services like Facebook and Google scan everything you upload to their servers. What Apple is doing here is scanning your photos on device, before they would be uploaded to iCloud, so that Apple doesn’t subsequently have to scan them online.

In short, what Apple appears to be trying to do is to catch up to Facebook and Google in terms of weeding out bad actors, while going about it in as noninvasive a manner as possible.

In this context, it’s really no different from Apple using anonymised data for Maps and Siri while taking steps to ensure that the data used cannot be traced back to the end user. Compared to the competition, Apple is clearly taking the longer and the more difficult route.

There’s no indicator that Apple will do this, but it may also be laying the foundation for Apple eventually offering the option to encrypt your cloud storage. Apple has yet to do so, apparently because law enforcement doesn’t want them to. What if CSAM detection is the missing linchpin that would allow Apple to encrypt their users’ online files, while also convincing law enforcement that they do not have to worry about child pornography being uploaded to their servers (since Apple wouldn’t have the ability to scan for such content online)?

So with Apple, the value proposition seems to be “just let me check your photos once at the point of uploading and I will get off your back and never check it again”. Compare that to companies like Facebook and Google, where the photos are constantly being checked and mined for way more data than Apple ever will.

I can certainly get behind that.

you are angry because they are being trustworthy.

Nonsense. We are angry because we are under surveillance without a warrant.

What exactly does privacy mean in your dictionary?

I'm not so sure about that. Intelligence agencies have always wanted to be able to snoop on phones. Their best guys can probably already figure out a way of accessing stuff in the cloud.

Apple has now provided a way to snoop on a user's device. I think that should be sacred. The user can decide if they want to use the cloud or not, but if you choose not to then the OS shouldn't provide any way for a 3rd party to snoop on the phone.

Admittedly, Apple says that device scanning only occurs for cloud users, but since they have built the technology into the device OS, it could be sequestered even for non-cloud customers.

It can’t be ‘sequestered’ in that way because it doesn’t do anything useful to an attacker. The code on the phone never finds out if the user’s images matched the CSAM database. It never interprets what’s in the image using AI or anything else, and it never connects to a 3rd party. It has no idea if you have porn on your phone. The result of the match can only be read by Apple on its own servers. Any attacker with enough access to change all that into something useful would have a far easier time forgetting about the CSAM feature altogether and just hacking the Photos app, or Autocorrect, or Mail, or Safari, all of which do ‘scan’ your stuff in the clear.

Yes, you do get off the hook for possession.

Everywhere in the statute they use the word 'knowingly'.

An affirmative defense if you discover child pornography in your storage system is to promptly delete it.

(2) promptly and in good faith, and without retaining or allowing any person, other than a law enforcement agency, to access any visual depiction or copy thereof—

(A) took reasonable steps to destroy each such visual depiction; or

(B) reported the matter to a law enforcement agency and afforded that agency access to each such visual depiction.

When the cops break down my door and seize my computer to examine its content, my declaration that I didn't know what was there will be laughingly ignored and I will be successfully prosecuted. If I find it first and delete it, I don't have a problem. If I know it is there and do nothing, like Apple is doing when told they are uploading child porn, I am breaking the law.

It is still wrong for Apple to scan your data, on your device. No amount of explanation or obfuscation can change that.

My privacy is not being breached.

The comparison will take place on my phone and at no point will the results of that comparison leave my phone.

You can choose to live in the extreme examples of how it may go wrong, or amplify the rage machine, but 99.999999997% of the time the system will work as intended and your privacy will not be breached.

For the time when the 30,000,000,000 photos in your iCloud library see enough to flag a report, a human will double check it.

Warrantless searches, back door in iOS? What did Apple do to have this thrust upon us? It must have been epic; wish they would release that info. Does Apple understand we buy these products and WE own them? We expect privacy and they promised it, vehemently. So I think they should deliver or buy them back at full retail value! Let me know if that’s confusing, Craig; I can say it slowly if need be.

Anyone know when Vegas will start telling everyone what happened in Vegas? 😉

Well said. I think there is a case to be made that, based on their advertised commitments, Apple needs to compensate users for lost value and for losses when selling and changing devices based on this sea change 😉. Hmmm.

Apple can overwrite the hash table with a new version every time you update the OS.

And that is supposed to make us feel BETTER? I trust Apple to make devices that protect my privacy, not that allow them to snoop through my data on devices I paid for!

It is still wrong for Apple to scan your data, on your device. No amount of explanation or obfuscation can change that.

No. It's not wrong.

I'm aware of the feature. I understand how it works. I know my options.

Do you get that?

I can choose to not update.

I can choose to not use iCloud.

I can choose an alternate device.

I choose to agree to those terms.

Nonsense. We are angry because we are under surveillance without a warrant.

What exactly does privacy mean in your dictionary?

privacy

noun [ U ]

someone's right to keep their personal matters and relationships secret.

You have the right to not grant Apple this functionality and your secrets will stay on your device.

You know this because Apple have been clear and transparent with how it will work and how you can opt out.

Privacy protected.
