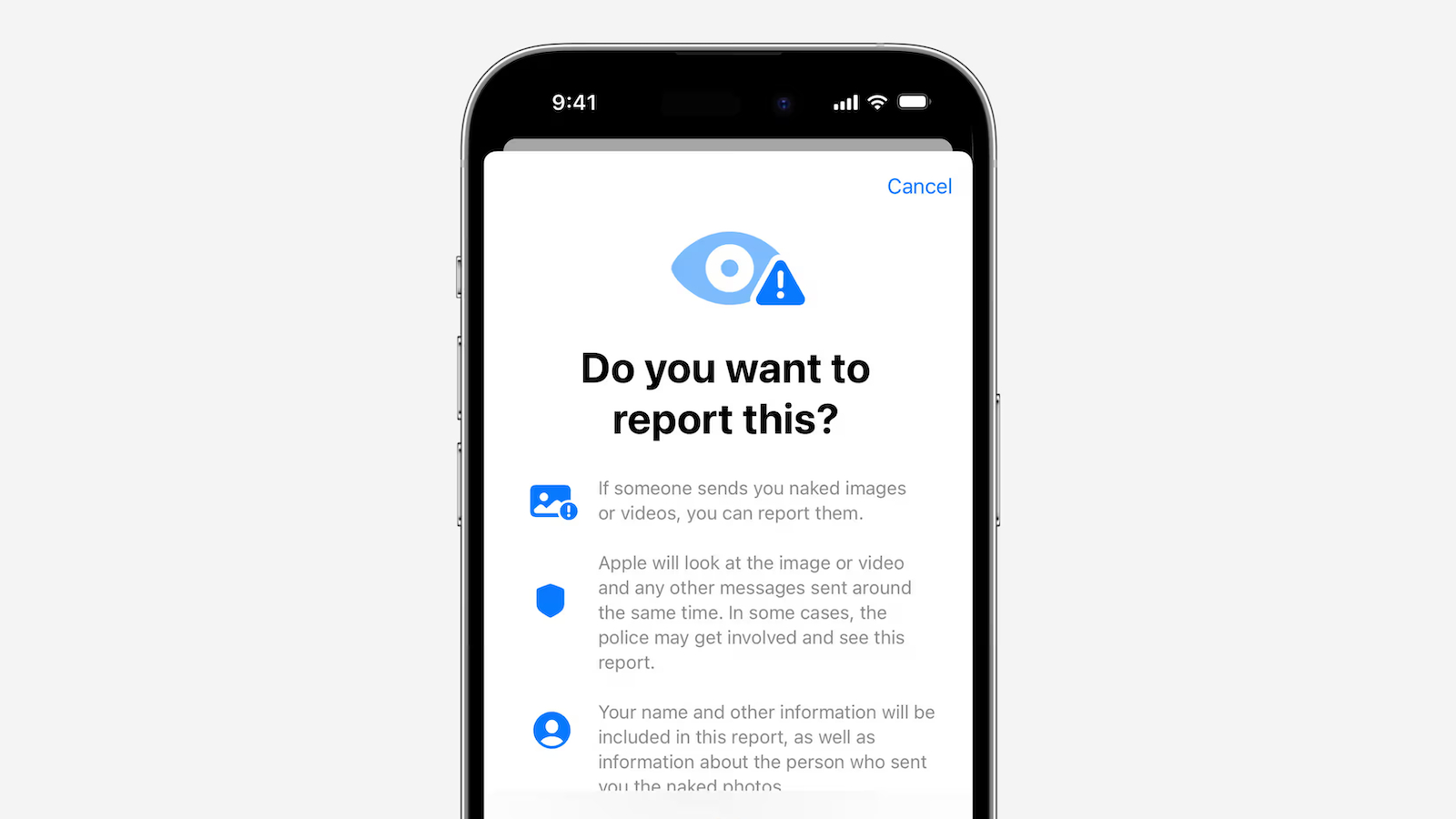

Starting with iOS 18.2, children in Australia have a new option to report iMessages containing nude photos and videos to Apple, the company told The Guardian. Apple said it will review these reports and could take action, such as by disabling the sender's Apple Account and/or reporting the incident to law enforcement.

The Guardian outlined what these reports will include:

The device will prepare a report containing the images or videos, as well as messages sent immediately before and after the image or video. It will include the contact information from both accounts, and users can fill out a form describing what happened.

The feature comes after Australia introduced new rules that will require tech companies like Apple to take stronger measures to combat child sexual abuse material (CSAM) on their platforms by the end of 2024, according to the report.

Apple said it plans to make this feature available globally in the future, according to the report, but no timeframe was provided.

This is an extension of Apple's existing Communication Safety feature for iMessage, which launched in the U.S. with iOS 15.2 in 2021. With the release of iOS 17 last year, Apple expanded the feature worldwide and enabled it by default for children who are under the age of 13, signed in to their Apple Account, and part of a Family Sharing group.

Communication Safety is designed to warn children when they receive or send iMessages containing nudity, and Apple says the feature relies entirely on on-device processing to protect privacy. The feature also applies to AirDrop content, FaceTime video messages, and Contact Posters in the Phone app. Parents can turn off the feature on their child's device in the Settings app under Screen Time.

The nudity reporting option comes after Apple in 2022 abandoned its controversial plans to detect known CSAM stored in iCloud Photos.

The first iOS 18.2 beta was released yesterday for devices with Apple Intelligence support, including iPhone 15 Pro models and all iPhone 16 models. The software update is expected to be widely released to the public in December.

