With the new Liquid Glass design taking the spotlight, Apple didn't spend much time discussing Apple Intelligence at WWDC 2025, nor did it mention the missing Siri features. Even so, Apple is continuing to build out Apple Intelligence in iOS 26, with new features and updates to some existing ones.

We've outlined what's new with Apple Intelligence below.

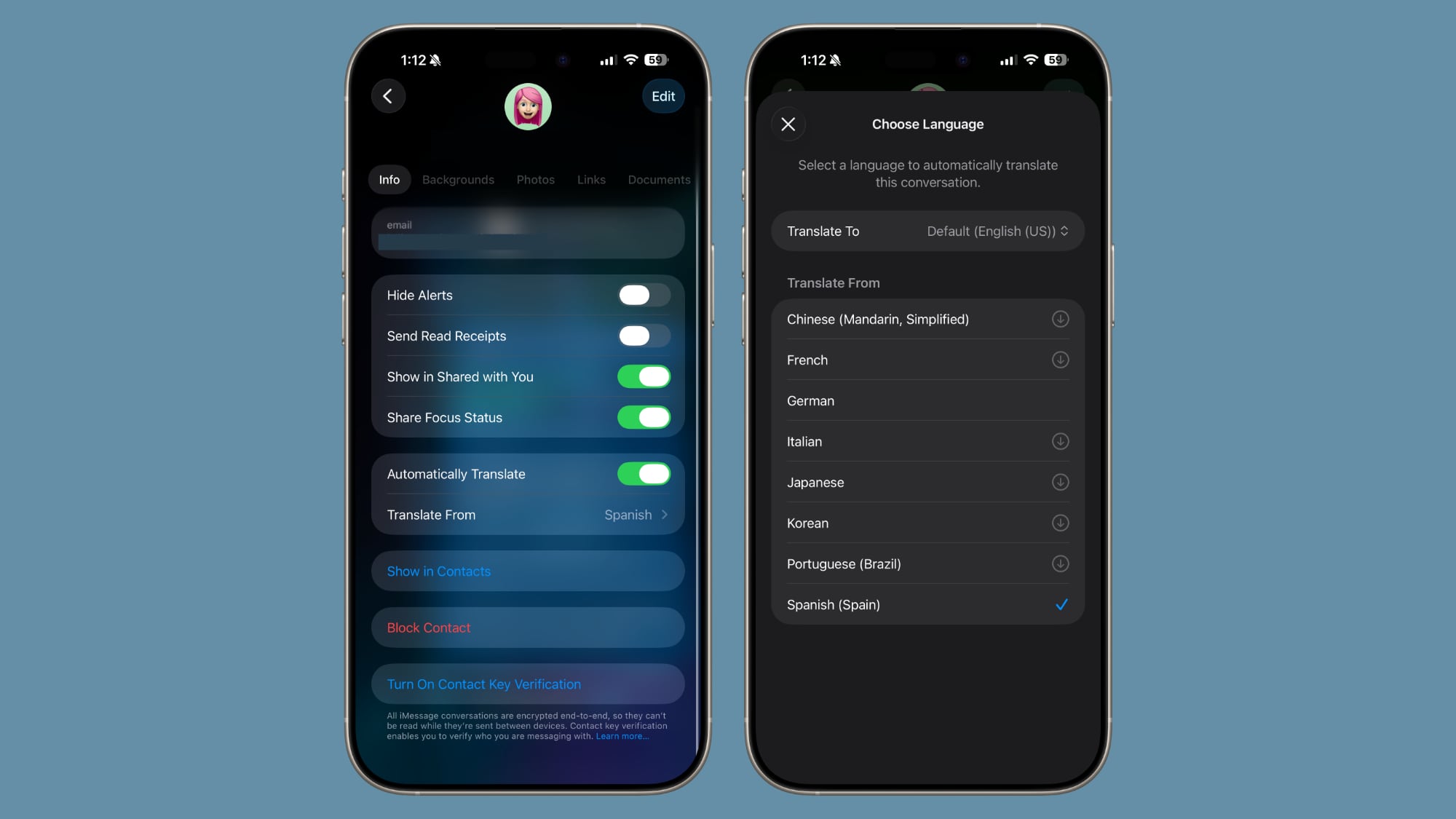

Live Translation

Live Translation works in Messages, FaceTime, and Phone. It automatically translates both spoken and text conversations when the people conversing do not speak the same language.

In a Messages conversation, tap the person's name and then toggle on the Automatically Translate option. From there, you can select the language that you want your conversations translated into. Language packs vary in size, but they are around 900MB.

Language options include English (US), English (UK), Chinese (Mandarin, Simplified), French, German, Italian, Japanese, Korean, Portuguese (Brazil), and Spanish (Spain). You can set both the "translate to" and the "translate from" languages.

The messages that you send to someone will show up in both your language and the translated language on your iPhone, while the person on the other end sees the message only in their language. Messages they respond with will show both their language and the translated language.

Live Translation works similarly in the Phone and FaceTime apps. It needs to be turned on for each conversation, and language assets need to be downloaded. In the Phone app, Live Translation translates spoken content aloud using an AI voice, and you can also see a transcript of the conversation.

In FaceTime, you'll see translated captions for speech, so you'll hear what the person is saying in their own language while also being able to read live captions with a translation.

To use these features, both participants should have Live Translation, which means an Apple Intelligence-enabled iPhone, iPad, or Mac running the 26-series software. In Messages, though, if you have Live Translation turned on and you're chatting with someone who has an older device, they can type in their language and you will see the translation. Your responses to them aren't translated into their language.

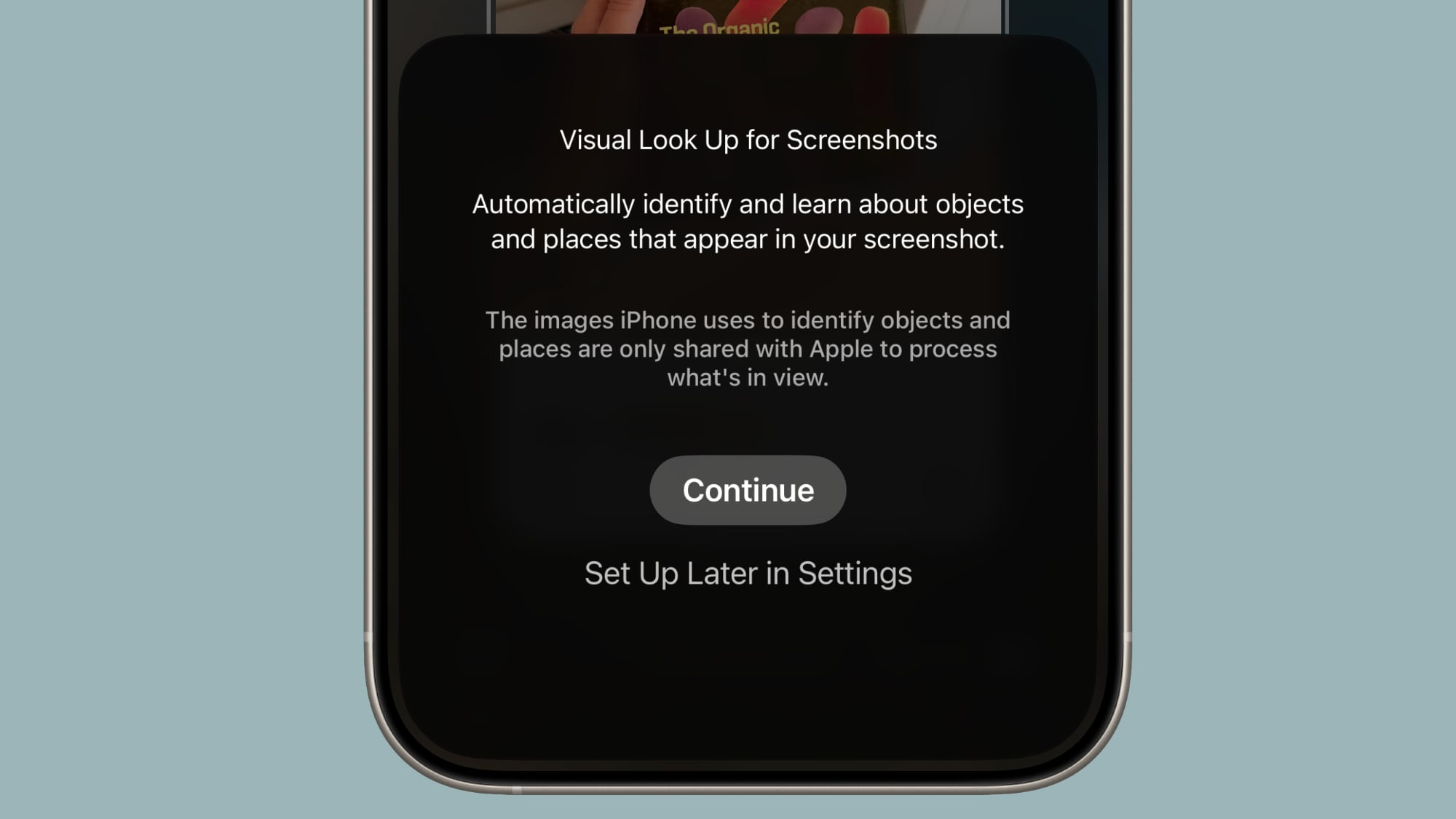

Onscreen Visual Intelligence

In iOS 26, you can use Visual Intelligence with content that's on your iPhone's screen, asking questions about what you're seeing, looking up products, and more.

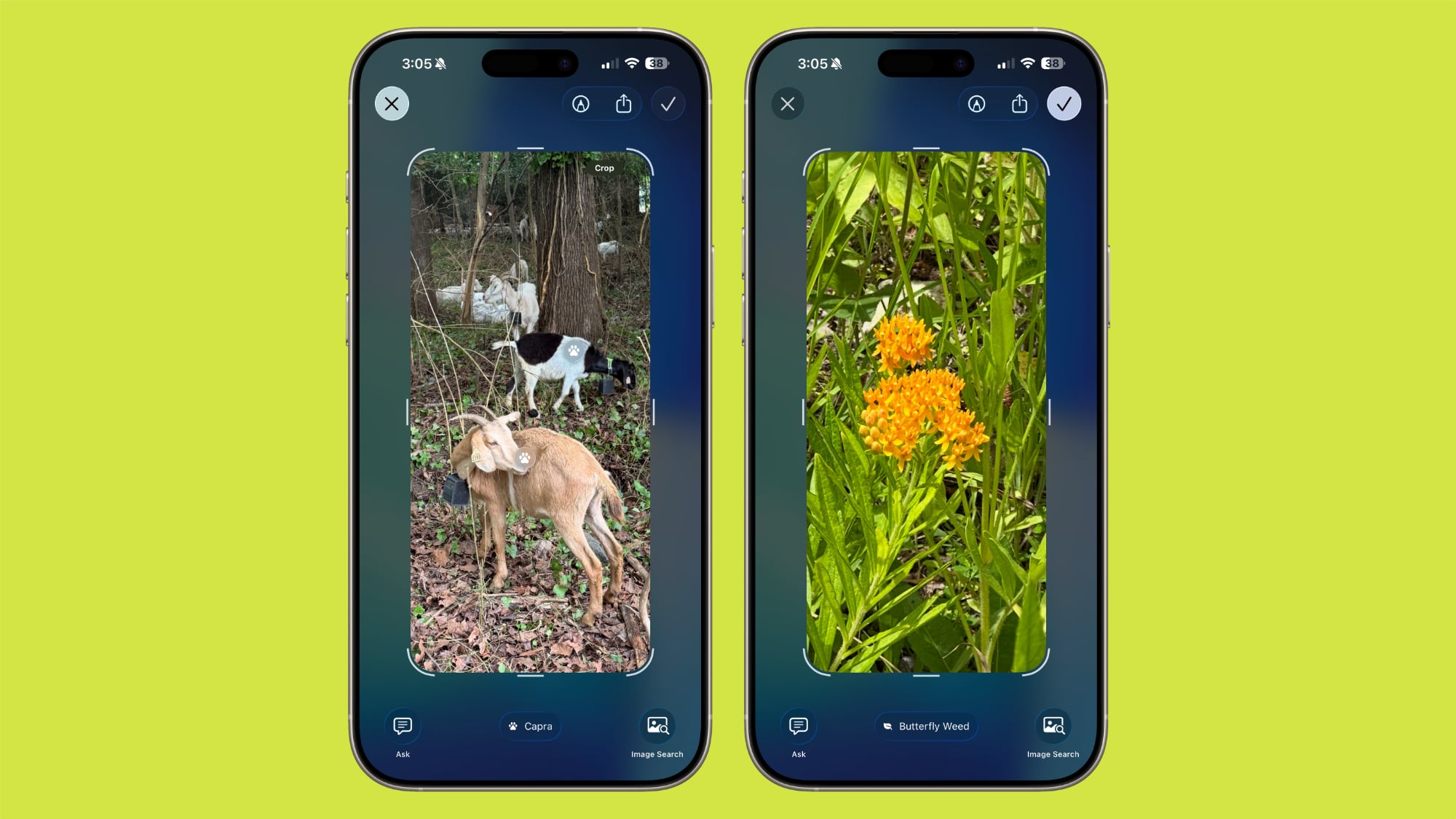

Visual Intelligence works with screenshots, so if you take a screenshot on your iPhone and tap into it, you'll see new Ask and Image Search buttons. With Ask, you can ask ChatGPT a question about what's in the screenshot. The image is sent to ChatGPT, and ChatGPT is able to provide a response.

Image Search has two options. You can send a whole screenshot to Google or another app, or you can use the Highlight to Search feature to select something specific in the screenshot. Just use a finger to draw over what you want to look up, and then swipe up to conduct a search.

You can search Google Images, Etsy, and other apps that implement support for the feature.

If there's an event in your screenshot, Visual Intelligence will pop up an "Add to Calendar" option that adds it directly to the Calendar app. It will also automatically suggest identifications for animals, plants, sculptures, landmarks, art, and books.

Wallet Order Tracking

Apple Wallet can scan your emails to identify order and tracking information, adding it to the Orders section of the Wallet app. The feature works for all of your purchases, even those not made using Apple Pay.

Automatic order detection can be enabled in the Wallet app settings under Order Tracking. Once it's turned on, you can see your orders by opening Wallet, tapping the "..." button, and choosing the Orders section.

Tapping into an order will provide you with the merchant name, order number, and tracking number, if available. You can also see the relevant email that the order information came from, and tap it to go straight to the message in the Mail app.

Image Playground

Apple quietly upgraded Image Playground, and the images that it generates using the built-in Animation, Sketch, and Illustration styles have improved. Faces and eyes look more natural, hair is more realistic, and it is better overall at generating a cartoonish image that resembles a real person.

The change is most notable with people, but objects, food, and landscapes have improved too. We have a full Image Playground guide with more info.

