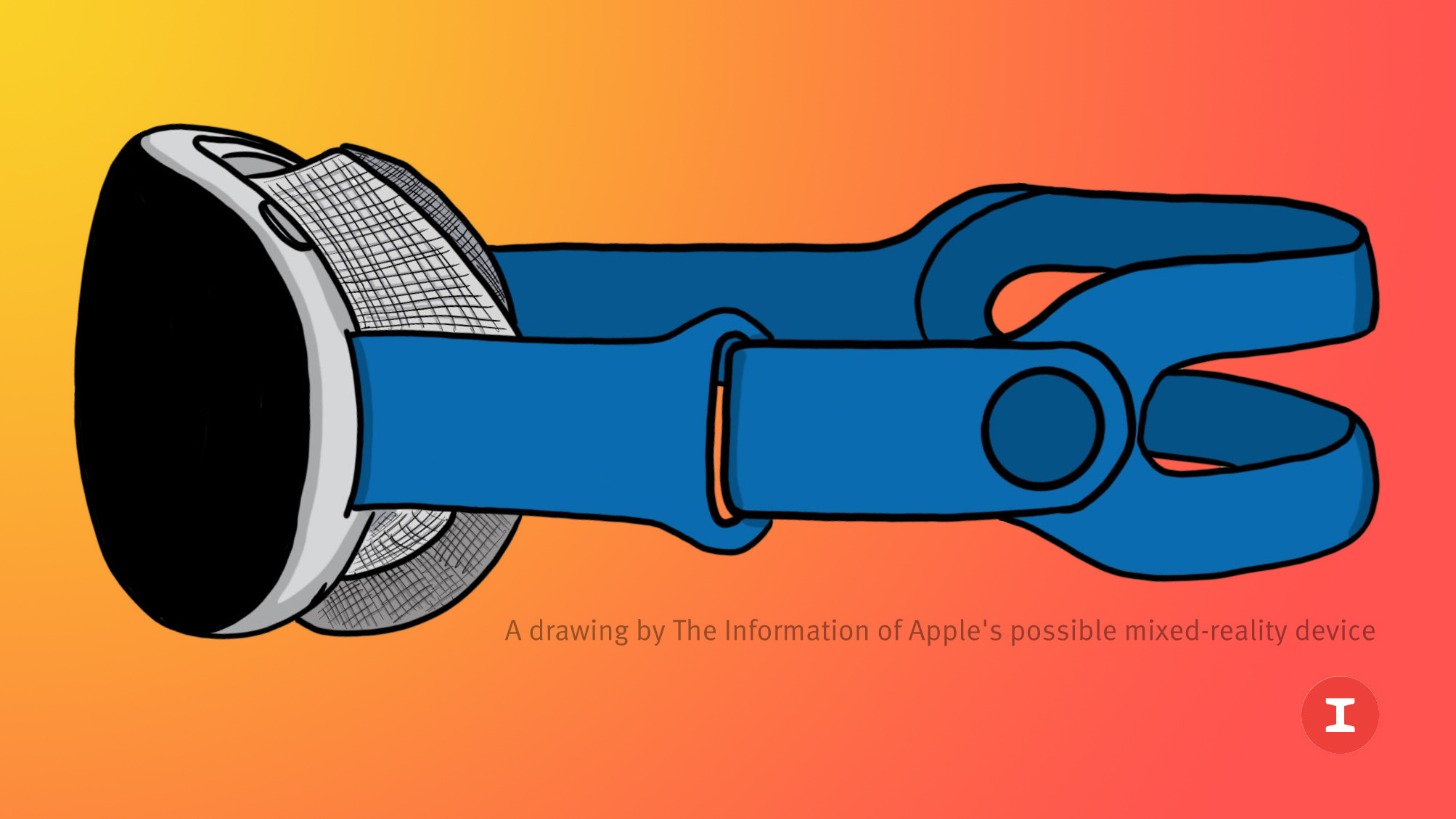

Apple plans to announce its long-rumored mixed reality headset during an event planned for January 2023, Apple analyst Ming-Chi Kuo said today.

In a tweet, Kuo offered a more precise timeline for Apple's mixed reality headset, which, despite speculation, did not appear during yesterday's WWDC keynote.

According to Kuo, Apple will hold an event in January to reveal the product, with tools for developers shipping "within 2-4 weeks after the event." Pre-orders for the headset will start in the second quarter of 2023, with customers able to purchase the headset before next year's WWDC.

Multiple reports have highlighted ongoing issues with the headset's development, which have delayed its launch until next year. The headset is expected to be a niche device costing around $3,000. Learn more about the headset in our roundup.

Article Link: Kuo: Apple to Hold Special Event in January to Announce Mixed Reality Headset