
An all-new "compute module" device has been spotted in Apple beta code, hinting that new hardware may soon be on the way.

The new "ComputeModule" device class was spotted by 9to5Mac in Apple's iOS 16.4 developer disk image from the Xcode 14.3 beta, indicating that it runs iOS or a variant of it. The code suggests that Apple has at least two different compute modules in development, with the identifiers "ComputeModule13,1" and "ComputeModule13,3."

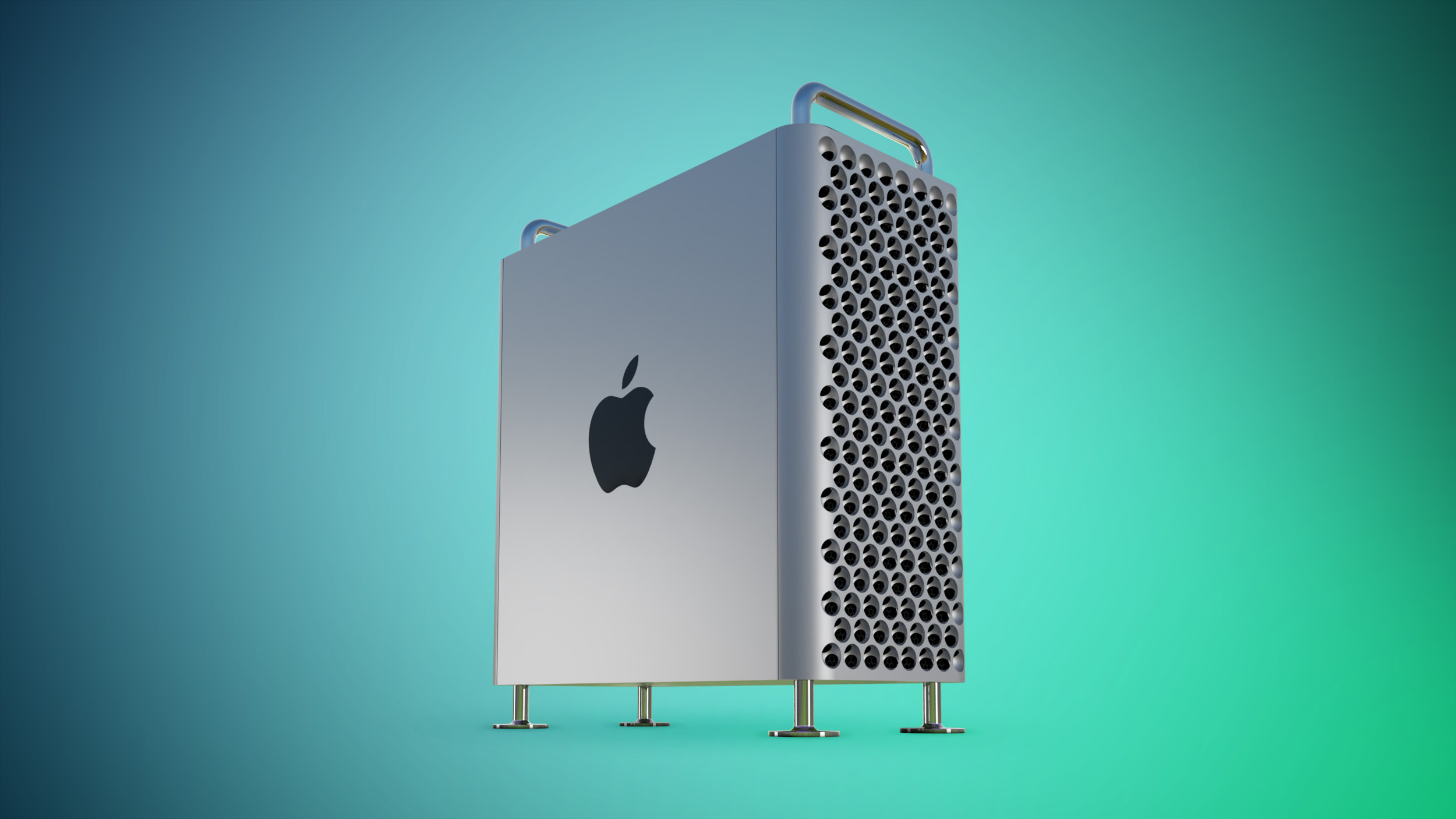

The modules' purpose is unclear, but speculation suggests that they are designed for the Apple silicon Mac Pro, potentially enabling a modular interface for swappable hardware components or adding compute power via technologies like Swift Distributed Actors. It is also possible that the compute modules are designed for Apple's upcoming mixed-reality headset, or for something else entirely.

Yesterday, new Apple Bluetooth 5.3 filings were uncovered. Such filings often precede the launch of new products, so the compute module finding could be the latest indication that new Apple hardware is on the horizon.

Article Link:

Mysterious New 'Compute Module' Found in Apple Beta Code