Sora, OpenAI's AI video app, will no longer allow users to create videos featuring celebrity likenesses or voices without consent.

OpenAI, SAG-AFTRA, actor Bryan Cranston, United Talent Agency, Creative Artists Agency, and the Association of Talent Agents today shared a joint statement about a "productive collaboration" to ensure voice and likeness protections in content generated with Sora 2 and the Sora app.

Cranston raised concerns about Sora after users were able to create deepfakes that featured his likeness without his consent or compensation. The families of Robin Williams, George Carlin, and Martin Luther King Jr. also complained to OpenAI about the Sora app.

OpenAI has an "opt-in" policy for the use of a living person's voice and likeness, but Sora users were able to create videos of Cranston even though he had not permitted his likeness to be used. To fix the issue, OpenAI has strengthened guardrails around the replication of voice and likeness without express consent.

Artists, performers, and individuals are meant to have the right to determine how and whether they can be simulated with Sora. Along with the new guardrails, OpenAI has also agreed to respond "expeditiously" to any received complaints going forward.

OpenAI first tweaked Sora late last week in response to complaints from the family of Martin Luther King Jr., and the company said that it would strengthen guardrails for historical figures. OpenAI said there are "strong free speech interests" in depicting deceased historical and public figures, but authorized representatives or estate owners can request that their likeness not be used in Sora cameos.

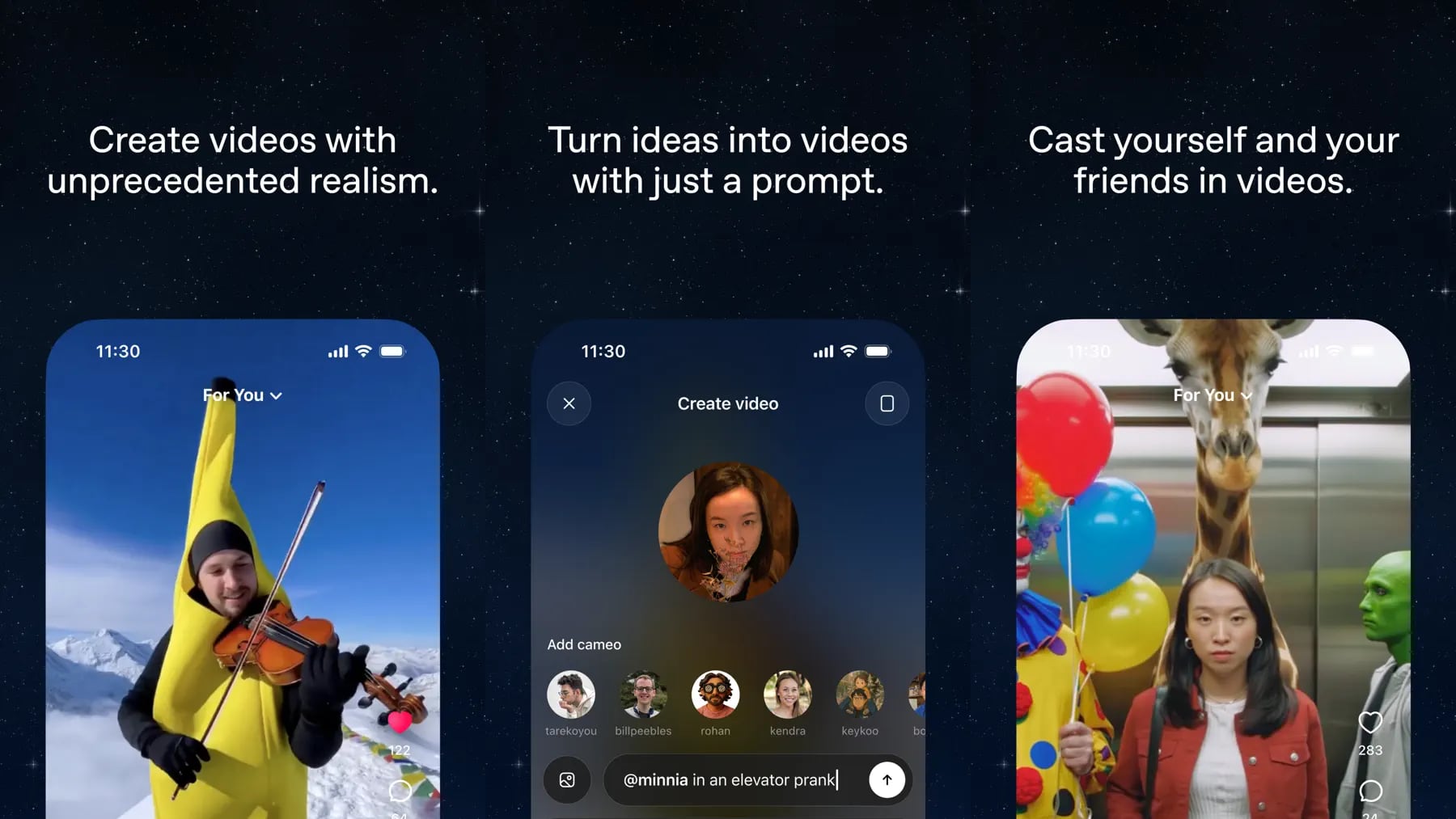

Sora launched on September 30, and it has since become one of the most popular apps in the App Store.

Article Link: OpenAI Strengthens Sora Protections Following Celebrity Deepfake Concerns