Following extended testing under the "Geekbench ML" name, Primate Labs is officially launching its new benchmarking suite for AI-centric workloads as Geekbench AI. The tool measures hardware performance across a variety of machine learning, deep learning, and other AI workloads.

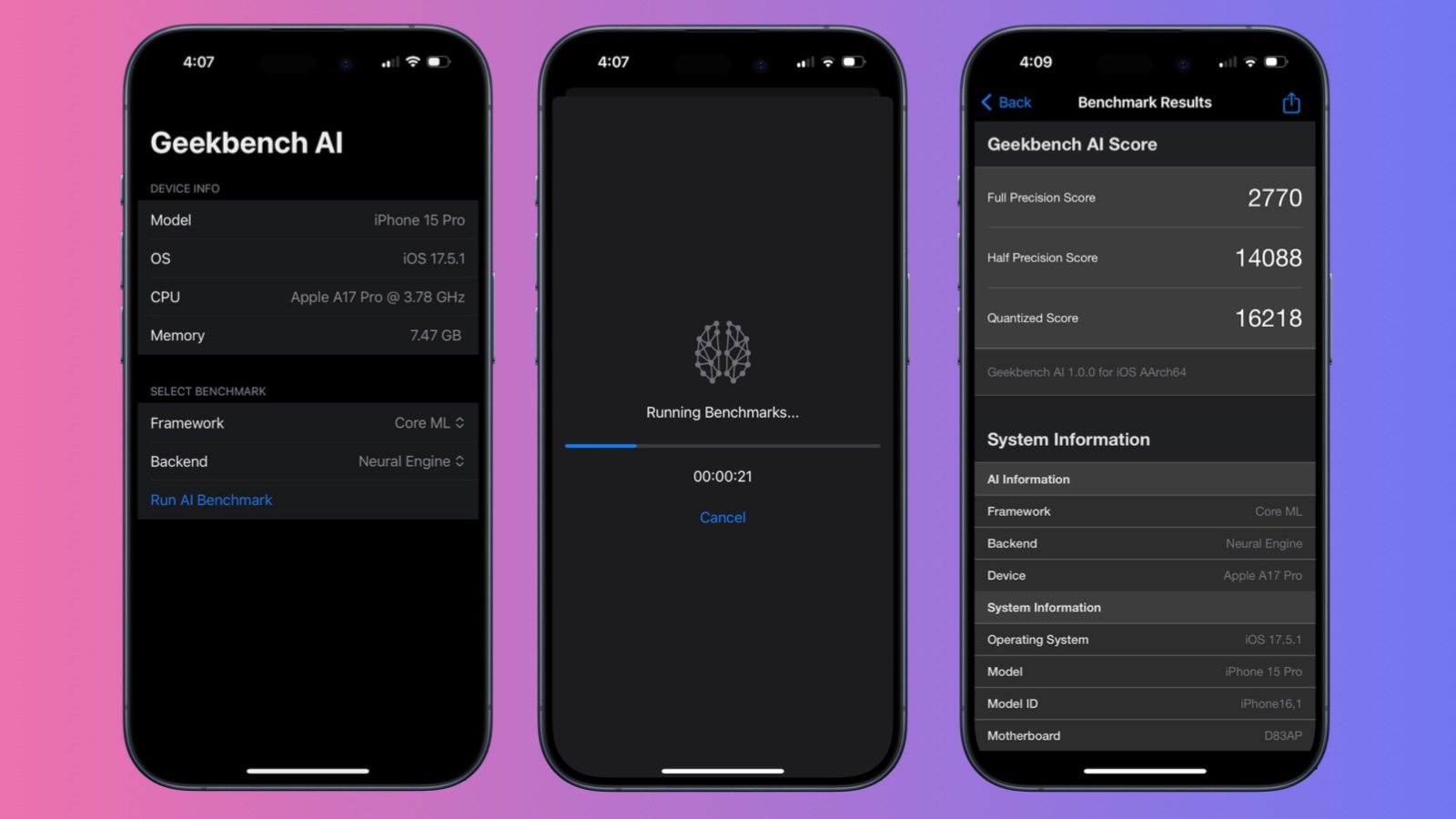

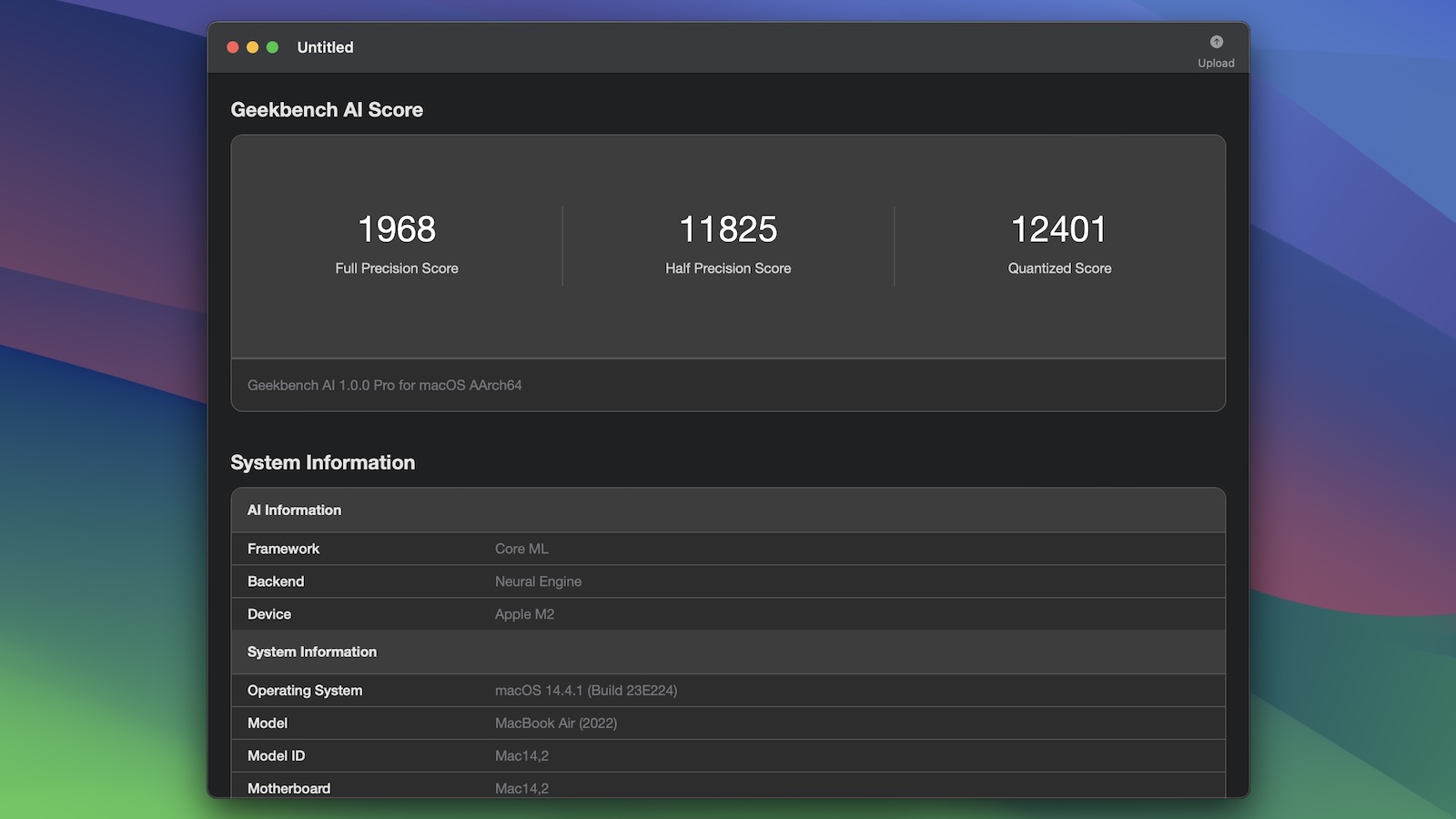

Geekbench AI 1.0 examines some of the unique workloads associated with AI tasks and seeks to encompass the variety of hardware designs that vendors employ to tackle them. Its benchmarking results include a three-score summary that reflects a range of precision levels: single-precision, half-precision, and quantized data.

In addition to these performance scores, Geekbench AI also includes an accuracy measurement on a per-test basis, allowing developers to improve efficiency and reliability while assessing the benefits and drawbacks of various engineering approaches.
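To illustrate why an accuracy figure is useful alongside scores at different precision levels, here is a minimal Python sketch that stores the same values in single precision, half precision, and a simple 8-bit quantized form, then measures how far each drifts from the originals. This is not how Geekbench AI computes its scores or accuracy. The helper names, the symmetric int8 scheme, and the random test data are all assumptions made for illustration.

```python
import random
import struct


def to_single(x: float) -> float:
    """Round a Python float (double precision) to IEEE 754 single precision."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_half(x: float) -> float:
    """Round a Python float (double precision) to IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def quantize_int8(values):
    """Hypothetical symmetric int8 quantization: returns (integers, scale)."""
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / 127.0
    ints = [max(-127, min(127, round(v / scale))) for v in values]
    return ints, scale


def mean_abs_error(original, approx):
    """Average absolute difference between two equal-length sequences."""
    return sum(abs(o - a) for o, a in zip(original, approx)) / len(original)


def precision_errors(values):
    """Representation error of `values` at each of the three precision levels."""
    ints, scale = quantize_int8(values)
    dequantized = [i * scale for i in ints]
    return {
        "single": mean_abs_error(values, [to_single(v) for v in values]),
        "half": mean_abs_error(values, [to_half(v) for v in values]),
        "quantized": mean_abs_error(values, dequantized),
    }


# Illustrative data only: 1,000 pseudo-random "weights" in [-1, 1].
rng = random.Random(0)
weights = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
errors = precision_errors(weights)

for level, err in errors.items():
    print(f"{level:>9}: mean absolute error = {err:.3e}")
```

In this toy setup the error grows as precision drops, which is the kind of trade-off between speed and fidelity that a per-test accuracy measurement can help make visible.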

Finally, the 1.0 release of Geekbench AI includes support for new frameworks and more extensive data sets that more closely reflect real-world inputs, improving the accuracy evaluations in the suite.

Geekbench AI is available for an array of platforms, including iOS, macOS, Windows, Android, and Linux.
