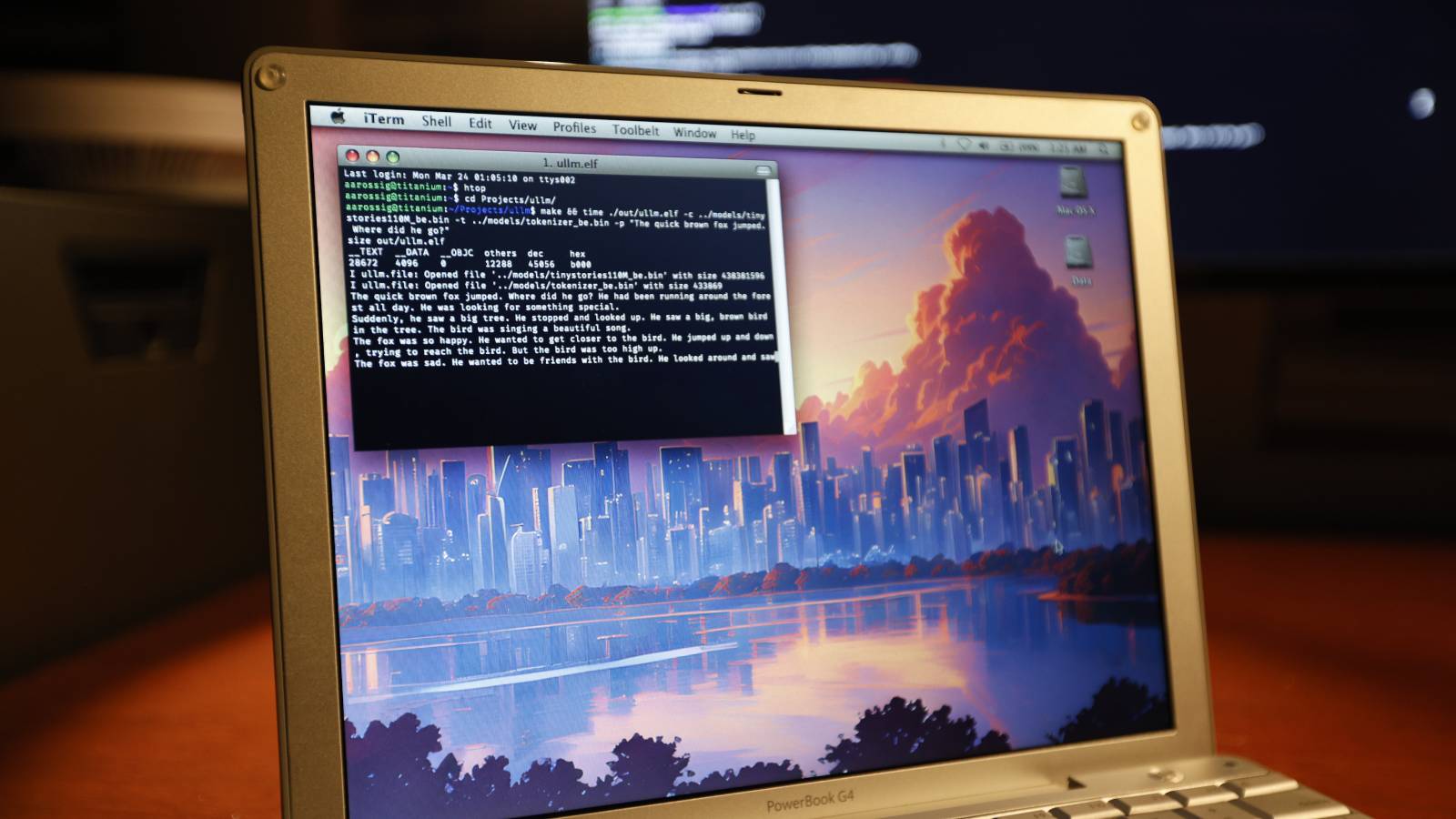

In a blog post this week, software engineer Andrew Rossignol (my brother!) detailed how he managed to run generative AI on an old PowerBook G4.

While large language models (LLMs) typically have high hardware requirements, this particular PowerBook G4 model from 2005 is equipped with a mere 1.5GHz PowerPC G4 processor and 1GB of RAM. Despite this 20-year-old hardware, my brother was able to run inference with Meta's Llama 2 LLM on the laptop.

The experiment involved porting the open-source llama2.c project and then accelerating its performance with AltiVec, a PowerPC SIMD (single instruction, multiple data) vector extension that lets one instruction operate on several values at once.

His full blog post offers more technical details about the project.

Similar examples of generative AI models running on the PlayStation 3, Xbox 360, and other old devices have surfaced in the news from time to time.

Article Link: Software Engineer Runs Generative AI on 20-Year-Old PowerBook G4