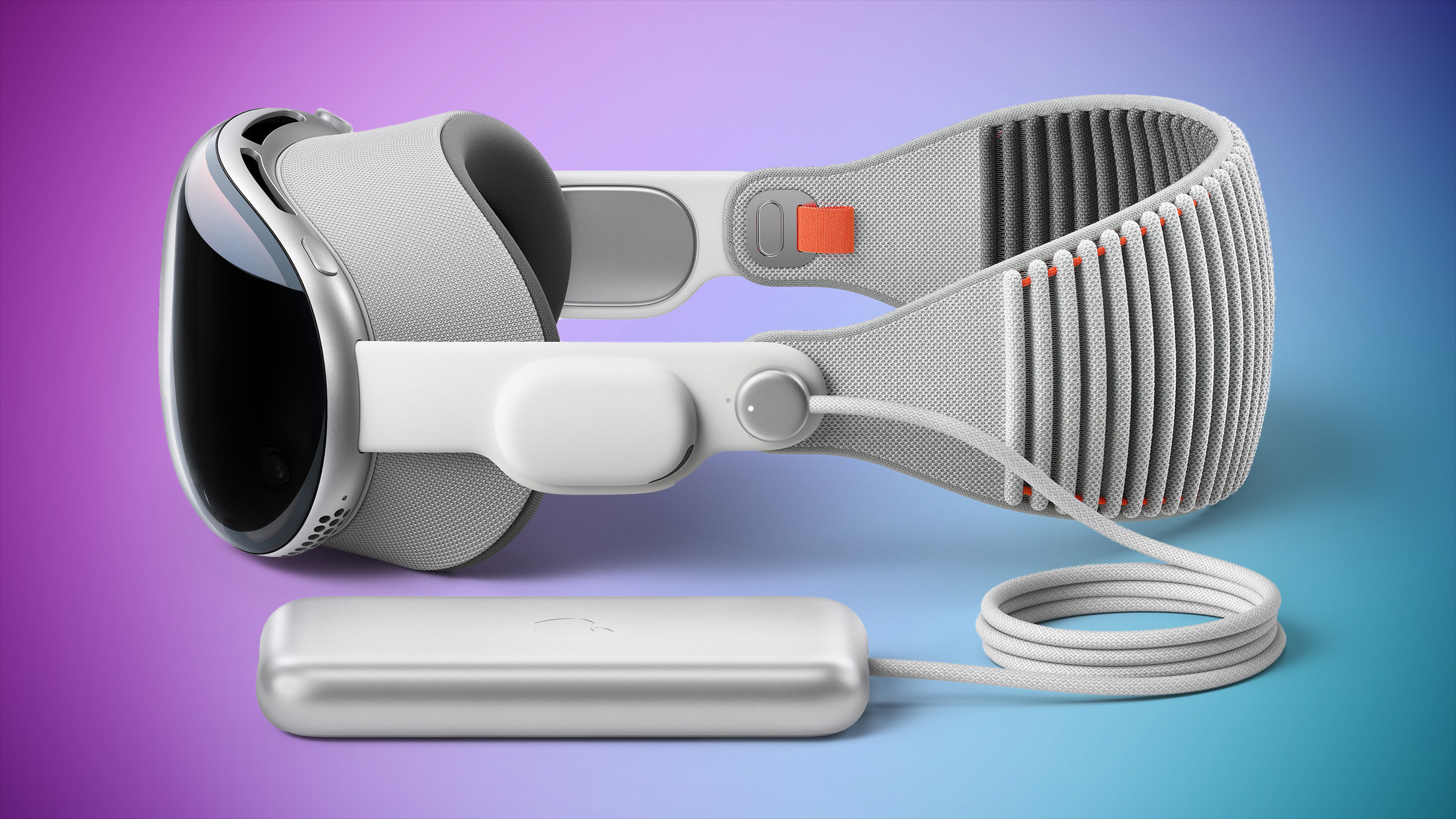

There have been multiple signs that Apple's mixed reality Vision Pro headset is struggling to take off, both because of its super high price and because its heavy design limits how long most people can wear it. What does the Vision Pro's floundering mean for Apple's work on future virtual and augmented reality projects?

Vision Pro Interest

Interest in the Vision Pro was high in February when the device first launched because it was an all-new product category for Apple, but that didn't last. The Vision Pro is indisputably impressive, and it is mind-blowing to watch a butterfly flit by so closely it feels like you can reach out and touch it, or to see the rough skin of an elephant as it walks right by you, but the magic quickly wears off for most.

Early reviews found the Vision Pro hard to wear for a long enough period of time to incorporate it into a real workflow, and it was hard to find a use case that justified the $3,500 price tag. The Verge's Nilay Patel found the Vision Pro to be uncomfortably isolating, and The Wall Street Journal's Joanna Stern got nauseous every time she watched the limited amount of Apple Immersive Video content available. Reviewers agreed that watching TV and movies was one of the best use cases, but that makes for an expensive TV that can't be watched with anyone else.

Months later, sentiment hasn't changed much. There was a lot of demand at Apple Stores when the Vision Pro launched, and long lines of people wanted to give it a try. Once the demo was over, though, interest fell. As early as April, there were reports that enthusiasm about the Vision Pro had dropped significantly, and by July, there were reports of waning sales.

At MacRumors, a few of us bought a Vision Pro at launch, and those headsets are tucked away in their cases and rarely pulled out anymore, except to sometimes watch Apple's latest Immersive Video or to update to new visionOS software. MacRumors videographer Dan Barbera uses his Vision Pro once a week or so for watching content, but only for about two hours because it's painful to continue use after that point. MacRumors editor in chief Eric Slivka and I haven't found a compelling use case, and there's no content appealing enough for even weekly use.

I still can't wear the Vision Pro for more than two hours or so because it's too uncomfortable, and I'm prone to motion sickness so it sometimes makes me feel queasy if there's too much movement. The biggest reason I don't use the Vision Pro, though, is that I don't want to shut out what's around me. Sure, it's great for watching movies, TV shows, or YouTube videos on a screen that looks like it's 100 feet tall, but to do that, I have to isolate myself. I can't watch with other people, and I feel genuinely guilty when my cat comes over for attention and I'm distracted by my headset.

Watching movies on the Vision Pro is not a better experience than using the 65-inch TV in front of my couch. I am a gamer, but there aren't many interesting games, and a lot of the content that's available feels like I'm playing a mobile game in a less intuitive way. Using it as a display for my Mac is the best use case I've found, but it's limited to a single display and it's not enough of an improvement over my two-display setup to justify being uncomfortable while I work.

Beyond our own experiences with the Vision Pro, MacRumors site traffic indicates a lack of interest in the headset. When we publish a story about the Vision Pro, people don't read it. I wrote a Vision Pro story about the first short film on the headset just yesterday, for example, and it was our lowest traffic article for the day. It probably wasn't even worth my time to write, and that's not an isolated incident.

There are enterprise use cases for Vision Pro, and some people out there who do love the headset, so it does have some promise, and Apple has been marketing it to businesses. Some examples, from Apple and others:

- Porsche - Porsche engineers use the Vision Pro to visualize car data in real time.

- KLM - KLM Airlines is using the Vision Pro for training technicians on new engine models.

- Law enforcement - Police departments in California are testing Vision Pro for surveillance work.

- Medicine - A medical team in the UK used the Vision Pro for two spinal surgeries. Doctors in India also reportedly use it for laparoscopic surgeries, and an orthopedic surgeon in Brazil used it during a shoulder surgery. UCSD has been testing the use of Vision Pro apps for minimally invasive surgery.

- Science - MIT students wrote an app to control a robot using the Vision Pro's gesture support.

Confusion Over What's Next

With Vision Pro sales coming in under what Apple expected, we've seen some confusing rumors about what Apple's next move will be. There were initially rumors that Apple was working on two new versions of the Vision Pro, one that's cheaper and one that's a direct successor to the current model.

In April, Bloomberg's Mark Gurman said Apple would not launch a new version of the Vision Pro prior to the end of 2026, with Apple struggling to find ways to bring down the cost of the headset.

In June, The Information said Apple had suspended work on a second-generation Vision Pro to focus on a cheaper model. Later that same month, Gurman said that Apple might make the next Vision Pro reliant on a tethered iPhone or Mac, which could drop costs, and he said a cheaper headset could come out as early as the end of 2025.

In late September, Apple analyst Ming-Chi Kuo said Apple would begin productio...

Article Link: Will Apple Ever Make AR Smart Glasses?