On iPhone 16 models, Visual Intelligence lets you use the camera to learn more about places and objects around you. It can also summarize text, read text out loud, translate text, search Google for items, ask ChatGPT, and more. And thanks to the latest iOS 18.4 update from Apple, iPhone 15 Pro models can now get in on the action, too.

Until recently, Visual Intelligence was limited to iPhone 16 models with a Camera Control button, which was required to activate the feature. However, in February Apple debuted the iPhone 16e, which lacks Camera Control and yet supports Visual Intelligence. This is because the device ships with a version of iOS that includes Visual Intelligence as an assignable option for the device's Action button.

Apple later confirmed that the same Visual Intelligence customization setting would be coming to iPhone 15 Pro models via a software update. That update is iOS 18.4, and it's available now. If you haven't updated yet, you can do so by opening Settings ➝ General ➝ Software Update.

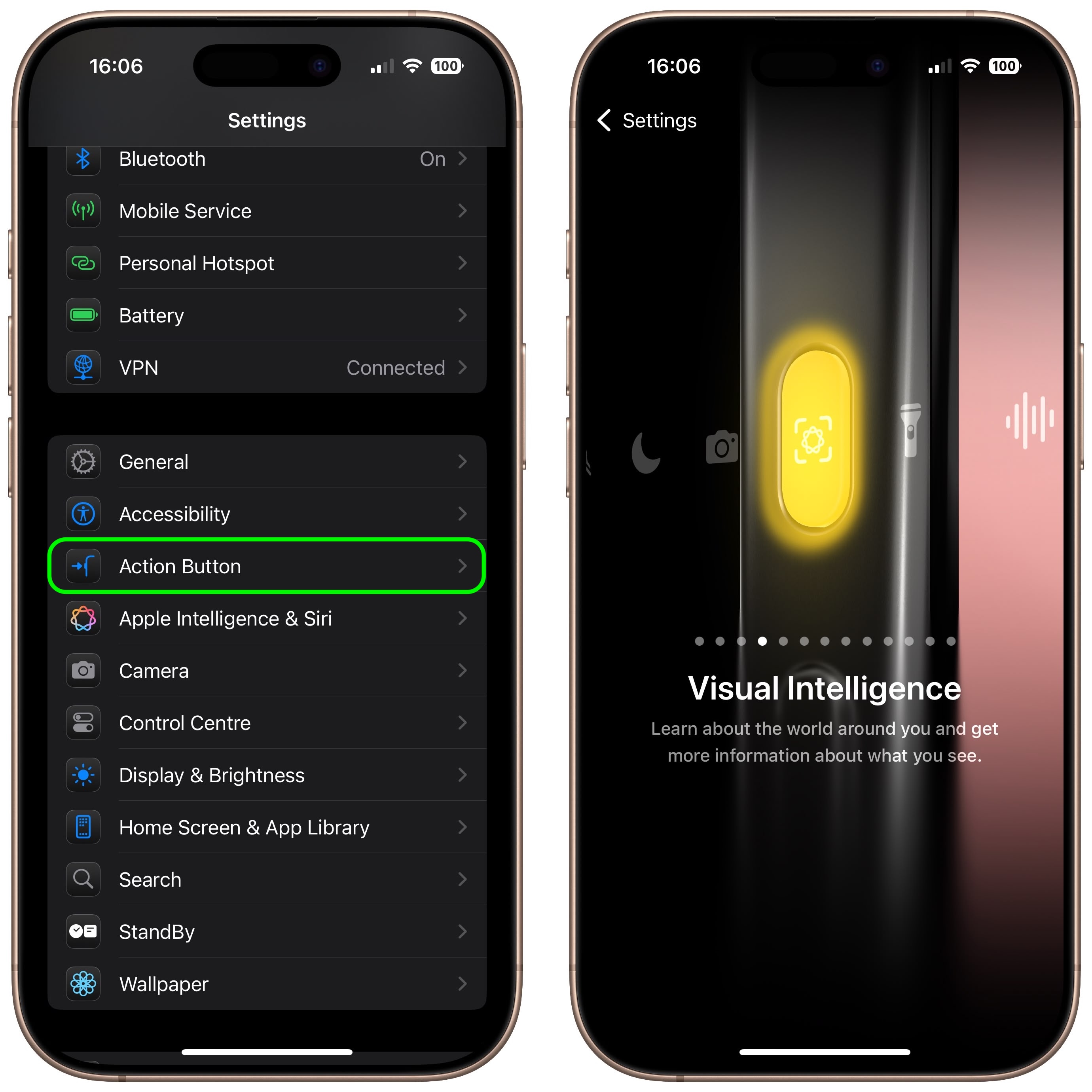

After your device is up to date, you can assign Visual Intelligence to the Action button as follows.

- Open Settings on your iPhone 15 Pro.

- Tap Action Button.

- Swipe to Visual Intelligence.

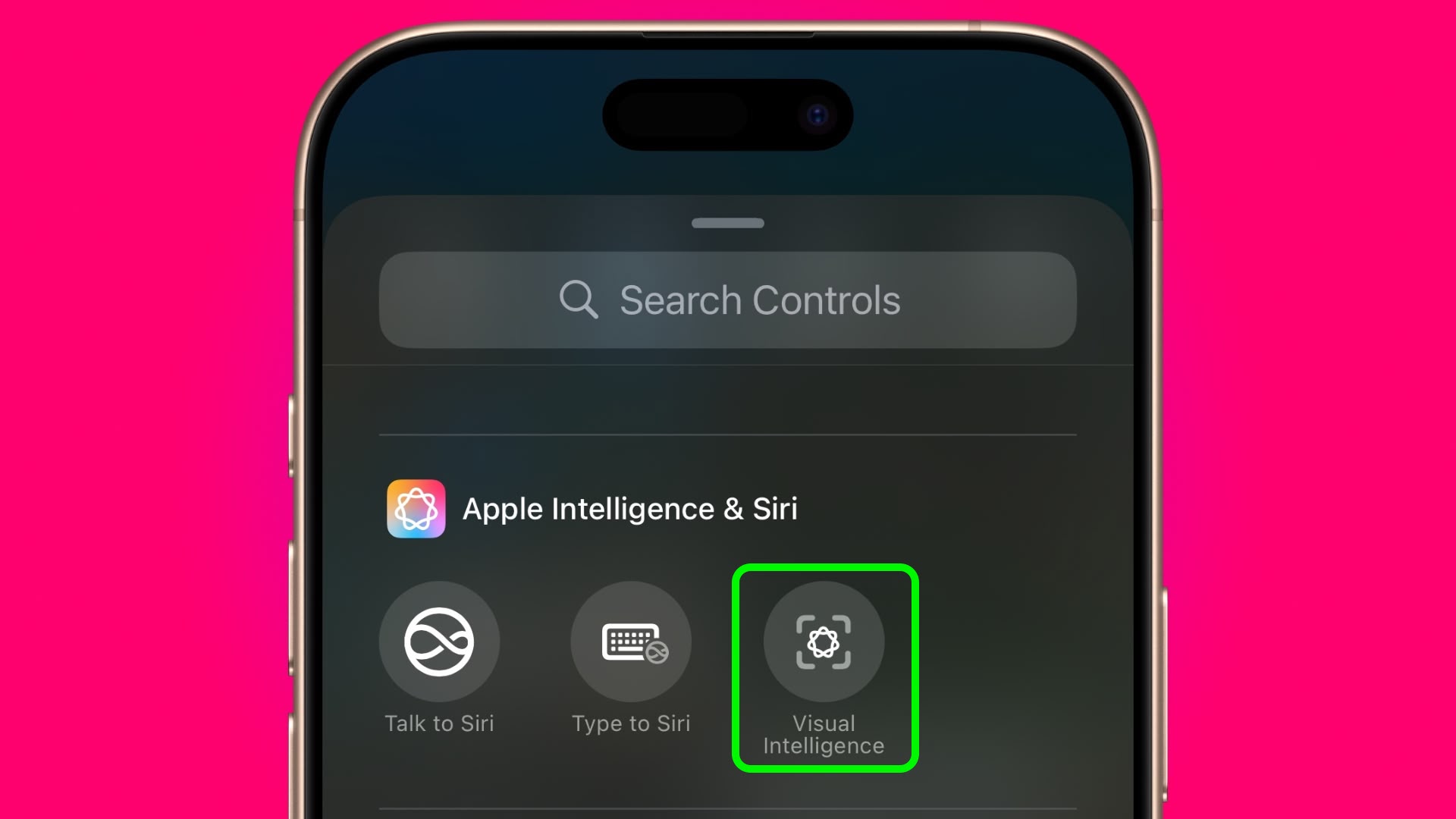

Pressing and holding the Action button will now activate Visual Intelligence. Note that you can also activate Visual Intelligence from a new button in Control Center. Here's how to add it.

- Swipe down from the top-right corner of your iPhone's display to open Control Center, then long press an empty area of the Control Center.

- Tap Add a Control at the bottom.

- Use the search bar at the top to search for Visual Intelligence, or swipe up to the "Apple Intelligence" section and choose the button.

- Tap the screen to exit the Control Center's edit mode.

Using Visual Intelligence

The Visual Intelligence interface features a view from the camera, a button to capture a photo, and dedicated "Ask" and "Search" buttons. Ask queries ChatGPT, and Search sends an image to Google Search.

When using Visual Intelligence, you can either snap a photo using the shutter button and then select an option, or select an option directly in the live camera view. You cannot use photos that you took previously.

To learn about everything that you can do with Visual Intelligence, be sure to check out our dedicated guide.
