At some point, it will begin to improve itself, killing everything in its path.


AI Companies Reportedly Struggling to Improve Latest Models

- Thread starter MacRumors


Ah, so back to the drawing board. Eventually it will get better, because of course it's future technology.

There’s a peak where the experience of having a conversation blurs the line between a chat bot and a human. OpenAI has arguably gotten there, with natural conversations that often feel human-like and only the occasional reminder that you are in fact speaking with a bot. Beyond that, improvements will be gradual and maybe even limited, because these LLMs are not actually intelligent; they just give off the illusion of being so.

This is why I think Apple will catch up. Siri with Apple Intelligence is maybe 1-2 years away from that ChatGPT human-like illusion. But what Apple has that no one else does is the world’s biggest App Store. When Siri is capable of going out and acting on those apps for us, leveraging the limitless capability of apps to perform tasks for us, AI will truly become useful.

The singularity will require software updates, lol.

That’s what I meant by “model complexity”… These AI systems aren’t a single monolithic model. They have multiple parts that make up a network, and that hasn’t hit our limits. We still have plenty of data, CPU, and software complexity to work with. “Diminishing” here is meant economically: it won’t be achievable on $20/month plans. Maybe $70?

This isn't a CPU, power, or model issue, it's an approach issue. We (royal) aren't building machines that learn.

No idea what cap means.

You aren’t the only one. I hate the hype. Read Weapons of Math Destruction and Coded Bias and then get back to me on AI.

Good. I genuinely hope the AI bubble bursts.

Some of the animosity toward AI is a little overblown. At times, it’s great at handling complicated questions in plain English and quickly giving a detailed answer. Sure, it will need to be regulated, but I like the technology.

But human computers did.

Really? When calculators came along, mathematicians didn't suddenly disappear.

I don't think more CPU, electricity, and data are going to make any difference, because we are approaching AI completely wrong. No number of new models is going to matter unless we figure out how to make a machine that can actually learn without needing to throw the entire world's data at it. AGI will not look anything like what we are currently doing, and I suspect that none of the big players are even in the running.

I have found zero use for AI, personally, so I don't care either way. My company (hospital) doesn't even allow its use on the premises.

Honestly **** AI. One of the biggest modern examples of a solution looking for a problem. People need to get outside, touch grass and read a book. Particularly the youth.

The problem is that it doesn’t replace people, yet people rely on it as an authoritative source, as they do Wikipedia now. I think it’s good in the long term as long as people realize it’s just a tool. I constantly joke that my daughter should write “Thanks Grammarly” on her master's graduation cap.

Some of the animosity toward AI is a little overblown. At times, it’s great at handling complicated questions in plain English and quickly giving a detailed answer. Sure, it will need to be regulated, but I like the technology.

Yup. It’s the freakin' self-driving car/fully autonomous car utopia, which will never come to fruition in our lifetime, all over again!! It’s insane that the tech industry didn’t learn from it…

Considering the wildly unrealistic expectations (AGI) many people have for LLMs, it's inevitable that the bubble will have to burst at some point.

There are alternative approaches being worked on, with some showing promise. One example is building dendritic properties into deep neural networks: https://www.sciencedirect.com/science/article/pii/S0959438824000151

This isn't a cpu, power or model issue, it's an approach issue. We (royal) aren't building machines that learn.

There have been huge breakthroughs in the past 5 years. Additional breakthroughs will happen, they simply will take more time and, as you implied, different approaches.

Apple upping their base RAM specs has been the only good thing from AI so far.

It won’t. Generative AI is here to stay, regardless of what this article states. Progress will be slow at times, but the path is clear; there is no turning back now.

Good. I genuinely hope the AI bubble bursts.

They've scraped up all the real, human created, content and are hitting a wall?

Dang, I'm so shocked by this...not.

Leading artificial intelligence companies including OpenAI, Google, and Anthropic are facing "diminishing returns" from their costly efforts to build newer AI models, according to a new Bloomberg report. The stumbling blocks appear to be growing in size as Apple continues a phased rollout of its own AI features through Apple Intelligence.

OpenAI's latest model, known internally as Orion, has reportedly fallen short of the company's performance expectations, particularly in handling coding tasks. The model reportedly lacks the kind of significant improvement over existing systems that GPT-4 showed over its predecessor.

Google is also reportedly facing similar obstacles with its upcoming Gemini software, while Anthropic has delayed the release of its anticipated Claude 3.5 Opus model. Industry experts who spoke to Bloomberg attributed the challenges to the increasing difficulty in finding "new, untapped sources of high-quality, human-made training data" and the enormous costs associated with developing and operating new models concurrently with existing ones.

Silicon Valley's belief that more computing power, data, and larger models will inevitably lead to better performance, and ultimately the holy grail – artificial general intelligence (AGI) – could be based on false assumptions, suggests the report. Consequently, companies are now exploring alternative approaches, including further post-training (incorporating human feedback to improve responses and refining the tone) and developing AI tools called agents that can perform targeted tasks, such as booking flights or sending emails on a user's behalf.

"The AGI bubble is bursting a little bit," said Margaret Mitchell, chief ethics scientist at AI startup Hugging Face. She told Bloomberg that "different training approaches" may be needed to make AI models work really well on a variety of tasks. Other experts who spoke to the outlet echoed Mitchell's sentiment.

How much impact these challenges will have on Apple's approach is unclear, though Apple Intelligence is more focused in comparison, and the company uses internal large language models (LLMs) grounded in privacy. Apple's AI services mainly operate on-device, while the company's Private Cloud Compute encrypted servers are only pinged for tasks requiring more advanced processing power.

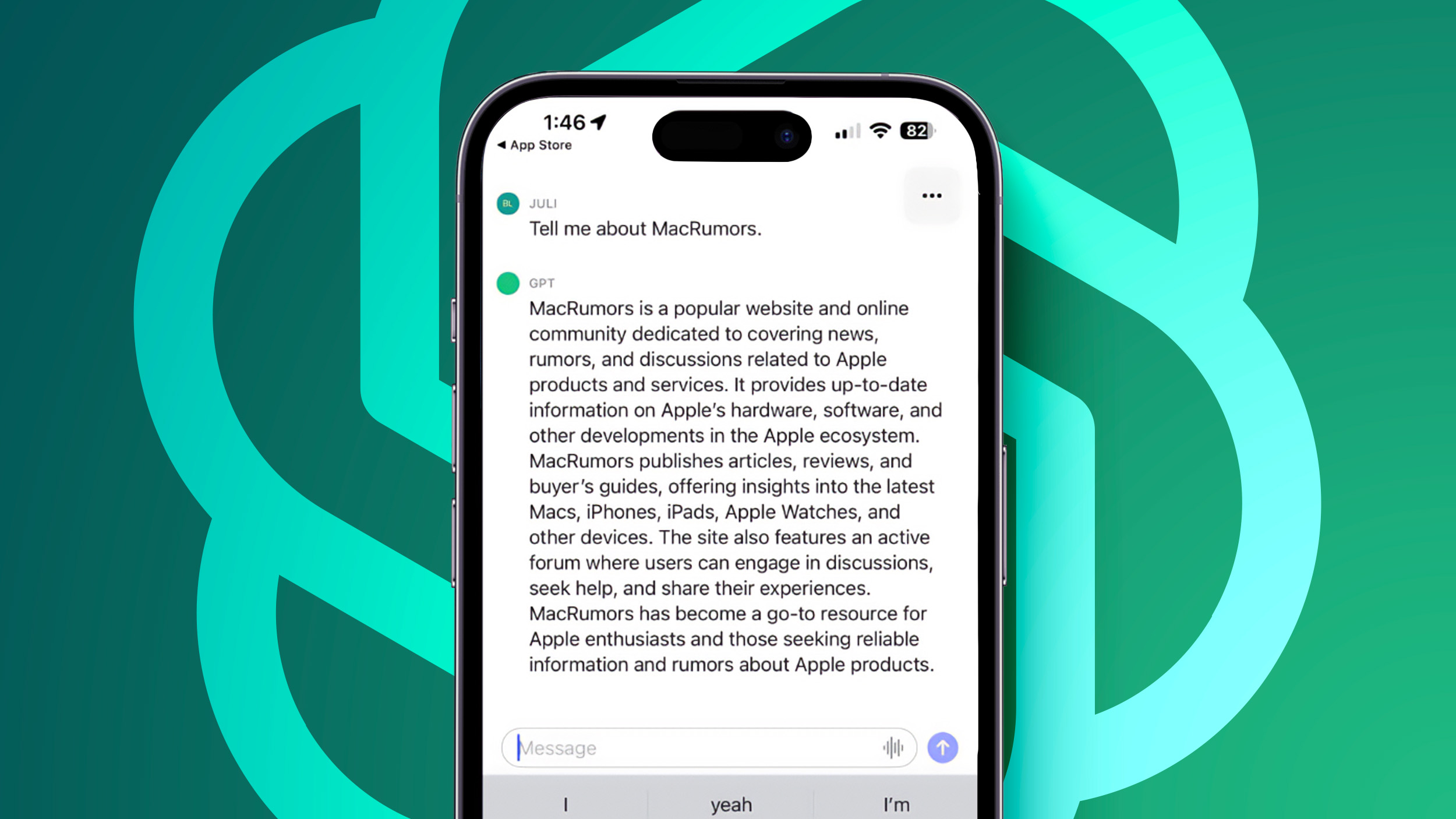

Apple is integrating AI capabilities into existing products and services, including writing tools, Siri improvements, and image generation features, so it can't be said to be competing directly in the LLM space. However, Apple has agreed a partnership with OpenAI that allows Siri to optionally hand off more open-ended queries to ChatGPT. Apple has also reportedly held discussions with other LLM companies about similar outsourcing partnerships.

It's possible that the challenges faced by major AI companies pursuing breakthrough general-purpose AI models could ultimately validate Apple's more conservative strategy of developing specific AI features that enhance the user experience. In that sense, its privacy-first policy may not be the straitjacket it first seemed. Apple plans to expand Apple Intelligence features next month with the release of iOS 18.2 and then via further updates through 2025.

Article Link: AI Companies Reportedly Struggling to Improve Latest Models

AI was ALWAYS going to be something that works best when it's made to do one specific task and be good at it, and yet tech bros kept ignoring that because somehow it would not excite investors enough. AGI really only exists in sci-fi for a reason.

That’s an oversimplification. Models like o1 are not just ‘pattern recognition’, and can genuinely produce something akin to reasoning. Sure, it may be limited (for now) and it doesn’t always work as expected, but it’s definitely not just ‘pattern recognition’. Unless, of course, one defines ‘pattern recognition’ so broadly that humans and their brains would also likely fall under the definition.

Yup. I did some work with pattern recognition in the '70s. Fascinating stuff and not new; the only thing new is the availability of, and the ability to take in, large data sets.

I always verify, but it’s quicker to ask ChatGPT and verify than to manually look up the information in the first place.

The problem is that it doesn’t replace people and people rely on it as it’s the authenticated source, as they do Wikipedia now. I think it’s good in the long term as long as people realize it’s just a tool. I constantly joke with my daughter who I say should write “Thanks Grammarly” on her masters graduation cap.

Meanwhile tech companies continue struggling to find a reason for consumers to give a darn about AI.

"Glorified"?

Not quite sure what they’re expecting. It’s just glorified pattern-recognition software, and there are only so many ways to dress it up as ‘the next big thing’.

Well, yes. Rightly so. We built machines that pass the Turing test; that's undoubtedly a historic result. It definitely is the next big thing, like it or not.

Even getting practical with an example, about 90% of the daily needs I solved with Google a couple years ago are now handled much better by ChatGPT and I'm sure many people who haven't switched are just too lazy to learn. Potentially replacing Google is... huge.

Now, is AI still inflated? Yes, but I think it's kind of childish to downplay its true importance, which goes way beyond the thick smoke of catastrophism and scams.

In many ways, it looks like other fads (VR, 3D printing, drones, etc.). But so did the Internet, which turned out to be one of the biggest revolutions in the history of humanity. Not to say that AI is as big, but it surely has some substance behind the hype. Think social networks; that's about the amount of impact I believe it'll have. Not the Internet, not drones.

And calling it "just pattern-recognition", considering that both roaches and humans have very different pattern-recognition capabilities, means almost nothing. It's just like saying "oh, a hurricane is nothing but air that moves fast" while it takes your house away.

Plus, nobody is saying AI is bad or it can't go further. We just won't see the (arguably incredible) big leaps we've seen in recent years, at least for a while, and you're twisting it into "it's not that good". Some people, especially those who haven't followed the small steps, think that it came out of the blue in a couple years, followed the growth curve and believed it would solve all of our questions in a couple more years. That's not gonna happen, this is what these opinions mean.

Now, if you still think AI is not great or important because it reached a plateau fairly quickly and will start getting incremental updates, consider this: the price of the best models we have now, and the time it takes to double-check answers, will soon go down anyway. Adoption is still limited; we'll have to wait and see.

It may sound cool to dismiss AI, and there definitely are some glorifying opinions that deserve to be dismissed, but I think you guys should stay objective about its achievements and potential.

Yeah, there is a lot of hype around, and Apple's own research proved how fragile LLMs are at calculations, as MacRumors reported a week or so ago. Plus, some other research showed diminishing benefits from more power/parameters/whatever thrown at the problem.

Considering the wildly unrealistic expectations (AGI) many people have for LLMs, it's inevitable that the bubble will have to burst at some point.

And I would say the intentions of many players are not to benefit humanity but the opposite, so it's good if their plans get hampered.

I love this damn idea. We expect Data but we wind up with an expendable red shirt 😂

They've scraped up all the real, human created, content and are hitting a wall?

This is why I laugh when people say Apple is late and missed the boat. The AI boat is still in dry dock.