
Apple is facing calls to remove its AI-powered notification summaries feature after it generated false headlines about a high-profile murder case, drawing criticism from a major journalism organization.

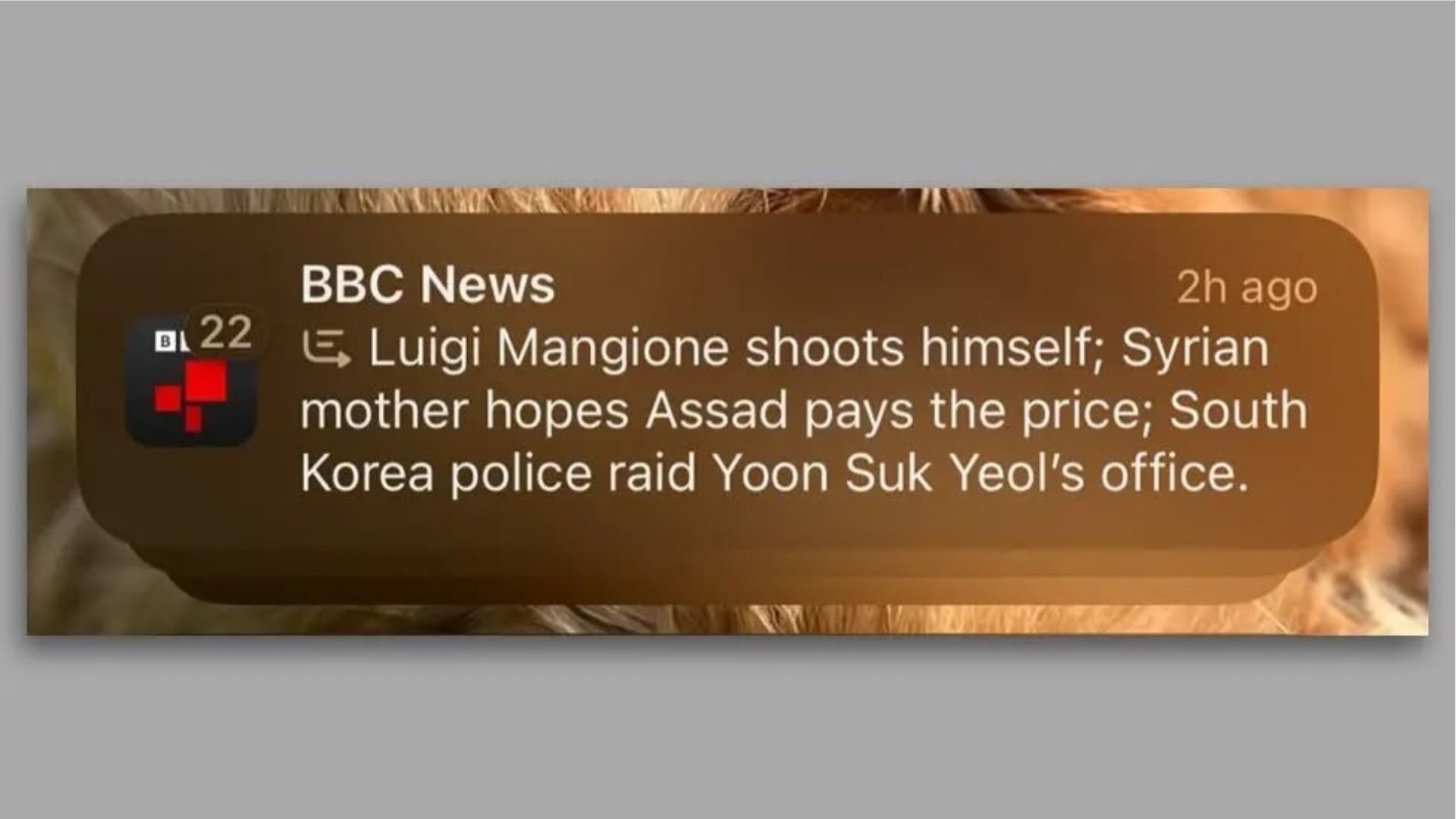

Updated to iOS 18.2? Then you may have received this notification (image credit: BBC News)

Reporters Without Borders (RSF) has urged Apple to disable the Apple Intelligence notification summaries feature, which rolled out globally last week as part of the iOS 18.2 software update. The request comes after the feature generated a misleading headline suggesting that murder suspect Luigi Mangione had shot himself, incorrectly attributing the false information to BBC News.

Mangione in fact remains in custody under maximum security at Huntingdon State Correctional Institution in Huntingdon County, Pennsylvania, after being charged with first-degree murder in the killing of health insurance CEO Brian Thompson in New York.

The BBC has confirmed that it filed a complaint with Apple about the headline. RSF has since argued that summaries of this kind prove that "generative AI services are still too immature to produce reliable information for the public."

Vincent Berthier, head of RSF's technology and journalism desk, said that "AIs are probability machines, and facts can't be decided by a roll of the dice." He called the automated production of false information "a danger to the public's right to reliable information."

This isn't an isolated incident, either.

The New York Times reportedly experienced a similar issue when Apple Intelligence incorrectly summarized an article about Israeli Prime Minister Benjamin Netanyahu, creating a notification claiming he had been arrested when the original article discussed an arrest warrant from the International Criminal Court.

Apple's AI feature aims to reduce notification overload by condensing alerts into brief summaries, and is currently available on iPhone 15 Pro, iPhone 16 models, and select iPads and Macs running the latest operating system versions. The summarization feature is enabled by default, but users can manually disable it through their device settings.

Apple has not yet commented on the controversy or indicated whether it plans to modify or remove the feature.

(Via BBC News.)

Article Link: Apple Faces Criticism Over AI-Generated News Headline Summaries