I'm sure p0rnhub would love it if people were that addicted to 'normal' porn; they would make billions. Your facts seem highly distorted. Links to this rabid addiction to any porn at all?

As I said in another comment, people who are into ANY kind of porn normally are addicted to it. Addicts by definition have little or no self-control. Read pretty much any news story about someone caught with child porn, and it will rarely say, "Police searched his/her home and found 10 images of child porn on the computer." It's normally at MINIMUM in the hundreds, and very often thousands. You don't have to associate with these people to know this.

Apple Outlines Security and Privacy of CSAM Detection System in New Document

- Thread starter MacRumors

Does this constitute a reasonable search? What is the probable cause?

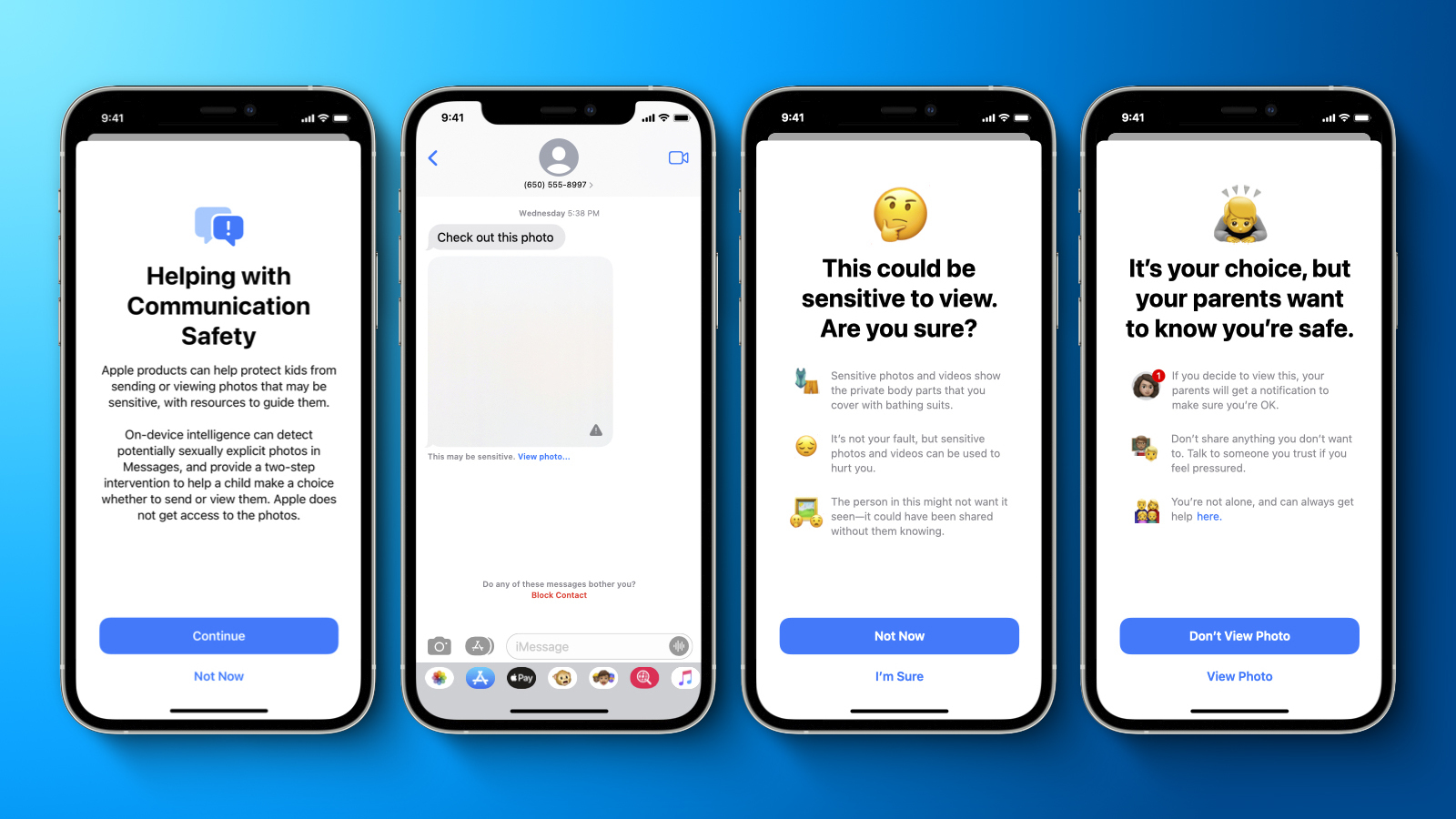

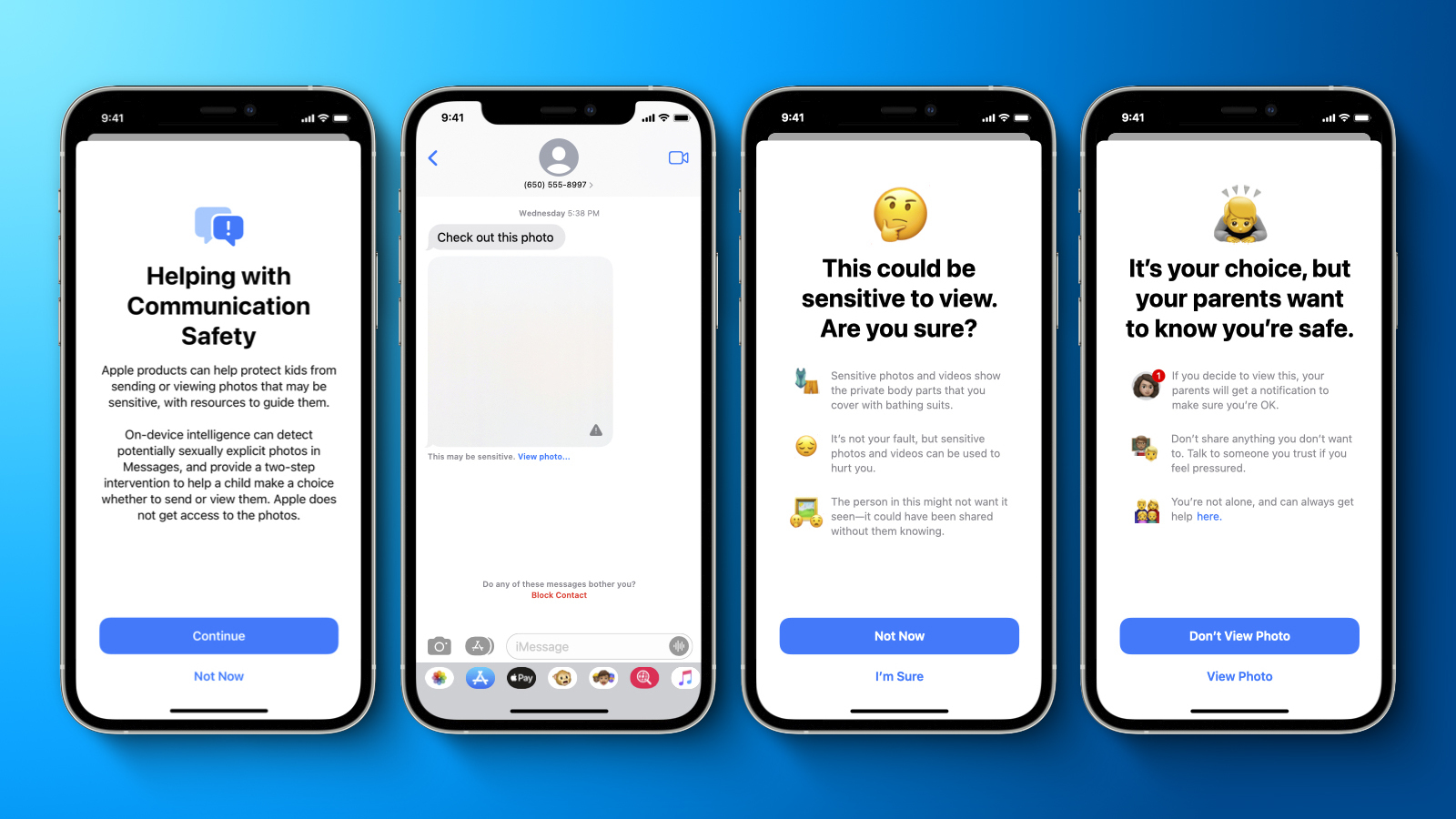

Apple today shared a document that provides a more detailed overview of the child safety features that it first announced last week, including design principles, security and privacy requirements, and threat model considerations.

Apple's plan to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos has been particularly controversial and has prompted concerns from some security researchers, the non-profit Electronic Frontier Foundation, and others about the system potentially being abused by governments as a form of mass surveillance.

The document aims to address these concerns and reiterates some details that surfaced earlier in an interview with Apple's software engineering chief Craig Federighi, including that Apple expects to set an initial match threshold of 30 known CSAM images before an iCloud account is flagged for manual review by the company.
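To make the threshold idea concrete, here is a minimal sketch in Python. It only illustrates the counting logic described above; the function name, the use of plain string hashes, and the choice to flag at exactly 30 matches (rather than above 30) are assumptions for illustration, and the real system is described by Apple as cryptographic and far more involved than a simple counter.

```python
# Hypothetical illustration only: none of these names come from Apple's document.
MATCH_THRESHOLD = 30  # initial threshold stated in the article


def should_flag_for_review(photo_hashes, known_hashes, threshold=MATCH_THRESHOLD):
    """Return True once the number of photos matching known hashes reaches the threshold."""
    known = set(known_hashes)
    # Count each uploaded photo whose hash appears in the known set.
    matches = sum(1 for h in photo_hashes if h in known)
    # Assumption: the account is flagged at exactly `threshold` matches or more.
    return matches >= threshold
```

Under these assumptions, an account with 29 matching photos is not flagged, while one with 30 is.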

Apple also said that the on-device database of known CSAM images contains only entries that were independently submitted by two or more child safety organizations operating in separate sovereign jurisdictions and not under the control of the same government.

Apple added that it will publish a support document on its website containing a root hash of the encrypted CSAM hash database included with each version of every Apple operating system that supports the feature. Additionally, Apple said users will be able to inspect the root hash of the encrypted database present on their device, and compare it to the expected root hash in the support document. No timeframe was provided for this.
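The two safeguards described here can be sketched roughly as follows. This is not Apple's implementation: the function names are hypothetical, only two organizations are modeled, and a plain SHA-256 over the sorted entries stands in for the "root hash", whose actual construction the article does not describe.

```python
import hashlib


def build_shipped_database(org_a_hashes, org_b_hashes):
    """Keep only entries submitted by both organizations (a set intersection)."""
    return sorted(set(org_a_hashes) & set(org_b_hashes))


def root_hash(entries):
    """Stand-in root hash: SHA-256 over the entries, one per line (an assumption)."""
    digest = hashlib.sha256()
    for entry in entries:
        digest.update(entry.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def matches_published(entries, published_root_hash):
    """Compare the database on the device against the published root hash."""
    return root_hash(entries) == published_root_hash
```

In this sketch, an entry that only one organization submitted never reaches the shipped database, and any change to the database on a device produces a root hash that no longer matches the published one.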

In a memo obtained by Bloomberg's Mark Gurman, Apple said it will have an independent auditor review the system as well. The memo noted that Apple retail employees may be getting questions from customers about the child safety features and linked to a FAQ that Apple shared earlier this week as a resource the employees can use to address the questions and provide more clarity and transparency to customers.

Apple initially said the new child safety features would be coming to the iPhone, iPad, and Mac with software updates later this year, and the company said the features would be available in the U.S. only at launch. Despite facing criticism, Apple today said it has not made any changes to this timeframe for rolling out the features to users.

Article Link: Apple Outlines Security and Privacy of CSAM Detection System in New Document

Contravention of your Fourth Amendment? Reasonable searching and probable cause, or are we all treated as guilty from the outset? I don't see how Tim Cook will ever be able to face an audience and talk privacy again: handing iCloud data centers over to the Chinese and now thrashing through innocent users' phones to find the one-in-a-billion offenders. The hypocrisy is outstanding...

Weird, because I haven’t seen a single coherent explanation of why it’s a problem if your own device scans your photos for child porn, and only does so if you are trying to upload onto Apple’s servers, and only produces information to Apple if you have at least thirty child porn photos that you are trying to upload.

It does not matter how they try to justify an illegal search with anti-collusion techniques; the search is still illegal. In Europe, it constitutes a breach of GDPR, as explicit consent is required for the use of ANY personal data. The fine for such a breach can be as much as 20 million euros or 4% of global annual turnover, whichever is higher, and the Europeans are always looking for a way to get money out of Apple.

And the fact that they suggest they can be so specific via our hardware also means they could be as specific with any target they set, whether it was law breaking, politics, commercial interest, or anything else, as it bypasses Apple's own System Integrity Protection, and even Apple employees realise the potential for abuse.

I am deeply rooted in the AI/ML world, and their language suggests they have embedding layers in the network (see word2vec and word embeddings) in order to assess semantic and perceptual likeness. This is not simply face detection or object detection; they are looking for (and only looking for) images that nearly identically match.

So many posts ignore the actual issue. The issue is not the technicality of the CSAM implementation, and it is not about Apple's right to do what it wants on its own servers, for then customers' own hardware remains sacrosanct.

This is about SURVEILLANCE. Throughout history various excuses have been used to reduce privacy. Inevitably they turn out to be just that, excuses, and history gives more than enough examples where the outcome was subjugation and even extermination!

This for me is primarily about Apple using equipment WE OWN, using processing power we have paid for and electricity we pay for, to initiate what is equivalent to an iCloud pre-check, but which in any real sense is SURVEILLANCE.

There can be no logical reason why Apple do not choose to emulate others in having such checks on their own server and where users then decide whether to use iCloud or not, but instead Apple have gone an insidious route, far more damaging than those they have criticised such as Facebook et al.

The nature of Apple software and safety revolves around System Integrity Protection, but Apple has a free pass on that, which allows them to adapt or add any software they choose, and in this case that means surveillance if you strip out the emotive excuse for it, which I doubt will assist child safety anyway.

So all the assurances that this was designed ONLY for this are toady words, because THIS might have been DESIGNED only for this, but the problem is it's on YOUR DEVICES, not Apple's.

Once that software is embedded in operating systems it is not correct to suggest it cannot be modified to include any surveillance that Apple wanted to initiate, and I can't recall Apple ever asking 1 billion users before this farce.

Once there, any update also bypasses System Integrity Protection, so it's comparatively easy to modify the criteria in it, and if anyone believes it will not evolve, then it's worth perusing the history books!

In some cases existing users will not even be able to use their equipment for the purposes they bought it for, because with that software on it many organisations would not be able to fulfil their remit, either to customers or under government policy on security and privacy. Social services or agencies involved in child abuse work often now use iPads in the field, with photos and information that are, and should be, not just confidential but beyond question, and having an operating system capable of iCloud hash prechecks may make it untenable to use that equipment. iCloud hash checks via the servers would leave customers' machines secure and sacrosanct, as they should be, while still serving the intention of CSAM detection (if it isn't just an excuse to get this software in). As it is, no paedophile is likely to ever use iCloud for photos after Apple's backfoot attempts at explaining the surveillance, leaving innocent customers with embedded software on their own systems for no technical reason at all.

I don't want to hear or see about how targeted this software is, or how it's so anonymous, or how it is designed, or how state of the art it is... history is littered with those excuses being a precursor to something rather more than was originally suggested. The fact is I do not want my operating system on my machine to be subject to censorship by Apple. I can do that myself! I don't want to tell my customers or my government that I can't guarantee the integrity of the equipment I own.

Have the checks conducted on iCloud by iCloud, not forced onto us as part of the operating system, where Apple do not have to be mindful of System Integrity Protection, so there is no protection from what Apple could do to modify this software. That is why, I suggest, so many Apple employees have expressed their concern as well; they likely have far more insight into how it is constructed, and even how easy it is to modify, than the public statements about it reveal.

Just imagine social service, crime fighting agencies, government agencies, and contractors of them, working day in and day out to safeguard children, being subjected to software on their own devices, in many cases bought specifically because of Apple's public stance on privacy and security.

So all the arguments of technicality are obfuscation. The substantive issue is Apple expecting 1 billion users to download software onto their own hardware, software capable of much more modification. It is surveillance, and dressing it up in technicality or comments like 'why would anyone object to pornography etc.' is erroneous, which I suspect is why child safety was used. It's true that on some occasions Apple, like others, play a helpful role in fighting crime, but not in the manner they intend now.

Some will no doubt say you don't have to install the latest software, but you do, because, for example, those working on government issues, security issues, etc. would find their business insurance would not indemnify them if they had not loaded the latest software, often for the reason of security updates.

My equipment becomes redundant if Apple do what they suggest, as would that of many customers who use Apple hardware in multiple situations, and yet if it's iCloud based rather than on OUR hardware, that is not the case.

User hardware should be sacrosanct from this, especially when Apple made such a thing about protecting our privacy with such features as managing website data et al., which Facebook and others kicked off about, and where Apple's response looks decidedly crap now.

I still feel not well enough informed about what they want to do.

-How big is their search database?

-Can I legally get into hot water for having their -bad to terrible, coded content unknown to me- search data on my device?

-How much data traffic does all this generate? How much power does it need?

-How about my phone battery being emptied by their search algorithm while I need my phone with priority to present my boarding pass or pay something or similar?

-Does Apple intend to do anything else on my device in their interest without my knowledge?

It's funny really: despite listening to a week of podcasts by many, many different people on this very topic, not one person has ever tackled the question of whether this will actually stop children being used to take sick pictures by those who have a demand for this.

After all, that's the whole point of any new rule or law isn't it?

Will it actually help the whole point of it?

Honestly I can't see what difference this is going to make.

If I wanted to collect images, and distribute them, then they would be encrypted anyway, so impossible to scan.

No way I'd send plain-format illegal images to "customers".

Is catching the odd 'member of the public' who has, let's call them, "issues" and has some already-known sick photos going to protect children today from being used/abused in this manner?

It really feels like something that LOOKS on the face of it to be the answer to tackling this crime in a BIG way, but the honest answer is that it's not going to make a blind bit of difference whatsoever, and is borderline pointless/meaningless.

Here are some recent numbers:

"Facebook reported almost 60 million photos and videos, the Times report states, based on some 15.9 million reports. Facebook tells The Verge that not all of that content is considered “violating” and that only about 29.2 million met that criteria.

Google reported 3.5 million total videos and photos in about 449,000 reports, and Imgur reported 260,000 photos and videos based on about 74,000 reports."

Yes, those numbers demonstrate that Apple are completely wrong in attempting to put such software on OUR hardware. Not one of the organisations you mention incorporates these checks by embedding software in users' own hardware.

Many of the reports behind the stats you quote come from members of the public, including Apple users, who volunteer information if they see any suspicious situations, and they are to be commended for doing so.

That source is likely to be REDUCED if Apple insist on having our hardware involved, as the likelihood of culprits then surfacing is remote, making the whole exercise a sham.

I urge Apple users to always assist wherever they can, as agencies of all calibres need that assistance, but what Apple are doing in the name of child safety is wrong in my opinion, as they have the ability to do this via iCloud but are choosing not to, and that leaves a lot of doubt and suspicion.

Even the organisation whose database Apple intend to use is a very worthy organisation, albeit one whose remit is not only child abuse but also child abduction and missing children, and I suspect many parents are grateful to it. But that is not what this situation is about. It's about surveillance. It's about compromising equipment you and I bought from Apple in good faith, where their public attitude on surveillance and privacy gave assurance. Those assurances will have been ripped to shreds if Apple persists in expecting 1 billion plus users to use their own equipment with operating systems that are engaged in surveillance. Whether it's anonymised or not, it still interrogates via your own hardware, using software that can easily be modified.

Sadly Apple engaged in stealth in the name of child safety, when there is absolutely no reason for our hardware to be compromised in this way; they can and should do such checks via their own servers.

The irony is that even the backfoot attempts to justify this unjustifiable situation have put more children at risk, because there is little doubt that anyone engaged in the activities this system is supposed to help prevent will have taken remedial action, making it useless. In the process it becomes much harder for agencies throughout the world to fight such crimes, as perpetrators either go to the dark web via Tor or similar, use a VPN or even cloned machines, and heavily encrypt anything they have, making the task of finding them much, much harder.

Apologies if some words appear out of context; it actually provides a good illustration of how checks are not always reliable, as anyone using predictive text may find out.

The problem to me is they put it on the device (I think the real reason is to save the $$$ that would be needed to compute those hashes for all uploaded images in their cloud).

So am I correct to assume that if you don’t want this then you cannot upgrade to iOS 15? Or are they just turning it on in iCloud? I currently do pay for family iCloud.

While they claim their spyware is only triggered when uploading to iCloud, it stops me upgrading to iOS 15 and buying an iPhone 13 Pro.

I DON'T ACCEPT SPYWARE ON MY DEVICES. PERIOD.

Don't forget the Mac.

Depending on further developments, Apple devices must be regarded as untrusted devices.

WhatsApp attacks Apple’s child safety ‘surveillance’ despite government acclaim

Global support for move to scan US users’ iPhone photos sets up privacy battle with rivals

Of course many politicians and central governments/the EU are said to applaud it. It gives more opportunity to extend control, but those on the front line within these organisations tell a different story.

We must remember that the EU until recently had someone at the helm who boasted about it and, believe it or not, governments lie!

Some memorable quotes from such people which some may suggest applies to this situation:

"We decide on something, leave it lying around, and wait and see what happens. If no one kicks up a fuss, because most people don't understand what has been decided, we continue step by step until there is no turning back"

"When the going gets tough you have to lie"

Providing a hash for the CSAM hash database is something that I think was absolutely necessary, and I am happy that Apple has committed to providing the verification. This will at least allow people to know the source of information that the devices will be scanning against.

I am really conflicted about this. Personally, I don't like the idea at all. I know that all of the cloud providers perform scanning, but I have not found a clear cut law that requires it.

It is not the implementation that is the problem. If there is a legal requirement for Apple to perform the scans, then I really have to applaud Apple for doing it the right way with regard to their users' privacy. If, however, there is no legal requirement, I can't say that I stand behind their decision, because ultimately this erodes privacy and trust. It is definitely a step in the wrong direction. For a company that touts privacy, there should be lines that will not be crossed for any reason, and I feel this is one of them.

MacWorld had a good article about this situation, and I think it sums up my feelings exactly:

Only your device sees your data. Which is great, because our devices are sacred and they belong to us.

Except… that there’s now going to be an algorithm running on our devices that’s designed to observe our data, and if it finds something that it doesn’t like, it will then connect to the internet and report that data back to Apple. While today it has been purpose-built for CSAM, and it can be deactivated simply by shutting off iCloud Photo Library syncing, it still feels like a line has been crossed. Our devices won’t just be working for us, but will also be watching us for signs of illegal activity and alerting the authorities.

That is how I feel in a nutshell.

If Apple is legally required to do this, then good on them for doing it in the most private way they can, and our outrage should be directed at our legal representatives who consistently work to erode our privacy. If Apple is not legally required, then I feel the outrage about this situation is properly placed.

Unfortunately inaccessible. Paywalled

WhatsApp attacks Apple’s child safety ‘surveillance’ despite government acclaim

Global support for move to scan US users’ iPhone photos sets up privacy battle with rivals (www.ft.com)

What I find interesting is that people are finally outraged about privacy.

I worked in High-Tech for nearly 20 years. In the late 1990s, during a company meeting, one manager said, "Someday, people will be walking by a pizza place and they will get a notification on their cell phone from that store for a discount."

Everyone else at that meeting was horrified that they would be tracked like that. In 2021 many would be ticked off if they didn't get a notification from that pizza place.

Today, people use Apple's Siri, Amazon Alexa, Google Assistant, etc., all of which send back every statement a person makes within hearing distance to the company server, and people think nothing of it.

Companies got this way because people allowed it to happen.

I've used and owned Apple products since 1990 or so. For my computer I run Linux and have used that as my main operating system for 13 years. I currently own an Apple iPhone SE (original) that I had been planning to upgrade to an iPhone 13 of larger size. I also own an iPad (2017).

However, after what Apple has announced recently, I may hang onto my cell phone for a while longer to see what happens with Apple. I might move to an Android phone and de-google it. When I replace my iPad, I may well go to a Microsoft Surface and install Pop!_OS Linux on it.

On a sarcastic note, with the direction Apple is headed, maybe I should buy an iPhone 13, and run Red Star OS on my laptop. That way I can be spied on by everyone. I'll feel so safe that way... /s

What is your background and credentials (academic, work-related, research papers, etc) that qualify you to assess the methods and precision you question and cast doubt on up above?

Fauci and Brilliant (among others) are my "tangent" because, being an electrical engineer, I'm not qualified to assess precision and methods with respect to infectious disease research and control. Therefore I have to rely on the words and papers of epidemiologists/virologists who have a demonstrated and verifiable track record of positive results over decades.

So please tell me about yours.

Cool. You trust virologists and epidemiologists, and you clearly want “experts” to provide you with the best information.

I gave you a very simple method to verify the quality of information from these experts you mentioned.

You are an EE, so I assume you can perform an electrical rating, yes? You would do this with the appropriate oscilloscope or analyzer, yes? What’s the max bandwidth of your best tool? How precise are your measurements outside that range? Is there any kind of rolloff in your electrical expertise past the limitations of your observational abilities?

Would it be unprofessional for you to give an absolute measurement, based on guesses outside the range your tools can detect? At least without some qualification of uncertainty? Would it be ethical for you to “ballpark” it, and then make absolute declarations for a device’s output rating, beyond what you could know for certain? How do you measure its efficiency? With a percentage, yes? Why?

I’m not going to give you easy answers. It might take you a little time to skim around a few TEM and STEM articles and forums. But this way, the journey of discovery is all your own, bypassing any personality preconceptions, forum rhetorical rituals or any pesky partisan coconut boundaries, if applicable. You must know a few things if you’re an EE, so you can relatively easily drive yourself right upside this knowledge.

Your only breadcrumb: Why would expert virologists be writing papers to propose ideas and techniques for improving STEM and TEM viral results? Why would an expert ever want to upgrade from a “TEM Pro Max 12” to the “TEM Pro Max 13”? New form factor? Titanium case? Higher rez? What are they missing with the 12?

This year it’s children, next year will be right wing supporters!!!

Lots of difficult words in that explanation. I don’t think that tactic is going to work either.

People read “on device spying” and that’s that.

It baffles me that a company like Apple monumentally messed up their PR twice this summer: with AM Lossless and now with this.

They should have just kept quiet about the CSAM thing and added to their iCloud T&C that they would scan for CSAM content. Nobody would have cared.

There is no way that this represents 'the most private way' when it entails having a billion plus customers having the software for it embedded in their operating systems on THEIR devices, so there is the potential its going to be used and modified, as the costs to Apple must be greater having a billion plus users downloading software, whereas they only need to put the equivalence on their servers ONCE.Providing a hash for the CSAM hash database is something that I think was absolutely necessary, and I am happy that Apple has committed to providing the verification. This will at least allow people to know the source of information that the devices will be scanning against.

I am really conflicted about this. Personally, I don't like the idea at all. I know that all of the cloud providers perform scanning, but I have not found a clear cut law that requires it.

It is not the implementation that is the problem. If there is a legal requirement for Apple to perform the scans, then I really have to applaud Apple for doing it the right way with regard to their users' privacy. If, however, there is no legal requirement, I can't say that I stand behind their decision, because ultimately this erodes privacy and trust. It is definitely a step in the wrong direction. For a company that touts privacy, there should be lines that will not be crossed for any reason, and I feel this is one of them.

MacWorld had a good article about this situation, and I think it sums up my feelings exactly:

That is how I feel in a nutshell.

If Apple is legally required to do this, then good on them for doing it in the most private way they can, and our outrage should be directed at our legal representatives who consistently work to erode our privacy. If Apple is not legally required, then I feel the outrage about this situation is properly placed.

It also flies in the face of Apple's eco credentials: think of the power drain of a billion-plus downloads, as opposed to loading the equivalent tools on their servers, where they wouldn't have to amend complete operating systems to do it!

Neither you nor I have any idea what that embedded software actually does, but we do know it can be easily modified, as it bypasses System Integrity Protection, giving carte blanche for modifications.

By all means have this check system on iCloud; it's Apple's equipment after all. But it should not be foisted onto customers' own devices, where there is nothing to stop modification or even access to the unique device identifiers.

Apple have admitted it was a bit of a botch, but are still putting it down to user misunderstanding. The problem is that users understand very well what it means when Apple put that software on OUR machines rather than theirs and utilise our processing, our devices, our battery life, etc. I also do not believe it will assist in the fight against child abuse or child pornography, as they've telegraphed it to these people. Every time Apple have had to re-explain, or indeed try to justify the unjustifiable, they've given these abusers more and more detail about exactly what they need to do to go underground. That makes the whole thing a farce, or an excuse to just install what is surveillance in the name of child safety. It's irrelevant whether it's a hash, as it can be modified, hence Apple's own employees being concerned about it.

The idea that it safeguards our privacy to have this software on EVERY device, rather than having the check done on Apple's own servers, is nonsense. No wonder WhatsApp, Facebook and others cannot believe the hypocrisy of Apple in embedding these 'tools' in over a billion devices. They've received much criticism from Apple, yet even they keep the system on their servers, as it's a positively dangerous step to have such software on users' hardware.

If it was on the server, then there would not be the facility to check system information, unique device identifier etc., but installed on every device that and much more is possible and in my opinion will follow, as if the justification for this is child safety, who knows what the next justification sought will be.

Apple can do what they want to achieve but there is no reason they can't do that like all the other companies do via their servers, which is not as intrusive and does not have the potential to be modified on a user's own equipment, albeit where I do not believe Apple will achieve what they want, and in my opinion it will be a hindrance having frightened these people off, making tracking by the appropriate authorities even harder.

Even now Apple are plying their replies to this botched up and dangerous idea suggesting it is misunderstanding, when the problem is for Apple that people do understand which is why there has been so much media criticism let alone bb criticism of something that need not be put into our operating system and the fact they intend to do that raises significant justified concern.

Apple regrets confusion over 'iPhone scanning'

The iPhone-maker will introduce tools that can detect child sex abuse images uploaded to iCloud.

you do not know that.

CSAM doesn't recognize objects or put photos into categories. That's not how the technology works.

If someone took an illegal picture of a naked child and then raised their arms to take another picture at a slightly different angle, and the former picture made it into the CSAM database but the higher-angle one didn't, CSAM detection would only be able to match against the first image.

If you cropped the first image, rotated it, etc., CSAM detection would be able to detect that too, but it cannot detect the second image. That's what fingerprinting does.
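To make the fingerprinting point concrete, here is a rough sketch of the general idea in Python. It is not Apple's NeuralHash; it swaps in a toy "average hash" over made-up 4x4 grayscale grids, and the names `average_hash`, `hamming`, `matches` and the `max_distance` tolerance are purely illustrative assumptions. This toy version tolerates a brightness change but would not survive cropping or rotation, which real perceptual hashes are designed to handle better.

```python
# Toy perceptual fingerprint (an "average hash"). NOT NeuralHash; illustration only.

def average_hash(pixels):
    """Bit string with '1' wherever a pixel is brighter than the image's mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)

def hamming(a, b):
    """Number of bit positions where two equal-length fingerprints differ."""
    return sum(x != y for x, y in zip(a, b))

def matches(candidate, known_hashes, max_distance=2):
    """True if the candidate's fingerprint is close to any known fingerprint."""
    h = average_hash(candidate)
    return any(hamming(h, k) <= max_distance for k in known_hashes)

# Hypothetical "known" image: dark on the left, bright on the right.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [10, 20, 200, 210],
    [15, 25, 205, 215],
]

# Same picture, slightly brightened (an edit of the known image).
edited = [[min(255, p + 30) for p in row] for row in original]

# A different picture entirely (think: the second shot from another angle).
different = [
    [200, 210, 10, 20],
    [15, 25, 205, 215],
    [205, 215, 15, 25],
    [10, 20, 200, 210],
]

known = {average_hash(original)}

print(matches(edited, known))     # the edited copy still matches
print(matches(different, known))  # the different photo does not
```

The point is only that matching is done against fingerprints of specific known images, so an edited copy can still hit while a genuinely different photo does not, regardless of what it depicts.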

Well said!

Slippery slope when companies embrace enforcing morality. Someone should remind Cook it was not that long ago that homosexuality was against the law, and it is probably still on the books in some states. Will Apple hash those photos if those states decide to enforce those laws? Perhaps a good question to ask Mr Cook. Somehow this whole scheme seems unconstitutional, except it probably just comes down to terms of service: if you agree to use Apple products you are giving permission, the same way people are giving FB permission to play big brother. Leaving FB was hard; leaving my whole Apple ecosystem will be harder.

There is no way Apple is going to truly end to end encrypt iCloud, especially after this announcement.

probably because apple knows people are excited for the os update and new phones and products and this would play against their dislike of the new csam scanning routine

also, i bet they are getting ready to roll out e2e encryption and the csam scanning is designed to mollify law enforcement

before on device scanning apple could tell the government they could not comply with court orders. (because they didn't have the software to do it)

they have said explicitly that they are going to fight it, if you think they are lying or are going to be forced to do this by government without notifying users then all bets are off with regard to your relationship with apple and it's time to go elsewhere

this is definitely an issue of trust between the company and its customers, they/we need each other since there is no real alternative to apple, forget google as they are likely to do the same thing soon not to mention all the other ways they surveil their customers

you need to decide to make the leap of trust or go to one of the more privacy focussed os's and phones

personally i think it's better to just conclude that any real privacy when using technology in daily life is just over, it's finished and so i now act accordingly

After on device scanning what is apple going to tell governments?

Apple is not legally required to proactively scan iCloud Photos for CSAM. Apple is only legally required to file a report with NCMEC if it comes across CSAM on its servers.

If they don't want the images on their servers they should implement the scan on their servers and not add spyware to iOS 15.

People using iCloud (I don't store any photos in iCloud and don't use iCloud Keychain) give up some of their privacy because they store their data on a system they don't own and because it's not end-to-end encrypted.

Apple just needs to get their fingers off of devices I own!

So, do you use iCloud backup?
