Apple today

shared a document that provides a more detailed overview of the

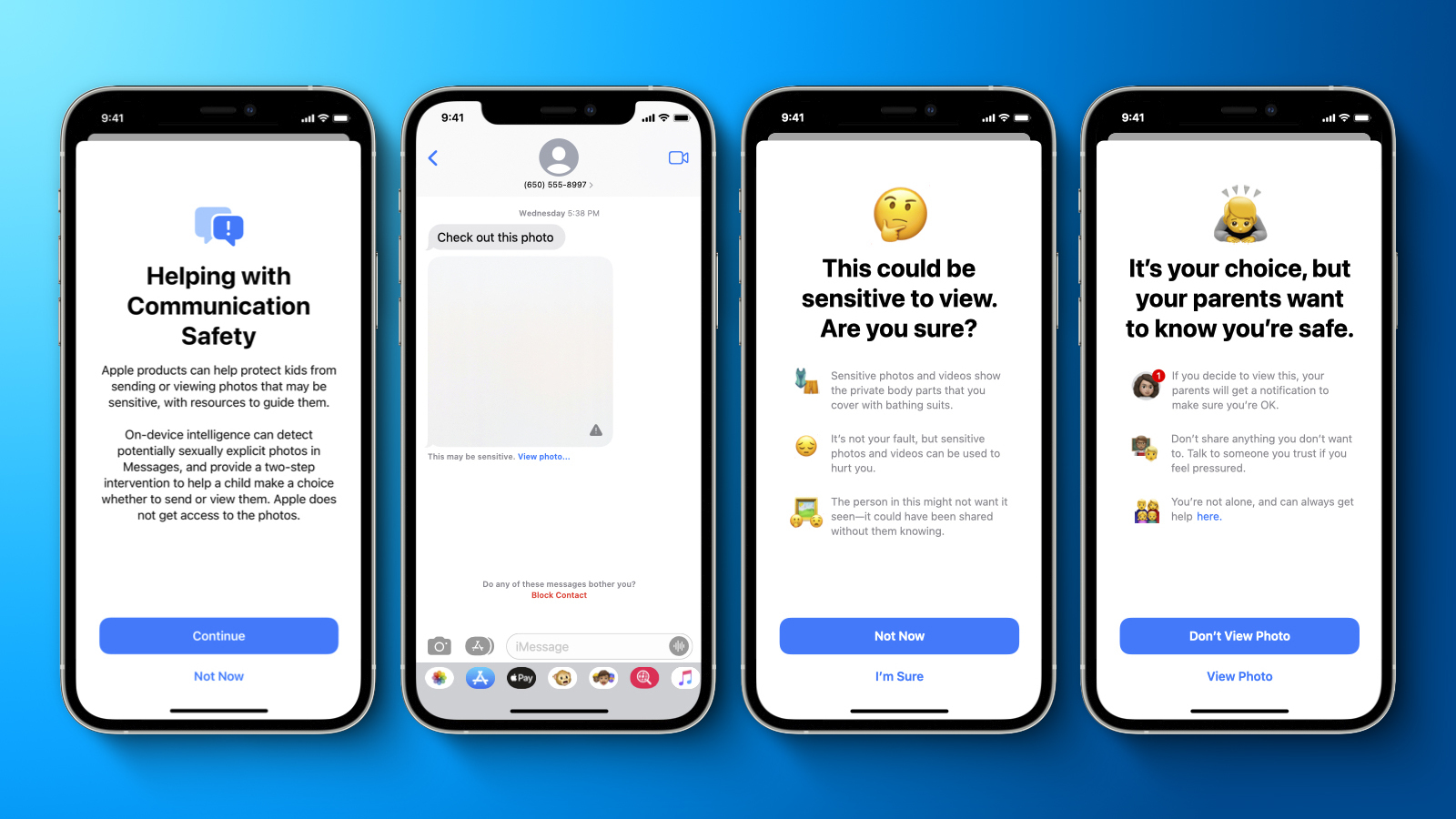

child safety features that it first announced last week, including design principles, security and privacy requirements, and threat model considerations.

Apple's plan to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos has been particularly controversial and has prompted concerns from some security researchers, the non-profit Electronic Frontier Foundation, and others about the system potentially being abused by governments as a form of mass surveillance.

The document aims to address these concerns and reiterates some details that surfaced earlier in an interview with Apple's software engineering chief Craig Federighi, including that Apple expects to set an initial match threshold of 30 known CSAM images before an iCloud account is flagged for manual review by the company.

Apple also said that the on-device database of known CSAM images contains only entries that were independently submitted by two or more child safety organizations operating in separate sovereign jurisdictions and not under the control of the same government.

Apple added that it will publish a support document on its website containing a root hash of the encrypted CSAM hash database included with each version of every Apple operating system that supports the feature. Additionally, Apple said users will be able to inspect the root hash of the encrypted database present on their device and compare it to the expected root hash in the support document. No timeframe was provided for this.
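To make the two safeguards described here more concrete, the following is a minimal Python sketch, not Apple's implementation. It assumes hypothetical inputs (organization names, jurisdiction labels, and placeholder hash strings), and it uses SHA-256 over the sorted entries as a stand-in for the root hash, since the article does not say how Apple computes it. The function names `eligible_entries`, `root_hash`, and `matches_published` are invented for illustration.

```python
import hashlib
from itertools import combinations


def eligible_entries(submissions):
    """Keep only hashes submitted by at least two different organizations
    that operate in different jurisdictions.

    `submissions` is an iterable of (organization, jurisdiction, image_hash)
    tuples. All three fields are hypothetical placeholders.
    """
    sources = {}
    for org, jurisdiction, image_hash in submissions:
        sources.setdefault(image_hash, set()).add((org, jurisdiction))

    eligible = set()
    for image_hash, pairs in sources.items():
        # Look for any two submitters with distinct names AND jurisdictions.
        for (org_a, jur_a), (org_b, jur_b) in combinations(pairs, 2):
            if org_a != org_b and jur_a != jur_b:
                eligible.add(image_hash)
                break
    return eligible


def root_hash(entries):
    """Stand-in root hash: SHA-256 over the sorted entries.

    This is an assumption for illustration; the real construction
    is not described in the article.
    """
    digest = hashlib.sha256()
    for entry in sorted(entries):
        digest.update(entry.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def matches_published(local_entries, published_root_hash):
    """Compare the locally computed root hash with the published value."""
    return root_hash(local_entries) == published_root_hash


# Hypothetical example data.
submissions = [
    ("OrgA", "US", "h1"),
    ("OrgB", "UK", "h1"),  # two orgs, two jurisdictions: kept
    ("OrgA", "US", "h2"),  # single submitter: dropped
    ("OrgA", "US", "h3"),
    ("OrgC", "US", "h3"),  # two orgs, same jurisdiction: dropped
]
database = eligible_entries(submissions)
published = root_hash(database)
```

In this sketch, a user-side check amounts to calling `matches_published` with the entries found on the device and the value from the support document; any added or removed entry changes the computed hash and the comparison fails.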

In a memo obtained by Bloomberg's Mark Gurman, Apple said it will have an independent auditor review the system as well. The memo noted that Apple retail employees may be getting questions from customers about the child safety features and linked to a FAQ that Apple shared earlier this week as a resource the employees can use to address the questions and provide more clarity and transparency to customers.

Apple initially said the new child safety features would be coming to the iPhone, iPad, and Mac with software updates later this year, and the company said the features would be available in the U.S. only at launch. Despite facing criticism, Apple today said it has not made any changes to this timeframe for rolling out the features to users.

Article Link: Apple Outlines Security and Privacy of CSAM Detection System in New Document