That still doesn’t explain how a severely modified image can produce a match, per Apple’s claims. I can make a subject look 90 years old, or like the Hulk, in Photoshop. But that’s still a match? How? If a picture that differs that drastically is still a match, how can a photo of an adult in a bathtub be guaranteed not to get flagged?

They’re looking in a super smart way at particular parameters of little subdivisions of the picture. Nothing to do with similarity you could spot with the naked eye. “Differs drastically” is your human idea of differing drastically, not these AIs’ idea.

Are you saying it’s impossible for us to understand since we are humans? Then why am I getting all this hate when ALL I am asking for is some damn help understanding?


Apple Publishes FAQ to Address Concerns About CSAM Detection and Messages Scanning

- Thread starter MacRumors


Apple already scans photos for content. This is how they identify objects and people in your pictures. Regardless of the merit of and privacy concerns for this new policy and feature, if you are worried about Apple analyzing your photos, you are too late.

Okay, so just no discussion at all? I can’t learn, I should just stop? Right? Great attitude on these forums lately. Please explain it to me. I read the white paper, watched videos, listened to podcasts. What else do you expect me to do? I’m asking people more knowledgeable for help understanding. But I guess asking for help is not good in today’s world.

Please don't stop investigating and learning more; seek information on cryptography, convolutional neural networks, image recognition, etc.

Don't stop when the math and formalism seem insurmountable; instead, trace each subject to the first level you understand and then work your way upwards again!

Ask questions on this forum if you need to. At least 2-3 regulars here, that I know of, have sufficient mastery of computer science and math to help you move forward.

Just my two €-cents 🥳.

They’re looking in a super smart way at particular parameters of little subdivisions of the picture. Nothing to do with similarity you could spot with the naked eye. “Differs drastically” is your human idea of differing drastically, not these AIs’ idea.

And what are those super smart ways? I don’t want a black box here. Is there an algorithm somewhere? I want to verify my personal pictures won’t get falsely flagged. I don’t trust Apple’s claim on the false positive rate when they also claim any modification to a flagged image will also be flagged.

I’m a 15-year senior developer. Is there an API I can play around with, so that if I set something as a match and then hulkify the subject, I can see the error rate?

Please don't stop investigating and learning more; seek information on cryptography, convolutional neural networks, image recognition, etc.

Don't stop when the math and formalism seem insurmountable; instead, trace each subject to the first level you understand and then work your way upwards again!

Ask questions on this forum if you need to. At least 2-3 regulars here, that I know of, have sufficient mastery of computer science and math to help you move forward.

Just my two €-cents 🥳.

That is what I’m doing! I have stated I’m looking for answers. But all I get is hate and things like “please stop, you don’t know what you’re talking about.”

I’m trying to understand the discrepancy in my knowledge here! But I guess I just shouldn’t ask?

And this is how the 4th Amendment and the right to privacy will fall… so the scan being on-device is MOOT…

That's entirely subjective.

So was your "people wouldn't x, y, z..." based on your consideration of logic.

It has been clearly defined that people cannot expect the same level of privacy in public settings as inside their homes. Would you not consider a personal device as papers or effects with a reasonable expectation of privacy? If not, I guess there's no reason to secure it with passcodes, fingerprint sensors, face ID. Seems pretty reasonable and not that subjective to me.

I bet not even one person here will vote with their wallet. So much phony outrage.

Apple did a quick 180 with notifications regarding certain spyware that they can stop.

Apple already scans photos for content. This is how they identify objects and people in your pictures. Regardless of the merit of and privacy concerns for this new policy and feature, if you are worried about Apple analyzing your photos, you are too late.

And all of the analytical data stays on my device. And this data is to enrich my use of my phone, camera, and Photos app. And its purpose isn’t to spy on me. This is an apples-and-oranges comparison.

Simple question: if I take a photo of my son in the tub and you can see his thingy, will that image get 1. reviewed by an Apple employee, and/or 2. reported to the police?

Simple reply: why wouldn’t you edit that picture so his thingy isn’t showing? When my parents were still alive, I sent them pictures of her in the tub, as I’m on the left coast and they were back east. Anyway, before I sent them, I made sure nothing was showing. That’s not to say I deleted the picture; I just never put it online.

I have read the white papers. And watched videos. And listened to podcasts.

Apple’s claim here is that ANY MODIFICATION to the image will still flag it. But have you seen how much people can modify an image in Photoshop? They must be overstating this.

Hmm, based on what I have read, it only works for images that have been slightly edited. What extent this means, I have no idea, but it doesn’t seem like Apple is suggesting this works for all modifications.

Apple Introducing New Child Safety Features, Including Scanning Users' Photo Libraries for Known Sexual Abuse Material

Apple today previewed new child safety features that will be coming to its platforms with software updates later this year. The company said the features will be available in the U.S. only at launch and will be expanded to other regions over time. Communication Safety First, the Messages...

For example, an image that has been slightly cropped, resized or converted from color to black and white is treated identical to its original, and has the same hash
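Neither Apple's NeuralHash network nor its parameters are reproduced here, so the following is not Apple's algorithm. It is a minimal sketch of a much older perceptual hash, the classic "average hash", chosen only because it fits in a few lines and shows the idea behind the quote above: the hash is built from coarse block averages, so resizing or a uniform brightness change leaves it untouched, while painting over a large part of the picture flips many bits. The toy gradient image, the 8×8 grid, and all function names are illustrative assumptions.

```python
from typing import List

# Hypothetical toy representation: a grayscale image as rows of intensities.
Image = List[List[float]]


def downscale(img: Image, size: int) -> Image:
    """Box-filter an image down to size x size by averaging equal blocks.

    Assumes the image height and width are exact multiples of `size`.
    """
    bh, bw = len(img) // size, len(img[0]) // size
    out: Image = []
    for r in range(size):
        row = []
        for c in range(size):
            block = [img[y][x]
                     for y in range(r * bh, (r + 1) * bh)
                     for x in range(c * bw, (c + 1) * bw)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def average_hash(img: Image, size: int = 8) -> int:
    """64-bit average hash: one bit per cell, set if the cell is brighter than the mean."""
    cells = [p for row in downscale(img, size) for p in row]
    mean = sum(cells) / len(cells)
    bits = 0
    for p in cells:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def gradient_image(n: int = 32) -> Image:
    """Toy 'photo': a left-to-right brightness gradient."""
    return [[float(x * 8) for x in range(n)] for _ in range(n)]


if __name__ == "__main__":
    original = gradient_image()
    resized = downscale(original, 16)                        # half-size copy
    brighter = [[p + 20 for p in row] for row in original]   # uniform brightness shift
    edited = [[0.0 if x >= 16 else p for x, p in enumerate(row)]
              for row in original]                           # right half painted black
    h = average_hash(original)
    for name, img in [("resized", resized), ("brighter", brighter), ("edited", edited)]:
        print(name, hamming(h, average_hash(img)))
```

Running it prints a Hamming distance of 0 for the resized and brightened copies and 56 (out of 64 bits) for the half-repainted one. Where a given edit stops matching depends entirely on how the hash is designed, which is why a toy like this cannot say how NeuralHash treats a "hulkified" photo.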

And what are those super smart ways? I don’t want a black box here. Is there an algorithm somewhere? I want to verify my personal pictures won’t get falsely flagged. I don’t trust Apple’s claim on the false positive rate when they also claim any modification to a flagged image will also be flagged.

I’m a 15-year developer. Is there an API I can play around with, so that if I set something as a match and then hulkify the subject, I can see the error rate?

It doesn’t necessarily work that way; otherwise, you’d be able to crack it. Apple is said to have given at least three scholars in the field some access to the system.

Just know that your counter-examples about what, in your opinion, should and shouldn’t match were very dumb for anyone who has read (and understood) the gist of how something like PhotoDNA works; maybe your area of expertise as a developer is in a very distant field from this.

5. They will ask you to consent. They’re leaving the possibility to opt out. They’re doing this scan ONLY, EXCLUSIVELY, SOLELY on data you’re about to PUT ON THEIR FRICKIN’ SERVERS anyway (or better, it’s like a tree falling in a forest with no one listening until you upload them).

I think you might be incorrect about #5. Or maybe it's a semantic issue. The hash matching happens on your device whether you have iCloud Photos enabled or not. I've read everything, including some of the deeper technical articles, and seen nothing to show me otherwise.

Have you read this? It's an interesting take from someone who knows their stuff (you've probably already seen it): http://www.hackerfactor.com/blog/index.php?/archives/929-One-Bad-Apple.html

I agree with you, and others, completely that there is some serious FUD and misinformation being spread about this. However, I don't think you can be as quick to dismiss some of the rational concerns being brought up here, and I'd be curious to hear your take on this hypothetical scenario:

There is a rampant problem with theft in the world. Because of that, my homeowner's association has a new device that they're going to require all homeowners in our neighborhood to have in their homes. That device can scan every barcode that comes into the house and label it as legit, or match it up with barcodes in a stolen database housed elsewhere. As long as the stolen item stays in my home, the device stays quiet. But if the item leaves my house, the device immediately reaches out to local police to let them know it's out there. By the way, if you don't want to participate in this, simply never take any items out of your house and you'll be fine, the device will remain dormant and quiet.

While I realize that's not a perfect analogy, it's very similar in concept. I think the problem that I (and others trying to stay rational here) have with this change, is that they're stepping into my personal space, so to speak, to implement this change. To stick with that analogy, I don't believe I should be required to allow that device in my house in order to take things I own wherever I want to take them. Why not let the police do their job and track down the stolen devices, get a warrant to search my house, and then come find it/me?

I'm 100% fine if Apple decided to implement this exact same process with everything uploaded to iCloud. It's on their servers at that point, so they have every right to take steps to find that filth, and should be able to implement the exact same secure process. Yes, they would have the encryption keys, but they have them already, and we already trust them with that. So why do this massive encryption workaround just to do the scanning on my device?

Right you are. So what are you going to do about it? What can we do to not let it happen? And since it does seem to be happening, what then?

- Vote with your wallet.

- Influence – spread the word to colleagues, friends & family that Apple is now as bad if not worse than Microsoft, Google, and others in terms of data privacy (assuming this goes through).

- Use & support Linux.

- Use & support other distributed, non-centralized, open-source services & tools.

Would you not consider a personal device as papers or effects with a reasonable expectation of privacy?

If your device (not Apple) analyses an image using A.I. algorithms, converts the outcome to an encrypted ticket that is then compared to a database of indecent images, then where is the unreasonable/invasion of privacy?

Hmm, based on what I have read, it only works for images that have been slightly edited. What extent this means, I have no idea, but it doesn’t seem like Apple is suggesting this works for all modifications.

Apple Introducing New Child Safety Features, Including Scanning Users' Photo Libraries for Known Sexual Abuse Material

Apple today previewed new child safety features that will be coming to its platforms with software updates later this year. The company said the features will be available in the U.S. only at launch and will be expanded to other regions over time. Communication Safety First, the Messages...

So a heavily modified image in photoshop will NOT get flagged? Thank you. This is ALL I WANTED TO KNOW.

So I can take a flagged image, turn the subject into the Hulk, and it will NOT get flagged?

And what are those super smart ways? I don’t want a black box here. Is there an algorithm somewhere? I want to verify my personal pictures won’t get falsely flagged. I don’t trust Apple’s claim on the false positive rate when they also claim any modification to a flagged image will also be flagged.

I’m a 15-year developer. Is there an API I can play around with, so that if I set something as a match and then hulkify the subject, I can see the error rate?

At least for now, there isn't one from an API point of view. And we can't recreate or white-box reimplement the entire chain ourselves:

(1) We don't have the CSAM database used to train the network

(2) Apple hasn't released sufficiently detailed descriptions of their NeuralHash CNN specifications or hash implementation for a white-box re-coding.

They have, though, released very extensive details on the cryptographic approach (links on their webpage), including standard, formalistic proofs of the security; since you're a developer, that'll help.

Given (1) and (2), we only have half of the information needed. That said, if we had (2), we could train the network on one of the standard image libraries used for image-recognition benchmarking and be quite certain we had a good approximation of the model's real-life performance.
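Apple's CSAM Detection Technical Summary describes NeuralHash as two stages: a convolutional neural network that maps an image to an embedding vector, and hyperplane locality-sensitive hashing (LSH) that turns that vector into hash bits. The network is the part missing for a white-box re-implementation; the LSH stage is textbook and can be sketched as below. The random vectors stand in for CNN output, and the dimension, bit count, seeds, and function names are arbitrary assumptions, not Apple's values.

```python
import random
from typing import List

Vector = List[float]


def make_hyperplanes(dim: int, n_bits: int, seed: int = 0) -> List[Vector]:
    """Random hyperplane normals with Gaussian entries (arbitrary, illustrative)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def lsh_hash(embedding: Vector, planes: List[Vector]) -> int:
    """One bit per hyperplane: 1 if the embedding lies on its non-negative side."""
    bits = 0
    for normal in planes:
        dot = sum(w * v for w, v in zip(normal, embedding))
        bits = (bits << 1) | (1 if dot >= 0 else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    rng = random.Random(42)
    dim, n_bits = 128, 64  # arbitrary sizes, not NeuralHash's
    planes = make_hyperplanes(dim, n_bits)
    # Stand-ins for CNN embeddings; the real network is not modelled here.
    photo = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    edited = [v + rng.gauss(0.0, 0.05) for v in photo]  # small shift in embedding space
    unrelated = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    h = lsh_hash(photo, planes)
    print("edited vs photo:", hamming(h, lsh_hash(edited, planes)))
    print("unrelated vs photo:", hamming(h, lsh_hash(unrelated, planes)))
```

Each bit records which side of a random hyperplane the embedding falls on, so embeddings pointing in nearly the same direction share most bits while unrelated ones disagree on roughly half. Whether a Photoshopped image stays close in embedding space is decided by the CNN, which this sketch does not model.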

Really... when did the actions of a rogue employee scale up to the general business practices of Apple?

Odd you received a downvote for that! Besides, that “employee”, if I’m thinking of the same storyline, didn’t even work for Apple.

And what are those super smart ways? I don’t want a black box here. Is there an algorithm somewhere? I want to verify my personal pictures won’t get falsely flagged. I don’t trust Apple’s claim on the false positive rate when they also claim any modification to a flagged image will also be flagged.

I’m a 15-year senior developer. Is there an API I can play around with, so that if I set something as a match and then hulkify the subject, I can see the error rate?

Some of the questions you're asking are addressed in the Apple CSAM Detection Technical Document, which I've referenced a couple of times already:

It gets a little dense, but it's worth the read if you're interested. I got more than a little lost on the Apple PSI document:
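The PSI protocol itself is too involved for a forum post, but one ingredient Apple's technical summary names, threshold secret sharing, is easy to sketch. In Apple's description, each uploaded photo carries a "safety voucher", and the server can only decrypt voucher contents once an account exceeds a threshold number of matches. The toy below uses textbook Shamir secret sharing to show just that property: a key split into shares can be rebuilt from any `threshold` of them, while fewer shares give no information about it (in real use, with a cryptographic RNG). How shares map to vouchers is simplified, and `split_secret`, `reconstruct`, and `PRIME` are hypothetical names.

```python
import random
from typing import List, Tuple

# A Mersenne prime large enough for a toy secret; real systems use vetted parameters.
PRIME = 2 ** 127 - 1
Share = Tuple[int, int]


def split_secret(secret: int, threshold: int, n_shares: int, seed: int = 0) -> List[Share]:
    """Shamir sharing: evaluate a random degree-(threshold-1) polynomial at x = 1..n."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in [0, PRIME)")
    rng = random.Random(seed)  # NOT cryptographically secure; use `secrets` in practice
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, n_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, mod PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def reconstruct(shares: List[Share]) -> int:
    """Lagrange interpolation at x = 0 recovers the constant term (the secret)."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    key = 123456789  # stand-in for a voucher decryption key
    shares = split_secret(key, threshold=3, n_shares=10)
    print(reconstruct(shares[:3]) == key)   # any 3 shares suffice
    print(reconstruct(shares[5:8]) == key)
    print(reconstruct(shares[:2]) == key)   # 2 shares: an unrelated value
```

With `threshold=3`, any three shares rebuild the key, while two produce a number unrelated to it; this is the "one image will not flag your account" property in cryptographic form.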

Not necessarily. The original picture could be on someone’s pc with photoshop and the edited version is what “gets out there”. Then that version might get put in the database and another heavily modified version gets made.

You are correct… no discussion is needed… I, along with many others on here, have tried to explain it/dumb it down as much as possible, but you continue to give false and incorrect examples/possibilities despite claiming you read the white paper. Read it again.

I’ve done my best to explain to you why an image cannot be altered to match an image in the database, and I even gave you the odds of how unlikely it is to happen… nearly impossible, by the way… and even if an image was altered but found NOT to be a match or even a modified version of an image in the database, you will never know, as you technically did nothing wrong.

For your latest example… IT DOESN’T MATTER!! If the image is in the database and hashed (modified or not… and btw, they can easily detect if the image is modified from an original source), any version of that image (modified or not) will set off alarms via IDENTICAL hashes. This happening to one image alone will not flag your account… ONE IMAGE WILL NOT FLAG YOUR ACCOUNT.

Multiple images (again, not one) must have an identical match to the hashes of a database image (in part or in whole, modified or unmodified) for your account to be flagged and reviewed by Apple. If this happens, you either a) are in possession of child pornography and should be reported, or b) are one of the luckiest people in the world, since it is a one in one trillion chance that the images are innocent shots that happen to identically match the hashes associated with a child pornography image… again, ONE IN ONE TRILLION CHANCE!

Now, please respond with more examples of how photoshopped images can be flagged…
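"One in one trillion" is easy to misread as a per-image figure. Apple's announcement states the threshold is set so that there is less than a one in one trillion chance per year of incorrectly flagging a given account. Why a threshold helps can be shown with a binomial tail: if each photo independently false-matches with probability `p_false`, the chance of at least `threshold` false matches among `n_photos` photos shrinks dramatically as the threshold rises. Independence, the per-photo rate, and the threshold values below are simplifying, hypothetical assumptions, not Apple's published figures.

```python
import math


def prob_at_least(threshold: int, n_photos: int, p_false: float) -> float:
    """P(X >= threshold) for X ~ Binomial(n_photos, p_false).

    Assumes (hypothetically) that each photo false-matches independently with
    probability p_false. Terms are computed in log space so huge binomial
    coefficients never overflow a float.
    """
    if not 0.0 < p_false < 1.0:
        raise ValueError("p_false must be strictly between 0 and 1")
    if threshold <= 0:
        return 1.0
    if threshold > n_photos:
        return 0.0
    log_p, log_q = math.log(p_false), math.log1p(-p_false)
    log_n_fact = math.lgamma(n_photos + 1)
    total = 0.0
    for k in range(threshold, n_photos + 1):
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n_photos - k + 1)
                    + k * log_p + (n_photos - k) * log_q)
        total += math.exp(log_term)  # underflows harmlessly to 0.0 for tiny terms
    return min(total, 1.0)


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    p, n = 1e-6, 20_000
    for t in (1, 2, 5, 30):
        print(f"threshold {t:>2}: {prob_at_least(t, n, p):.3e}")
```

With a hypothetical false-match rate of one in a million per photo and 20,000 photos, at least one false match happens roughly 2% of the time, but reaching a threshold of 30 is astronomically less likely.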

Google has been doing this with Gmail since 2014.

Google scans everyone’s email for child porn, and it just got a man arrested

Search giant trawls photos for illegal “digital fingerprints”

No one bats an eye for that

But when Apple does it, NOW everyone gets upset

Why is this?

Google (and others) are doing it on the server side with shared photos. As far as I know they are not doing it device side.

Apple is taking this to a whole other level.

Apple has published a FAQ titled "Expanded Protections for Children" which aims to allay users' privacy concerns about the new CSAM detection in iCloud Photos and communication safety for Messages features that the company announced last week.

"Since we announced these features, many stakeholders including privacy organizations and child safety organizations have expressed their support of this new solution, and some have reached out with questions," reads the FAQ. "This document serves to address these questions and provide more clarity and transparency in the process."

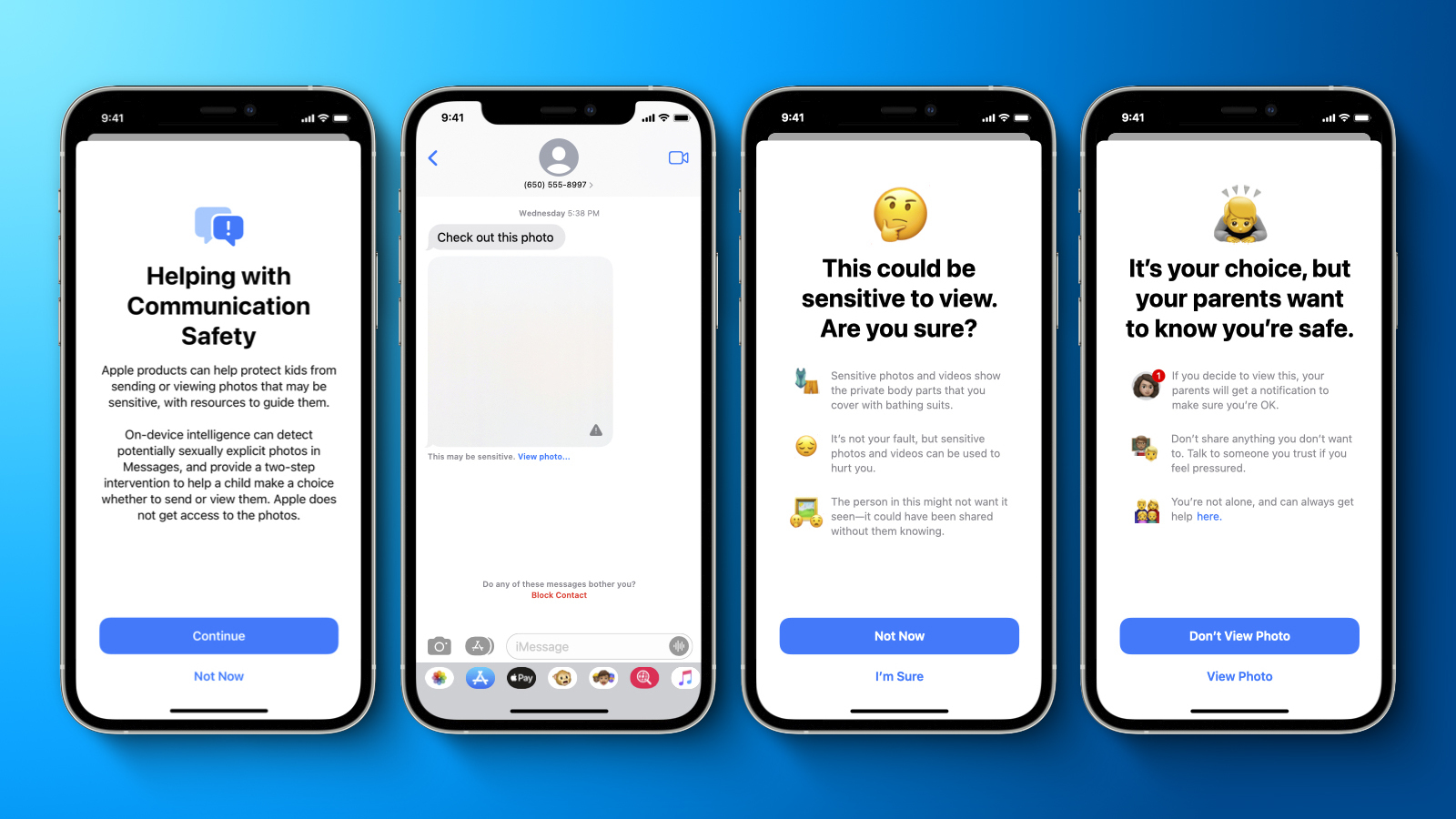

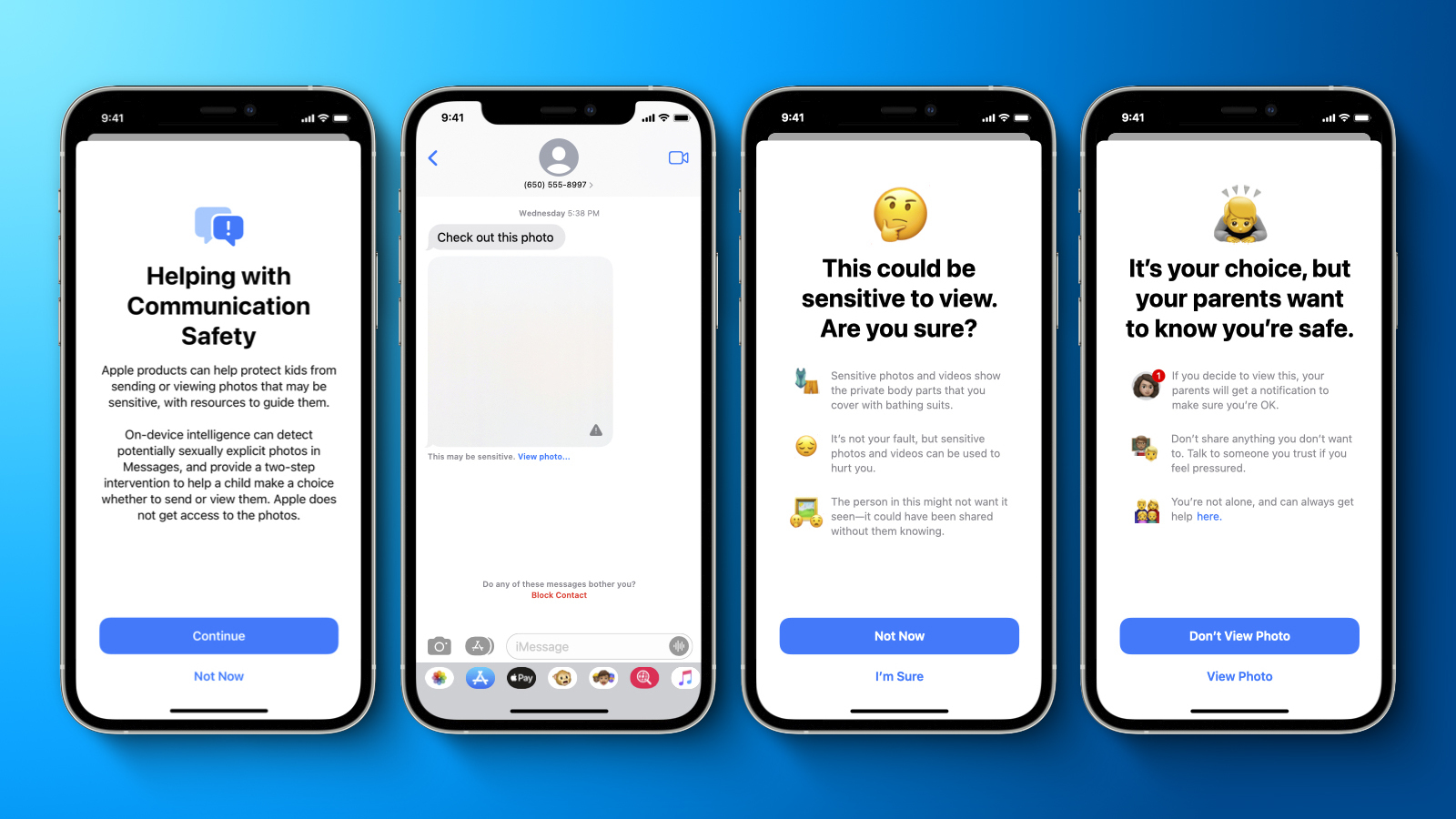

Some discussions have blurred the distinction between the two features, and Apple takes great pains in the document to differentiate them, explaining that communication safety in Messages "only works on images sent or received in the Messages app for child accounts set up in Family Sharing," while CSAM detection in iCloud Photos "only impacts users who have chosen to use iCloud Photos to store their photos… There is no impact to any other on-device data."

The rest of the document is split into three sections (in bold below), with answers to the following commonly asked questions:

- **Communication safety in Messages**
  - Who can use communication safety in Messages?
  - Does this mean Messages will share information with Apple or law enforcement?
  - Does this break end-to-end encryption in Messages?
  - Does this feature prevent children in abusive homes from seeking help?
  - Will parents be notified without children being warned and given a choice?
- **CSAM detection**
  - Does this mean Apple is going to scan all the photos stored on my iPhone?
  - Will this download CSAM images to my iPhone to compare against my photos?
  - Why is Apple doing this now?
- **Security for CSAM detection for iCloud Photos**
  - Can the CSAM detection system in iCloud Photos be used to detect things other than CSAM?
  - Could governments force Apple to add non-CSAM images to the hash list?
  - Can non-CSAM images be "injected" into the system to flag accounts for things other than CSAM?
  - Will CSAM detection in iCloud Photos falsely flag innocent people to law enforcement?

Interested readers should consult the document for Apple's full responses to these questions. However, it's worth noting that for those questions which can be answered with a binary yes/no, Apple begins all of them with "No," with the exception of three questions from the section titled "Security for CSAM detection for iCloud Photos."

Apple has faced significant criticism from privacy advocates, security researchers, cryptography experts, academics, and others for its decision to deploy the technology with the release of iOS 15 and iPadOS 15, expected in September.

This has resulted in an open letter criticizing Apple's plan to scan iPhones for CSAM in iCloud Photos and explicit images in children's messages, which has gained over 5,500 signatures as of writing. Apple has also received criticism from Facebook-owned WhatsApp, whose chief Will Cathcart called it "the wrong approach and a setback for people's privacy all over the world." Epic Games CEO Tim Sweeney also attacked the decision, claiming he'd "tried hard" to see the move from Apple's point of view, but had concluded that, "inescapably, this is government spyware installed by Apple based on a presumption of guilt."

"No matter how well-intentioned, Apple is rolling out mass surveillance to the entire world with this," said prominent whistleblower Edward Snowden, adding that "if they can scan for kiddie porn today, they can scan for anything tomorrow." The non-profit Electronic Frontier Foundation also criticized Apple's plans, stating that "even a thoroughly documented, carefully thought-out, and narrowly-scoped backdoor is still a backdoor."

Article Link: Apple Publishes FAQ to Address Concerns About CSAM Detection and Messages Scanning

multinational with ties to globalists

Snowden is right: give them an inch and they will take a mile. Apple, like other corporations, is in bed with globalists and has a political leaning. Human nature, power, and politics are factors.

If your device (not Apple) analyses an image using A.I algorithms, converts the outcome to an encrypted ticket that is then compared to a database of indecent images, then where is the unreasonable/invasion of privacy?

See here: https://forums.macrumors.com/thread...n-and-messages-scanning.2306957/post-30159331

Curious as to your take on that scenario as well.

Really? Really? Do you honestly believe anyone concerned about this actually "supports child exploitation"?

What an ignorant response.

it’s sad (and disgusting) that so many MacRumors readers support child exploitation. I hope none of you have young siblings or children.

Like MLK, I have a dream. I dream that one day, we can have a serious discussion of a serious issue without someone going hyperbolic and throwing around outrageous accusations like this. Do you REALLY not see ANY possible reason why ANYONE would be concerned about privacy unless they "support child exploitation"?

Go ahead and compare me to a famous German dictator for disagreeing with your blatantly false accusation against numerous MacRumors readers, including myself, who have legitimate concerns about how this technology might be used in the future. That seems to be the normal reaction to anyone challenging inflammatory rhetoric on here.

See here: https://forums.macrumors.com/thread...n-and-messages-scanning.2306957/post-30159331

Curious as to your take on that scenario as well.

Precisely, a scenario. Just more "what if/maybe/could/" et al.
