OK, so one in a trillion is a false positive. That one in a trillion goes to human review. Now that's a 1 in a tredecillion chance a human will make a mistake. That's about the chance an amoeba evolves into a human.

OK, trillion number one we have here. That went quickly; I wish I had played these numbers in the lottery...

Apple Publishes FAQ to Address Concerns About CSAM Detection and Messages Scanning

Want to see two pictures whose hashes match? Here you go. Hey Mr. Cook, we got a match! Yes, I am aware that both are NOT in that database, but they obviously match. So: if it is not allowed to have pictures of women in a white shirt, do not try to have that butterfly in... (forums.macrumors.com)


Apple Publishes FAQ to Address Concerns About CSAM Detection and Messages Scanning

- Thread starter MacRumors



Logically impossible, because what Apple is scanning is what you chose to decrypt.

I disagree.

First, they introduced iCloud, made it the DEFAULT option, and spent YEARS getting most users accustomed to it.

Now, they tell you: "Hey, I am gonna do something CONTROVERSIAL on your phone. You can SAY NO by NO LONGER USING SOMETHING you're accustomed to and find convenient. Is that OK?"

I don't think that's called "you chose to decrypt"; rather, it puts people in a situation where they must make a hard choice against everyday convenience, and statistically most people would not "choose" to disobey.

This information is available here: https://www.apple.com/child-safety/pdf/CSAM_Detection_Technical_Summary.pdf

It's complicated (although lucid). I suppose there are two ways to take that: either (a) the folks working on this at Apple are pretty smart and are doing due diligence, or (b) complicated concepts exist to hide information. I read it as (a) and, admittedly, have no particular reason to believe that Apple would attempt to lie about the implementation.

Eventually they were going to be strong-armed into doing something; this really seems like a measured approach.

Yes, I have read that. But I have also heard from people that it tracks more than just those extremely basic edits.

OK, so one in a trillion is a false positive. That one in a trillion goes to human review. Now that's a 1 in a tredecillion chance a human will make a mistake. That's about the chance an amoeba evolves into a human.

Nevertheless, that nice picture of your wife was reviewed by Apple Control and categorized as legal. They might send you an apology for having violated your wife's privacy.

But what the heck - they do not know her...

I’m sorry, Apple, but you are not trustworthy. You and your comrades in Big Tech are evil.

Let me guess, then: given that opinion, you're not using ANY hardware/software/service from any Big Tech company, including:

- Facebook

- Microsoft

- Google

Right? Right?!!?

I disagree.

First, they introduced iCloud, made it the DEFAULT option, and spent YEARS getting most users accustomed to it.

Now, they tell you: "Hey, I am gonna do something CONTROVERSIAL on your phone. You can SAY NO by NO LONGER USING SOMETHING you're accustomed to and find convenient. Is that OK?"

I don't think that's called "you chose to decrypt"; rather, it puts people in a situation where they must make a hard choice against everyday convenience, and statistically most people would not "choose" to disobey.

Incorrect

- You have to opt in to iCloud.

- Apple is doing on the client side what they could do on the server side. The privacy of the server content is the same as on the client side, because:

- For an end-to-end encrypted photo to be transported to a cloud server, it has to be decrypted.

- The reason it has to be decrypted is that iCloud is not end-to-end encrypted, so the content it stores cannot be end-to-end encrypted either.

- As a result, the photo is decrypted and then transferred to iCloud servers. A copy of that file remains on your device.

- That copy of the iCloud file is decrypted because it needs to remain in sync with iCloud.

- Apple is scanning hashes, not the full photo image, on your device.

- Apple is using on-device AI to scan, not software that uploads hash information to Apple for every photo.

- The only time Apple is sent hash information is when a large batch of photos gets a positive hit for CSAM content.

- The chance of even one false photo match is 1 in a trillion; that chance becomes vanishingly small (effectively 1 in infinity) when it comes to BULK content.

- The only time Apple is notified is when a BULK of CSAM is identified.

- Then the photos are sent to a HUMAN reviewer for review.

- Once the photos are verified by a human, they are sent to the appropriate authorities.
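The thresholding idea in the list above (no single match gets reported; only a batch of matches triggers review) can be sketched as a simple count. This is a minimal toy, assuming each photo has already been reduced to a hash string; `account_flagged`, the sample hashes, and the threshold value are hypothetical. Per Apple's technical summary, the real system hides individual match results using private set intersection and threshold secret sharing, which this sketch does not attempt.

```python
from typing import Iterable, Set


def account_flagged(photo_hashes: Iterable[str], known_hashes: Set[str], threshold: int) -> bool:
    """Hypothetical toy: flag an account only when at least `threshold`
    photos match the known-hash set.

    Unlike the design Apple describes, this counts matches in the clear;
    there is no cryptography here at all.
    """
    matches = sum(1 for h in photo_hashes if h in known_hashes)
    return matches >= threshold


known = {"a1", "b2", "c3"}          # made-up placeholder hashes
library = ["x9", "a1", "y7", "b2"]  # contains two matches
print(account_flagged(library, known, threshold=3))           # False: below threshold
print(account_flagged(library + ["c3"], known, threshold=3))  # True: threshold reached
```

The design point is that a single match, true or false, changes nothing on its own; only the count crossing the threshold does.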

We're agreeing. The poster I was replying to claimed photos aren't illegal, trying to justify Apple's move here.

I'm not sure what you're advocating or not here.

I'm obviously not ok with any of those examples.

But I'm also VERY much not OK with now just "scanning for evidence of it everywhere on everyone's/anyone's devices at all times".

That is a dystopian police state.

No thank you.

The ends don't justify the means on going about dealing with crime in that way.

Uhhh, yes they are, in many, many cases, and in far more categories than just CSAM.

Very odd that this is the narrow issue they attack now, after a decade of cloud. Why now, finally, in 2021?

Because NO ONE can argue against "save the poor kids." You would be argued to be a monster if you oppose Apple's steps.

I bet you the Chinese government will be very interested in this technology.

Notice how Apple keeps changing the wording and being very careful with the document. I hope this backfires and Apple catches themselves in a massive lawsuit.

Privacy matters. Apple: let us (the consumers) decide whether we want you to scan our iPhones.

Apple, you are a TECH company. You are not law enforcement. Don’t lose your vision. Stop chasing the $.

Reports like this will be out left and right…

Student's nude photos leaked to Facebook by iPhone service centre, Apple now paying her millions of dollars

A settlement in a recent lawsuit required Apple to pay a multi-million-dollar amount as compensation for “severe emotional distress” caused to the individual. (www.google.com)

News and Trends

The latest news, videos, and discussion topics on News and Trends - Entrepreneur (www.entrepreneur.com)

Easiest way to avoid this spying technology.

1. Turn off iCloud photos and messages.

2. Do not log in to your iPhone using your iCloud credentials.

3. Possibly use a fake account to continue using your iPhone. Or simply do not log in with your Apple ID at all.

Fun pictures aside ... I hope you're wrong.

Curious why you hope this. The need to find and isolate those who store and distribute pictures/links/video of children who have been SEXUALLY ABUSED AGAINST THEIR WILL AND AGAINST THEIR RIGHT TO PRIVACY OF THEIR BODY PARTS AND THE HORRORS THAT EXPLOITED THEM, so that those children can live a normal life after the horror, bothers you?

But hey, you also forgot to mention NOT to use Microsoft's or Google's products and services, which search and catalog EVERYTHING, and they never publicly made it clear they've been doing this since 2008!

Seems like you're on the wrong side of this discussion, because you're ONLY focusing on YOUR privileged privacy and your fear of what it COULD turn into vs. what it's ACTUALLY going to do.

Complaining without offering a better way is not just a complaint; it shows complacency that lets the main issue continue to be ignored.

You don't seem to get it. If a threshold is reached, a person at Apple will review the images. Moreover, how is any perceptual algorithm going to classify an image as child porn without assessing the amount of skin exposed as a feature? Thus, a human reviewer might be looking at sensitive photographs of you, your partner, or some innocent photo of your kid swimming, as a false positive. And how, exactly, is Apple going to keep pedophiles and pervs out of that job or reviewing your photographs?

Apple in 2022: "We have reviewed a photo in your iCloud for matching the CSAM system. We inform you that the photo doesn't match any known child abuse images. Nice bikini."

If there’s something I would give far less credibility to than Apple’s “1 in a trillion” quote, it would be the back-of-the-envelope, back-of-napkin, clueless layman reasoning on this forum about how Apple’s system would fail at matching hashes, and the fantasies about Apple fine-tuning the system to catch more false positives than strictly necessary (for some reason... go figure!).

I’ll take Apple’s “1 in a trillion” quote over those, thank you.

If that quote is wrong TENFOLD, on average it would still red flag (and thus escalate to human review) only one “innocent” account PER CENTURY, worldwide.
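The per-century figure follows from simple arithmetic, but only under assumptions the post leaves implicit: that "one in a trillion" is a per-account, per-year rate (which is how Apple describes it) and that there are roughly one billion accounts. The account count below is an illustrative assumption, not a figure from Apple or from the thread, and `expected_false_flags_per_year` is a hypothetical helper.

```python
def expected_false_flags_per_year(accounts: float, rate_per_account_year: float,
                                  error_factor: float = 1.0) -> float:
    """Expected number of innocent accounts flagged per year if the stated
    per-account, per-year rate is too optimistic by `error_factor`."""
    return accounts * rate_per_account_year * error_factor


ACCOUNTS = 1e9        # ASSUMPTION: ~1 billion accounts, illustrative only
STATED_RATE = 1e-12   # "one in a trillion" per account per year

for factor in (1, 10):  # the figure as stated, and the figure wrong tenfold
    per_year = expected_false_flags_per_year(ACCOUNTS, STATED_RATE, factor)
    print(f"x{factor}: {per_year:.3f} per year, about one every {1 / per_year:,.0f} years")
```

With those assumptions, the tenfold case works out to about 0.01 false flags per year, i.e. roughly one per century; a larger account base would shorten that interval proportionally.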

I disagree.

First, they introduced iCloud, made it the DEFAULT option, and spent YEARS getting most users accustomed to it.

Now, they tell you: "Hey, I am gonna do something CONTROVERSIAL on your phone. You can SAY NO by NO LONGER USING SOMETHING you're accustomed to and find convenient. Is that OK?"

I don't think that's called "you chose to decrypt"; rather, it puts people in a situation where they must make a hard choice against everyday convenience, and statistically most people would not "choose" to disobey.

Very well put.

If we were introducing iCloud Photos today from scratch and we had...

1. Your photos, we don't touch them

2. We scan your photos and compare against databases checking for things that are bad

The number of people who would choose 2 would be much lower than some might think.

It's not that we are against "getting rid of bad stuff", but not at the cost of rifling through my content and the cognitive load of worrying what that means now and in the future, etc.

By the way, on point #1: I'd be perfectly happy to have them never scan my photos for any reason, not even for face detection. I don't care about or use any of that (too inaccurate and not really useful to me anyhow).

All I can say is read the white paper from the original article. It explains how hashes work and why Apple does not need to “see” the image, pixels, etc.

The hash system is a perceptual summary of the picture. Just because a system does not explicitly code perceptual features of a picture does not mean it does not represent them implicitly. If the matching is exact, it will lead to an arms race between pedophiles editing pictures and Apple using new hashes, until it becomes unsustainable for Apple. If matches are based on perceptual similarity, the false positives won't be randomly related to the offending photos; they will look similar (at least to a machine). To me that suggests that any pictures of people in lingerie, kids in swimsuits, etc. are likely to be false positives. Those are precisely the pictures people consider the most private.
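The contrast above between exact and perceptual matching can be shown with a toy comparison. The sketch uses the classic "average hash" (one bit per pixel, set when the pixel is brighter than the image mean) on a made-up 8x8 grayscale image; it is a swapped-in textbook technique, not Apple's NeuralHash, which is neural-network based. A uniform brightness tweak leaves the perceptual hash unchanged while a cryptographic hash (SHA-256) changes, which is why perceptual matching survives trivial edits but also lets look-alike images collide.

```python
import hashlib
from typing import List

Image = List[List[int]]  # 8x8 grayscale pixel values 0-255 (toy stand-in for a photo)


def average_hash(img: Image) -> int:
    """Classic 'average hash': one bit per pixel, 1 if brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; perceptual matchers compare this to a cutoff."""
    return bin(a ^ b).count("1")


def sha256_hex(img: Image) -> str:
    """Exact (cryptographic) hash of the raw pixel bytes."""
    return hashlib.sha256(bytes(p for row in img for p in row)).hexdigest()


# A gradient "photo" and a slightly brightened copy (every pixel +3).
original = [[(r * 8 + c) * 3 for c in range(8)] for r in range(8)]
edited = [[min(p + 3, 255) for p in row] for row in original]

print(hamming(average_hash(original), average_hash(edited)))  # 0: perceptual hashes agree
print(sha256_hex(original) == sha256_hex(edited))              # False: exact hashes differ
```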

Moreover, I do not know how Apple could possibly estimate the probability (supposedly 1 in a trillion) of an account being flagged. That has to depend on the number of photos in the account (with an unlimited number of photos, the chance of a false positive approaches certainty) and on the nature of those photos. I suspect people who take pictures of others are more likely to be flagged than people like me who mostly take pictures of nature.
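The point that an account-level probability must depend on library size can be made concrete with a binomial model. A minimal sketch, assuming each photo independently produces a false match with the same probability p; both the independence assumption and the value of p below are hypothetical, and the post's own argument is precisely that real false matches depend on image content. `prob_at_least` is an illustrative helper, not part of any Apple API.

```python
import math


def prob_at_least(t: int, n: int, p: float) -> float:
    """P(at least t false matches among n photos), each photo independently
    a false match with probability p (0 < p < 1). Terms are computed in log
    space so that large n does not overflow."""
    if t <= 0:
        return 1.0
    if t > n:
        return 0.0
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    terms = []
    for k in range(t, n + 1):
        log_term = (log_n_fact - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                    + k * log_p + (n - k) * log_q)
        term = math.exp(log_term)
        terms.append(term)
        # Past the mode the terms only shrink, so once one underflows to 0 we can stop.
        if k > n * p and term == 0.0:
            break
    return math.fsum(terms)


P_FALSE = 1e-6  # HYPOTHETICAL per-photo false-match rate, not Apple's figure
for n_photos in (1_000, 10_000, 100_000):
    print(n_photos, prob_at_least(1, n_photos, P_FALSE), prob_at_least(10, n_photos, P_FALSE))
```

Under these assumptions the chance of at least one false match grows with library size, while requiring several matches (t = 10 here) pushes the account-level probability down by many orders of magnitude.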

The introduction of this system without consent is a fool's errand. It won't end well and anybody who knows anything about perception or psychology would tell you that. Just look at how random face recognition scanning was received in London. People do not like being treated like they're guilty. Apple is committing commercial suicide by violating the trust we had in the company not to invade our privacy.


We’re honored to have on the forum the head engineer of this multi-million-dollar system. Good evening, sir.

Thanks for the great insight.

Anybody else wanna ad-lib in detail about how this system works and fails?

I’ll bring pencils and napkins to write on.


If there’s something I would give far less credibility to than Apple’s “1 in a trillion” quote, it would be the back-of-the-envelope, back-of-napkin, clueless layman reasoning on this forum about how Apple’s system would fail at matching hashes, and the fantasies about Apple fine-tuning the system to catch more false positives than strictly necessary (for some reason... go figure!).

I’ll take Apple’s “1 in a trillion” quote over those, thank you.

If that quote is wrong TENFOLD, on average it would still red flag (and thus escalate to human review) only one “innocent” account PER CENTURY, worldwide.

Half of my career has been in the neurophysiology of perceptual systems in the brain. I know how perceptual codes, both natural and machine-based, work. My comments are not based on back-of-the-napkin reasoning, nor am I a layperson with respect to this issue. There are serious issues in what Apple is contemplating.


Apple Open to Expanding New Child Safety Features to Third-Party Apps

Apple today held a questions-and-answers session with reporters regarding its new child safety features, and during the briefing, Apple confirmed that it would be open to expanding the features to third-party apps in the future. As a refresher, Apple unveiled three new child safety features...

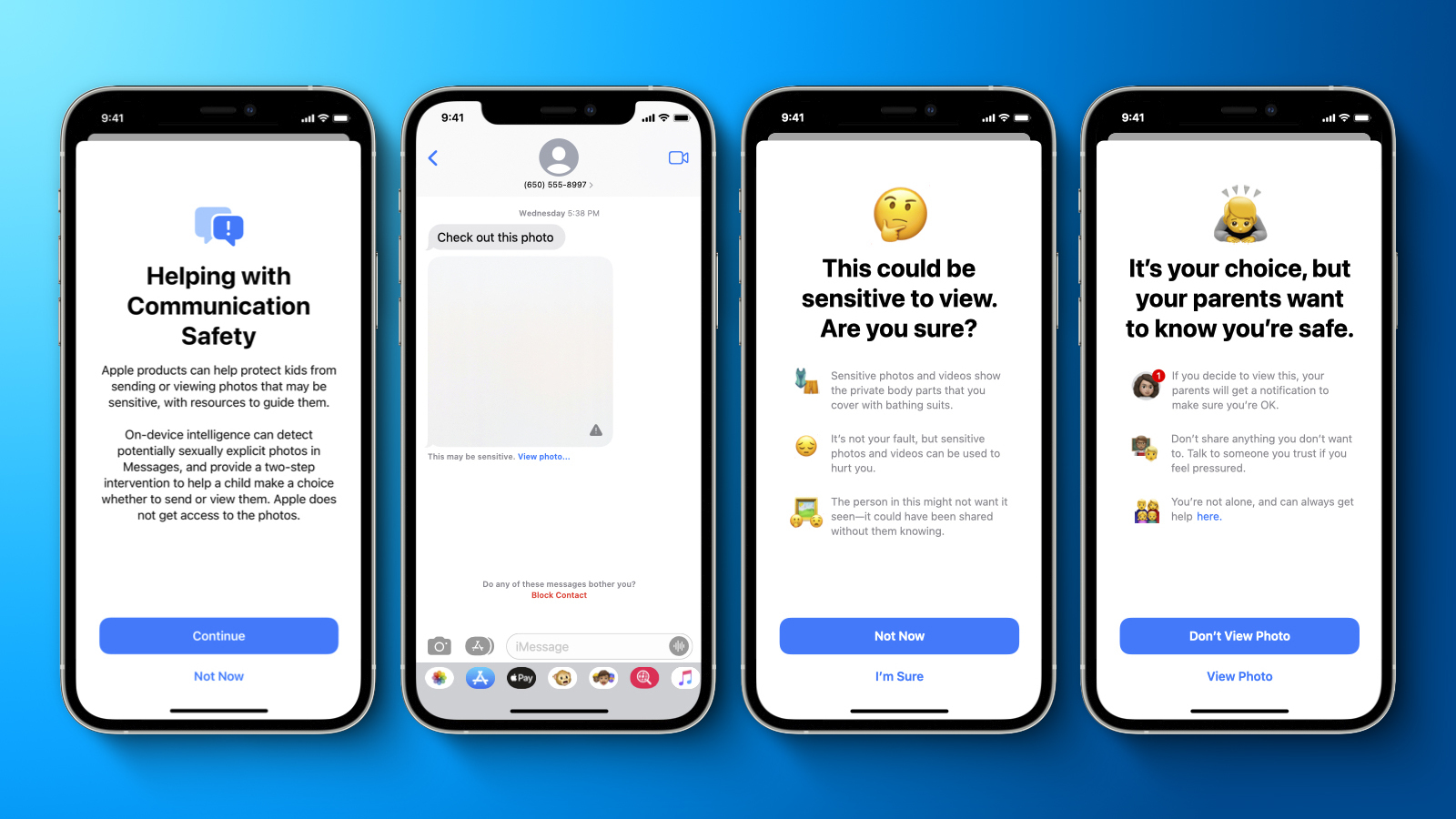

Now, to be clear, I AM OK with this. If an account is set up as a kid's account and the kid actively sends/receives naughty content, then OK: blur it with the popup, etc. Great. That is the parents' choice to set up. It is not invading the privacy of anyone who has legal rights to it. It could help stop some of the sexting-with-minors issues, sure.

Although, let's be real here. Safari is wide open still if a kid wants to look at nudies.

But violating tens/hundreds of millions of people's privacy to hope to catch a few predators is a very different story. It is the old:

"You should have just complied"

"If you have nothing to hide just let us search you"

type of logical fallacies. You should not have to prove you are not guilty to not have your basic rights violated.

Maybe law enforcement should work harder to catch these predators instead. And the last stat I could find said os to the child porn out there is out of Mexico. So doing this only in the US really won't help much; a huge chunk of it is global.

It really just sounds like an excuse to get people to let them slip in these measures, which could become who knows what in 5-10 years, or whatever other countries will require where people don't have the basic human rights we have in the US.

Yea, that terrible agenda of protecting innocent children. How dare they push that onto us adults.

Speaking of "for the children", I need to come to your house and search it, just to make sure you aren't exploiting any children there. I care about protecting innocent children, and so do you, so I assume you're totally OK with this.

We’re honored to have on the forum the head engineer of this multi-million-dollar system. Good evening, sir.

Thanks for the great insight.

I am not an engineer. I am a life scientist who studied the visual and auditory systems for decades. I am aware of the issues around perceptual coding and categorisation, and I currently teach statistics at Master's level. So while you're right that I do not know the exact computations behind Apple's approach, I do understand the principles, I suspect far better than you do.

One more thing: knowledge, let alone wisdom, is not measured by the monetary value of a system.

You're welcome.


The line between adding on-point contributions to the discussion and humble-bragging about unrelated theory is thin.

Complaining without offering a better way is not just a complaint; it shows complacency that lets the main issue continue to be ignored.

Monitor websites and infiltrate sex offender networks online, which is what governments already do...

Could governments force Apple to add non-CSAM images to the hash list?

Apple will refuse any such demands. Apple's CSAM detection capability is built solely to detect known CSAM images stored in iCloud Photos that have been identified by experts at NCMEC and other child safety groups. We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future. Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government's request to expand it. Furthermore, Apple conducts human review before making a report to NCMEC. In a case where the system flags photos that do not match known CSAM images, the account would not be disabled and no report would be filed to NCMEC.

I wish Apple the best of luck holding this line, but I don't see how this is realistic once governments start passing laws requiring additional scanning.

The line between adding on-point contributions to the discussion and humble-bragging about unrelated theory is thin.

You questioned my expertise. I let you know what that expertise is.

OK, trillion number one we have here. That went quickly; I wish I had played these numbers in the lottery...

You still don't get it... what a surprise, since I've only said it 10 times and am repeating exactly what Apple says. The "one in a trillion" odds do NOT apply to a single picture match.

Winning the lottery (Powerball, Mega Millions, etc.) once is about a 1 in 257 million chance.

Do you know what the odds are for winning the lottery twice? (hint: it's NOT 1 in 500 million).

EDIT: An even more apples-to-apples comparison: what are the odds of winning multiple different lotteries in the same week?

Having one hashed picture match a single hashed picture in the database is not one in a trillion. But multiple different pictures on your phone that happen to match multiple different pictures in the database and are NOT true matches? One in a trillion seems like pretty realistic odds.
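The lottery comparison rests on multiplying probabilities: for independent events, the chance that all of them happen is the product of the individual chances, so two wins at the post's "about 1 in 257 million" are far rarer than 1 in 500 million. A short sketch using exact fractions; `one_in` is an illustrative helper, and the multiplication is only valid if the events really are independent.

```python
from fractions import Fraction
from math import prod


def one_in(probabilities):
    """Return N such that the chance of ALL the independent events happening is 1 in N."""
    return int(1 / prod(probabilities))


single_win = Fraction(1, 257_000_000)  # the post's "about 1 in 257 million"
print(f"once:  1 in {one_in([single_win]):,}")              # 1 in 257,000,000
print(f"twice: 1 in {one_in([single_win, single_win]):,}")  # 1 in 66,049,000,000,000,000
```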


One in a trillion applies to the CSAM API as tested, in this version, as asserted by Apple after private review.

iOS is not secure, certainly not to a one-in-a-trillion level.

Now Apple has created a way to target any iOS user for automatic referral to law enforcement. A bad actor plants a photo on your phone, and the rest is a fait accompli. You probably have thousands of photos; do you really scroll through all of them frequently?

I really don't care about the technical implementation of CSAM detection. It works or it doesn't. If it works, this creates a huge incentive for targeted phone hacking.

Unless Apple is going to assure us that every bug related to iOS, every malicious text escalation, has been permanently fixed.

If it works, this creates a huge incentive for targeted phone hacking.

Sure does!

That happens to me ALL the time... hackers randomly picking me to put offensive images onto my private camera roll, so that I will then have them uploaded to iCloud to be perfectly matched with multiple images in the CSAM database of hashed images Apple has added to my phone, so they can then review them and of course prove that I uploaded/saved those photos myself... happens to me at least twice a day... just unlucky, I guess. /s
