Google has launched its dedicated Gemini artificial intelligence app for iPhone users, expanding beyond the previous limited integration within the main Google app. The standalone app offers enhanced functionality, including support for Gemini Live and iOS-specific features like Dynamic Island integration.

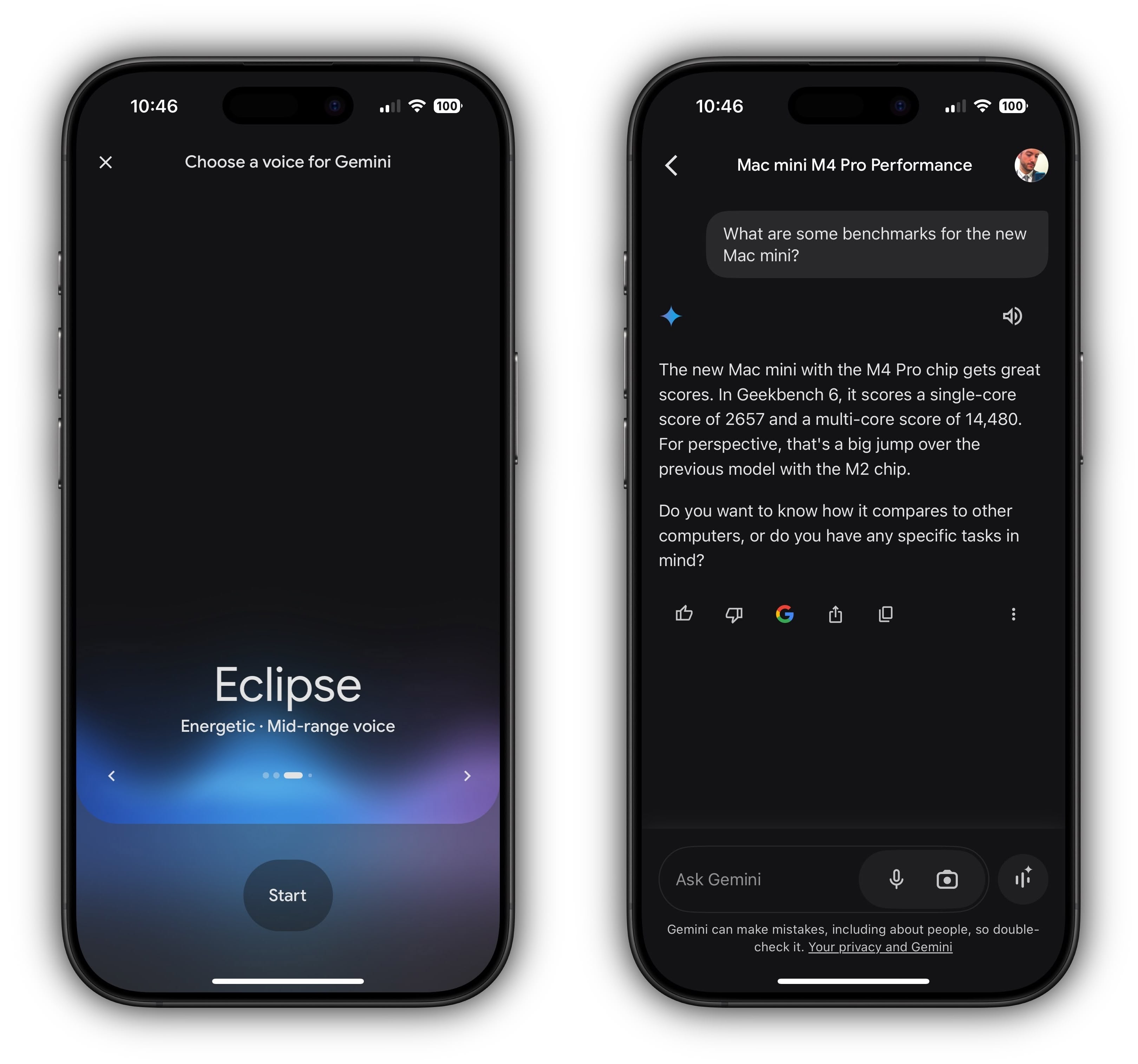

The new app allows iPhone users to interact with Google's AI through text or voice queries and includes support for Gemini Extensions. A key feature is Gemini Live, which wasn't available in the previous Google app implementation. When engaged in a conversation, Gemini Live appears in both the Dynamic Island and Lock Screen, letting you control your AI interactions without returning to the main app.

The app is free to download, and Google offers premium features through Gemini Advanced subscriptions available as in-app purchases. Gemini Advanced is part of the Google One AI Premium plan, which costs $18.99 per month. In addition to Gemini in Gmail, Docs, and more, the plan includes access to Google's next-generation model, Gemini 1.5 Pro, priority access to new features, and a one-million-token context window. Users need to sign in with a Google account to access the service.

The rollout follows an initial soft launch in the Philippines earlier this week, with availability now extending to additional regions including Australia, India, the US, and the UK.

Previously, iOS users could access Gemini only through a dedicated tab within the main Google app, which offered a more limited experience than the Android version. This standalone release, available on the App Store, brings the two mobile platforms closer to feature parity.
