Any ideas when iOS 15 will finally be released to non-beta testing public?


iOS 15: How to Use Visual Lookup in Photos to Identify Landmarks, Plants, and Pets

- Thread starter MacRumors


> Any ideas when iOS 15 will finally be released to non-beta testing public?

I’m not sure I understand your question. The public beta has been out since last week. The official public release is set for this fall (probably September).

I would like this to work the other way around. Would be nice to be able to search my almost 20,000 photos with words. For instance "Red Car" might help me quickly find that old mustang picture. I suppose it would mean that Apple would use AI to search all your photos and tag them with what it thinks the content is. Now that would be useful.

You can already do that, although you can't specify a particular color. Just click Search and enter, for example, CAR, or SKI BOOT, or COFFEE MUG, or BABY SEAT... and you get a list of photos with those objects in them.

Live text and visual lookup are great new features.

This points to another needed feature: the camera identifies all significant objects in the scene, and the user can lock the focus to one of them, then move around it taking focused pictures, or pick it up again when it comes back into camera view.

Nope, I have never tagged a single photo. All done magically.

No you don't. I have never tagged a single photo and my entire library is searchable.

Are y'all sure? Is there something called "tags" that are different than keywords? The only keywords I see in my photo library are ones that I added manually. I'm looking in Photos on my Mac. iOS 14 Photos doesn't appear to expose keywords. iOS 15 Photos seems like it will expose more of the EXIF data to the user.

Where do I go to see the tags that it adds automatically?

I know Photos has scanned for faces for years now and photos that are geotagged are searchable by location. But I don't think that's what we're talking about.

> I would like this to work the other way around. Would be nice to be able to search my almost 20,000 photos with words. For instance "Red Car" might help me quickly find that old mustang picture. I suppose it would mean that Apple would use AI to search all your photos and tag them with what it thinks the content is. Now that would be useful.

Photos can do that to an extent. It’s not always very accurate, though. (For instance, I searched "snake" and got 0 results despite there being snake photos in my library.) Hopefully this will make searches better.

But did you tag all the photos yourself, or were they tagged automatically by Apple when they were uploaded to the Photos app? I have to try this.

I didn’t do any manual tagging.

> I would like this to work the other way around. Would be nice to be able to search my almost 20,000 photos with words. For instance "Red Car" might help me quickly find that old mustang picture. I suppose it would mean that Apple would use AI to search all your photos and tag them with what it thinks the content is. Now that would be useful.

Add a caption to the picture in Photos. For example, "red Mustang car". Search will find it right away.

> Only available when region set to US

I wondered why it wasn't working for me (iOS 15, Australia). Can you easily switch the region to US to try it?

> I wondered why it wasn't working for me (ios15, Australia). Can you easily switch the region to US to try it?

Just tried. Yes. Change to US region and it works. Doesn’t seem perfect, but it’s a good feature.

I wish the LiDAR Scanner could scan the area for coronaviruses. The Apple Clips app has a nice visual for LiDAR in action.

There could be some great outcomes with this. On two occasions while I was working in an ER, suicidal patients have come in, one having eaten 'these mushrooms' and the other having eaten 'these leaves'. They didn't know what they were, and neither did we. Having even a modest means of identifying them would have been useful.

> Still needs work 😂

Those feral greyhounds can tear up your garden.

This is a bad user experience. If it shows stars, why should we have to tap the "info" button? Just show the little icon in the center of every photo that has a star, so we can get there in a single tap.

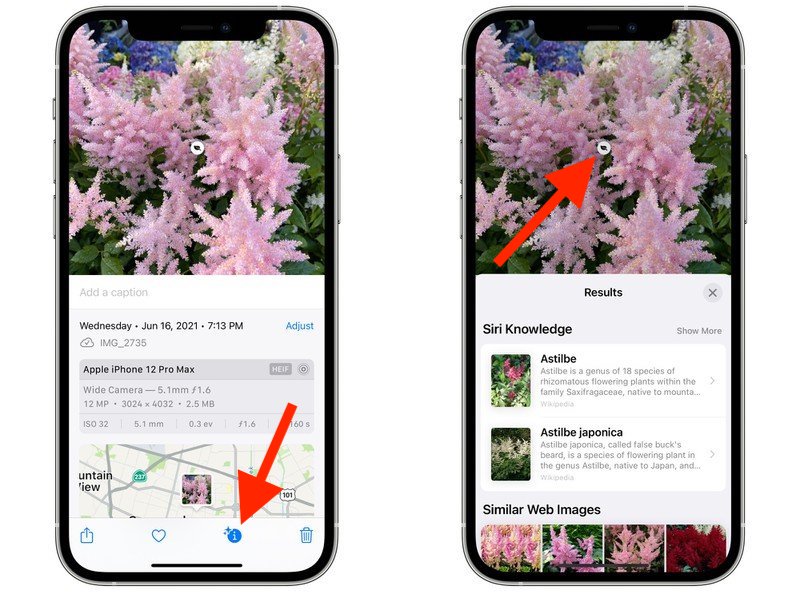

- Open the Photos app on your iPhone and select a picture with a clearly defined subject, such as a flower or animal.

- Check the info ("i") icon at the bottom of the screen. If it has a little star over it, tap it – this indicates there's a Visual Lookup you can examine.

- Tap the little icon in the center of the photo to bring up the Lookup search results.

It got a couple of my houseplants but didn't do great. The plant-identifying apps I've used did better. The UI is really weird and also puts the little leaf in seemingly random places (the small area in the corner that's just out-of-focus floor?).

> Are y'all sure? Is there something called "tags" that are different than keywords? The only keywords I see in my photo library are ones that I added manually. I'm looking in Photos on my Mac. iOS 14 Photos doesn't appear to expose keywords. iOS 15 Photos seems like it will expose more of the EXIF data to the user.
>
> Where do I go to see the tags that it adds automatically?
>
> I know Photos has scanned for faces for years now and photos that are geotagged are searchable by location. But I don't think that's what we're talking about.

It doesn't add tags/keywords. It builds its own internal 'categories' lists, to which it adds all appropriate photos, and these are exposed to the user through the search function. So if you search Photos for 'car', it will return any photos it has put in its 'cars' category, as well as anything you've tagged with 'car'. There are always a few it gets wrong, but it's sometimes very impressive (e.g. I thought it had falsely identified a photo as including a cat, but there was a very small reflection of our family cat in a window). It will also file a photo under multiple categories if appropriate, so you can search 'car tree' to get just the photos that include both.

The only slight downside is you can't correct it: if it doesn't recognise there's a car in your photo, you can add a keyword, but if it thinks there's a car in your photo and there isn't, you can't tell it so.

Photos has actually supported this AI recognition/search function for several years now, though the number of categories it recognises has continued to grow.
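For anyone curious how a category search like this could behave in principle, here is a minimal Python sketch. It is only an illustration of the behaviour described above, not Apple's implementation: `PhotoIndex`, its methods, and the photo IDs are all made up for the example, and it assumes simple exact matching on lowercase terms.

```python
from collections import defaultdict


class PhotoIndex:
    """Hypothetical index: automatic categories plus user-added keywords."""

    def __init__(self):
        # term -> set of photo IDs the recogniser filed under that category
        self.auto_categories = defaultdict(set)
        # term -> set of photo IDs the user tagged with that keyword
        self.user_keywords = defaultdict(set)

    def add_auto(self, photo_id, categories):
        # A photo can be filed under several categories at once.
        for category in categories:
            self.auto_categories[category.lower()].add(photo_id)

    def add_keyword(self, photo_id, keyword):
        self.user_keywords[keyword.lower()].add(photo_id)

    def search(self, query):
        # Each term matches automatic categories OR user keywords;
        # multiple terms are combined with AND (set intersection).
        terms = query.lower().split()
        if not terms:
            return set()
        results = None
        for term in terms:
            matches = (self.auto_categories.get(term, set())
                       | self.user_keywords.get(term, set()))
            results = matches if results is None else results & matches
        return results


index = PhotoIndex()
index.add_auto("IMG_1", ["car", "tree"])
index.add_auto("IMG_2", ["car"])
index.add_keyword("IMG_3", "car")  # missed by the recogniser, tagged by hand

print(index.search("car"))
print(index.search("car tree"))
```

Note there is no way in this sketch to remove a photo from an automatic category, which mirrors the "you can't correct it" limitation mentioned above.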

> I didn't call breeds fake. As a novelty, this is fine. But it's going to cause major headaches for people that actually rely on breed accuracy. Trained people get breeds wrong. Heck, DNA testing gets breeds wrong. An AI is only going to be as good as the algorithm.

I don't think this is intended as a serious tool for dog breeders or horticulturists etc. to professionally identify breeds/species. I think it's aimed more at those "that's a nice flower, I wonder what it is?" and "I've got 1000 dog photos on my phone but I need to find that one of a Labrador" moments, as a convenience feature. I'm really not seeing that 'major headache' scenario.

> Are y'all sure? Is there something called "tags" that are different than keywords? The only keywords I see in my photo library are ones that I added manually. I'm looking in Photos on my Mac. iOS 14 Photos doesn't appear to expose keywords. iOS 15 Photos seems like it will expose more of the EXIF data to the user.
>
> Where do I go to see the tags that it adds automatically?
>
> I know Photos has scanned for faces for years now and photos that are geotagged are searchable by location. But I don't think that's what we're talking about.

Best thing to do is likely just try using the search and see how it works. Not sure how else to convince you that the functionality is there. Photos have been searchable with keywords for some time now. The only thing I have done with any of my photos is shoot them on the phone, but they have been automatically categorised and it's mostly pretty accurate.

> It doesn't add tags/keywords, it builds its own internal 'categories' lists to which it adds all appropriate photos, and these are exposed to the user through the search function - so if in Photos you search for 'car' it will return any photos it has put in its 'cars' category, as well as anything you've tagged with 'car'. There's always a few it gets wrong, but it's sometimes very impressive (e.g. I thought it had falsely identified a photo as including a cat, but there was a very small reflection of our family cat in a window). It will also file a photo under multiple categories if appropriate so you can search 'car tree' to just get photos that include both.
>
> The only slight downside is you can't correct it - if it doesn't recognise there's a car in your photo, you can add a keyword, but if it thinks there's a car in your photo but there isn't, you can't tell it so.
>
> Photos has actually supported this general search function for several years now, though the number of categories it recognises has continued to grow.

Exactly this.

> That is cool, but you have to add the keywords yourself, manually. That can be very tedious and time-consuming if you have a very large library. I speak as one who has a large photo library and who does use the keywords feature.
>
> The hope here is that Photos will now analyze your library and automatically identify photos with cars in them.

Incorrect. You do not have to add keywords manually. I just tested it. Went to my photos and searched "cats". Got many results. I never add keywords to any photos.

This visual lookup sounds like it will be a fun feature to play with.

> Only available when region set to US

Thanks for this. It's been driving me nuts trying to get it working. Especially since live text in my photos works great.

How come no one's up in arms about the OS scanning your photos for this feature? This is actually far more intrusive than the CSAM topic. With CSAM, it's only looking at the hash for the image. In this case it's doing a full visual assessment of every image on your phone to identify what's in it. Where is the database for these images? Does this feature require that iCloud be turned on? If not, again, this is far more intrusive for the OS to be scanning images on your phone. Why isn't anyone asking how this works?

Please correct me if I have anything mistaken.

So far it works fine for landmarks (although given the GPS tagging, duh...), but for animals it failed pretty routinely. Sure, it nailed cat and dog, but it couldn't get goats (I have a small herd). Even worse from a UX standpoint: why tag landmarks as "landmark"? How about actually putting the name in the landmark button?

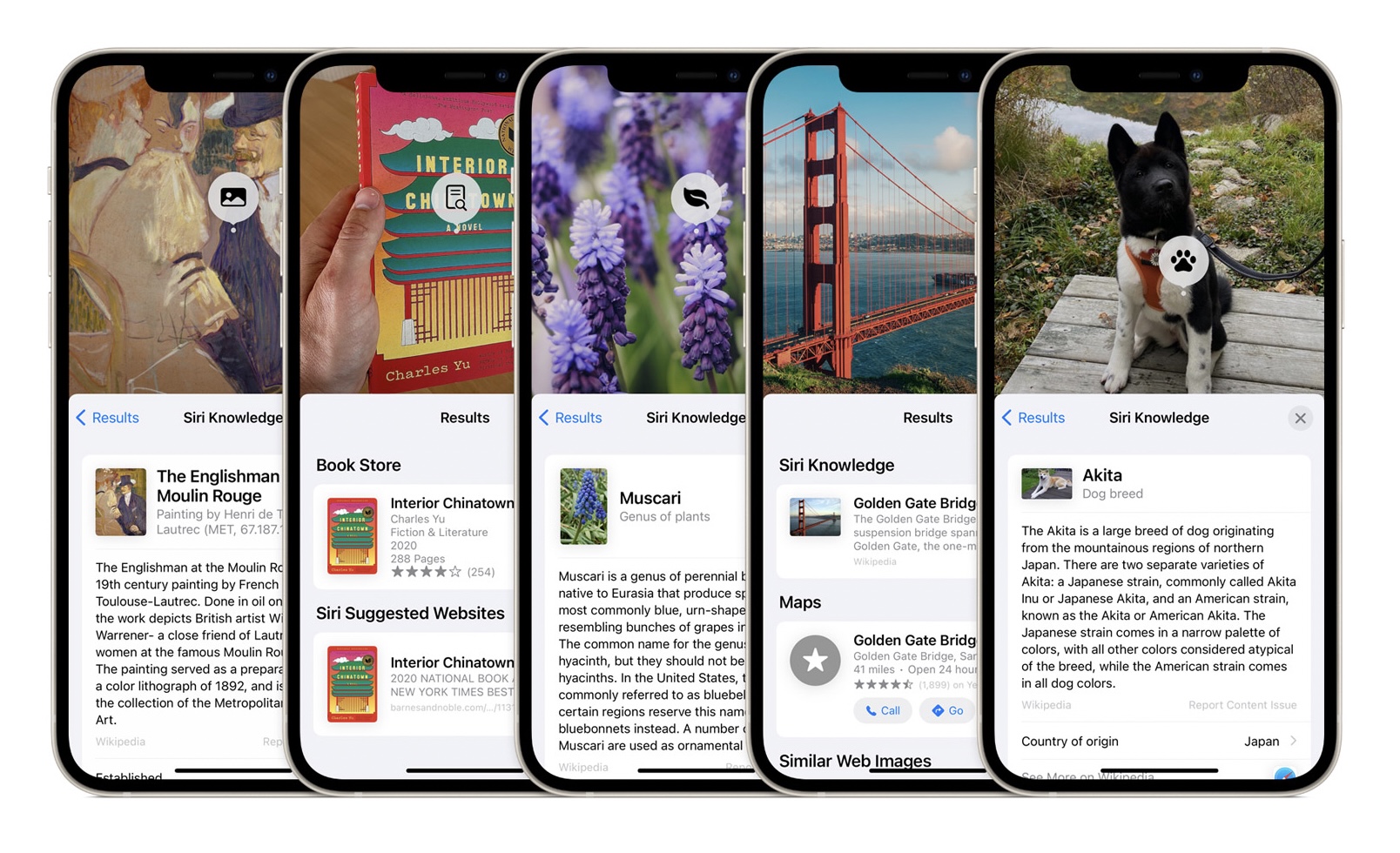

In iOS 15, Apple made further advancements in on-device machine learning and integrated them into the Photos app to make your iPhone more intelligent at recognizing the contents of pictures.

In other words, the Photos app can now identify various objects, landmarks, animals, books, plants, works of art, and more in your image library, and then offer information about them that it draws from the web.

This new intelligent feature is called Visual Lookup, and the following steps show you how you can use it to get more clued up on the things you've taken pictures of with your iPhone through the years.

- Open the Photos app on your iPhone and select a picture with a clearly defined subject, such as a flower or animal.

- Check the info ("i") icon at the bottom of the screen. If it has a little star over it, tap it – this indicates there's a Visual Lookup you can examine.

- Tap the little icon in the center of the photo to bring up the Lookup search results.

In Visual Lookup, search results consist of Siri Knowledge, similar images found on the web, and other online sources of information. For all the details on the other new Photos features in iOS 15, be sure to check our dedicated roundup.

Article Link: iOS 15: How to Use Visual Lookup in Photos to Identify Landmarks, Plants, and Pets

Tried everything to get this working on an iPhone 12 Pro Max with region/language set to English (US) on the final release version of iOS 15.

Nothing appears anywhere.

No issues with Live Text in either US or UK English.

Does this actually work in the final version?

Apple UK says Visual Look Up is supported, with no caveats.
