Visual Intelligence is an Apple Intelligence feature that's exclusive to the iPhone 16, iPhone 16 Pro, and iPhone 16e models, but it is rumored to be coming to the iPhone 15 Pro in the future. Visual Intelligence is available as of iOS 18.2, and this guide outlines what it can do.

Activating and Using Visual Intelligence

To use Visual Intelligence, press and hold the Camera Control button for a few seconds to activate the Visual Intelligence mode. The iPhone 16e has no Camera Control button, so on that model you need to use a Control Center toggle or assign Visual Intelligence to the Action Button.

A single press of Camera Control opens the camera, so you need a distinct press-and-hold gesture to reach Visual Intelligence. Make sure you're not already in the Camera app, because the gesture doesn't work if the camera is already active.

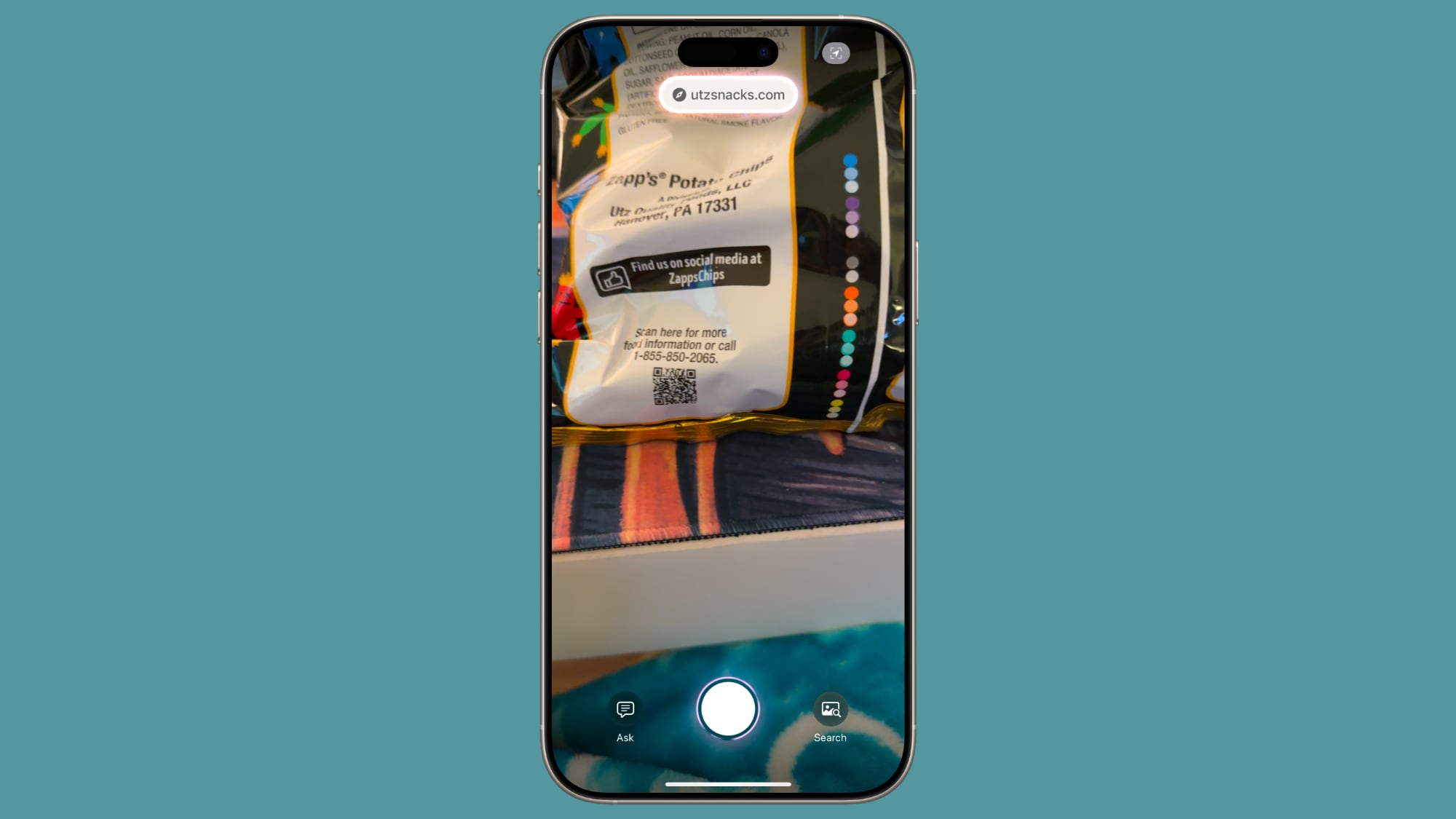

The Visual Intelligence interface features a view from the camera, a button to capture a photo, and dedicated "Ask" and "Search" buttons. Ask queries ChatGPT, and Search sends an image to Google Search.

Using Visual Intelligence requires taking a photo of whatever you're looking at. Snap the photo, which you can do with the Camera Control button, and then select an option. It does not work with a live camera view, and you cannot use photos that you took previously.

Get Details About Places

If you're out somewhere and want to get more information about a restaurant or a retail store, press and hold Camera Control, and then either press Camera Control again to take a photo or tap the name of the location at the top of the display.

From there, you can see the hours when the business is open, place an order for delivery at relevant locations, view the menu, view offered services, make a reservation, call the business, or visit the location's website.

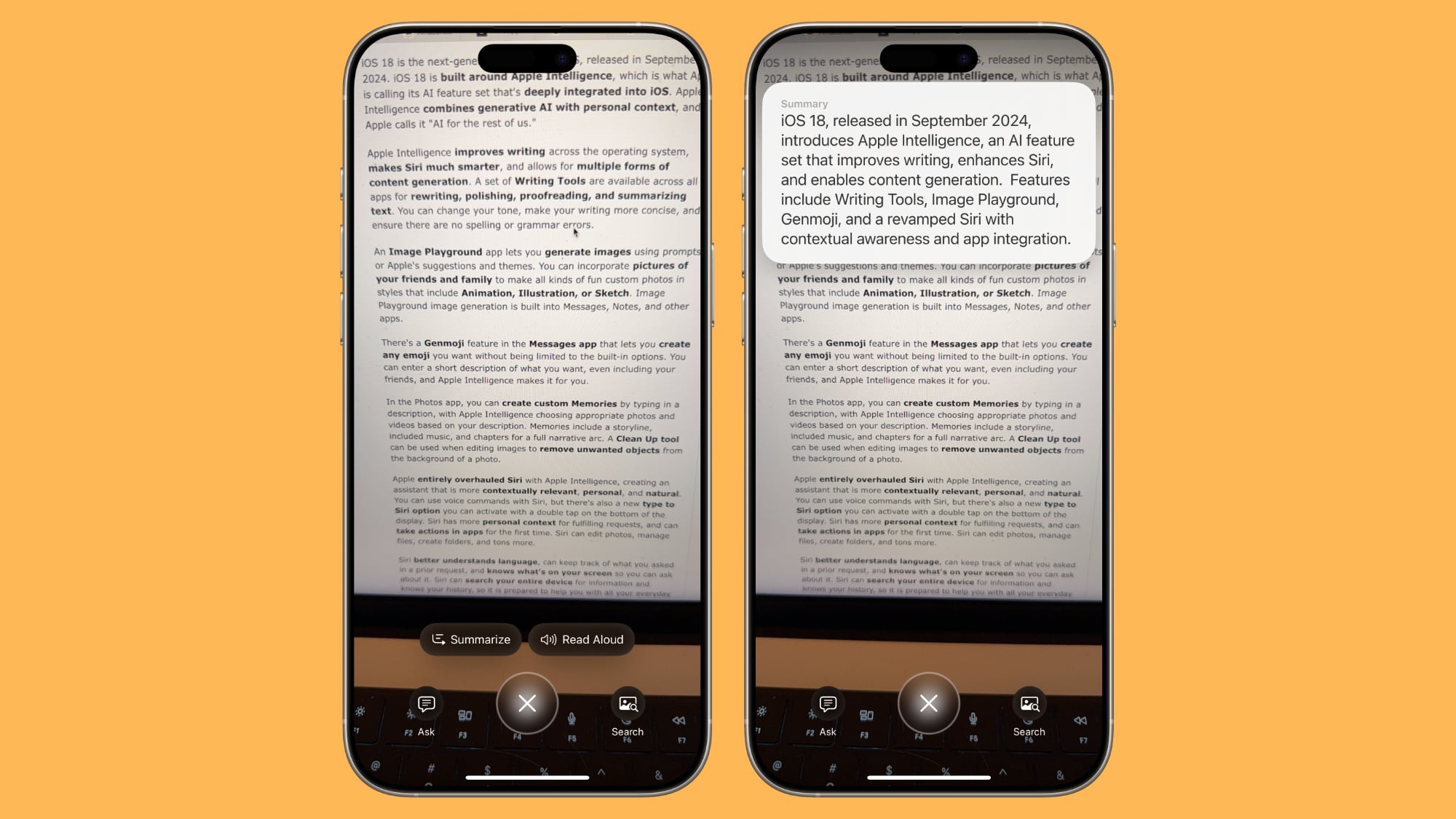

Summarize Text

Take a photo of text from the Visual Intelligence interface. Choose the "Summarize" option to get a summary of what's written.

The Summarize option is useful for long blocks of text, but like other Apple Intelligence summaries, it is brief and not particularly in-depth.

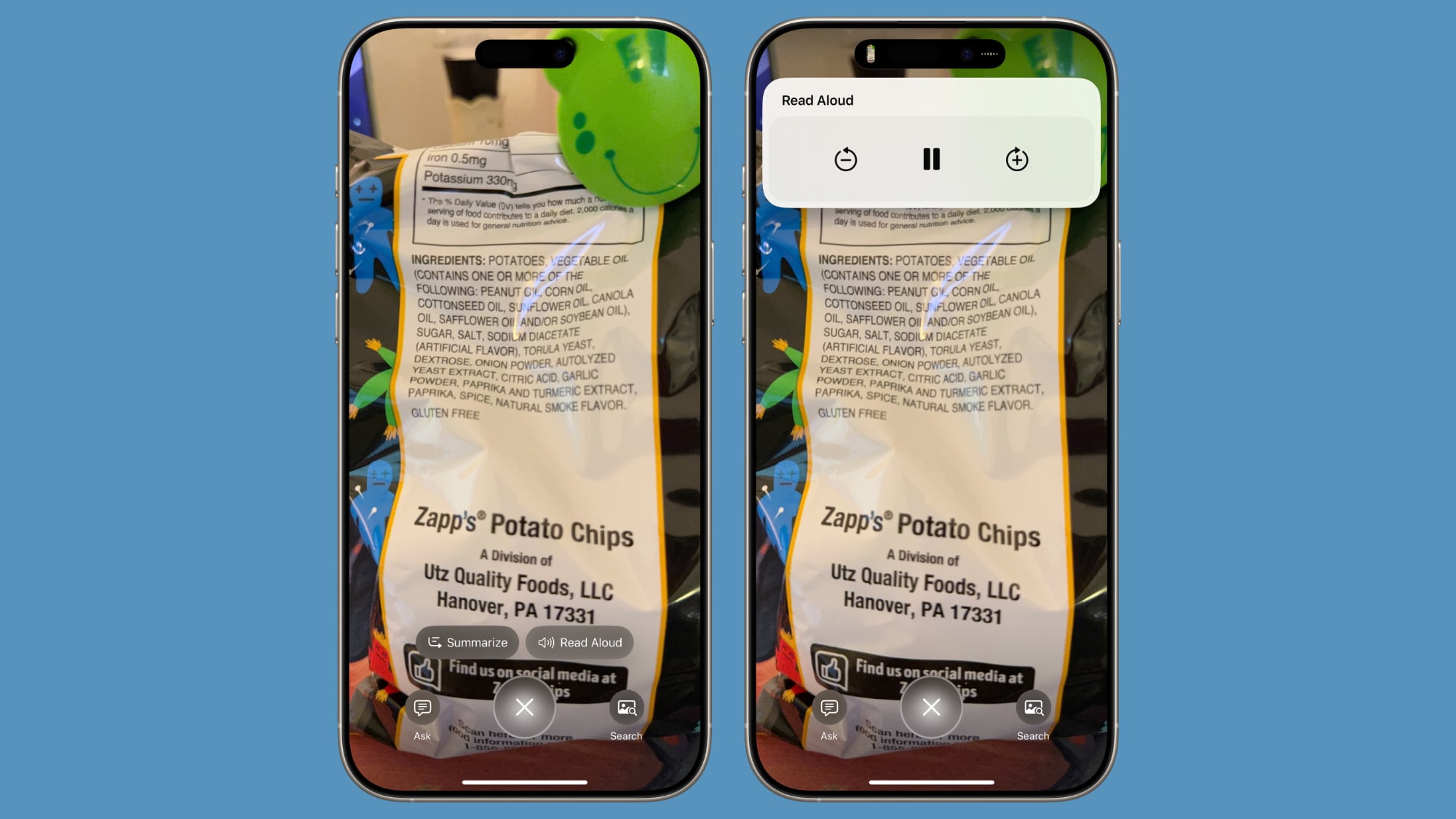

Read Text Out Loud

Whenever you capture an image of text with Camera Control, there is an option to hear it read aloud. To use this, tap the "Read Aloud" button at the bottom of the display, and Siri will read the text in your selected Siri voice.

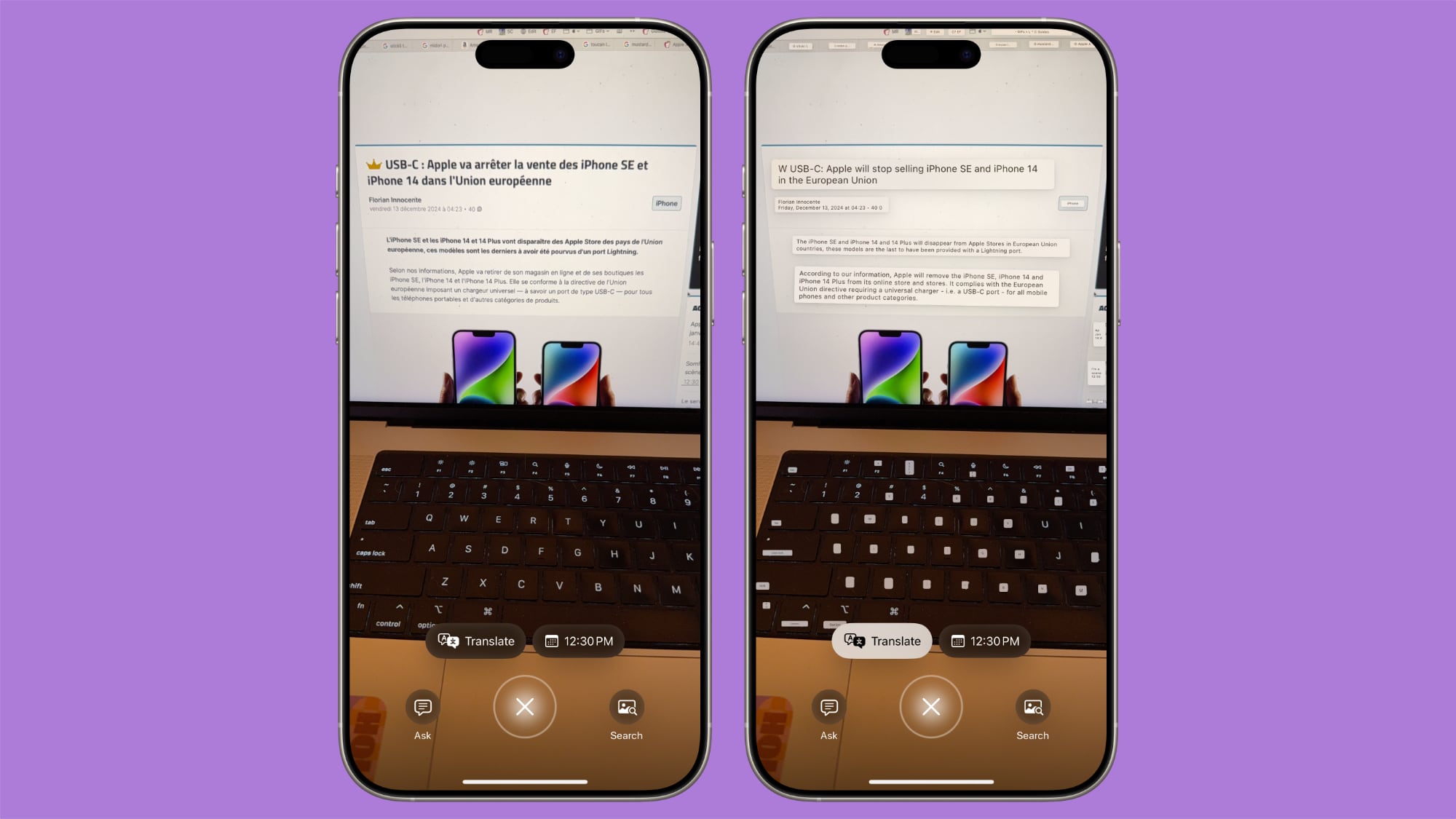

Translate Text

If text that you capture with Visual Intelligence is not in your language (limited to English at this time), you'll see a "Translate" option. You can tap it to get an instant translation.

Go to Website Links

If there's a link in an image that you capture with Visual Intelligence, you'll see a link that you can tap to visit the website.

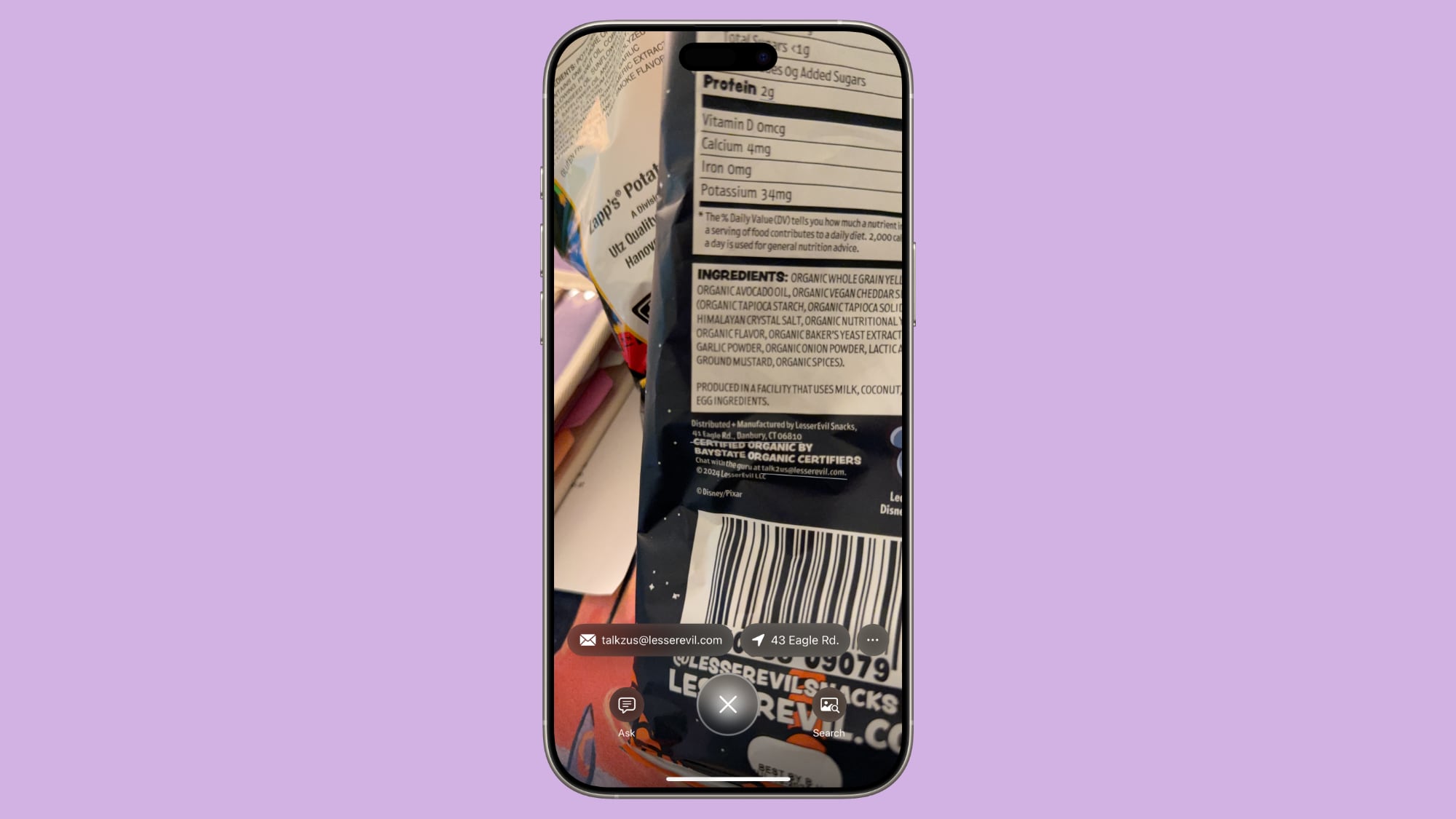

Send Emails and Make Phone Calls

If there is an email address in an image, you can tap it to compose an email in the Mail app. Similarly, if there is a phone number, you'll see an option to call it.

Create a Calendar Event

Using Visual Intelligence on something that has a date will give you an option to add that event to your calendar.

Detect and Save Contact Info

For phone numbers, email addresses, and addresses, Apple says you can add the information to a contact in the Contacts app. You can also open an address in the Maps app.

Scan QR Codes

Visual Intelligence can be used to scan a QR code. With QR codes, you don't actually need to snap an image. You simply need to point the camera at the QR code and then tap the link that pops up.

QR code scanning also works in the Camera app without Visual Intelligence active.

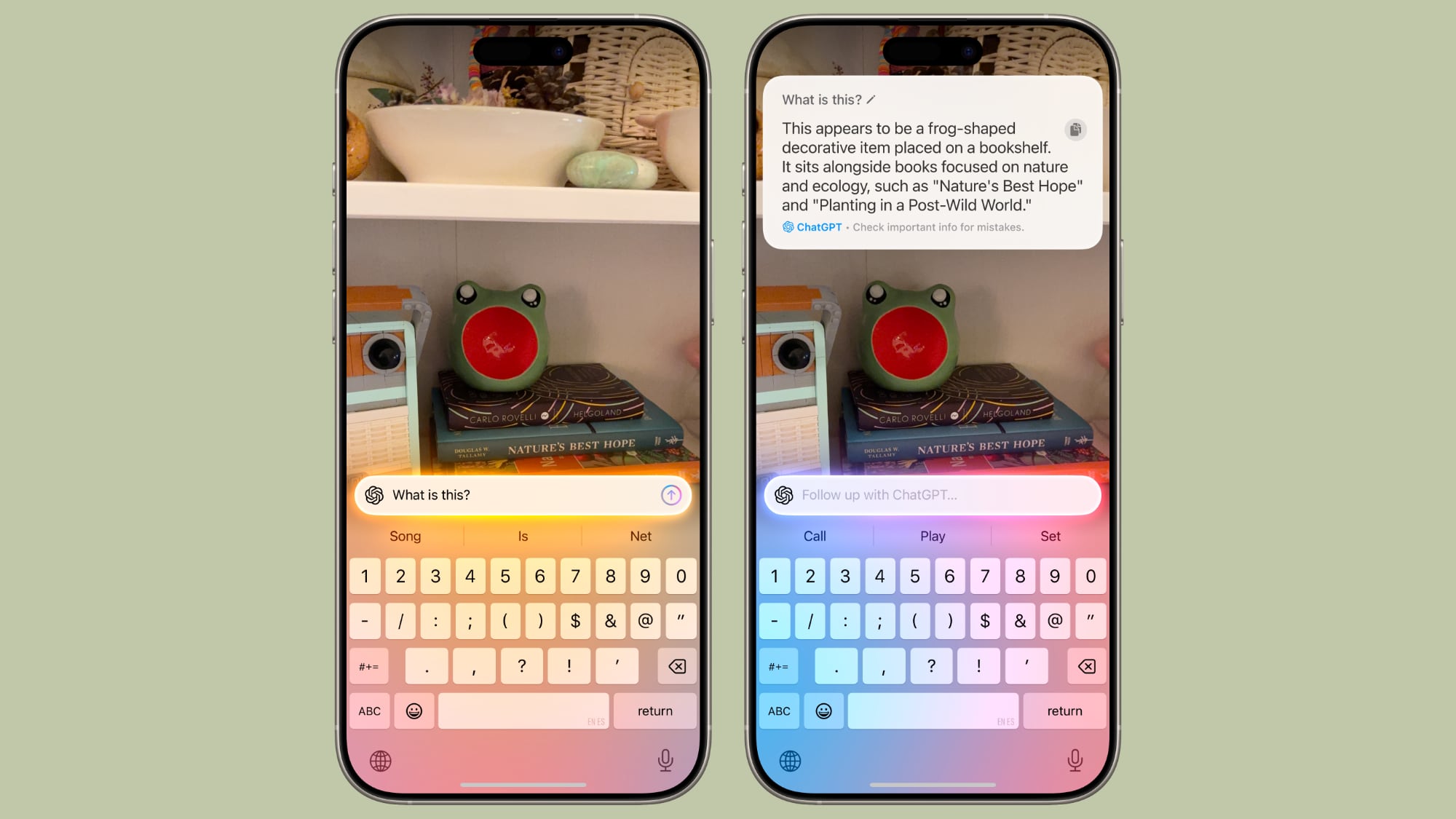

Ask ChatGPT

You can take a photo of anything and tap on the "Ask" option to send it to ChatGPT while also asking a question about it. If you take a picture of an item with Visual Intelligence and want to know what it is, for example, you can tap on Ask and then type in "What is this?" to get to a ChatGPT interface.

ChatGPT will respond, and you can type back if you have follow-up questions.

Visual Intelligence uses the ChatGPT Siri integration, which is opt-in. By default, no data is collected, but if you sign in with an OpenAI account, ChatGPT can remember conversations.

Search Google for Items

You can take a picture of any item that you see and tap on the "Search" option to use Google Image Search to find it on the web. This is useful for locating items that you might want to buy.

Read More

For more on the features that you get with Apple Intelligence, we have a dedicated Apple Intelligence guide.

Article Link: Visual Intelligence: How to Use It, Features and More

https://www.macrumors.com/guide/visual-intelligence/
