Apple are making fools of themselves with this feature, only being available on 16's....it's a nice to have for most of us, however nowhere near important enough to upgrade a 15 Pro!

Visual Intelligence is an Apple Intelligence feature that's exclusive to the iPhone 16 and iPhone 16 Pro models because it relies on the Camera Control button. Visual Intelligence is available as of iOS 18.2, and this guide outlines what it can do.

Activating and Using Visual Intelligence

To use Visual Intelligence, you need to hold down on the Camera Control button for a few seconds to activate the Visual Intelligence mode.

Just pressing opens up the camera with Camera Control, so you do need a distinct press and hold gesture to get to it. Make sure you're not already in the Camera app, because it doesn't work if the camera is already active.

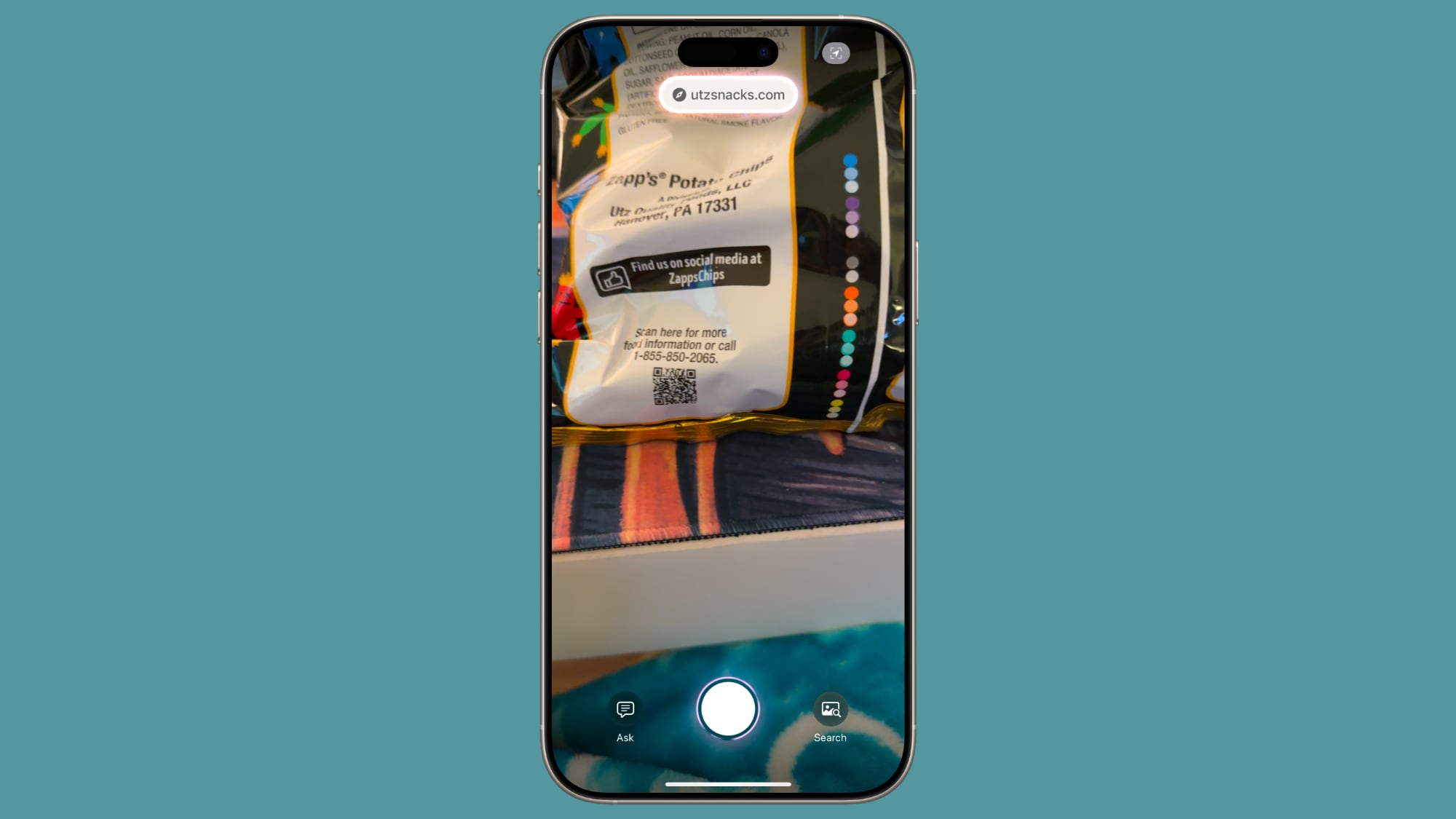

The Visual Intelligence interface features a view from the camera, a button to capture a photo, and dedicated "Ask" and "Search" buttons. Ask queries ChatGPT, and Search sends an image to Google Search.

Using Visual Intelligence requires taking a photo of whatever you're looking at. You need to snap a photo, which you can do with the Camera Control button, and select an option. It does not work with a live camera view, and you cannot use photos that you took previously.

Get Details About Places

If you're out somewhere and want to get more information about a restaurant or a retail store, click and hold Camera Control, and then click Camera Control again to take a photo or tap the name of the location at the top of the display.

From there, you can see the hours when the business is open, place an order for delivery at relevant locations, view the menu, view offered services, make a reservation, call the business, or visit the location's website.

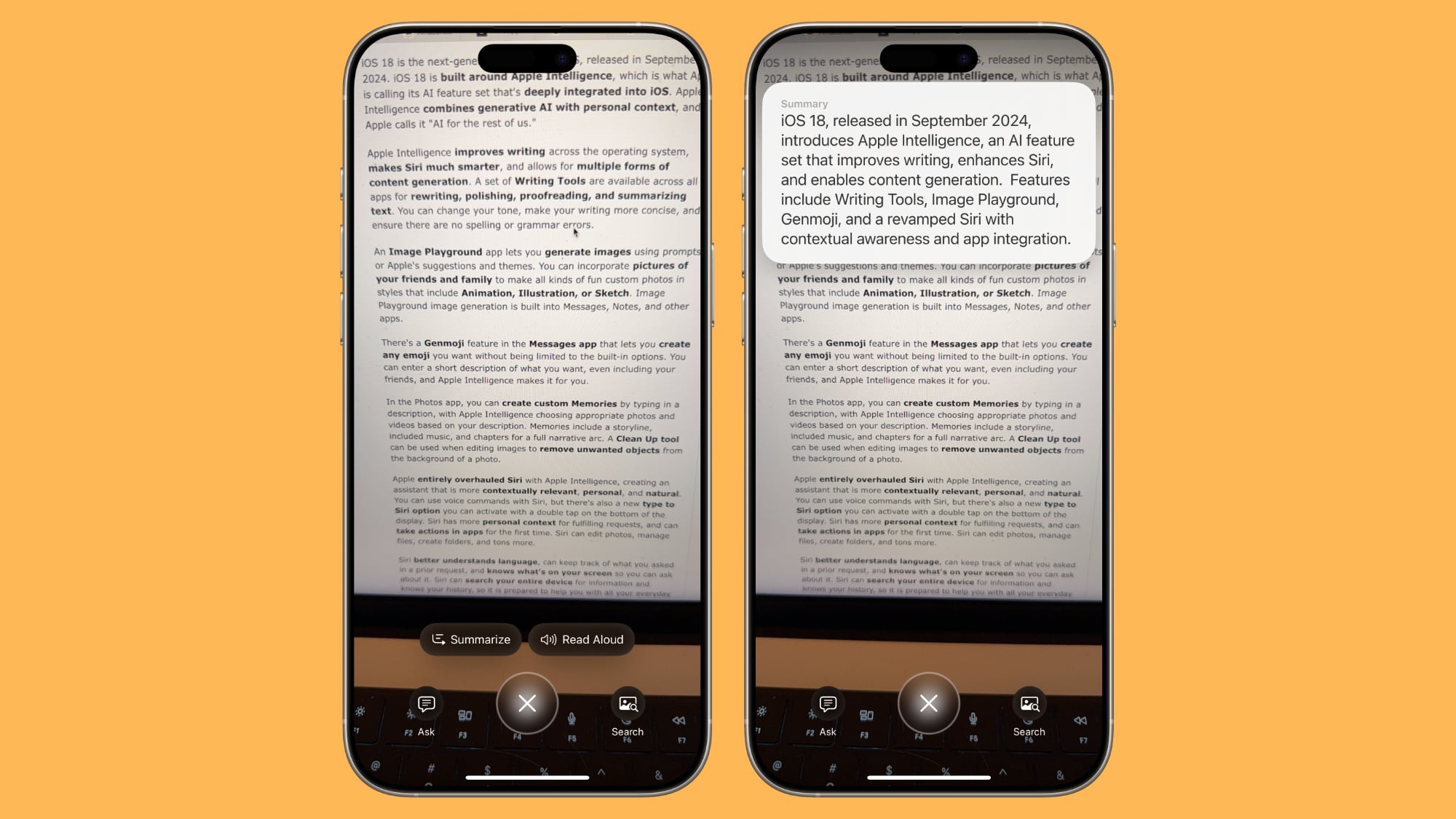

Summarize Text

Take a photo of text from the Visual Intelligence interface. Choose the "Summarize" option to get a summary of what's written.

The Summarize option is useful for long blocks of text, but it is similar to other Apple Intelligence summaries so it is brief and not particularly in-depth.

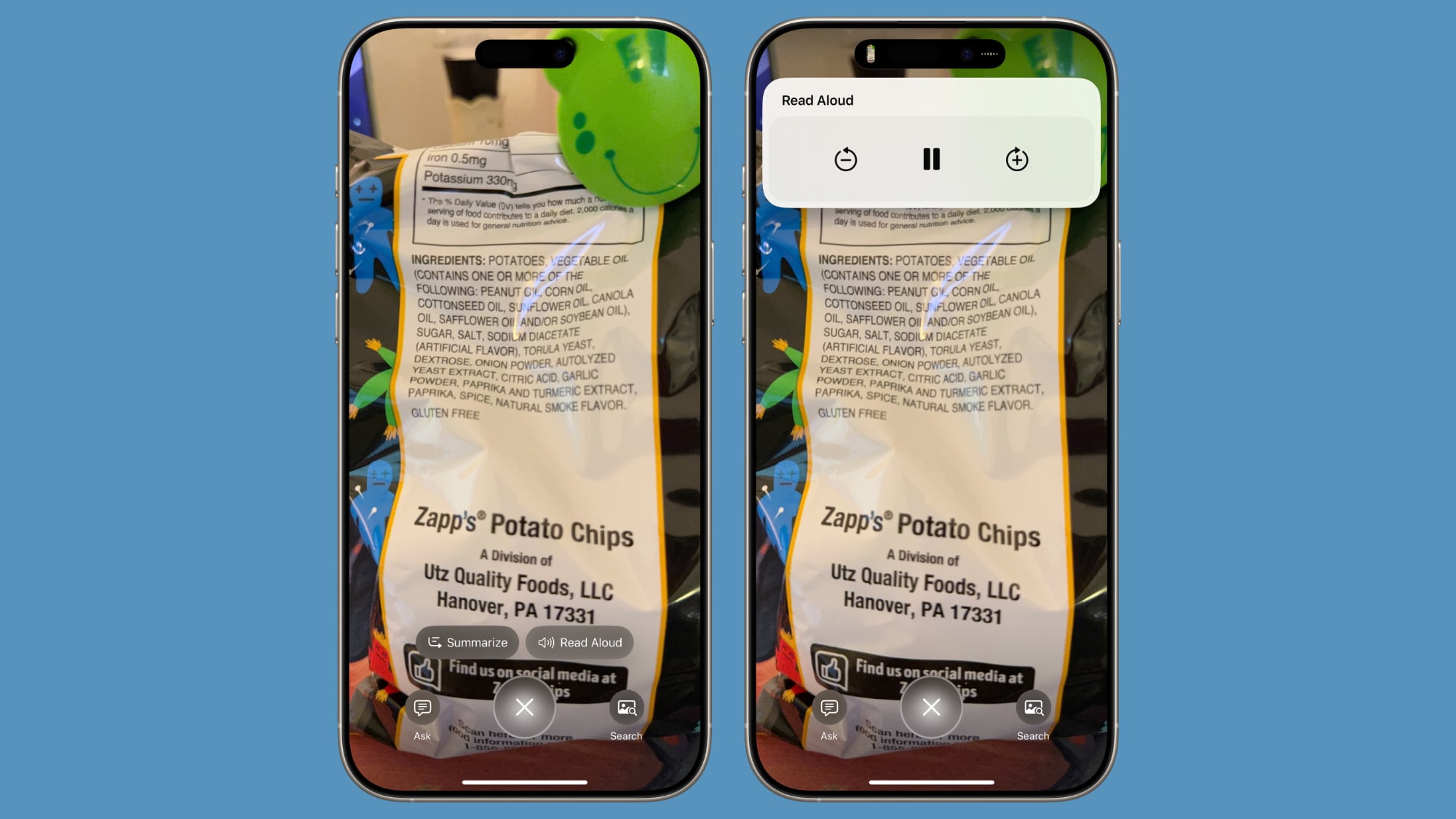

Read Text Out Loud

Whenever you take a Camera Control image of text, there is an option to hear it read aloud. To use this, just tap the "Read Aloud" button at the bottom of the display, and Siri will read it out loud in your selected Siri voice.

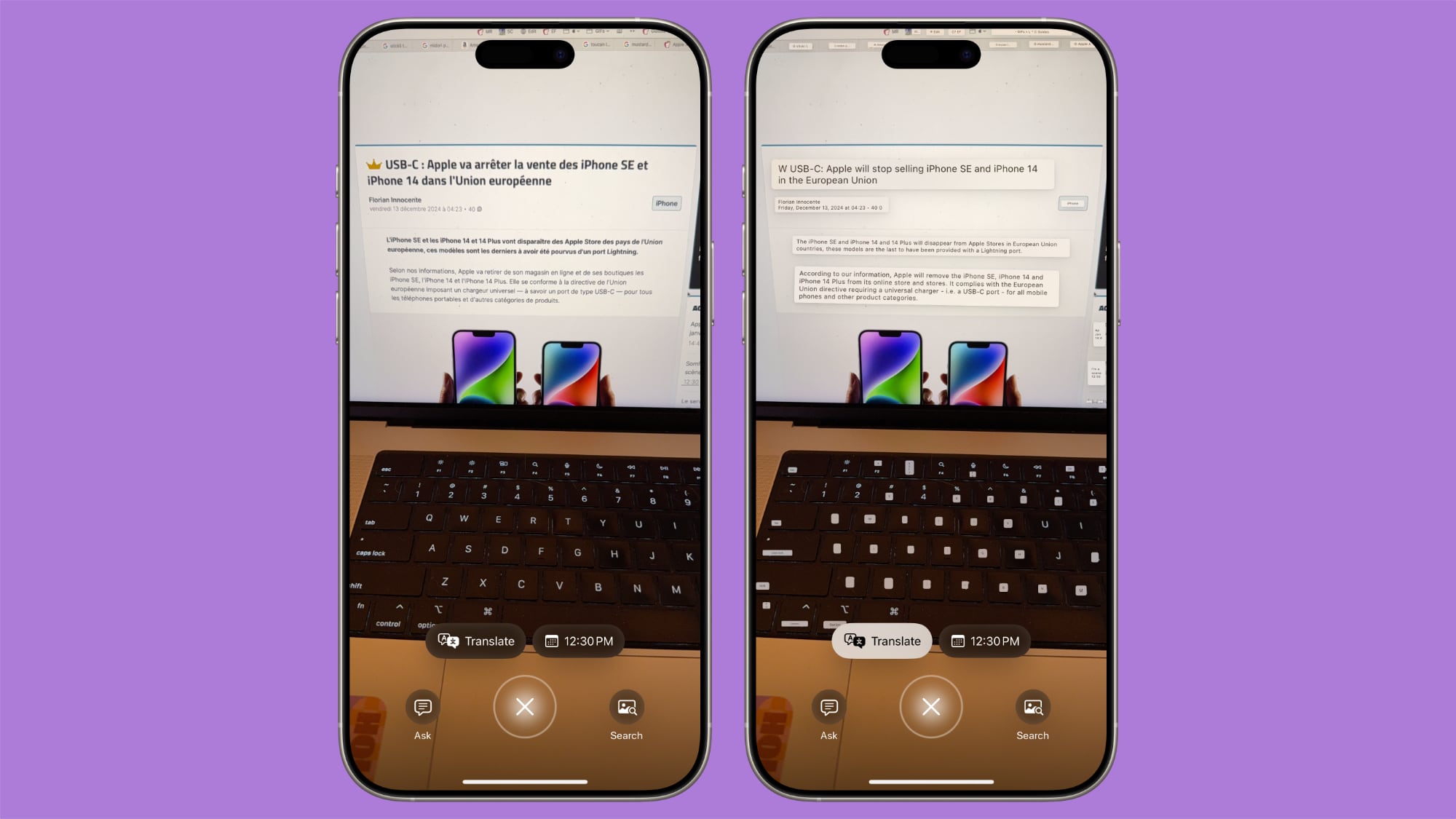

Translate Text

If text that you capture with Visual Intelligence is not in your language (limited to English at this time), you'll see a "Translate" option. You can tap it to get an instant translation.

Go to Website Links

If there's a link in an image that you capture with Visual Intelligence, you'll see a link that you can tap to visit the website.

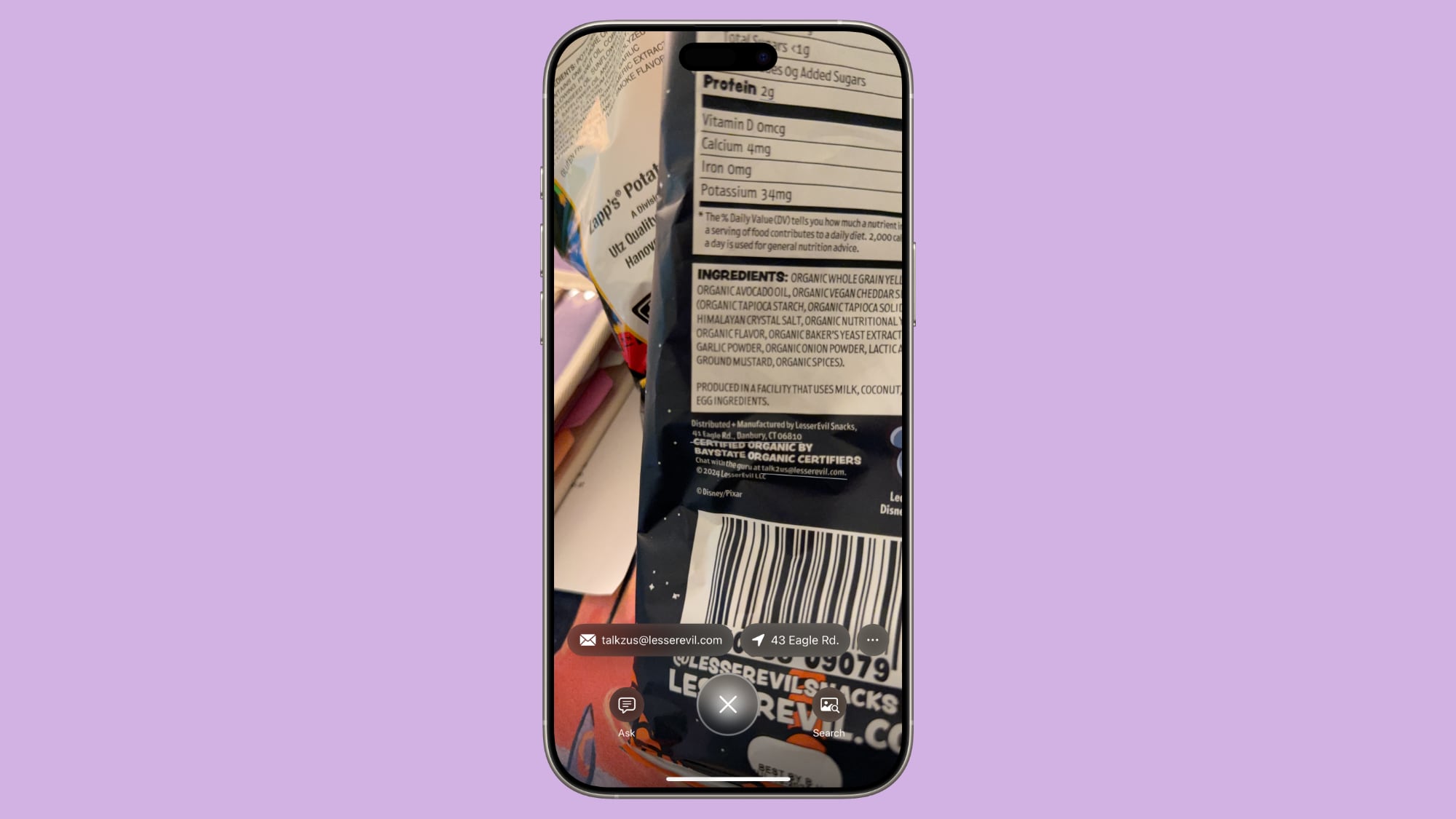

Send Emails and Make Phone Calls

If there is an email address in an image, you can tap it to compose an email in the Mail app. Similarly, if there is a phone number, you'll see an option to call it.

Create a Calendar Event

Using Visual Intelligence on something that has a date will give you an option to add that event to your calendar.

Detect and Save Contact Info

For phone numbers, email addresses, and addresses, Apple says you can add the information to a contact in the Contacts app. You can also open an address in the Maps app.

Scan QR Codes

Visual Intelligence can be used to scan a QR code. With QR codes, you don't actually need to snap an image, you simply need to point the camera at the QR code and then tap the link that pops up.

QR code scanning also works in the Camera app without Visual Intelligence active.

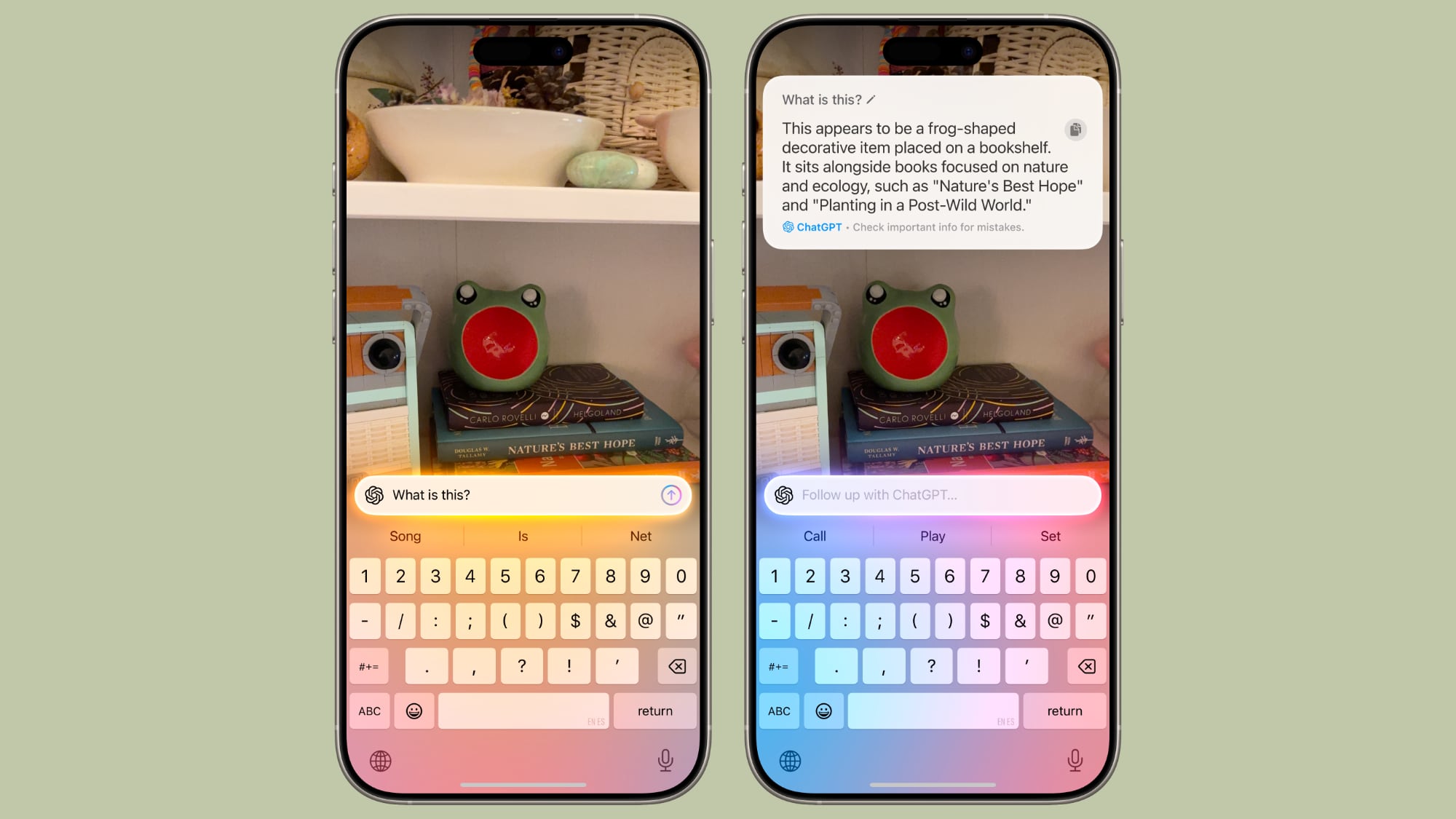

Ask ChatGPT

You can take a photo of anything and tap on the "Ask" option to send it to ChatGPT while also asking a question about it. If you take a picture of an item with Visual Intelligence and want to know what it is, for example, you tap on Ask and then type in "What is this?" to get to a ChatGPT interface.

ChatGPT will respond, and you can type back if you have follow-up questions.

Visual Intelligence uses the ChatGPT Siri integration, which is opt-in. By default, no data is collected, but if you sign in with an OpenAI account, ChatGPT can remember conversations.

Search Google for Items

You can take a picture of any item that you see and tap on the "Search" option to use Google Image Search to find it on the web. This is a feature that's useful for locating items that you might want to buy.

Read More

For more on the features that you get with Apple Intelligence, we have a dedicated Apple Intelligence guide.

Article Link: iOS 18.2: What You Can Do With Visual Intelligence


Visual Intelligence: How to Use It, Features and More

- Thread starter MacRumors


Wow so one redundant button is keeping me from having this on my 15 Pro that has all other Apple Intelligence features? Does Apple have no shame left?

This is extra annoying as it's the only truly useful feature that stands out in the extremely lacklustre Apple Intelligence Features released so far.

So buy an Android and quit being so unhappy

Question: does Apple have no shame left?

Apple: check out these upgrade prices for RAM and SSD

I'm just waiting for a fine woven polishing cloth that costs 100$ but I guess I'll settle on a camera control button.

At least you can correct a mistyped number in the dial pad on any phone, except iPhone!

Absolutely not true. Apparently you don't own an iPhone.

I have a 15 Pro Max, and I'm fine with using that Image Playground thing for a while, until I get bored with it just showing the same few images of my face being too big in the small image and with little background. It'll go the way of Memoji (took me a second to remember what it was called).

Oh, have you tried "Me as an alcoholic" in Image Playground? Lots of fun.

When the Apple tactics are this blatant and this obvious, you know things are (comparatively) bad. Before they actually had genuine bonafide reasons for you to upgrade that made you convince yourself it was worth it (truth be told, it usually was).

This just….it seems either like complete disregard for their customers or “panic mode engaged”.

Apple is no longer making THE best phones, it makes one of the good phones.

And yes, I bought a brand new out of contract iP15 Pro 512GB in May this year and I’m quite happy I didn’t wait for the 16. I’m disappointed by how it feels, software wise coming from Android. It’s sloppy-slow/not snappy and it just feels outdated. I know it’s good, I’m working on learning, getting used to the new system. But….it’s a bit stale. And I also absolutely love my M1 12.9 iPad 512GB and it’s the best purchase I’ve ever made in my entire life….but then again I don’t work on it.

I honestly had to look at the discussion to realise the "Camera Control" thing was limited to 16P models. Sadly, another 15P user here.....it actually makes me feel annoyed that this is "locked in" and the outcome of that is I will keep my 15P longer and consider not taking an iPhone next time.

Not that Tim Apple will care that much.

There’s no good reason whatsoever not to enable this feature on 15 pro phones, feels very cynical.

That said there’s not a single AI feature added in this panicked rollout that I either want or need to use. The whole thing is quite disenchanting tbh. I want to see some genuine innovation, not gimmickry.

Agreed. I bought my 15 Pro specifically because it was going to handle Apple Intelligence. Although a lawyer could successfully argue that ‘Visual Intelligence’ is not the same thing as ‘Apple Intelligence’ so a Genmoji phone it is.

The VI features which we won’t get sound like they might be useful, which is a step above AI (or 🍎I?) but the bar has been set so low I think it’s actually in the basement.

Will "Visual Intelligence" help people understand the difference between an airliner, a star and a drone before they post it to social media?

for anyone feeling left out in the cold, also realize how little of what is being advertised here is new.

i have a 13 pro from 3 years ago. it has on device text detection from imagery. and while its not live in the viewfinder, you can very quickly from that snapped photo:

- translate text (just as advertised above)

- read text aloud (just as advertised above)

- detect website url text (just as advertised above)

- detect calendar events (just as advertised above)

- detect actionable emails and phone numbers and contact information (just as advertised above)

- detect qr codes in the camera app without snapping a photo (this article even points out you can do this without visual intelligence... further underscoring this isn't a new feature)

- ask chatgpt - you're sending data off device either way, so outside of the convenience of triggering it with camera control button, you can always just... launch their app. maybe apple has some supposed extra layer of privacy with their servers, but at the end of the day, i dunno, you're using chatgpt.

- same deal with google lens, outside of a minor convenience over launching a dedicated app, i'm just not sure what the fuss is.

edit: also worth noting, while i can do most of the stuff mentioned above... i rarely do. and it wasn't that it was inconvenient before. it's just that most of these features aren't addressing daily problems. and when it does come in handy, the extra half-step over whatever they've made with 'visual intelligence' doesn't bug me in the least? ymmv of course, but i just can't imagine this being a driver for the average person to run out and replace any device from the last half decade.

Did I say that? When you buy a 2023 Civic and they introduce a new feature in the 2024 Civic - what do you do??

Expecting every new feature in an OS to work on every piece of HW is just not realistic.

If the new feature is a button that heats up the seats faster in the cold and you find out both models have the same seat heating hardware? You bet I expect them to update the 2023 info center to have a software button to do the same thing.

This is the equivalent of preventing something like Spotify on last year's models of phones in CarPlay. It doesn't make any sense except greed.

Couldn’t agree more! I bought it with the intention of having a phone they could introduce new features with adding the buttons via a software update...just as Apple touted back in 2007.

Car analogies continue to be one of the silliest analogies to make, as if legacy car makers' practices in terms of software are worth emulating. BMW would love to charge you $3000 to enable seat warmers in software. Modern car makers like Tesla, Rivian, Lucid, NIO etc, with connected cars now continue to update old models with new software features.

But it's not every piece of hardware, just last year's model at least. There is nothing preventing them from adding this feature to iPhone 15 via a shortcut and with the use of the action button. It is very realistic to expect iOS 18 with all its AI features to work on iPhone 16 and 15. Most of these features are literally on 5 year old Android phones and do not require a special button to use.

It’s almost as if freedom of choice is something Apple actively rejects, and honestly, it’s starting to make me resent the company. I don’t even recognize Apple anymore

Think of it as enlightened despotism, maybe the best form of government if you want uniformity, control of noisy people, harsh treatment of people making a nuisance of themselves, etc.

Apple has always been like this with iOS.

It’s sad Apple continually flakes out on the consumers.

Absolute garbage that this feature "relies" on the Camera Control button. Another stupid excuse to limit software so they can push their new (and also ordinary) new hardware. Apple are cooked.

When you bought your 15Pro, did you do that in anticipation to get this feature?

When I buy a $1000 phone, I expect it to get the newest software updates for at least, you know, more than a few months.
