Single core hurts me on the 7,1, especially compared to my MBP M3. Arming an Instrument channel with a big library-based instrument and performing in Logic Pro is unbearable. AI also doesn’t perform well on the 7,1, where it flies on my MBP M3.

MP All Models Waiting for MacPro 8,1

- Thread starter davidec

I am using browsers ALL day, massive numbers of tabs open, multiple different browsers, virtual desktops as well - and they are all working in a snappy manner.

Oh and a local dev instance of a big enterprise CMS is also running at the same time. Running builds for that is also very fast.

No problems with any of them.

Good to know it's doing well for you.

On my end, I jump between an M1 Max (personal laptop), an M4 Pro (work laptop) and the 7,1 for work, and it's a huge difference, at least on the surface, for single core perf. The 28 core definitely still has a great amount of raw power, especially multi-threaded, and a large amount of memory.

Look we have to be realistic, we are comparing a 5 year old computer with ones that are far more modern.

What were Apple laptops in 2019 doing in comparison? In 2019 you certainly weren't jumping between M4 and M1 Max.

Is it really even appropriate to compare computers across such large time frames?

This is true, it's more like a 6 year old computer.

My overall point was, at least to my eye, the 7,1 is getting long in the tooth, for what I'm doing that is. Even the M1 Max feels less snappy than the M4 Pro and I can't imagine how snappy the M5 Pro/Max/Ultra will be. I may get a Mac Studio M5 Max/Ultra next year to replace the 7,1. For me, the 7,1 has served me well, made me a lot of money and made me productive for years so I'm thankful for it.

Also, when coming from the 120Hz MacBook Pro displays, the Studio Displays I have are also feeling "sluggish" haha, it's hard to explain, you have to use it to see what I mean.

It will be interesting to see what they do with the 9,1, if they do anything with it at all. I really think without PCIe GPU support in M class Macs, for me personally, the Mac Pro serves no purpose.

The title of the thread is off base on the model identifier. There is no 8,1 and there never will be. Or a 9,1. The Intel era is over.

The Mac Pro 2023 model number is: Model Identifier: Mac14,8

Identify your Mac Pro model - Apple Support

Learn all the ways to identify your Mac Pro model.

The Mac Pro is directly coupled to the rest of the Mac line up. There is a generation ( 'year' ) number that precedes the product number for all the Macs in that generation: gen , product

The new MBP 14" with M5

Model Identifier: Mac17,2

Identify your MacBook Pro model - Apple Support

Use this information to find out which MacBook Pro you have, and where it fits in the history of MacBook Pro.

What we would probably see is a 17,x , where 'x' is probably some number after '5'.

It is similar to the scheme they switched to with the operating system, where this Fall all of them are ___26 . Next year it will be ___27 , etc. Only the model numbers are more 'invisible' to the general public and aren't tracking calendar years.
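To make the 'gen , product' split concrete, here is a minimal Python sketch that pulls an identifier such as Mac14,8 apart. The function and field names are hypothetical and this is plain string parsing, not an Apple API; it simply assumes an identifier is letters, a number, a comma, then a number.

```python
import re
from typing import NamedTuple


class ModelId(NamedTuple):
    family: str      # e.g. "Mac" or, in the Intel era, "MacPro"
    generation: int  # the shared 'gen' number
    product: int     # the per-product number within that generation


# Assumed shape: letters, digits, a comma, digits (e.g. "Mac14,8").
_PATTERN = re.compile(r"^([A-Za-z]+)(\d+),(\d+)$")


def parse_model_identifier(identifier: str) -> ModelId:
    """Split a model identifier into family, generation and product."""
    match = _PATTERN.match(identifier.strip())
    if match is None:
        raise ValueError(f"unrecognised model identifier: {identifier!r}")
    return ModelId(match.group(1), int(match.group(2)), int(match.group(3)))


if __name__ == "__main__":
    for ident in ("MacPro7,1", "Mac14,8", "Mac17,2"):
        print(ident, "->", parse_model_identifier(ident))
```

The old "MacPro7,1" style carried the product family in the name, while the newer identifiers only carry the generation and a product number.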

P.S. the Mac Studio 2025 (Model Identifier: Mac16,9 )

Identify your Mac Studio model - Apple Support

Use this information to find out how to identify your Mac Studio.

M5 released and now we ask the question. Are we getting a MacPro M5 based machine early 2026? How long do we wait before we abandon ship and purchase PCIe expansions and move to Mac Studio?

A 2-2.5 year cadence would be 'fast' relative to the 6 and 4 year track record over the last decade.

A Studio plus PCI-e enclosure isn't going to work if you need better than x4 PCI-e v4 bandwidth ( 64 Gb/s ). So it depends upon the set of expansion cards you want to run. For legacy ( pre-2018 or pre-2016 era ) cards, TBv5 will likely provide more coverage.

However, if it is forward-looking storage I/O requirements, then not so much ( e.g., a future x16 carrier card holding 2-4+ SSDs ).
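For anyone who wants to check the 64 Gb/s figure, here is a rough Python sketch of the link arithmetic. It uses the published per-lane transfer rates for PCI-e v3/v4/v5 and their 128b/130b line encoding; the function name is made up, and it ignores protocol overhead and anything Thunderbolt-specific, so treat the results as upper bounds.

```python
# Per-lane raw transfer rate in GT/s for each PCI-e generation.
GT_PER_SEC = {3: 8.0, 4: 16.0, 5: 32.0}

# PCI-e v3 and later use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(generation: int, lanes: int, after_encoding: bool = True) -> float:
    """Return link bandwidth in Gb/s, one direction, before protocol overhead."""
    raw = GT_PER_SEC[generation] * lanes
    return raw * ENCODING_EFFICIENCY if after_encoding else raw


if __name__ == "__main__":
    for gen, lanes in ((4, 4), (4, 16), (5, 16)):
        raw = pcie_gbps(gen, lanes, after_encoding=False)
        usable = pcie_gbps(gen, lanes)
        print(f"v{gen} x{lanes}: {raw:.0f} Gb/s raw, "
              f"{usable:.1f} Gb/s after encoding ({usable / 8:.1f} GB/s)")
```

An x16 v4 slot has four times the raw bandwidth of the x4 v4 link, which is the gap a forward-looking x16 storage card would run into.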

I'd like to think there's an update due in the next few months but given I am on a maxed out 2019 Intel and don't want to spend $ on the M2 I'm in limbo.

Curious for everyone's thoughts?

Unless Apple radically changes how they are doing multiple die packages, there is a good chance that "Ultra" will only come every other generation. I suspect the M2 Ultra was more a fluke, because the M1 didn't work out. ( they could have hit their two year deadline with that. )

So Ultra M3 , M5 , M7

M3 Ultra showed up too late and got 'entangled' in TSMC N3B 'drama'.

Early 2026 is highly doubtful. The MBP 14/16" Pro/Max models probably need to roll out first. If the Max is selling out then there would be little left for the upper Mac Studio or the Mac Pro. ( whether it is the same strategy of two Max dies glued together or the Max gets some deeper functional chiplet breakdown. ) The Mini and "Mini Pro" could roll out with those laptops.

The Studio and Mac Pro could come after those. Depending upon how production constrained they are, the Mac Pro could be decoupled from that. By the end of June we will probably have substantively more information.

Another point of 'distraction' is how much effort Apple put into their "AI Server Units".

Apple begins shipping AI servers from Houston factory

Apple has started shipping artificial intelligence servers built in a factory in Houston, it said on Thursday, part of the company's plans to invest $600 billion in the U.S. in the next few years.

A rack unit with a screen on the back and some custom motherboard , etc. etc. Decent chance the server and Mac Pro development teams share a fixed amount of resources ( not 'walking and chewing gum' at the same time ). How 'new' that is ( screwdriver assembly in China switching to screwdriver assembly in TX ) may delay the Mac Pro a bit also.

These are shipping, so if Apple single tracked the development ( server first , Mac Pro second ) then probably not early 2026.

I believe there is a good chance that a Mac Pro is coming.

Why?

Mac Studio M3 Ultra has already launched.

I suspect Apple didn't 'fix' the PCI-e backhaul problem with the M3 Ultra. That wouldn't be exposed by a Mac Studio because that part isn't used in that product. Also appears Apple did a Max-no-ultrafusion die and another Max-with-Ultra for the Studio. That also likely makes that variant a 'problem child'.

There is a limited chance that Apple makes the Mac Pro 'eat' the M3 Ultra while the Mac Studio moves onto M5 Ultra. However, that seems like a strategy to kill the Mac Pro longer term. Decent chance the M5 Max will walk over it in most performance tasks.

Reason?

A new Pro XDR Display seems to be coming soon. In the past, Apple presented their top tier displays alongside a Mac Pro. And I assume that both will have Thunderbolt 5, without the need for Display Stream Compression (DSC).

Apple's life cycles on 'large' displays seem to run in the 6-8 year range. The Mac Pro really cannot afford to go to sleep that long and stay competitive. A 2-3 year cycle would eventually sync up with the screen, but they aren't (and shouldn't be) very tightly coupled. If they happen to line up ... then OK. However, if one is ready to go and the other isn't, then ship. ( Either the display is 'way old' and losing ground in competition, or effectively vice versa: the Mac Pro is losing competitive ground. )

Even though they share the same "Studio" adjective, the Mac and Display don't 'have to' line up. It wouldn't be too surprising if Apple put the two displays onto the same cycle ( 'short' cycle the Studio Display to align it ).

I am not going to speculate on the specifics of the hardware. Because I am not in the Pro market.

I am just an enthusiast, who loves the Mac Pro 7,1. And I would like to avoid the “intelligence” stuff as much as possible.

The Intelligence stuff is going to be in the product. The NPU is being made a consistent foundational feature in every Mac. ( If it is a constant, useful facility, folks will use it. ) I suspect the NPU will be used more on what Apple used to call "Machine Learning" (ML) stuff: less LLM hype train and more general utility.

The "Matrix Mult" stuff going into the GPUs in the M5 generation... same thing. It will span all the M5 iterations ( Pro , Max , Ultra ). Apple isn't going to do substantively different GPU cores for different Macs. How much cache and how many cores would vary. The basic die building blocks would be the same. And again, that isn't just for LLM stuff. AI/ML in rendering speed-ups is here to stay. That is a different dimension than generating more AI video slop for the Internet ( although it can be used for that also ).

Apple needs graphics code that is portable from the iPhone up through the Mac Pro to max out the ecosystem and synergies aspects.

The margins must be paper thin on the Mac Pro, they haven't done anything for it in a while.

The margins on the Mac Pro are likely high, not low. It very likely has a 'low volume' tax on it. So it doesn't matter if it sells in low volume, just as long as it is relatively consistent (and Apple matches production with demand). It won't be very surprising if the next Mac Pro's base entry price goes up a bit. ( if only to cover component inflation/tariff/muddled-context costs. )

The M2 Ultra is an even more 'paid for' SoC design than the M3 Ultra is. It is on a pre-N3B node... again substantially cheaper. I suspect the multiple chip packaging is less expensive also. ( the M3 Max grew big enough that packaging was a bigger cost. )

Trying to churn out a new Mac Pro every 12 months would incur higher R&D costs. It would need a new motherboard every 12 months as opposed to every 24-30 months. Apple could time-slice the team into covering something else during gaps ( updates to the server boards ... which also do not need an every 12 month churn rate at all either ).

A hefty chunk of the legacy Mac Pro market is covered by the MBP 16" and Mini Pro and Mac Studio now. There is also a segment that they are not targeting (e.g., the hyper modular crowd of dGPU cards). There is a much smaller and more specific demographic that Apple is targeting. If those folks upgrade every 3-8 years then the number each year is smaller. Aggregating folks into bigger groups would be necessary to get substantive volume.

However, too long of a cycle and it runs into major conflicts with Apple's policy of no future product guidance. 5-6 years is way too long and customer planning gets too far into the speculative range. 3-4 years also has problems. Moore's Law may be slowing, but going more than a node cycle between updates is probably an uncompetitive mode.

And frankly the Studio Ultra model extremely likely needs something to help with economies of scale. The Mac Pro can't drift toooooo far off the Mac Studio cycle. Spreading that Ultra's development costs over more units is necessary.

That's why I'm so elated, even past a 6 year old computer.

A decade old computer, OH YEAH!

5,1 decked out of course.

View attachment 2574890

Man I am jealous. Stuff I do is CPU bound. My multithread on a dual x5690 5,1 is barely better than a M1 MBA

snapshot of scores ranking tables of today.

AMD Radeon Pro 5700XT _____ 75247

M5 _____ 75555

AMD Radeon Pro W5700X _____ 94661 (25% faster than M5, M5 Pro probably has twice the GPU core count)

...

M4 Max _____ 179489 (which tops the 5,1's GPU score)

M2 Ultra _____ 209797

M3 Ultra _____ 229126

AMD Radeon Pro 6950XT _____ 238269

Metal Benchmarks - Geekbench

There are no more AMD (or Nvidia cards) coming. macOS on Intel is ending (e.g., no new cards coming). [ AMD is scaling back on RDNA 1 and RDNA 2 in Windows also. Even if macOS on Intel was going to continue, new AMD support would be highly dubious.

https://www.tomshardware.com/pc-com...d-handhelds-will-still-work-with-future-games ]

2x the M4 Max score would walk over that top 6950XT score. The plain M5 is doing better than some cards folks put in MP 2019s as a more affordable upgrade (open market 5700), and the M5 Pro will likely pass the card Apple said was the most used card in a MP: the W5700X ( yeah, they are probably not counting very late aftermarket cards acquired used ).

The 2018-2020 era Macs 'bought' the 5,1 the option of an AMD 6000 series card. It didn't earn it on its own. No new cards means the modularity doesn't buy much on macOS.

The Metal score measures really nothing of the 5,1's actual core hardware. It is a bit of a misdirection to label what is scoring the points there as a '5,1'. What you have there is a microbenchmark which is offloaded to another piece of hardware to actually run. The 5,1 is just used to report the results.
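The comparisons in this post can be checked with a few lines of Python. This is only a sketch over the snapshot scores quoted above; the helper names are made up, and the "2x the M4 Max" figure assumes perfect scaling, which is a back-of-the-envelope assumption and not a measured result.

```python
# Geekbench Metal scores as quoted in the snapshot above.
METAL_SCORES = {
    "AMD Radeon Pro 5700XT": 75247,
    "M5": 75555,
    "AMD Radeon Pro W5700X": 94661,
    "M4 Max": 179489,
    "M2 Ultra": 209797,
    "M3 Ultra": 229126,
    "AMD Radeon Pro 6950XT": 238269,
}


def percent_faster(a: str, b: str) -> float:
    """How much higher a's score is than b's, in percent."""
    return (METAL_SCORES[a] / METAL_SCORES[b] - 1) * 100


def doubled(name: str) -> int:
    """Hypothetical score for two of the named chip with perfect scaling."""
    return 2 * METAL_SCORES[name]


if __name__ == "__main__":
    print(f"W5700X vs M5: {percent_faster('AMD Radeon Pro W5700X', 'M5'):.1f}% faster")
    print(f"2x M4 Max (assumed perfect scaling): {doubled('M4 Max')}")
    print(f"Top card in the list: {METAL_SCORES['AMD Radeon Pro 6950XT']}")
```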

For the price, it lets me achieve my goals: thousands of radio-ready and movie tracks.

Surely a sensible buy: I bought the 5,1 used for a dirt-cheap price, and even with upgrades it was still way cheaper and gets any job done.

Analogy.

When I have clients listen to the difference between a PCM90 and a Bricasti reverb tail, they absolutely can't tell the difference.

192 kHz or 96 kHz: no one can tell the difference, except perhaps a great engineer who knows the gear well.

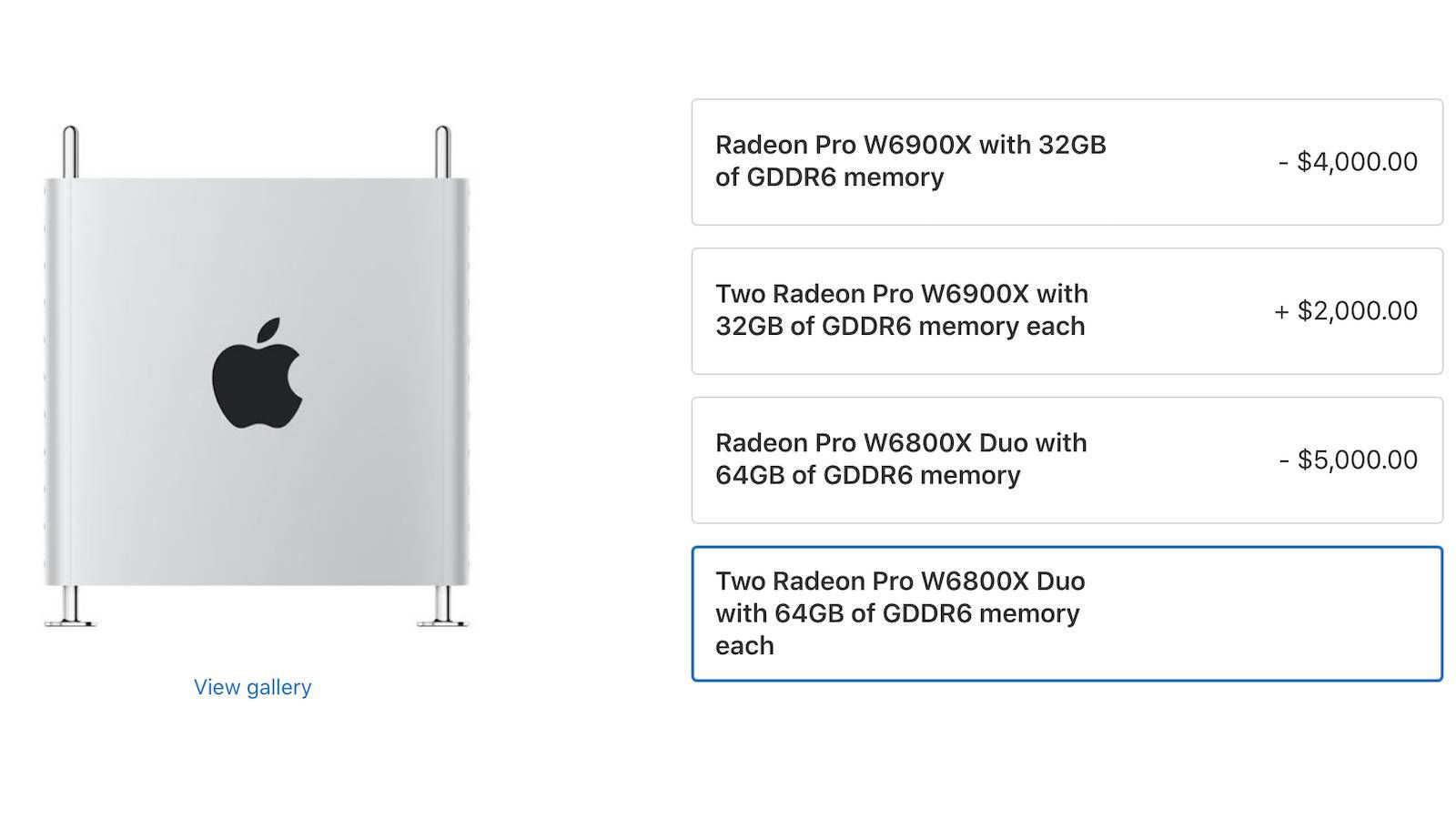

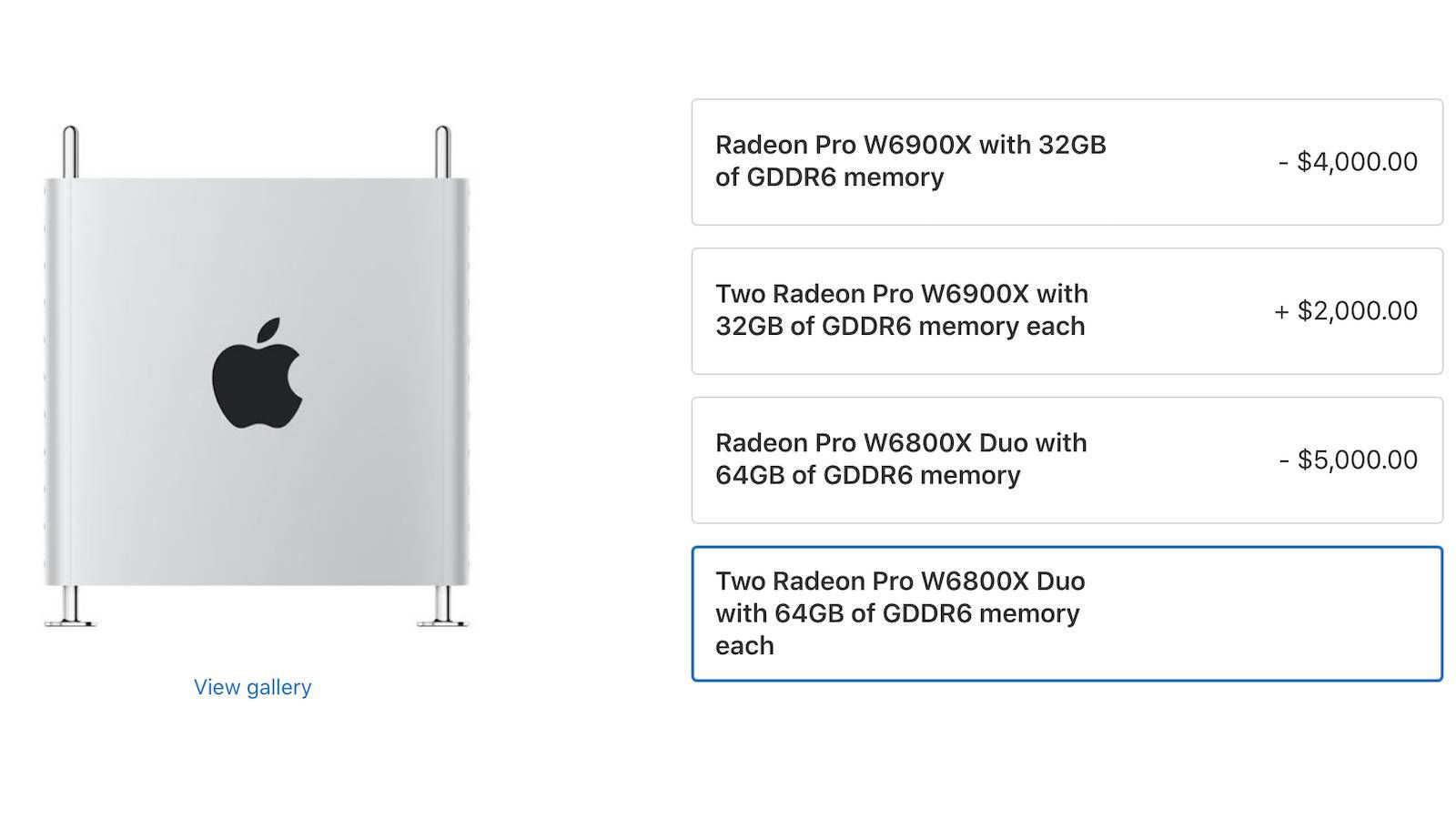

What drives me insane, even if Apple does come out with a new Mac Pro, how expensive is the top end GPU option going to be? We can get a 5090 for $2000, the world's fastest single card consumer GPU, but with Apple, you can't get near anywhere that performance, and it will probably cost $3000 for an option that is half the perf.

Apple needs to step up their GPU game especially on their "production ready gear" if they do decide to do another Mac Pro.

When was Apple aiming at consumer GPUs? ( 'Pro' system but really primarily after 'consumers' ???? )

" ...

The new options come at steep prices, ranging from $2,400 for a single W6800X module to $11,600 for two W6900X modules. ..."

Apple Introduces New High-End Graphics Options for Mac Pro

Apple today began offering new high-end graphics upgrade options for both the tower and rack versions of the Mac Pro desktop computer. This comes on the same day that Apple started selling the Magic Keyboard with Touch ID on a standalone basis. As noted by CNN Underscored's Jake Krol, the Mac...

The AMD x800 solution cost more than the Nvidia x090 solution you are citing. The '9' series was $5,000 (apiece).

Assign that 5090 a 42GB sized problem and see if it is still "world's fastest".

And RTX PRO 5000 will set you back $4-5K ..... (lists at $6K )

PNY NVIDIA Quadro RTX PRO 5000 Graphic Card - 48 GB GDDR7 - Full-height - VCNRTXPRO5000B-PB - Graphic Cards - CDW.com

Buy a PNY NVIDIA Quadro RTX PRO 5000 Graphic Card - 48 GB GDDR7 - Full-height at CDW.com

"I just want an Intel box that can native boot into Windows to play my consumer games on a 3090" ... Apple really wasn't primarily intending to sell that in 2019 either. It 'happened to work", but extremely likely really wasn't a primary design driver for the system.

If you can find a consumer GPU card with an effective VRAM the same size as a Mac Pro for $2000 then you got a really good deal.

Apple needs to step up their GPU game especially on their "production ready gear" if they do decide to do another Mac Pro.

Back to post #36 above, the plain M5 is up in the MetalCompute range of the W5700X . The notion that Apple's GPUs are not making very substantive progress each generation does not really have any empirical backup. The 'x090' is a bogeyman that is trotted out: a solution that will burn any amount of power just to finish across the 'king of the mountain' line first at any cost. It is an outlier that Nvidia would love for competitors to chase down a deep rabbit hole with diminishing returns.

In Apple's base design practice, the memory controllers are coupled to the GPU function units ( containing the cores ). More function units leads directly to more memory controllers. More memory controllers leads directly to a higher minimum RAM. More RAM ( at Apple pricing ) is more cost. The consumer 5090 actually taps out on RAM capacity.

Even if Apple slapped four Max-class dies together , who is going to pay for that? For the current gen technology, the base RAM capacity is WAY higher than a 5090. So it would be an Apples vs Oranges comparison.

It is not really 'performance' that is the core issue here. It is 'price'. The 'consumer 5090' argument ignores the issue that Nvidia probably needs the RTX Pro pricing to subsidize the 5090 development. Apple is paying from the other end of the spectrum. It isn't the outermost fringe of the legacy Mac user base that they are targeting. Not going to cover the 4TB fringe. Not going to cover the > 64 CPU core fringe. Not going to cover the max consumer display frame rate fringe.

What the whole Mac (and iPad Pro) ecosystem performance range covers is growing. A '5090' point in that 2-D range is not some magic cookie spot that explodes the number of overall systems sold.

Similar issues are present on those fringes not covered. The 4TB RAM aspect of the Xeon W-3000 series was more so paid for by different Xeon SP class ( Xeon Gold x3xx , Silver x3xx ) offerings. Ditto for the CPU counts. The Mac Pro sales weren't paying for that; it was completely different systems outside the Apple ecosystem.

A few things would help the next Mac Pro get more coverage.

1. Pass-thru , fixed assignment GPU cards. Assign an Nvidia 5090 to a Windows 11 VM and mostly problem solved with the "part time game on Windows" crowd. Would allow more experiments to be run on the hardware with other OS that some folks in that fringe area want to play around in.

2. More modular chiplets might help incrementally. Double GPU cores without doubling CPU cores. Or vice versa ( although that might have issues with memory bandwidth ). Probably still doesn't work for quadrupling GPU cores. Something more than double the Max-class GPU core count might help close the 'gap' a bit. [ The problem: if they are not reusing chiplets in multiple configurations and in "large enough" deployments, then they are not really saving money. ]

3. (coupled to 1 or 2) Give back some more AUX power to add-in cards. If the SoC isn't going 'Extreme' then they don't really need to reserve that power.

4. Add back ECC. No ECC is losing some folks also. Triple digit GB RAM range and no error checking is ignoring data integrity.

P.S. another 'no brainer' update would be PCI-e v5 backhaul. The 2023 version is less competitive in bandwidth than it is in the GPU space. If Apple continues to slack on that, then they should just stop.

1. Pass-thru , fixed assignment GPU cards. Assign an Nvidia 5090 to a Windows 11 VM and mostly problem solved with the "part time game on Windows" crowd. Would allow more experiments to be run on the hardware with other OS that some folks in that fringe area want to play around in.

Windows on ARM is basically DOA, especially for gaming. Unless this changes, and/or unless x86_64 emulation gets really good, really quickly and/or Apple goes back to Intel for some reason, this type of machine would be a non-starter for gamers.

What drives me insane, even if Apple does come out with a new Mac Pro, how expensive is the top end GPU option going to be?

You can rest easy as it won’t have a GPU of any sort, not as we are used to and certainly not to take on say 4x Nvidia A6000 as you can run in some PC workstations.

There are all sorts of excuses for why Apple doesn’t need to compete, or they are only doing efficient performance, or GPU users are cashed up hobbyists or that GPUs for PCs are not fairly priced or this or that or something else. Oh and the other thing written was that nobody needs triple or four digit ECC RAM levels, and besides, Apple doesn’t want those users anyway, and it can’t add ECC RAM and it won’t… Just like it couldn’t ever add a discrete GPU because that was completely impossible.

Shame that Apple has stepped down.

My point was that Apple has shifted gears with SoC and has changed their approach completely by getting rid of the decoupling of the GPU from the CPU.

And production folks, especially 3D artists, will need fast GPUs like the 5090 to do any real work. Those folks have moved on to Windows and that's my biggest concern.

Having a low powered M5 that hits 5700 GPU levels (a 6-7 year old GPU btw) is great for mobile, but not for desktop.

Are you not understanding my point?

Well we pretty much know that a non-Apple GPU will never make it back into a Mac ever again, and the 2023 Mac Pro is the sign that won't change.

They are truly behind in raw GPU performance by many years, even by AMD standards. If you take out AI cores, the Apple GPUs, especially the mid range ones are just "ok" and not much better than iGPUs from Intel. It's great for laptops and long lasting devices that use battery, but not powerful desktops like the Mac Pro. That's why Apple is stuck in this weird place with the MP line.

I know many 3d artists who gave up on Apple many moons ago, but production folks (editors, music producers, designers, and artists in general) are still on Macs because they are not super GPU dependent.

Strong GPU perf is not only for gaming. Tools like Redshift and Octane and even built in tools for Blender are used every day for high-end work.

But as you said, Apple probably just doesn't care about the niche 3d market anyway.

My point was that Apple has shifted gears with SoC

It's great for laptops and long lasting devices that use battery

I'm so sick of the Performance Per Watt™ argument. Apple Silicon has been a double-edged sword - I love my M1 Air. But the vertical integration has made them so much money that they have no desire to partner with a GPU mfg or to develop a true Power Mac chip. It's cheaper to pay for skewed benchmarks from the influencer crowd and brag about memory bandwidth that's only impressive on the higher-end chips and only compared to DDR5 - nowhere near as high as modern VRAM. And they love an excuse for that RAM tax. And make sure you get enough GPU cores because you can't upgrade that anymore. Ugh.

A few cherrypicked benchmarks and the Apple reviewer (cough influencer) crowd can sort out the shortfalls on GPU performance.

Apple has become BOSE - overhyped mediocrity that insecure middle income MTV Cribs clichés use in an attempt to flaunt their fabulous wealth.

Shame that Apple has stepped down.

I just think they also streamlined their whole Mac lineup to use the same chips all over...and as mentioned earlier, Mac Pros are so niche that they don't make them much money, so it wouldn't make sense for them to change the way their graphics subsystem works. They used to use muxing when they were on Intel to switch between iGPU and GPU or full GPU, but now it's just Apple silicon.

So 2026 according to Gurman and potentially WWDC?

M5 Ultra Chip Coming to Mac Studio in 2026

Apple doesn't release an "Ultra" variant for every Apple silicon chip, but the company is planning to debut an M5 Ultra chip in 2026, reports Bloomberg. The M5 Ultra is slated for the Mac Studio, and it's also likely that Apple will use it in a Mac Pro update. There's no word on when the M5...

My point was that Apple has shifted gears with SoC and has changed their approach completely by getting rid of the decoupling of the GPU from the CPU.

And production folks, especially 3D artists, will need fast GPUs like the 5090 to do any real work. Those folks have moved on to Windows and that's my biggest concern.

This is like the arguments about how folks are not real professional photographers unless they have a Hasselblad medium format or a Canon EOS R3 or Nikon D6 camera. The 'tool' defines the 'real work', as opposed to the skill brought to the tool.

Also reminiscent of the discussions in this Mac Pro forum in 2013 of how the single CPU of the MP 2013 was a huge mistake because rendering graphics on a CPU was the path to the future (as opposed to being a constraint of legacy software).

Having a low powered M5 that hits 5700 GPU levels (a 6-7 year old GPU btw) is great for mobile, but not for desktop.

The M5 has real benchmarks. For example, Blender on GPUs:

Blender - Open Data

Blender Open Data is a platform to collect, display and query the results of hardware and software performance tests - provided by the public.

If you narrow the search to 'Apple':

" ...

| Device | Score | Number of benchmarks |
| --- | --- | --- |
| Apple M5 (GPU - 10 cores) | 1734.16 | 11 |
| Apple M1 Ultra (GPU - 64 cores) | 1675.85 | 3 |

..."

Apple has made no progress? The M5 is turning in a score higher than the M1 Ultra.

I presumed that folks could do some straightforward math, but let's walk through this. 64 cores for the M1 Ultra. Only 10 cores for the M5 (and it turns in a higher score). There are 6.4 times as many cores on the Ultra. "Back of the envelope", just go with perfect scaling: 6.4 * 1,734 => 11,097

Flip back to the chart with no filter... Nvidia 5080 ... 9,162 (the number of benchmarks for the 5080 is also 612 ... relatively a pretty high number on that chart).

The Nvidia 5090 ... 14,931. That is 34% bigger than the estimate above. But ...

the M3 Ultra has 80 cores. Scaling the M5 score to 80 cores gives an estimate of 13,872. The 5090 is only 7.6% bigger than that. 7.6% is supposed to be a huge gap???

The Nvidia RTX Pro 6000 Workstation is 16,557 .. which is about 11% bigger than the 5090. Why isn't the Pro 6000 the stalking horse if absolute maximum performance is so hugely, critically necessary for any decent real work getting done?

The 5070 Ti also has a relatively high number of benchmark entries. The 5090 isn't the 'whole' market for real world work. It likely isn't even the majority.
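For anyone who wants to rerun the envelope math above, here is a rough sketch. It only uses the scores quoted in this thread and assumes perfect linear scaling with GPU core count, which real chips will not hit. The function names are made up for illustration, and it uses the unrounded 1734.16 score, so the estimates land about one point higher than the rounded figures in the post.

```python
# Back-of-the-envelope scaling of the M5 Blender score.
# All scores are the Blender Open Data figures quoted in this thread.
# ASSUMPTION: performance scales perfectly linearly with GPU core count.

M5_SCORE = 1734.16   # Apple M5 (GPU - 10 cores)
M5_CORES = 10
RTX_5080 = 9162
RTX_5090 = 14931
RTX_PRO_6000 = 16557


def scaled_estimate(cores: int) -> float:
    """Scale the 10-core M5 score to a hypothetical part with `cores` GPU cores."""
    return M5_SCORE * cores / M5_CORES


def pct_bigger(a: float, b: float) -> float:
    """Return how much bigger `a` is than `b`, in percent."""
    return (a / b - 1.0) * 100.0


est_64 = scaled_estimate(64)  # same core count as the M1 Ultra
est_80 = scaled_estimate(80)  # same core count as the M3 Ultra

print(f"64-core estimate: {est_64:,.0f}")
print(f"80-core estimate: {est_80:,.0f}")
print(f"5090 vs 64-core estimate: {pct_bigger(RTX_5090, est_64):.1f}% bigger")
print(f"5090 vs 80-core estimate: {pct_bigger(RTX_5090, est_80):.1f}% bigger")
print(f"Pro 6000 vs 5090: {pct_bigger(RTX_PRO_6000, RTX_5090):.1f}% bigger")
```

Swap in a different per-core efficiency factor (say 0.85 instead of 1.0) if you want a less optimistic estimate; the point is only that the gap shrinks a lot once core counts are comparable.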

Are you not understanding my point?

The point that Apple is nowhere near 5090 performance? No, I don't understand that. It is very likely not true. Is it shipping this holiday season? No. Have they finished working on it? Probably yes at the hardware level. (Software probably only needs more time.)

That folks in these forums often mask complaints about price with talk of maximum performance? I understand that also.

Apple made some trade-offs on time to market versus the benefits gained by coupling the CPU+GPU. Are they 'doomed' to be hopelessly behind forever? No. Apple and Nvidia are on the same slowing Moore's Law curve. Nvidia isn't going to be able to make reticle-busting, ever-bigger dies forever. Apple adds hardware ray tracing and they catch up. Apple adds more matmul/tensor/AI into the GPU cores and they catch up.

Apple has a bigger 'trust' problem than they have a 'hardware design' problem. The Mac Pro has irregular release dates. If there are 2-3 GPU vendors and modular slots, then end users can take more control of when upgrades come. But that is mainly about control, not root cause performance.

Apple has made more GPU progress in 5 years than AMD has (does AMD have something that is within 8% of a 5090 on ray tracing?). One of the factors in the fast rate of improvement is that they minimized the distractions. Intel bungled their entry into dGPU competition in part by trying to cater to the "make everything for everybody" market.

The folks who prioritize modularity and control over all other factors aren't going to be Mac customers. Mac on Intel was not Apple's way of catering specifically to those folks. It was more of a 'happens to work' thing.

Then there are the folks who have very deep Nvidia software entrenchment. Apple really walked away from those folks back in the macOS on Intel era. That isn't a 'new' hardware change in strategy.

Windows on ARM is basically DOA, especially for gaming. Unless this changes, and/or unless x86_64 emulation gets really good, really quickly and/or Apple goes back to Intel for some reason, this type of machine would be a non-starter for gamers.

High end gaming probably wasn't the best example. (A Linux VM with a tagged compute accelerator is likely more of a short-term option.) MS emulation is aimed more at legacy flexibility than at maximum speed (e.g. it allows an ARM app with x86 plug-ins, stuff that Apple just bans and moves on).

Windows on ARM is 'slow evolving', not dead. 35+% of folks still being on Windows 10 is just as 'slow motion'. Windows as a whole has a slow motion problem.

Windows on ARM is more limited on gaming because pragmatically there is only a Qualcomm GPU that has been the target. MediaTek/Nvidia N1/N1X/GB10 should very substantively change that once they get released. It looks like the Intel/Nvidia deal is about the same infrastructure as the MediaTek/Nvidia one. It wouldn't be surprising if that became the same graphics chiplet used for both product segments.

There hasn't been a high volume 'desktop' ARM chip with slots. The N1X (or N2X if it gets delayed long enough waiting on Windows) could be one: the DGX Spark in a better container, with no ConnectX networking, to lower costs and free up expansion.

Qualcomm's and Nvidia's combined investments probably will keep that effort moving forward.

Part of the Mac Pro's problem now is that it is aiming more at the past. It seems as though Apple is primarily concerned with the legacy, high-sunk-cost cards that some users have. Standard PCI-e v3 slots are not aiming at the future. Great for PCI-e v1.0 and 2.0 cards though.

I just can't see Windows-on-ARM going anywhere in the foreseeable future. MS has been "working on it" for over a decade with very little in the way of sales. The whole point of Windows is backwards compatibility for software and the ability to use commodity hardware. If one is willing to give up either or both, then why use Windows? The set of people who would be interested in Windows-on-ARM is pretty much limited to those who care deeply about battery life on laptops, are willing to give up backwards compatibility, but still want Windows for some reason. That's a pretty small segment of the market.
