OK, I wasn't really meaning to chime in on the specific content of this thread (4K vs. 1080p), but rather on the more general propensity of some people (many on these fora, IMO) to believe and state that THEIR experience necessarily equals EVERYONE'S.

Watch PART 2 of these videos from cinematographer Steve Yedlin (Star Wars: The Last Jedi, Looper, Carrie).

PART 2 is a MUST SEE for everyone who claims to see a difference between 2K and 4K on their TV.

Apple TV tvOS Simulator Shown Running in 4K Resolution

- Thread starter MacRumors

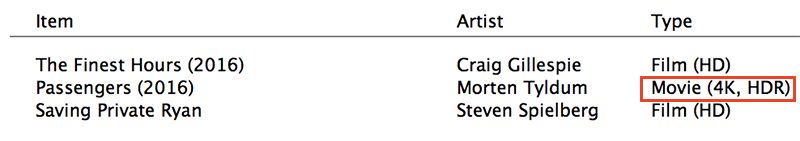

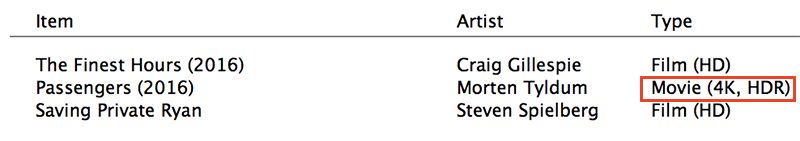

Actually, people are saying that, according to iTunes, they purchased the 4K version of Passengers, even though the version they actually got was 1080p.

4K content still isn't widely available, so Apple was wise to wait for more content to be developed, along with more affordable 4K TVs. Hell, I have 4K now and mostly use it to watch the 40 or so movies/shows available via Netflix and Amazon Prime. I just hope they allow movies already purchased to be upgraded to 4K for free... the ones that are available in 4K, that is. For example, I own the HD digital copy of Passengers. If Passengers is released in 4K on iTunes, I would like Apple to recognize I have a 4K Apple TV and automatically allow me to access the 4K version. They did this when they switched to HD. Guess we shall see.

https://www.macrumors.com/2017/07/28/apple-listing-select-itunes-movies-as-4k-hdr/

BTW, it's not just about waiting for content, but I suspect it was partially also the hardware. Until last month, none of the Macs could decode 4K HDR HEVC content in hardware on the CPU/iGPU. Apple requires a Kaby Lake CPU to make this work on Macs. Yes, they could have supported it on AMD GPUs, but that would have made for a very fragmented experience, since many Macs do not have discrete GPUs.

This is one big reason why I waited so long to get a new Mac desktop and a new Mac laptop. But as expected, Apple launched its HEVC support in June, and for 10-bit HDR content it requires a 7th-generation Intel Kaby Lake CPU. Such playback works great in QuickTime not only on my 5K iMac, but also on my lowly 2017 MacBook Core m3. However, it does need High Sierra 10.13 too.

"Retina" displays on mobile devices?

That's not true. They are sometimes last, but sometimes first, or near first.

Examples where Apple was an early adopter:

- USB
- HEIF
- SuperDrives in laptops
- Getting rid of SuperDrives in laptops
- SSD
- H.264
- Thunderbolt
- Consumer gigabit Ethernet
- Wi-Fi

EDIT: Just adding a data point, btw.

Yep, that too. And Retina on laptops too. In fact, Apple arguably still has the best implementation of Retina on laptops, even though it's roughly the same implementation they had when they introduced it.

According to the leaked Apple HomePod code, the new 4K Apple TV will be Dolby Vision and HDR10 compatible, which is great news... however, you still need a TV that's capable of displaying DV, which I believe most 4K displays aren't right now.

Yeah, nothing I've ever found confirms that... just some people who tend to be in the know. Yeah, Netflix 4K tops out around 15.5 Mbps. Overall I have found Vudu's bitrates for UHD to be really nice. Now I only wish they would finally implement HDR10, as they are Dolby Vision only right now and I don't have a DV set. Hoping that once iTunes starts selling UHD titles, they'll offer them in both DV and HDR10 to give Vudu a push! Or I can just go get that OLED ;-)

All I want is true 4K content shot at 60 FPS and no judder! If you watch a 4K action movie that's poorly filmed, upscaled, or converted to 4K in post-production, it can be hard to watch with all the judder.

Fast motion has been a problem ever since the introduction of digital cameras. It has nothing to do with how it's filmed or with post-production; the camera just can't capture motion the way film does (yet).
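The judder discussed above can have several causes; one well-understood playback-side contributor is cadence. A 24 fps film can't be shown evenly on a 60 Hz display, because 60 / 24 = 2.5 refreshes per frame, so frames alternate between being held for 3 and 2 refreshes (3:2 pulldown). The Python sketch below computes that cadence, assuming each refresh simply repeats the latest source frame (no motion interpolation); the function names are illustrative only, not any player's API.

```python
def hold_counts(source_fps: int, refresh_hz: int, frames: int) -> list[int]:
    """How many display refreshes each of the first `frames` source frames is
    held for, assuming every refresh simply shows the latest source frame
    (no motion interpolation)."""

    def first_refresh(n: int) -> int:
        # Index of the first refresh at or after source frame n's start time:
        # ceil(n * refresh_hz / source_fps), done in exact integer arithmetic.
        return -(-n * refresh_hz // source_fps)

    return [first_refresh(n + 1) - first_refresh(n) for n in range(frames)]


def on_screen_ms(source_fps: int, refresh_hz: int, frames: int) -> list[float]:
    """On-screen time of each source frame in milliseconds (rounded to 0.1 ms)."""
    return [round(c * 1000 / refresh_hz, 1)
            for c in hold_counts(source_fps, refresh_hz, frames)]


if __name__ == "__main__":
    for hz in (60, 120, 24):
        print(hz, hold_counts(24, hz, 4), on_screen_ms(24, hz, 4))
    # 60 [3, 2, 3, 2] [50.0, 33.3, 50.0, 33.3]
    # 120 [5, 5, 5, 5] [41.7, 41.7, 41.7, 41.7]
    # 24 [1, 1, 1, 1] [41.7, 41.7, 41.7, 41.7]
```

At 60 Hz, frames alternate between 50 ms and about 33 ms on screen, which reads as uneven motion in slow pans. At 120 Hz, or with the display switched to 24 Hz, every frame gets the same time on screen. That is why frame rate switching on the player matters for film content.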

Between Netflix, UHD Blu-rays, Amazon and video games, I find there's plenty of 4K content to keep me busy for at least another 12 months.

Now you do, but for the last few years the 4K content wasn't there. Internationally it didn't make sense either. With the global rollout of these services, it makes sense to include 4K now. Apple knows how to sell, upsell and resell better than anyone.

This is also exciting in that Sony has, up to this point, been a pain on the UHD side. So far the ONLY way to watch a Sony digital UHD title is through their app on a Sony TV. Even Vudu doesn't have any Sony UHD. So if the above is true, it could be very exciting to get some Sony UHD content.

Yeah, and it will instantly become a leading box, since no other mainstream streamer does DV - although I'd have to imagine Roku won't be far behind with an updated model that supports both DV and HDR.

HDR10 support in TVs is still much better than DV support - but DV is getting better. OLEDs are the best bet, with both HDR10 and DV (although some argue that OLED needs DV/HDR because it doesn't have the peak brightness of LED-backlit sets). I know I'm kicking myself for going with my Samsung 4K late last year. I didn't do enough research at the time regarding HDR formats. I would have held off to get an OLED this year. And of course, if you have an AVR setup, it would need to support DV if you plan to run HDMI through it (otherwise you will have to run optical to the AVR, thus losing other benefits).

2017 seems about right for a set-top box, honestly. Two years ago, not really.

In 2017, 4K still isn't very mainstream. I don't see it getting there until 2019+. Once OLEDs come down in price to where normal high-end sets are, I think it will be time to buy. Hoping next year is a big OLED year.

Yeah, but that's the case with any and all technology - especially content-related ones. Enough people need to take the plunge on the hardware before content providers start putting out content. Content is very expensive to produce, with very low margins, so they need to see significant market movement before they jump in. It becomes a chicken-or-egg (or omelette) thing.

I think next holiday season will be the moment when it really takes off. By then, cable companies like Xfinity will finally have their boxes ready.

I have an LG OLED and honestly DV is far better. I had a Samsung 4K, and my general issue with HDR10 is that it makes the entire movie/show "darker", while DV is scene-specific and made for specific TVs. So no matter what kind of TV you have, if it supports DV, it'll produce the best DV image possible for your TV model, since DV is hardware AND software driven.
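The "whole movie looks darker" versus "scene-specific" difference described above is usually explained by metadata. HDR10 carries static metadata (one set of brightness values for the entire title), while Dolby Vision carries dynamic metadata that can change per scene. The Python sketch below is a deliberately crude linear model of that idea, not how any real TV or Dolby's algorithm works. It assumes the TV dims the image just enough for the metadata's peak to fit its own peak. Real sets use non-linear roll-off curves that mostly spare shadows and mid-tones, so this model exaggerates the effect. All scene values and the 600-nit display are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    name: str
    peak_nits: float      # brightest highlight mastered into this scene
    midtone_nits: float   # a typical mid-tone level in the same scene


def linear_scale(content_peak: float, display_peak: float) -> float:
    """Crude linear tone mapping: dim everything just enough for content_peak
    to fit the display; content that already fits is left alone (1.0)."""
    return min(1.0, display_peak / content_peak)


def static_metadata_map(scenes: list[Scene], display_peak: float) -> dict[str, float]:
    """One title-wide peak (static metadata) sets the scaling for every scene."""
    title_peak = max(s.peak_nits for s in scenes)
    k = linear_scale(title_peak, display_peak)
    return {s.name: round(s.midtone_nits * k, 1) for s in scenes}


def dynamic_metadata_map(scenes: list[Scene], display_peak: float) -> dict[str, float]:
    """Each scene's own peak (dynamic metadata) sets that scene's scaling."""
    return {s.name: round(s.midtone_nits * linear_scale(s.peak_nits, display_peak), 1)
            for s in scenes}


if __name__ == "__main__":
    # Hypothetical title: a dim night scene and a very bright daylight scene,
    # shown on a hypothetical 600-nit TV.
    title = [Scene("night", peak_nits=400, midtone_nits=40),
             Scene("day", peak_nits=4000, midtone_nits=300)]
    print("static: ", static_metadata_map(title, 600))   # {'night': 6.0, 'day': 45.0}
    print("dynamic:", dynamic_metadata_map(title, 600))  # {'night': 40.0, 'day': 45.0}
```

With one title-wide peak, the dim night scene gets dimmed as much as the bright daylight scene, even though it already fits the display. With per-scene values it is left alone.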

Hopefully Apple fixes the remote and the UI with the next release.

Get a Samsung UHD player. Frame rate switching works perfectly. I hope someday an Apple TV will support this; until then it's kind of useless for perfect movie streaming.

That would be nice - but probably not likely. There isn't a current streamer out yet that does auto resolution switching. It's on the wishlists of lots of people.

As for matching UHD discs, doubt that as well. I think I've read that Vudu UHD is around 25Mbps whereas I think the Ultra HD BR spec is around 35Mbps. At this point you'll always get the best picture overall with physical media - especially with your 90" screen (which sounds like an awesome experience BTW).
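The bitrates quoted in this thread (Netflix 4K around 15.5 Mbps, Vudu UHD around 25 Mbps, UHD Blu-ray around 35 Mbps) can be normalized to average bits per pixel per frame. This is a rough way to compare how much data each tier spends on each pixel. The Python sketch below assumes 24 fps, full 3840×2160 or 1920×1080 frames and constant average bitrates. The figures are forum-quoted, not measurements, and the UHD Blu-ray number is the poster's recollection; the format's maximum is considerably higher. Bits per pixel is also not comparable across codecs, because HEVC needs fewer bits than H.264 for similar quality.

```python
# Bitrates quoted in this thread (forum figures, not verified measurements).
QUOTED_MBPS = {
    "Netflix 4K (quoted ~15.5 Mbps)": 15.5,
    "Vudu UHD (quoted ~25 Mbps)": 25.0,
    "UHD Blu-ray (recalled ~35 Mbps)": 35.0,
}

RESOLUTIONS = {"1080p": (1920, 1080), "4K UHD": (3840, 2160)}


def bits_per_pixel(bitrate_mbps: float, resolution: str, fps: float = 24.0) -> float:
    """Average encoded bits per pixel per frame for a constant average bitrate."""
    width, height = RESOLUTIONS[resolution]
    return bitrate_mbps * 1_000_000 / (width * height * fps)


if __name__ == "__main__":
    for label, mbps in QUOTED_MBPS.items():
        print(f"{label}: {bits_per_pixel(mbps, '4K UHD'):.3f} bits/pixel/frame at 4K")
    # The same 15.5 Mbps spent on a 1080p frame would get 4x the bits per pixel,
    # because a 1080p frame has a quarter of the pixels of a 4K UHD frame.
    ratio = bits_per_pixel(15.5, "1080p") / bits_per_pixel(15.5, "4K UHD")
    print(f"1080p vs 4K bits-per-pixel ratio at equal bitrate: {ratio:.1f}")
```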

I strongly suspect many of the righteously indignant 4K TV owners here are comparing a years-old 1080p screen to a brand-new 4K screen (it's not like technology ever improves, after all), and may be sending older, more highly compressed source material to the 1080p screen. They are seeing a substantial improvement, and they are comparing a 1080p screen to a 4K screen, but correlation does not imply causation.

Love how the only person talking sense is getting bashed by everyone – even people who think that resolution could benefit a smooth image. LOL.

I don't have a horse in this race, my ATV4 is still connected to a 720p screen. And when the 4K ATV5 comes out, I'll happily upgrade because of everything else that will be improved on it, not because of the potential for 4K output.

I don't remember the exact screen size / distance ratio off the top of my head, but I've read many articles that seem to be along the same lines. Basically, for anyone not interested in a 60"+ screen and sitting at an average 10' from that screen, there is no real incentive to get a 4K or 8K TV.

You're joking... Right?

And even then, you'd likely need a 4K Blu-ray player / super stable 32Mbps+ streaming bandwidth to really tell the difference. So there's definitely an audience for that, but there's also people who buy audio cables that cost thousands of dollars, and very few people can really tell the difference.

https://carltonbale.com/does-4k-resolution-matter/

http://referencehometheater.com/2013/commentary/4k-calculator/

http://www.rtings.com/tv/reviews/by-size/size-to-distance-relationship
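Size/distance charts like the ones linked above are typically built on one assumption: normal (20/20) vision resolves detail down to about 1 arcminute. The Python sketch below computes the farthest distance at which a single pixel of a 16:9 screen still subtends that angle. The 1-arcminute acuity, the 16:9 aspect ratio and the function name are all assumptions for illustration. Some viewers resolve finer than 20/20, and the model ignores bitrate, HDR and panel differences, which other posters in this thread argue matter more in practice.

```python
import math


def max_resolving_distance_ft(diagonal_in: float, horizontal_pixels: int,
                              acuity_arcmin: float = 1.0) -> float:
    """Farthest distance (feet) at which one pixel of a 16:9 screen still
    subtends `acuity_arcmin` arcminutes. Beyond it, a viewer with that acuity
    can no longer resolve individual pixels of that screen."""
    width_in = diagonal_in * 16 / math.hypot(16, 9)   # screen width from diagonal
    pixel_pitch_in = width_in / horizontal_pixels      # width of one pixel
    angle_rad = math.radians(acuity_arcmin / 60)       # arcminutes -> radians
    return pixel_pitch_in / math.tan(angle_rad) / 12   # inches -> feet


if __name__ == "__main__":
    for size in (50, 55, 65, 80):
        d1080 = max_resolving_distance_ft(size, 1920)
        d4k = max_resolving_distance_ft(size, 3840)
        print(f'{size}": 1080p pixels resolvable within {d1080:.1f} ft, '
              f"4K pixels within {d4k:.1f} ft")
```

Under this model, sitting closer than the 1080p figure is where extra resolution can start to show, and the 4K figure is where all of 4K's extra detail becomes resolvable. Because the 4K pixel pitch is half the 1080p pitch, the 4K distance is always half the 1080p one.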

As has already been mentioned in this thread, this presupposes a near-perfect 1080p signal. Unfortunately, streaming 1080p is far from perfect. True, streaming 4K is also not perfect, but it can often be hugely better than streaming 1080p quality.

Put it this way: With 4K, they're finally streaming high enough bitrates with newer codecs that would be needed to make 1080p actually look good.

Why can't they just increase streaming 1080p bitrates and use better codecs? Well, they could, but they don't. That is the real world. If you want better quality, then you need to go 4K. I suspect the reason for this is that they've determined that, up until now, most mainstream users have been OK with lowish-bitrate H.264 1080p for streaming. It is now the standard for streaming, and if you want better, you pay more for higher quality. The key here is that the newer, higher-quality tier is not higher-quality 1080p; it is 4K. In this context, those 1080p-is-good-enough articles are irrelevant, because they are not testing streaming video sources.

And as mentioned, the only real-world way to get HDR is with 4K. As I've said many times before in various forums, I'd love to have high-end 1080p HDR, but unfortunately, that just doesn't exist in any meaningful way. Well, it sort of does in the projector space. There are projectors now that have 1080p chips but accept 4K HDR signals, and then pixel-shift the 1080p image to get higher-than-1080p images (although it's not true 4K), with a wider colour gamut than previous models. But again, the only way to get this is with a 4K source. These machines don't replicate the HDR brightness range well, though.
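The "higher than 1080p, but not true 4K" point about pixel-shifting projectors can be made concrete by counting addressable pixel positions. The Python sketch below assumes the chip shows one sub-frame per shift offset, with offsets given as fractions of a pixel. A two-position diagonal half-pixel shift is assumed for the example; some designs use more positions, and the function name is hypothetical. Positions are not the same as resolution. Each shifted pixel is still a full-size 1080p pixel, so overlapping sub-frames render fine detail less sharply than a native 4K panel would.

```python
def addressable_positions(width: int, height: int,
                          offsets: list[tuple[float, float]]) -> int:
    """Distinct pixel-centre positions when the chip shows one sub-frame per
    offset. Offsets are fractions of a pixel in [0, 1); sub-frames with
    different fractional offsets never share a centre, so each distinct
    offset contributes one full width x height grid."""
    for dx, dy in offsets:
        if not (0 <= dx < 1 and 0 <= dy < 1):
            raise ValueError("offsets must be fractions of a pixel in [0, 1)")
    return width * height * len(set(offsets))


NATIVE_4K = 3840 * 2160  # 8,294,400 pixels

if __name__ == "__main__":
    native_1080 = addressable_positions(1920, 1080, [(0, 0)])
    two_way = addressable_positions(1920, 1080, [(0, 0), (0.5, 0.5)])
    print(native_1080, two_way, NATIVE_4K)  # 2073600 4147200 8294400
```

Under these assumptions, a two-position shift doubles the addressable positions of a 1080p chip but reaches only half of native 4K's pixel count.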

It's not like the Apple TV 4 came out yesterday; it's been a while. Thus the update.

A little frustrating that they don't already have 4K. I mean come on, it's 2017, and we still don't have it, AND we are now forced to buy new Apple TVs yet again if we want it.

Experts have found that for you to see a difference between 1080p and 4K, you need a giant 80" TV and sit as close as 6.5 feet.

In short: nobody needs 4K, you can't see it. It's a marketing gimmick.

HDR on the other hand, makes a real difference.

You are completely and utterly wrong. 4K is the natural progression of technology and in no sense of the word a gimmick. You are making an argument that is equivalent to saying: “a quad core processor is a gimmick, most apps only take advantage of two cores anyway”

You're absolutely on point. Until that day, I will save my breath against those with poor eyesight, allergy to progress, or other limitations.

Actually, it's simpler than your advice. Right now a 4K Apple TV is a rumor, albeit one that seems to have gained a lot of traction in the last week or two. As soon as Apple takes the stage and rolls out a 4K Apple TV, this anti-4K sentiment will quickly evaporate.

The exact same thing happened in the days when Apple still clung to 720p as "HD" (maximum) before announcing the 1080p Apple TV 3. Back then, all of the same arguments now being made against 4K were being slung around against 1080p: 1080p is a marketing gimmick, you can't see the difference, "the chart", wait until everything in the iTunes store is available in 1080p (which is still not the case, by the way), until everyone's bandwidth everywhere is upgraded, hard drive storage, and on and on.

One of the best: "I don't want to throw away a perfectly good TV set because...", implying a perception that better hardware will "force" everyone to download only 4K video, in spite of an obvious history showing that advancing Apple TV hardware can still download SD, 720p and 1080p versions rather than only 1080p versions (this will be no different). A 4K Apple TV is going to play 1080p, 720p or SD to its fullest, as better hardware never has a problem with lesser software demands. Hook it up to a 1080p or 720p HDTV and it's just going to be a better, more robust Apple TV 4 with a hardware capability that you can't use on that existing TV. Your iPhone can tap cellular bands that you don't use either, but that hardware capability is built in should you ever want to change providers and thus need it.

So basically, the crowd that seems to passionately argue for whatever Apple has for sale right now is just doing their (paid or unpaid) job. Note how you tend not to find these same people in threads for Apple's other products that already embrace 4K, bashing Apple for embracing "the gimmick" in iPhones, iPads and Macs; they show up only here, with this ONE Apple product that does not yet embrace 4K.

As soon as Apple has done the big reveal, the vast majority of this crowd will not dare show up to bash Apple for stupidly embracing "the gimmick" that "no one's eyes can see." Instead, it will be "Shut up and take my money" and the same old, TIRED arguments will go into the long-term recycling bin to be revived again as rumors start picking up for 8K a few years down the road.

We've already seen this exact same movie before and after the "3" was announced. I expect no difference here. A God will speak and then 4K will be fine to all His followers forevermore.

Higher bitrate makes an incredible difference even on 1080p content. Playing a high-quality 8,000+ kbps rip of Orphan Black, you could swear you are looking at 4K footage when it is in fact downscaled to 1080p.

I still believe that in this case, a higher bitrate would benefit everyone more than quadrupling the pixels, but oh well.

4K will bring people much higher (and noticeable) quality, but that will come from improved source material (shot in 4K and above) and better compression (H.265/HEVC) as much as from the resolution itself.

You can buy external upscalers. I connect my Switch to my 4K TV through a high-quality (yet inexpensive) external upscaler dongle, and the difference in quality is dramatic.

The problem is that a lot of lower-end TVs have crappy upscalers.

What do you mean how can I tell? That's a pretty silly question.

You are assuming that because YOU can't tell the difference, no one else can, and basing your argument on that. MY eyes can clearly see the difference. I don't want a monitor in my face. Did you say you need an 80 inch TV to see the difference? Now you are saying wow, on a 4K computer monitor it's so much better? Are you trolling?

Just because yours CAN'T doesn't mean it's not there. And I have an OLED and an LCD. 4K content even makes 1080p content look superior, because 4K TVs will upscale 1080p.

Never said 80 inch. Don't make things up.

The point you are failing to notice... viewing distance is crucial when it comes to seeing detail. Hence... why a 4K monitor makes a difference. A 4K 55" TV and a 1080p 55" TV, both 3 metres away, next to each other: are you sure you can tell the difference?

How are you missing the point about an A/B test? It's awesome you have a 4K set at home... you need the two next to each other to compare...

Hey, if I were buying now, I'd get a 4K unit, because of OLED. Though until now I've actually been using a plasma, because it had superior image quality and blacks compared to the LED crap that was released. Though don't worry, I know so many who could tell how much superior the newer LEDs were to plasmas...

Actually, I did have my 65" 4K TV right next to my 65" 1080p TV when I first purchased the 4K set. It was a night-and-day difference with video that was made for 4K. Even my wife, who will happily watch an old SD show and couldn't care less about HD, was amazed.

I do agree that HDR adds even more on top of 4K, but 4K alone is stunning.

It would be interesting to compare the same panels with the same tech side by side.

Even comparing an old 1080p set to a new 1080p set shows a big difference in quality. Brightness makes a big difference, colours really pop on new units, and then there's HDR.

Look, I'm not saying anyone should buy a 1080p set now that prices have dropped. Newer units, be they 720p, 1080p, etc., all look better than the old units. My next unit is a 4K OLED HDR.

Wow, you people believing the so-called experts haven't a clue. I would have thought this nonsense would have died down by now, but I guess not.

I currently have a 50 inch 4K set that's about 12 feet away in the living room, even at that size and distance it's a very obvious difference between Full HD and 4K.

I can only guess this is a combination of people who either have poor eyesight or whose visual processing doesn't work as well, and who believe people who have been paid by the TV manufacturers.

Love how the only person talking sense is getting bashed by everyone – even people who think that resolution could benefit a smooth image. LOL. There should be absolutely no debate about 4K and 1080p. Your eye can’t see the difference unless the screen is really close and/or huge. If you think you can see a difference, bravo, that’s how marketing works. Any difference you really see is just years of display technology having developed ahead, and these displays also feature 4K, but it isn’t the thing making everything better.

Do your reading and stop ******** all over people talking sense. https://carltonbale.com/does-4k-resolution-matter/

I think a lot of the confusion comes from the 4K stream itself, which looks better even on 1080p displays.

This is because of chroma subsampling. 1080p streams are 4:2:0. A 4K stream is also 4:2:0, but when viewed on a 1080p display it's effectively 4:4:4, which is considerably sharper.
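Consumer streaming and disc formats generally use 4:2:0 subsampling: the two colour-difference (chroma) planes are stored at half the luma resolution both horizontally and vertically. The Python sketch below computes the chroma plane size and how many chroma samples end up available per displayed pixel. It ignores scaler filtering and compression artifacts, and the function names are illustrative only.

```python
# Chroma subsampling divisors: (horizontal, vertical) reduction of the two
# colour-difference planes relative to the luma plane.
SUBSAMPLING = {
    "4:4:4": (1, 1),
    "4:2:2": (2, 1),
    "4:2:0": (2, 2),
}


def chroma_plane_size(width: int, height: int, scheme: str) -> tuple[int, int]:
    """Resolution of each chroma plane for a frame of width x height."""
    h_div, v_div = SUBSAMPLING[scheme]
    return width // h_div, height // v_div


def chroma_per_output_pixel(source: tuple[int, int], scheme: str,
                            display: tuple[int, int]) -> tuple[float, float]:
    """Chroma samples available per displayed pixel (horizontal, vertical),
    capped at 1.0. (1.0, 1.0) means every output pixel can have its own colour
    sample, i.e. the image is effectively 4:4:4 at the display resolution."""
    cw, ch = chroma_plane_size(*source, scheme)
    return min(1.0, cw / display[0]), min(1.0, ch / display[1])


if __name__ == "__main__":
    uhd, fhd = (3840, 2160), (1920, 1080)
    print(chroma_plane_size(*uhd, "4:2:0"))             # (1920, 1080)
    print(chroma_per_output_pixel(fhd, "4:2:0", fhd))   # (0.5, 0.5)
    print(chroma_per_output_pixel(uhd, "4:2:0", fhd))   # (1.0, 1.0)
```

A 4K 4:2:0 stream carries 1920×1080 chroma planes, exactly the pixel grid of a 1080p display. So once it is scaled down, every displayed pixel can get its own colour sample, while a native 1080p 4:2:0 stream only has one chroma sample per 2×2 block of pixels.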
