

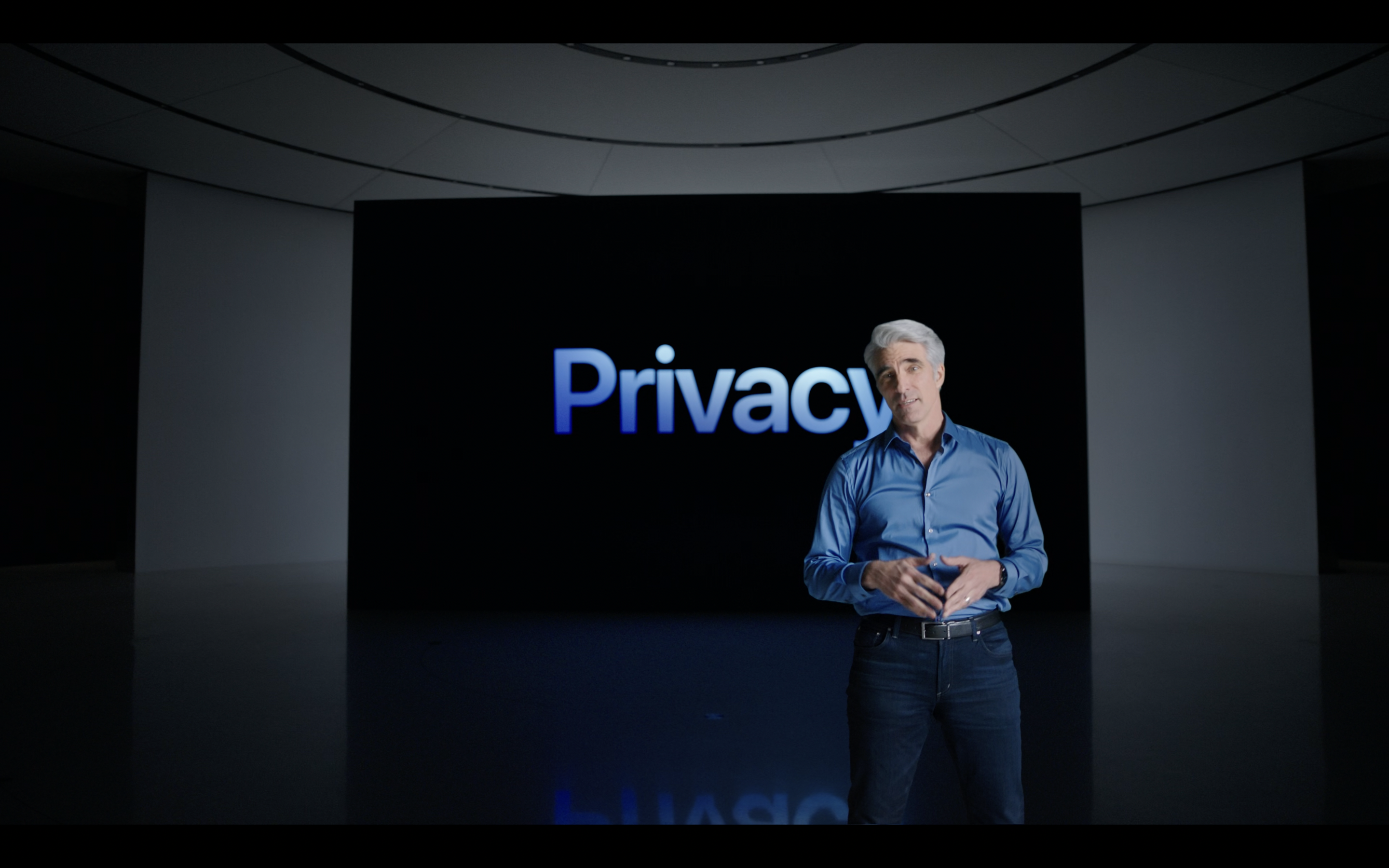

Craig Federighi Acknowledges Confusion Around Apple Child Safety Features and Explains New Details About Safeguards

- Thread starter MacRumors



Only if you have 30 images that match known CSAM images from an independent database. In that case your privacy will be at risk... but you are a pedophile. Remember, matching is not scanning, and a hash is not an image.

Err no, many experts have stated the system is very easy to trick. And of course, the scanning list will be injected with other things it needs to find, without the knowledge of Apple and others. At which point, a person at Apple will be able to see the image, not a hash, the actual image.
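For anyone who wants to see the threshold idea in code, here is a minimal sketch. It assumes SHA-256 as a stand-in for Apple's perceptual NeuralHash (which is not an exact-byte hash), takes the figure of 30 from this thread, and uses hypothetical helper names (`image_hash`, `count_matches`, `needs_human_review`). It only shows the counting logic, not Apple's actual protocol.

```python
import hashlib

# Assumption: 30 is the review threshold quoted in this thread.
REVIEW_THRESHOLD = 30


def image_hash(data: bytes) -> str:
    # Assumption: SHA-256 stands in for a perceptual hash such as NeuralHash.
    return hashlib.sha256(data).hexdigest()


def count_matches(library, known_hashes):
    # Count images whose hash appears in the predetermined list.
    return sum(1 for img in library if image_hash(img) in known_hashes)


def needs_human_review(library, known_hashes, threshold=REVIEW_THRESHOLD):
    # Nothing is flagged until the number of matches reaches the threshold.
    return count_matches(library, known_hashes) >= threshold


# Hypothetical data: 40 listed images, 100 unrelated holiday photos.
known = {image_hash(f"known-{i}".encode()) for i in range(40)}
holiday = [f"holiday-{i}".encode() for i in range(100)]

print(needs_human_review(holiday, known))  # False: no matches at all
```

In this sketch a library with 29 matching images stays below the threshold, and the 30th match is what triggers review.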

That’s a cover up lie and a random number Craig is throwing at us.

Since you are accusing Craig of being a liar, you must know the real number. What is it?

I think it is time for Jonathan Ive to start a tech company......Apple is not going back on this. Very disappointed.

Thank you, Craig! I wonder if Craig woke up and read my comment from the previous article haha!

Apple's senior vice president of software engineering, Craig Federighi, has today defended the company's controversial planned child safety... (www.macrumors.com)

All jokes aside! Craig, you do not know what you are talking about when all you did was praise how important privacy was. Stay away from my PRIVACY, please. It is my HUMAN RIGHT. Craig, please give us an opt-out option from CSAM scanning. Let our voices be heard. I will not appreciate Apple scanning my iCloud photos, whether it's through AI or hashes.

It sounds like Apple is using "Protecting Children" as a pretext for spying on consumers.

STOP this mass surveillance from being launched. Apple, you are not a law enforcement organization. Stop acting like one. Apple, how are you not getting the point? You are violating our privacy. Over 7000 signatures were collected. Stop playing with our privacy and human rights.

appleprivacyletter.com

I would have been impressed by this kind of straight statement:

"We at Apple have been forced by law to perform image scans, which we are supposed to justify with the protection of children. Since we are too weak to enforce consumer interests against the institutions by pushing for a general ban on image analysis, we want to be a little better than Google and the like, and have created an instrument that is difficult to explain."

This would return the game to those responsible, and Apple would not have lost any trust and would not have to give crude and funny interviews…

i see apple have wheeled out the fancy haired good looking funny man to try win us over.

if it really works how they say it works and can really stop governments abusing it for other means then i'm all for it. but they can't really prove it so ... what do ya do?


Err no, many experts have stated the system is very easy to trick. And of course, the scanning list will be injected with other things it needs to find, without the knowledge of Apple and others. At which point, a person at Apple will be able to see the image, not a hash, the actual image.

Seems like a lot of effort to go to just to rickroll an Apple employee.

Apple is giving up its privacy ground, opening room for the next contender.

Matching is not scanning; the issue is that 99% of users don't know what a hash is. It is not the photos, just numbers.

And if they get a match, they will just hand over the hash numbers to the police to figure it out?

They can "match" anything, once they get started with some NCMEC DB. It's not numbers, it's your data. Or in some cases, it might be some data misplaced or planted in your account.

people on this site are the 0.001% of fanboys. 90% of Apple users won't even know this feature exists. This won't change Apple's profits one iota.

This just gets more stupid:

- Mass surveillance of a billion iPhone users for what – now that every criminal has been warned?

- On the device so that every security scientist knows what happens – no, they don't know if there is more in iCloud

- Since it is on the device it looks like a first step, the second step could be a neural network detecting images

To reiterate, after buying a new iPhone every year since 2007, I will not update to iOS 15 and will not buy an iPhone 13 Pro until this is sorted out. Same applies to macOS Monterey.

The image is damaged, to put it politely.

I think I've bought my last apple product.

Somebody else mentioned this in another thread.

What will happen if a bad state-sponsored actor intentionally sends you 30-50 photos matching the database over WhatsApp/iMessage? iMessage, WhatsApp, and many other messaging services default to automatically downloading any received photos, and iPhones store them in the photo library, which is then automatically uploaded to iCloud without the user's intervention. What then?

I can already expect some critical journalists/politicians to suddenly be arrested for owning CP. It's true, private companies do things more efficiently. Great job Apple!


I just ordered my first NAS with RAID mirroring. No more paying for iCloud or storing anything on my Apple devices. You don't deserve my money, Apple. Maybe my next phone will be a de-googled Android phone.

Yep.

Plus you can store an encrypted backup on AWS S3 or wherever makes sense and meets your cost requirement.

Using Arq, CCC, etc. to make it easy and automated.

It's work but not that hard once you get it set up.

I am certainly going to avoid the new macOS and iOS releases for as long as possible. Even though it should not matter if iCloud is disabled, it is not clear where Apple is going with this. They have already stated they may expand the features to third parties.

Will this lead to an iOS 15 Boycott ???

And if so, will that lead to the Board forcing Tim Cook out ???

Neither can be discounted at this point !!!

Apple decided we have so much local CPU power available on people’s devices; don’t let it go to waste.

Scanning for abusive material might be a first step, but what will be their next initiative to harvest all that CPU power?

- Scanning for illegal video / music / books / games / software

- Using AI to ‘get to know customers’ needs better’.

- Running some local datacenter services from a swarm of iPhones.

No matter what, just stay off my devices, Apple, or I’ll be gone as a customer, leaving your platforms for good!


Visual Look Up

Discover more in photos

Learn more about the objects in your photos. Visual Look Up highlights objects and scenes it recognizes so you can get more information about them.

Learn more with a tap

With Visual Look Up, you can quickly learn more about art, landmarks, nature, books, and pets simply by tapping a photo on your device or on the web.

Announced at WWDC this year...general consensus on this site is, "Wow...this is so kewl!!! I took a picture of a dog and it can tell me what breed it is!! This is the greatest feature ever! Thank you Apple for doing this on my device so it doesn't share my interests to the world!!"

When Apple announces they are doing the exact same on-device picture scanning, except now to help stop the exploitation of children, in an even more secure way with many toll gates to prevent abuse: "WHAT ABOUT MY PRIVACY!!! GOVERNMENTS AND BAD PEOPLE WILL HACK INTO THIS AND USE IT AGAINST ME!!"

And by the way, they have been doing this on-device picture/info screening for years now.

i would love if everyone refused to upgrade to iOS 15 (assuming 14.8 doesn't patch it in)

i really don't wanna have to go back to Android.

GrapheneOS. Android without the spying.

Sorry but you are comparing chalk and cheese.

The feature that finds dogs etc, does so all on device. Apple made a big point about this, the same with the finding faces feature.

This new feature scanning for illegal content, scans on the device and makes it possible for an Apple employee to view images from your phone which have not even been uploaded to the cloud. Do you not understand how that really takes the biscuit?

basically your own phone that you bought with your hard earned money will treat you as a criminal until proven wrong.

It's basically changing innocent until proven guilty to guilty until proven innocent, plus you pay for it.


Sorry but you are comparing chalk and cheese.

Well...you are completely wrong in your statement...read how it works again until you get it right.

The damage control spin they’re putting on this is as hilarious as it is tragic. It’s really telling that their solution for this is to disable major features.

Many people - myself included - are locked into long-term payment plans because these phones cost more than a rent payment or deposit for a used car. Even the monthly bills from bloodsuckers like Verizon are out of control. So we’re stuck with these things, like it or not. What do we do until we can get a new phone? Just don’t use it?


e.g. Budapest declares it's illegal to be LGBT, and they tell Apple to scan for any such imagery.

Except the CSAM Detection system is extremely bad at doing this.

It's not good at detecting a "category of pictures", only at "recognising pictures from a predetermined list".

Let's say the Hungarian police take all the photos from a gay person's iPhone. I'm not gay so I don't know what kind of special photos they have which heterosexuals don't. Maybe of men hugging and kissing, dressing more feminine, going to pride parades. Doesn't matter, the Hungarian police submit those photos which they believe are "gay" or showing "gay activities" to Apple. They also have the power to force Apple to hash them and put the hashes into iOS.

After a while those hashes reach another gay person in Hungary who doesn't know the first guy.

He doesn't have the same pictures. Even if he was at the same pride parade as the first guy, his photos from that parade wouldn't match.

So the tool is useless for catching gay people.

Unless this group of people often save an "iconic" picture on their phone.
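The difference between "recognising pictures from a predetermined list" and detecting a category can be shown with a toy example. This is only a sketch: it assumes a very simple "average hash" over tiny grayscale grids as a stand-in for Apple's NeuralHash, and `average_hash`, `hamming` and `matches_list` are hypothetical names, not anything from Apple's system.

```python
def average_hash(pixels):
    # Toy perceptual hash: one bit per pixel, set when brighter than the mean.
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if p > mean else 0 for p in flat)


def hamming(a, b):
    # Number of bit positions where two hashes differ.
    return sum(x != y for x, y in zip(a, b))


def matches_list(pixels, listed_hashes, max_distance=0):
    # True only if this picture's hash is (near-)identical to a listed hash.
    h = average_hash(pixels)
    return any(hamming(h, k) <= max_distance for k in listed_hashes)


# Hypothetical 2x2 grayscale "photos".
listed_photo = [[10, 200], [220, 30]]
brighter_copy = [[p + 20 for p in row] for row in listed_photo]  # same picture, re-exported
other_photo = [[200, 10], [30, 220]]  # a different picture taken at the same event

listed = {average_hash(listed_photo)}

print(matches_list(brighter_copy, listed))  # True: a copy of a listed picture
print(matches_list(other_photo, listed))    # False: different picture, no match
```

A copy of a listed picture still matches after a small change, but a different picture of the same scene does not, which is the point being made above about photos from the same parade.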
