Over the summer, Apple announced new Child Safety features aimed at keeping children safer online. Apple has confirmed that one of those features, Communication Safety in Messages, has been enabled in the second beta of iOS 15.2 that was released today, after hints of it appeared in the first beta. Note that Communication Safety is not the same as the controversial anti-CSAM feature that Apple plans to implement in the future after revisions.

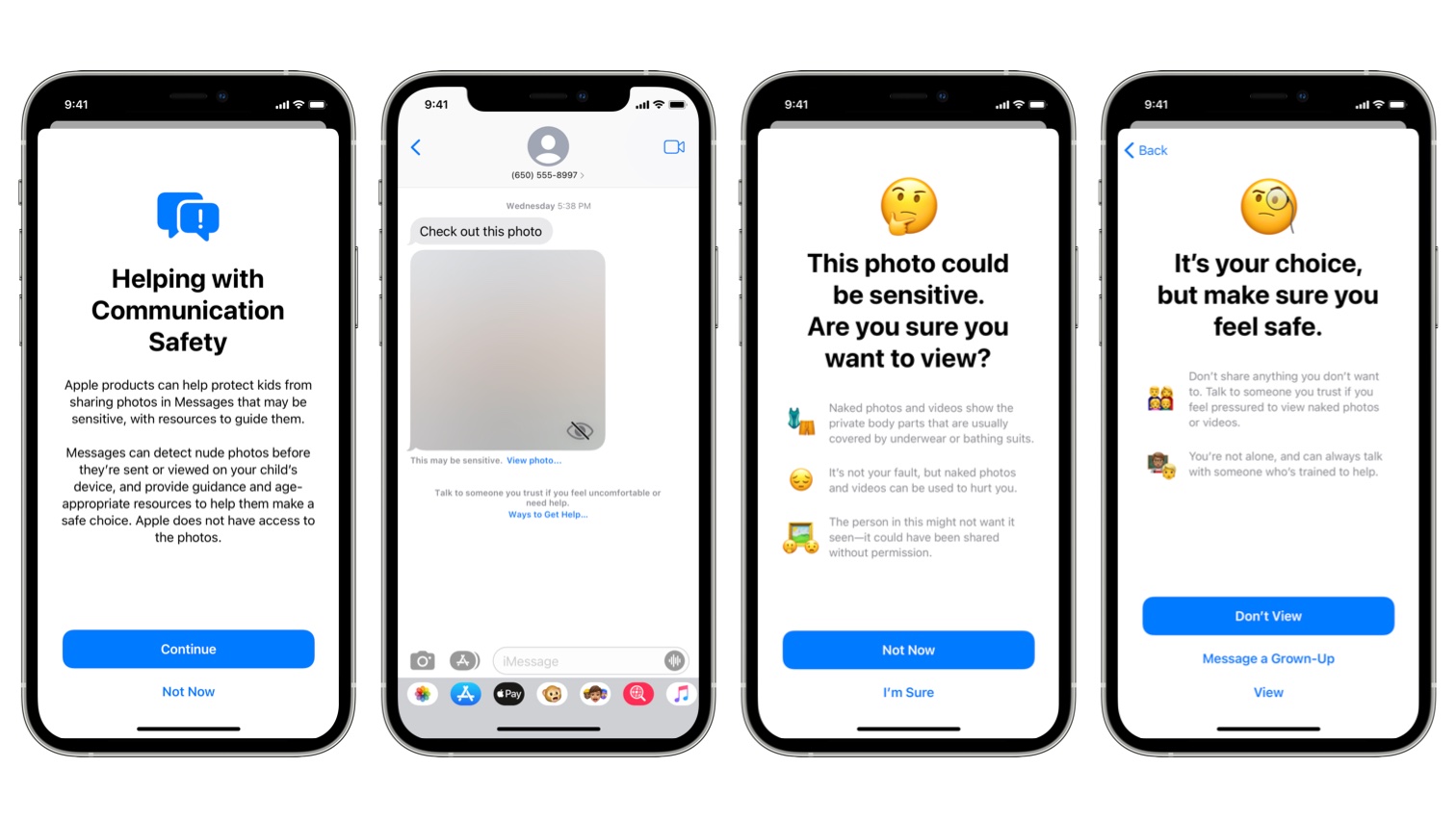

Communication Safety is a Family Sharing feature that can be enabled by parents, and it is opt-in rather than activated by default. When turned on, the Messages app is able to detect nudity in images that are sent or received by children. If a child receives or attempts to send a photo with nudity, the image will be blurred and the child will be warned about the content, told it's okay not to view the photo, and offered resources to contact someone they trust for help.

When Communication Safety was first announced, Apple said that parents of children under the age of 13 had the option to receive a notification if the child viewed a nude image in Messages, but after receiving feedback, Apple has removed this feature. Apple now says that no notifications are sent to parents.

Apple removed the notification option because it was suggested that parental notification could pose a risk for a child in a situation where there is parental violence or abuse. For all children, including those under the age of 13, Apple will instead offer guidance on getting help from a trusted adult in a situation where nude photos are involved.

Checking for nudity in photos is done on-device, with Messages analyzing image attachments. The feature does not impact the end-to-end encryption of messages, and no indication of the detection of nudity leaves the device. Apple has no access to the messages.

In addition to introducing Communication Safety, Apple later this year plans to expand Siri and Search with resources that will help children and parents avoid unsafe situations online. Users who ask Siri how to report child exploitation, for example, will receive information on how to file a report.

If an Apple device user performs a search related to child exploitation, Siri and Search will explain that interest in the topic is harmful, providing resources for users to get help with the issue.

In September, Apple promised to overhaul Communication Safety before implementing it, in response to feedback from customers, advocacy groups, and researchers. The changes introduced today stem from that promise.

Communication Safety is currently available only in beta, and there's no word yet on when iOS 15.2 will see an official release. We're only at the second beta, so it may still be some time before launch.

Article Link: iOS 15.2 Beta Adds Messages Communication Safety Feature for Kids
