
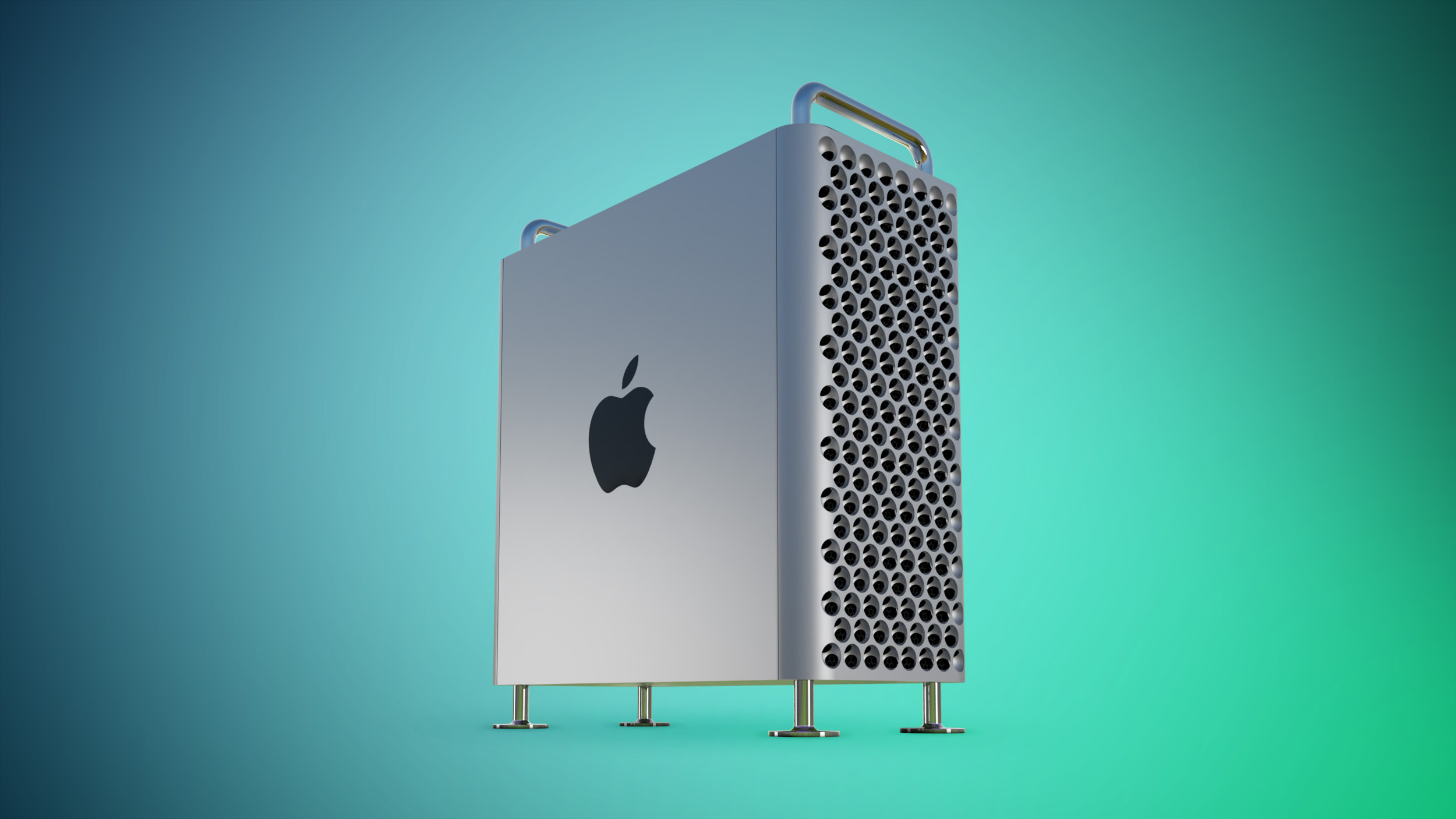

Apple Executive Discusses New Mac Pro's Lack of Graphics Card Support

- Thread starter MacRumors



I would be more inclined to believe this if the iMac were not still stuck on M1.

Apple has little reason to even bother upgrading the iMac for every M-chip generation. No power-hungry user would buy it in the first place, and anyone considering the iMac would likely not care about, or even notice, the difference between the M1 and M2. It's probably easier for Apple to mass-produce the M1 iMac and sell it for two generations than to upgrade it every generation and clearance-sell any remaining last-gen iMacs.

How about a 27-inch screen with a place to bolt a Studio on the back? The screen might be a touch thick, because you would need to do some interesting things with the airflow.

You can use two-sided tape now with any 27" display. 😅

In all seriousness I think Apple can figure it out.

- $9199 M2 Extreme

Imagine doing a Nollie Frontside 360 while using Final Cut Pro with your Vision Pro on.

I still think the new Mac Pro is an overpriced machine.

Apple needs to come out with a Mac Pro Mini.


For the specific use cases they're designed for, sure.

Yes, I agree that for some use cases there is a fair comparison. And I agree that users should be able to do more with these PCI slots.

The video codec performance hits aren't a surprise to me, as Apple for years seems to have gone all in on its different flavors of ProRes codecs, even going so far as making the Afterburner cards.

Can you give an example of your workflow where 192GB is not sufficient and causing so many roadblocks that you’re considering switching to a Linux or Windows machine?

I'm not the one you quoted, but running in-memory DBs is one such case: you can easily use up 1TB+ of memory. Digital twin simulation can eat up more than 192GB, especially when you run larger factory-size models. I occasionally work with 3D models scanned with LiDAR + camera, which are then reconstructed into Unreal/Unity environments much larger than 192GB. You might also look into scaling reinforcement learning for autonomous systems applications, which can have much larger requirements. If you dive into the Nvidia world with Drive Sim and environment + sensor simulation, you're looking at two fully loaded DGX workstations, at least initially for getting things up and running. Further work is then usually done on a cluster.

How do you not plan for the most common device used in PCIe slots? WTF is wrong with Apple?

They did plan; they planned not to use it. The biggest use case for putting video cards in a machine like this is not gamers. Apple thinks it has a solution for the reasons people buying a $50k machine put video cards in it, and they might be right or they might be wrong; only real-world results will tell.

Their solution for gaming is based on Metal and their optimized internal GPUs; we'll see how that goes as well.

While the new Mac Pro features six available PCI Express expansion slots for audio, video capture, storage, networking, and more, the desktop tower is no longer compatible with graphics cards. Instead, graphics processing is handled entirely by the M2 Ultra chip, which includes up to a 76-core GPU that can access up to 192GB of unified memory.

Apple's hardware engineering chief John Ternus briefly touched on the matter in an interview with Daring Fireball's John Gruber last week, explaining that expandable GPU support for Apple silicon is not something that the company has pursued.

"Fundamentally, we've built our architecture around this shared memory model and that optimization, and so it's not entirely clear to me how you'd bring in another GPU and do so in a way that is optimized for our systems," Ternus told Gruber. "It hasn't been a direction that we wanted to pursue."

Another limitation of the new Mac Pro compared to the Intel-based model is the lack of user-upgradeable RAM, given the unified memory is soldered to the M2 Ultra chip. In addition, the Intel-based model could be configured with up to 1.5TB of RAM, which is 8× as much as the 192GB maximum for the Apple silicon model.

There are certainly advantages to the new Mac Pro and its unified architecture. For example, Apple says the new Mac Pro is up to 3× faster than the Intel-based model for certain real-world workflows like video transcoding and 3D simulations. For video processing, Apple says the new Mac Pro's performance is equivalent to an Intel-based model with seven Afterburner cards. For overall CPU performance, the new Mac Pro's $6,999 base model is up to 2× faster than a 28-core Intel-based Mac Pro, which started at $12,999.

The new Mac Pro is available to order now, and launches in stores on Tuesday. Customers who don't need PCI Express expansion should consider the Mac Studio, which can be configured with the M2 Ultra chip for $3,000 less than the Mac Pro.

Article Link: Apple Executive Discusses New Mac Pro's Lack of Graphics Card Support

Apple can sugarcoat it however they want. They adapted a cellphone-like chipset structure to a desktop work environment, and these are the limitations. He didn't even give an assessment of whether it's as good; he simply stated that they didn't think about it. Because they can't do it.

Not with the direction that they chose. That said, given the way they can stack the processors and get nearly linear performance gains, there's got to be some theoretical way they could do the same thing with the GPU.

They can keep adding cores, cores, cores, cores, but even the best of what they have now is still low-end compared with the PC market and its preferable discrete solutions.

So basically, if I want improved GPU performance, say, two years from now, I will have to buy an entire brand-new Mac Pro? Lol, are you kidding me? This has to be a joke. Those things run about 7 grand BEFORE tax. And as we know, those M2 graphics will be out of date in just a couple of years and simply cannot do everything that discrete GPUs can do. Apple is basically putting us on a Mac Pro subscription. I've officially heard it all. 🤣🤣🤣🤣

It's not, and I never said it was. I think what Nvidia is doing is amazing. Can't wait for Apple to have a version of RTX!

Fair enough, though we can also make the case the other way around: it’s crazy that Nvidia is able to beat Apple’s dual GPU with a single four-year-old GPU that’s much cheaper than Apple’s.

RTX or not, the bottom line is which one offers superior performance and better bang for the buck for the end user. Any argument that Nvidia uses X to its advantage can be made against Apple too.

I don't know what everyone is talking about. Of course you can use an external graphics card with the new Mac Pro. Just use the Mac to VNC or SSH into a Windows machine of your choice and voilà.

Sadly, that's what I do (SSH into a Linux box, but same difference).

I think their model makes sense. Sure, they can’t fill every market gap, but I would argue that those who need more than 192GB of RAM or dedicated GPUs are a very small percentage of the market, and not worth the headache for Apple of figuring out how to make its SoC work for that buyer.

You can argue that it's a small part of the market but that's just an excuse. It doesn't cover for the fact that they can't solve that issue unless they build some new hardware setup.

These lazy rationalizations don't help anyone. The tech is right there for everybody to assess: a shared memory structure and integrated graphics are simply not going to compete with real workstations. That's the end of it. It's so simple.

Can you give an example of your workflow where 192GB is not sufficient and causing so many roadblocks that you’re considering switching to a Linux or Windows machine?

Linux already rules in areas like genomics and bioinformatics. In its highly compressed representation, the NCBI "nt" database is currently 297 GB (and growing). Uncompressed, it's well over a TB; that's not the form in which it's commonly used, but some users would use it that way. The "nr" database is currently 217 GB (and growing).

The 2019 Mac Pro was competitive in these areas (although Apple's prices for RAM upgrades were not), but the 2023 Mac Pro is not.


I still don’t understand the current Mac Pro. Why didn’t Apple come up with an M1 Ultra Mac Pro last year? Was it just to boost Mac Studio sales, or did they want to axe it?

Probably somewhere north of 95% of the people who need M2 Ultra performance don’t need PCI-E. But abandoning the other 5% of that already small market can lead to a domino effect. It isn’t strange for games, video tools, or 3D-modeling software to require certain graphics card functionality that Apple doesn’t offer. Maybe only 0.01% of Apple’s customers need such functionality, but you’re keeping them from doing the best work they could. Yes, the graphics in the M2 Ultra are likely amazing, but it’s lacking certain functionality, and that’s what I think the fuss is all about.

I really hope Apple could just enable PCI-E graphics cards in the new Mac Pro with a firmware update; otherwise I think the Mac Pro is finally dead. Yes, there are people who need PCI-E without graphics cards, but that group is so small Apple can’t afford to make a Mac Pro just for them, and those people aren’t going to invest in a machine Apple doesn’t look confident about in the long run.


Okay, they just confirmed upgradable memory and GPUs are not coming to Apple Silicon, so now we can put that discussion to rest.

I don't think they would admit it even for, say, the next Mac Pro using M3, or nobody would buy the M2 version knowing the next one has the worthwhile upgrades.

This is Apple's arrogance on display. They know better; they build better silicon. Why would anyone want anything more? Nvidia and AMD have only been making graphics chips for 30 years. But Apple's M2 is superior. The hubris is off the charts.

Besides a graphics card or a capture card, what does Apple think people are going to do with six PCIe slots?

It’s called Mac Studio.

That's overpriced too.

The solution does not require new hardware. It requires new software. The biggest use for a graphics card in this market is computation, not displaying stuff. It would not be that hard to add drivers for scientific computing. The reason they don't put in that effort is political: they have a long-standing disagreement with NVIDIA. Beyond that, they don't want to admit there are uses where their current solution won't work.

Here’s one for you: maybe Apple just doesn’t care to have that part of the market anymore. How many Mac Pros were sold in total vs. MacBook Pros and Mac Studios since Apple Silicon became available? If I were Apple, I’d give that market up rather than twist the engineers into a pretzel trying to make Apple Silicon work in a way it’s simply not designed to.

Besides a graphics card or a capture card, what does Apple think people are going to do with six PCIe slots?

CyberPorn.

I believe the term for this in 2023 is "gaslighting"?

The solution does not require much pretzel twisting. Arm code for CUDA already exists. Just port it over to macOS, then use it for computation only. There would be no need to tie it into a display.

I believe the term for this in 2023 is "gaslighting"?

Dude, that's just crazy! I can't believe you would say something like that.

'Cause Apple is already smashing what AMD is doing in parts of the GPU realm. The first bench for Blender has just popped up, and it shows the M2 Ultra almost doubling the performance of the M1 Ultra in a heavily GPU-dependent application.

Nvidia, of course, is miles ahead in Blender because of RT cores, which are a big, big benefit in ray-tracing tasks. But who's to say RT cores aren't coming to the M3?

Apple products benchmark really well. It's almost as if they're designed to do exactly that. Apple is always better; nobody else knows how to make chips. Lucky for everyone, Apple is saving the world. I'm glad they were able to cobble together some vacuum tubes and make a processor. But they really need some humility; their chips are not the greatest ever.

