Apple Open to Expanding New Child Safety Features to Third-Party Apps

- Thread starter MacRumors

Apple is laying the foundation with the on-device scanning of the iPhone. The Mac is next along with the iPad. Mark my words. iMessage and FaceTime scanning (including text) is going to be next. This is going to go deeper and become an integral part of the OS where it will eventually be scanning regardless of what a person has toggled on or off.

If it is true and the CSAM image analyzer isn't applied en masse to the library, then (as above):

1. Perps will just crop the image by a row of pixels or use an image editor to change one pixel and the system will be useless.

2. Perps will turn iCloud Photos off.
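Whether a one-pixel edit defeats matching depends on the kind of hash. The sketch below is only an illustration under stated assumptions: it contrasts an exact cryptographic hash (SHA-256 over raw pixel bytes) with a toy "average hash", a simple perceptual hash. Neither is Apple's actual algorithm (Apple describes its NeuralHash as a perceptual hash, but its details are not reproduced here), and the names `exact_hash`, `average_hash`, and `hamming`, as well as the synthetic 64x64 image, are made up for the example.

```python
import hashlib


def exact_hash(pixels):
    """Cryptographic hash of the raw pixel bytes; any change alters the digest."""
    data = bytes(v for row in pixels for v in row)
    return hashlib.sha256(data).hexdigest()


def average_hash(pixels, size=8):
    """Toy perceptual hash (illustrative only, not NeuralHash).

    Averages the image into size x size blocks, then emits one bit per block:
    1 if the block is brighter than the mean of all blocks, else 0.
    """
    h, w = len(pixels), len(pixels[0])
    blocks = []
    for by in range(size):
        for bx in range(size):
            y0, y1 = by * h // size, (by + 1) * h // size
            x0, x1 = bx * w // size, (bx + 1) * w // size
            vals = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            blocks.append(sum(vals) / len(vals))
    mean = sum(blocks) / len(blocks)
    return [1 if b > mean else 0 for b in blocks]


def hamming(a, b):
    """Number of positions where two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


# Synthetic 64x64 grayscale image (a diagonal gradient), values 0..252.
original = [[2 * (x + y) for x in range(64)] for y in range(64)]

# "Change one pixel" as described in point 1.
edited = [row[:] for row in original]
edited[10][10] = 255

print("exact hashes equal:", exact_hash(original) == exact_hash(edited))
print("perceptual bits differing:",
      hamming(average_hash(original), average_hash(edited)))
```

With an exact hash, the single-pixel edit produces a different digest, which is the evasion described in point 1. With the toy perceptual hash, the edited image maps to the same bits, so how well that trick works hinges on how tolerant the real hash is.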

The people who will be subjected to this are the ones who have nothing to hide and just want convenience. Then it will be useful as a mass surveillance tool where government X can insert items into the CSAM database and find out who among the population has those images on their phone.

Whether that be in China, Egypt, the US, or elsewhere, it makes it trivial to scan everyone's photos for thought-crimes.

If this is a good idea, why shouldn't they use AI to analyze images for child porn? That is the next step. Or perhaps require that cameras and mics on the phone and computer be on continuously so that they can monitor homes for safety. It is for the children.

Every step that empowers this type of surveillance is abuse, and lied about - just ask Clapper and Brennan.

You’re not answering the question. I described how the likely API works and asked how it could be misused, since you were very concerned about its potential for misuse. You said, they could arrest you. They can do that already, and it doesn’t have anything to do with the API.

They might not know that you had a photo of a student standing in front of a tank on your phone. It could be the governor of Virginia in blackface or a klan robe. It could be a photo of a boulder that is deemed racist. Once they know that, they begin more monitoring, arrest you, etc.

The API itself isn't the issue, the issue is the purpose to which it could be put: finding any image on any phone that has iCloud Photos turned on that is indicative of someone who is at odds with whatever authoritarian government anywhere in the world.

Surveillance would explain why Apple suddenly backed away from end-to-end on-device iCloud encryption "under pressure from the US government". This "feature" wouldn't have been possible with end-to-end on-device encryption which was why it was critical that it was squashed before it rolled out.

Whether this is a smokescreen for such capability or not, the end result is the same: the ability to conduct massive, nearly instant surveillance and searches for particular images worldwide.

All this privacy stuff is starting to just seem like theater

It’s funny people think this is about the children, that’s just the excuse they use to start opening the door.

I agree with you 100%. However, Apple is not obligated to scan our iPhones. There has to be an alternative way to fight against child pornography.

Privacy is being exposed at its fullest, especially now that third parties will be involved. Imagine if the information/photos get leaked by a third party? Who's held responsible for that?

It’s all done on-device, so yes it could.

This new "expansion" announcement is an immediate spreading of the liability due to the inevitable privacy class action lawsuit that will "drop" in the coming weeks.

The cost (in more ways than one) is getting to be a lot for both kinds. I think that a lot of people need to start determining how much they are willing to pay.

If I were a developer on the store, there is no way in the world I would touch that safety API.

Apple used to be about privacy and security. Not any more. Apple has no more high ground to stand on.

They weren't, they just marketed it to squeeze that penny Timo style.

Apple has already stated it happens on both the Mac and iPad at the same time, in next month's upgrade.

I think this subject is the first time I have seen you really 'fired up' about something on here, besides iPhone ordering and launch.

So they are doubling and tripling down. Stunning. I honestly have a hard time grasping this overnight implosion. It's like they lied to us from day one to suck us in. In the internal battle between the old guard, good business, traditional Democrat crowd (the guys who worked with Jobs) and the new, woke, diversity hires, the diversity hires must have won the day. While CSAM is a terrible thing and an important issue, the reality is that it likely isn't seen and doesn't impact 99.99% of Apple users, yet Apple is invading every one of its law-abiding, decent users to likely NOT catch any actual abusers. Here's the sobering reality: It is probably more likely that innocent people will be ensnared in false positives than it is that actual bad actors will be caught. Yet, the new woke Apple doesn't seem to care. Let that sink in. Steve cared about the USER first and it showed. Today's Apple, well, doesn't. The only thing that will have a chance to change this, although one wonders what is left to save, would be a hit to the bottom line. Conduct yourselves accordingly.

I think the scanning of text on iMessage will come before the end of next year. I think Apple is also going to integrate some kind of safety monitoring with FaceTime as well when the app is turned on.

As sensitive as this new feature is, I don't think Apple should be opening it up to third-party apps. Big mistake, in my opinion.

Agreed. 1000%.

I don't get how they didn't expect this. I get the "why" behind all of this from their perspective. It allows them to continue to say they can't access our information and claim true E2E encryption. Yet, at the same time, this workaround basically takes away some of the benefit and appeal of E2E encryption...

IMHO they would've been better off saying, "Listen, we haven't been doing this, but CSAM is a real problem, so we have to start doing what other companies do and scan iCloud Photos for CSAM. Everybody else is doing this already, and Apple needs to get on board given the size of our iCloud platform."

I also don't get why the NCMEC is applauding this so much - other than the simple fact that Apple is finally starting to do something about CSAM on their platform. I would've thought the NCMEC would've been disappointed that a criminal can simply turn off iCloud Photos to avoid this.

Seems like MacRumors' AAPL holders skipped commenting on this one. Although I'm sure they would even sell their souls for more profit.

Very easy. Someone (Apple) needs to decide the images that need to be compared and hash them. Government tells Apple, in order to sell phones in our country, you need to add this image (nothing to do with kiddy porn) and notify us of all individuals flagged with it.

You may argue, Apple would never agree to this but unfortunately their moral high ground gets trumped by shareholders' desire for money. China already dictates much of what Apple does, which goes directly against what they have been and are preaching. Opening it up to third parties just made life a lot easier for using this system for things it wasn't designed to do. Great scapegoat for Apple when things go wrong.

Apple has the key to view photos and other data, if someone uses iCloud backup, as well as messages in the Cloud.

If Apple had come forward and said we will start scanning any pictures you upload to our servers, I don't think Apple would have gotten the kind of pushback they have.

Pandora's Box?

Apple today held a questions-and-answers session with reporters regarding its new child safety features, and during the briefing, Apple confirmed that it would be open to expanding the features to third-party apps in the future.

As a refresher, Apple unveiled three new child safety features coming to future versions of iOS 15, iPadOS 15, macOS Monterey, and/or watchOS 8.

Apple's New Child Safety Features

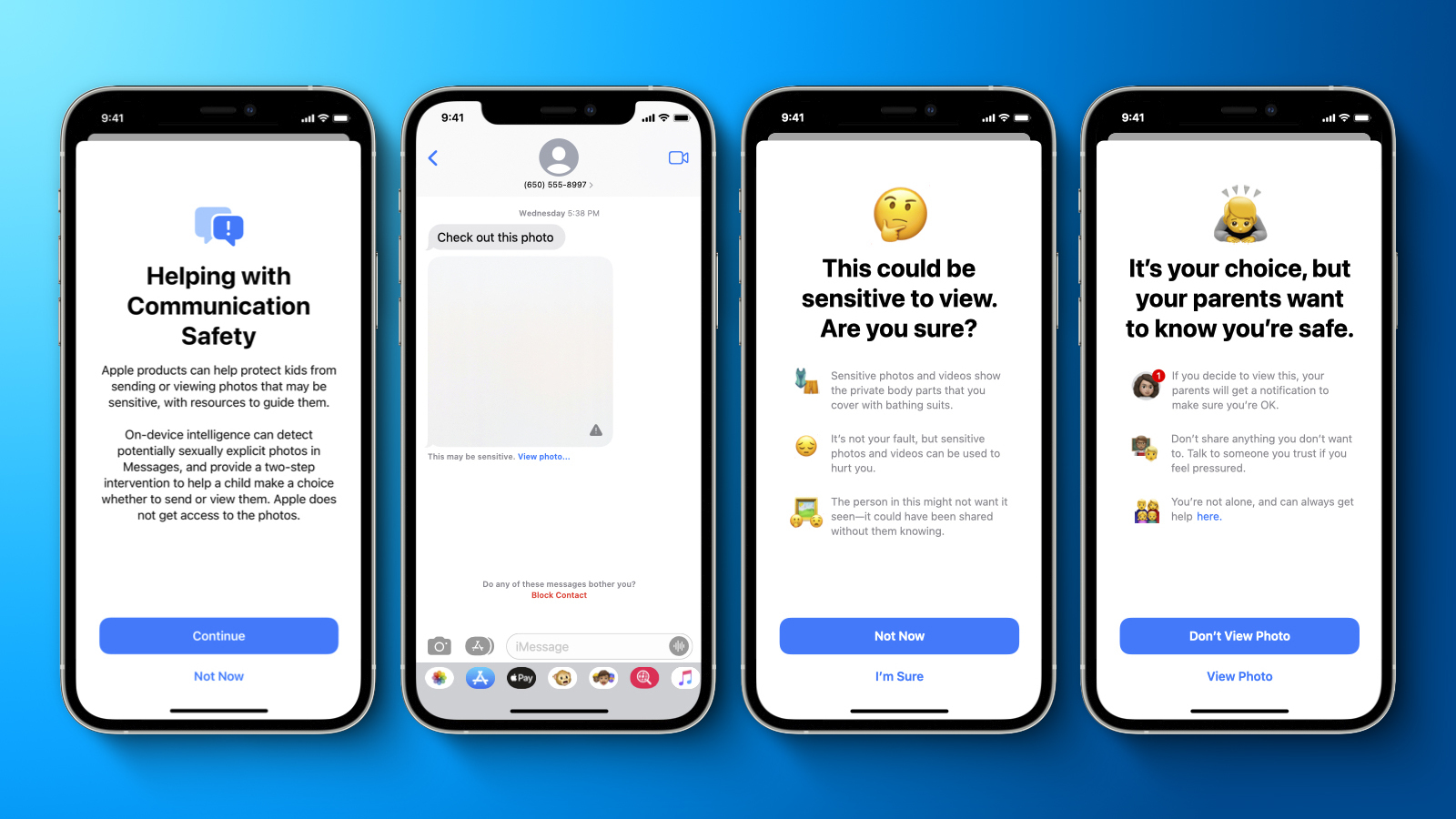

First, an optional Communication Safety feature in the Messages app on iPhone, iPad, and Mac can warn children and their parents when receiving or sending sexually explicit photos. When the feature is enabled, Apple said the Messages app will use on-device machine learning to analyze image attachments, and if a photo is determined to be sexually explicit, the photo will be automatically blurred and the child will be warned.

Second, Apple will be able to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, enabling Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC), a non-profit organization that works in collaboration with U.S. law enforcement agencies. Apple confirmed today that the process will only apply to photos being uploaded to iCloud Photos and not videos.

Third, Apple will be expanding guidance in Siri and Spotlight Search across devices by providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report.

Expansion to Third-Party Apps

Apple said that while it does not have anything to share today in terms of an announcement, expanding the child safety features to third parties so that users are even more broadly protected would be a desirable goal. Apple did not provide any specific examples, but one possibility could be the Communication Safety feature being made available to apps like Snapchat, Instagram, or WhatsApp so that sexually explicit photos received by a child are blurred.

Another possibility is that Apple's known CSAM detection system could be expanded to third-party apps that upload photos elsewhere than iCloud Photos.

Apple did not provide a timeframe as to when the child safety features could expand to third parties, noting that it still has to complete testing and deployment of the features, and the company also said it would need to ensure that any potential expansion would not undermine the privacy properties or effectiveness of the features.

Broadly speaking, Apple said expanding features to third parties is the company's general approach and has been ever since it introduced support for third-party apps with the introduction of the App Store on iPhone OS 2 in 2008.

I guess this is it for privacy on all fronts.

Apple caving in is a bit surprising, but… someone must have “convinced” Tim Apple that this is the way forward. By any means necessary.

I can see a loooot of company admins starting to get questions from the higher ups. Like “Is our data safe”? What about content on a company network, that has ANY Apple product connected? It will surely not be “only” iPhones and iPads (the newest one sharing the same capabilities and chipsets with Mac mini, MacBooks, iMacs).

How about the odd MacBook with Apple Silicon used in company settings? Suddenly it looks like Apple has created a whole new market: Protection against unknown “data exports” with “unknown content” to “unknown receivers” for “unknown reasons”.

How about iPhones used in South America? In Europe? In Asia? In the Middle East? What if a European citizen transfers in New York on the way to Guatemala? Will contents suddenly be hashed and transferred to US-servers? You’re legally spied upon by the NSA, the CIA and a whole host of US agencies. Airside EU citizens are not protected in any way by US laws. US citizens outside US soil are not protected by US laws either, and certainly not from surveillance by foreign powers. Democracies or not. The laws that apply are the laws of the land where you stay. Like it or not.

How do you distinguish hashes of images and hashes of documents or books? The data pushed to Apple’s servers, will - of course - be encrypted, and no ordinary person will know, what is actually transferred, when push comes to shove. You’ll have to trust Apple to ONLY do good, and NEVER cave into ANY government pressure ANYWHERE. All countries in this world have security laws, that require any legal entity to support any legal request to the fullest - also to accept, that the existence of any such request is not divulged to affected parties.

If Apple has access to phone content, and the ability to transfer information of any content on the device (or any connected content e.g. content on your local NAS, or information present on the company network, you’re connected to), no modern country needs to create new laws to get access to the information. The twist in China was, that China demanded that data of Chinese citizens be stored in China, and not in the US (Imagine, that data from US citizens were to be stored on Chinese servers - that would probably lead to a quick response from US-authorities too, don’t you think?).

No new laws will be required anywhere, if Apple gets even an inkling of a slight thought of granting permission to execute ANY scans, but Apple has just signalled “willingness” and “ability”, where none was so blatantly present before.

This is the “new privacy” in modern “double talk”. What governments WILL get access to and know, will of course be available to others. NSA could not even keep their own spy-tools safe; why do you even suspect, that any government agency can keep things secret. It’s not that US military or security personnel are not engaging in highly profitable “private information distribution enterprise” covering - maybe hitherto - widely unknown - ahem - “government facts” from time to time (I refrain from using the word intelligence, where governments are involved - expediency yes, but intelligence? No!).

What Apple is suggesting looks like (US controlled!) NSO Group Pegasus on steroids, and it begs the question “why”?

Now, whatever REAL reason Apple has for the new approach, what is to prevent other multinationals from getting inspired? And governments everywhere?

Should any Word document, you open on a computer, automatically be scanned, and a “hash” automatically sent to Microsoft for further processing and possible action? What will be the basis for the hash? A set of words or phrases? Like “democracy”, “freedom” or even “Winnie the Pooh” etc.?

Will - for instance - US citizens traveling abroad be subject to “foreign scanning rules” when traveling to, let’s say, Mexico? Using their iPhones in Cancun, Oaxaca, San Miguel de Allende or Mexico City? How about a European departing from Helsinki, Finland passing through Hong Kong in transit to Brisbane, Australia? Many quite ordinary books, documents, songs and videos pose no problem whatsoever in Europe, the US, Canada etc., but they will be “illegal and punishable content” by existing on your phone in Hong Kong - also “airside”.

Who is to say that Byelorussia wouldn’t get “hashes” of “items of interest” on their citizens? That country recently hijacked a Ryanair flight between two European capitals and forced it to land by threats from fighter aircraft because a dissident was spotted on the passenger list! Lukashenko is a ruffian with around 30 years in power, and few regimes would behave with such obvious stupidity, but that does not prevent any regime from seeking access to your physical person in order for you to help the authorities in their enquiries - based on a hash of a word or phrase in ANY language.

Just for fun, two completely innocent examples: In Denmark you can see signs in elevators containing the words “I FART” (which light up, when the elevator is moving, meaning “AT SPEED”) and “GODSELEVATOR” (which just means “GOODS ELEVATOR”). Now imagine, what religious police in some countries could interpret the latter term to represent? Blasphemy? In some countries punishable by death. Also for minors. After a looong period of “interrogation”, if you’re extremely lucky, you may not even be keen on being released into “freedom” without any emergency hospital treatment.

Do you really think, that the world will let Tim Apple control, what is scanned, hashed and transferred? And would it at all be guaranteed, that it was ONLY hashes, and not complete sentences or paragraphs containing a “suspect phrase”, that was transferred. Or a hash indicating an image of Martin Luther King on your phone today - and only today - in a future Trump-leaning - or worse - US administration? The cloud never forgets.

Just to illustrate, that if you open Pandora’s box, you’d better be absolutely certain about, what you’re starting. There’s no way back. Only forward. Especially, when the “power hungry” smell “opportunities” for influencing the future limits on the freedom for “the unwashed masses”.

Until now, you had the possibility or at least an illusion of privacy. Encryption could go a long way, but most countries in this world have no problem breaking any encryption, if you’re in their hands. They just use the good ol’ universal decryption key (you know, the “rubber hose” approach or the CIA method “water boarding” deemed legal by a former US administration).

What Tim Apple has demonstrated is that the time of privacy has passed. It will no longer exist for any of us. Governments will see to that, and you can do nothing to prevent it. Pandora's box has opened, and there is no way back. Not for Tim Apple. Not for Apple. Not for any of us.

Life will become rough in a lot of places on this planet within the foreseeable future.

Possibly waiting for it to launch before demanding that the currently mentioned defeat methods are eliminated.

Am I the only one (even if I'm not a native English speaker) to see that 80% of the commenters here have not read or understood how this will work?

1. Child safety only reports to PARENTS, NOT APPLE, GOOGLE, FACEBOOK, THE POPE OR POLICEMEN.

2. CSAM matches are reported only to Apple and, AFTER verification, to NCMEC, which is not a government or police.

3. Child safety doesn't work unless your phone is a "child" account linked to a "parent" account.

Third-party apps will have the possibility to report to PARENTS if their child has an image like that, so it is not privacy-invading.

The only potentially privacy-invading one is the CSAM check. Child safety and CSAM are two different systems that report to two different parties: parents for child safety and Apple for CSAM.

So, you have a new function/API in iOS, something like, is_it_CSAM(img). You as some third party app (good or nefarious), give this new function an image and it tells you “true” or “false”, answering the question “does this image match one of the hashes in the CSAM hash database that’s baked into iOS 15?” - you can’t supply anything other than an image, and it only answers yes or no.

Please explain how some nefarious actor, Chinese or otherwise, can do something bad with this. Not hand waving. An actual bad thing they could do. You seem very certain there’s an obvious horrible problem here, so it should be easy to explain.

An actual bad thing they can do is extend Apple’s demonstrated willingness and ability to compare photos to proscribed data in a database and make it a condition of selling their devices in that country.

is_it_CSAM(img) becomes do_we_approve(img) or txt or doc or xls or…

Apple already conforms to the will of, say, China. Now they’ve demonstrated additional functionality that would be very useful in the seeking of proscribed information of all sorts.
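The exchange above can be made concrete with a small sketch. It assumes a hypothetical yes/no matching function like the `is_it_CSAM(img)` imagined in the thread; nothing here is a real iOS API. `image_hash` is a stand-in that uses SHA-256 of the raw bytes (a real system would use a perceptual hash), and `make_matcher`, the database contents, and the byte strings are invented purely for illustration.

```python
import hashlib


def image_hash(img: bytes) -> str:
    """Stand-in hash (assumption): SHA-256 of the raw image bytes."""
    return hashlib.sha256(img).hexdigest()


def make_matcher(hash_database):
    """Build a yes/no matcher bound to one fixed hash database.

    The caller of the returned function can pass only an image and gets
    back only True or False; it never sees the database itself.
    """
    db = frozenset(hash_database)

    def matches(img: bytes) -> bool:
        return image_hash(img) in db

    return matches


# Hypothetical database of known-CSAM hashes shipped with the OS.
is_it_csam = make_matcher({image_hash(b"known-bad-image-bytes")})

# The same code with a different (hypothetical) database answers a
# different question, which is the concern raised in the reply.
do_we_approve_check = make_matcher({image_hash(b"protest-photo-bytes")})

print(is_it_csam(b"holiday-photo-bytes"))
print(is_it_csam(b"protest-photo-bytes"))
print(do_we_approve_check(b"protest-photo-bytes"))
```

The caller learns only true or false, as the first post says. The reply's point is also visible: the matching code is identical in both cases, and what gets flagged is decided entirely by whoever controls the database.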

No doubt.

I believe most of the negative comments you are seeing are in regard to the on-device phone scanning of images.

I am not against the new parent opt-in feature for iMessage, and I don't recall seeing anyone here railing about it due to privacy concerns, although I could have missed it. I think that that is a good thing although I think the age should have been higher than 13.

Yes, but I have seen many posts that seem to think this will apply to their smartphone and that they cannot do anything about it, and others that think Child safety and CSAM are the same technology.

The only one for which we must ask Apple for proof that their list will never be used for anything other than CSAM is the CSAM check; the child safety one cannot be "perverted", since it only warns the phone that is the "parent" on the family account.
