Smart move. It's important for antitrust reasons that Apple does not use its dominant position to create an illegal monopoly on destroying the Fourth Amendment.

Apple today held a questions-and-answers session with reporters regarding its new child safety features, and during the briefing, Apple confirmed that it would be open to expanding the features to third-party apps in the future.

As a refresher, Apple unveiled three new child safety features coming to future versions of iOS 15, iPadOS 15, macOS Monterey, and/or watchOS 8.

Apple's New Child Safety Features

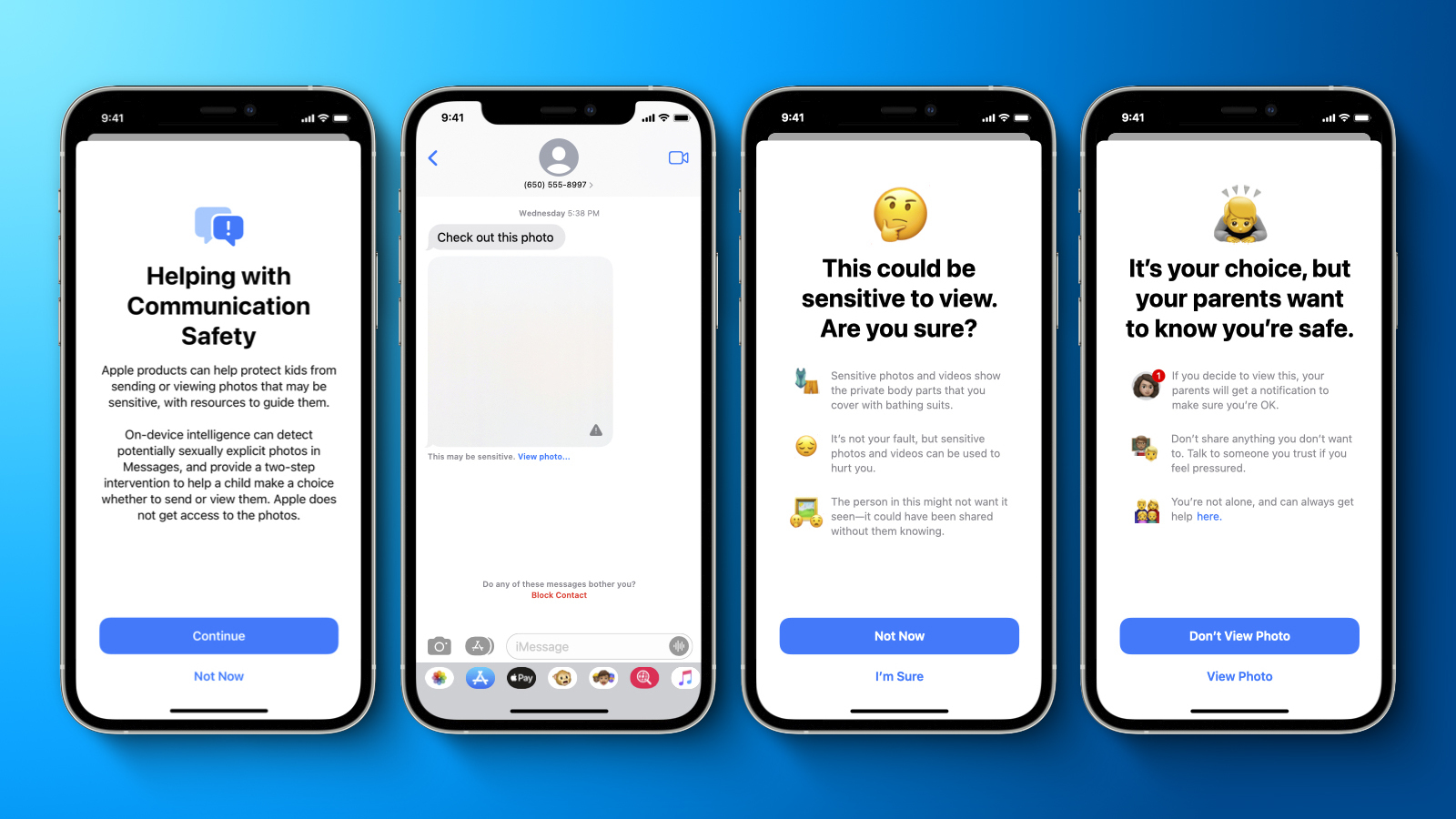

First, an optional Communication Safety feature in the Messages app on iPhone, iPad, and Mac can warn children and their parents when receiving or sending sexually explicit photos. When the feature is enabled, Apple said the Messages app will use on-device machine learning to analyze image attachments, and if a photo is determined to be sexually explicit, the photo will be automatically blurred and the child will be warned.

Second, Apple will be able to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, enabling Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC), a non-profit organization that works in collaboration with U.S. law enforcement agencies. Apple confirmed today that the process will only apply to photos being uploaded to iCloud Photos and not videos.

Third, Apple will be expanding guidance in Siri and Spotlight Search across devices by providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report.

Expansion to Third-Party Apps

Apple said that while it does not have anything to share today in terms of an announcement, expanding the child safety features to third parties so that users are even more broadly protected would be a desirable goal. Apple did not provide any specific examples, but one possibility could be the Communication Safety feature being made available to apps like Snapchat, Instagram, or WhatsApp so that sexually explicit photos received by a child are blurred.

Another possibility is that Apple's known CSAM detection system could be expanded to third-party apps that upload photos to services other than iCloud Photos.

Apple did not provide a timeframe as to when the child safety features could expand to third parties, noting that it still has to complete testing and deployment of the features. The company also said it would need to ensure that any potential expansion would not undermine the privacy properties or effectiveness of the features.

Broadly speaking, Apple said expanding features to third parties is the company's general approach and has been ever since it introduced support for third-party apps with the introduction of the App Store on iPhone OS 2 in 2008.

Article Link: Apple Open to Expanding New Child Safety Features to Third-Party Apps

Ok, Apple went from Privacy vision to creep vision. Apple is completely hypocritical.

If one of those 3rd party apps happens to be from a developer that's 47% owned by a Chinese tech company... which is controlled by a Chinese state corporation with direct ties to the Chinese Communist Party... well... they'll never use this as a backdoor into scanning iPhones of U.S. users to detect unflattering images of Chinese Communist leaders... right?

So, you have a new function/API in iOS, something like is_it_CSAM(img). You, as some third-party app (good or nefarious), give this new function an image and it tells you “true” or “false”, answering the question “does this image match one of the hashes in the CSAM hash database that’s baked into iOS 15?” You can’t supply anything other than an image, and it only answers yes or no.

Please explain how some nefarious actor, Chinese or otherwise, can do something bad with this. Not hand waving. An actual bad thing they could do. You seem very certain there’s an obvious horrible problem here, so it should be easy to explain.
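As a rough model of the hypothetical API in the post above, the sketch below exposes a single yes/no function. The name is_it_csam follows the post's is_it_CSAM(img); the built-in digest set and the use of SHA-256 as a stand-in for a real perceptual hash are assumptions made purely for illustration, not a description of how iOS works.

```python
import hashlib
from typing import FrozenSet

# Hypothetical stand-in for a digest list "baked into" the OS.
# SHA-256 of raw bytes is used only for illustration; a real matcher would
# use a perceptual hash so that resized copies still match.
KNOWN_DIGESTS: FrozenSet[str] = frozenset({
    hashlib.sha256(b"example-known-image-bytes").hexdigest(),
})


def is_it_csam(img: bytes) -> bool:
    """Hypothetical API: the caller passes only image bytes and gets True/False.

    There is no parameter for supplying a custom list; whoever ships
    KNOWN_DIGESTS decides what counts as a match.
    """
    return hashlib.sha256(img).hexdigest() in KNOWN_DIGESTS


print(is_it_csam(b"example-known-image-bytes"))  # True
print(is_it_csam(b"holiday photo"))              # False
```

The caller learns exactly one bit per image. What that bit means depends entirely on the contents of the shipped set, which is the question the rest of the thread argues about.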

It’s hash data, not the actual picture...

Twitter and Facebook have their AI implementations to flag misinformation, and many times they shut down the wrong accounts and apologize for it. In the case of Apple’s AI making a mistake identifying a photo, it’s not your account being shut down; it’s law enforcement at your door.😒

MacRumors is overboard with the sensationalism lately.

I am a little bit confused by your answer:

1. A hash function is by definition a non-reversible function (look up a "perfect hash" if you like)

2. No need for Apple to reverse engineer the image anyhow - the "safety voucher" already contains a copy

so sorry - I cannot follow you.

Secure multi-party computation - Wikipedia

This is the original web page I got my idea from. Much better than my 2-second skimming (I did not read deep enough for comprehension).

Help me understand, because I clearly don’t get why everyone is so angry about this.

If I offered an app with the ability to upload images or whatever, and I’m legally and morally compelled to prevent child abuse images, why would I not want to use a free service from Apple that does this in a way that maximizes privacy?

I get that no corporation can be trusted. Facebook, Boeing, every single Wall Street firm, et al.: all scum. I get the slippery slope arguments. But even if you don’t trust Apple’s leadership and their intentions, trust how they make money. They sell premium devices which will expand more and more into health and financial transactions. There is a lot more money for Apple there than in selling you a new iPhone or Mac every 3 years. And this strategy needs rock-solid security and privacy. Plus, they need consumer trust. (You would be nuts to give your medical info to Facebook, Google, Amazon, Verizon.)

I think the truth is far more simple and benign:

1. Apple wants end-to-end encryption on iCloud. They haven’t done it because people lock themselves out of their accounts, losing access to a lifetime of memories and critical data, and because law enforcement bullies tech companies.

2. So Apple solves these problems on their own terms. At WWDC we see the ability to designate a person who has access to your iCloud. Yes, it was framed as post-life, but it’s a safety mechanism for any scenario when someone can’t get access. And they decide to focus on child safety because it’s the law, it’s morally correct, and because this is an area where they can have the most impact.

Facebook, Google, Yahoo, Adobe, Amazon, you name it, all scan your uploads for child porn. All of them. Again, it’s the law.

Please explain how some nefarious actor, Chinese or otherwise, can do something bad with this. Not hand waving. An actual bad thing they could do. You seem very certain there’s an obvious horrible problem here, so it should be easy to explain.

I would think that knowing who you are and arresting you would be enough for anyone to think it's bad. (And I'm talking about the U.S., not China, they already could do that just as easily.)

I keep seeing the term “hash” tossed around with this image analysis. CSAM is not traditional hashing.

Hashing is, in layman's terms, one-way encryption. Standard security practice. Instead of Apple storing my plaintext password, they hash it and then store it. When I try to log in later, they hash the password I just entered and compare it against their database. The original password is unviewable in the event of a security breach. But… even with salted hashes, the passwords are limited in number of bytes.
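The store-then-compare login flow described above can be sketched with Python's standard library. PBKDF2-HMAC-SHA256, the 16-byte random salt, and the iteration count are assumptions chosen for illustration; the post does not say which scheme Apple uses for passwords.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 100_000  # illustrative work factor


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest). Only these two values are stored, never the password."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt per user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(attempt: str, salt: bytes, stored_digest: bytes) -> bool:
    """Hash the login attempt with the stored salt and compare in constant time."""
    _, attempt_digest = hash_password(attempt, salt)
    return hmac.compare_digest(attempt_digest, stored_digest)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))                   # False
```

The stored digest is a fixed 32 bytes regardless of password length, which is the "limited in number of bytes" point the post goes on to contrast with megabyte-sized images.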

Now compare that to an image that is megabytes in size. For hashing to work, the following would have to occur:

1. Apple would have to obtain an original (illegal) image, identify that it is illegal, and store the hash

2. That image would need to be redistributed unchanged (no re-sizing or modifications)

3. The distributed copy would need to be hashed and compared

Even a single bit or byte in the wrong place will result in the copy’s hash not matching the original.
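The fragility described in step 3 is easy to demonstrate with a cryptographic hash. SHA-256 is picked here only as an example of an exact-match hash (the post does not name one), and the byte buffer is a synthetic stand-in for an image file.

```python
import hashlib

# About 1 MB of bytes standing in for an image file.
original = bytes(range(256)) * 4096

# Flip the lowest bit of the first byte: a one-bit "edit".
modified = bytearray(original)
modified[0] ^= 0x01
modified = bytes(modified)

h_original = hashlib.sha256(original).hexdigest()
h_modified = hashlib.sha256(modified).hexdigest()

print(h_original == h_modified)  # False: the digests no longer match
# Count how many of the 256 digest bits differ between the two hashes.
diff_bits = bin(int(h_original, 16) ^ int(h_modified, 16)).count("1")
print(f"{diff_bits} of 256 bits differ")
```

On average about half of the digest bits flip for any change, so an exact-match hash like this one cannot, by itself, recognize a resized or re-encoded copy.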

—

CSAM is far different - some poor souls had the privilege of viewing all that filth, feeding it through machine learning processes, and fine-tuning the recipe to detect illegal images. They are not hashing these images - they are applying ML analysis. We have no idea what standards they are using to label images as bad/illegal - is it Tim Cook’s standards? The United States? The State of California? This is being used to identify criminal activity so you damn well better be concerned about the information that Apple is collecting, how it is being portrayed, and what exactly they are giving to a prosecutor. If/when you get a knock on your door for flagged images, Apple is acting as an expert witness against you. Good luck with that.

Thus, they are LITERALLY scanning your photos.

His point, as I understand it, is that Apple is not scanning your photos. Your phone is scanning its own photos. Apple still doesn’t see them at all unless your phone hits a certain threshold of hash matches.
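The “threshold of hash matches” idea is usually built on threshold secret sharing, and Apple's technical summary describes its design in those terms. As a generic illustration only (not Apple's construction), here is textbook Shamir secret sharing: the secret stands in for a decryption key, each share for one match, and fewer shares than the threshold do not recover the key. The prime, the threshold of 3, and the share count of 10 are arbitrary choices for the example.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # a Mersenne prime; all arithmetic is modulo this field size

Share = Tuple[int, int]


def make_shares(secret: int, threshold: int, count: int) -> List[Share]:
    """Split `secret` into `count` points on a random polynomial of degree threshold-1."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule evaluates the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover(shares: List[Share]) -> int:
    """Lagrange interpolation at x = 0; correct only with >= threshold shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = 123456789
shares = make_shares(key, threshold=3, count=10)
print(recover(shares[:3]) == key)  # True: three matches reach the threshold
print(recover(shares[:2]) == key)  # False (with overwhelming probability)
```

Below the threshold, the interpolated value is effectively random, which is the property the post is pointing at when it says nothing is visible until enough matches accumulate.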

The samsung phones are looking better and better every day.

Cannot believe they are doubling down on this - treating everyone like a nonce until proven otherwise.

Appalling. Awful. Hope Tim chokes on his cereal.

If one of those 3rd party apps happens to be from a developer that's 47% owned by a Chinese tech company... which is controlled by a Chinese state corporation with direct ties to the Chinese Communist Party... well... they'll never use this as a backdoor into scanning iPhones of U.S. users to detect unflattering images of Chinese Communist leaders... right?

They won’t. They can’t, with how this system works.

The CSAM system, which is NOT what this is talking about, only matches known images.

THIS system uses what I presume are Neural Networks to detect sensitive images and warn the user before he/she opens them.

Apple is very, very careful with how they allow apps access to APIs. It sounds to me like this API, if ever released, would essentially allow the app to detect whether the user has a child protection feature turned on and/or pass an image to the API to learn whether it’s a sensitive image or not.

If an app wanted to detect said unflattering photos of certain individuals, it would have to do its own processing to detect those photos, which is the same situation as we have today.

Surveillance as a service. Give up the hubris, Apple. This is a stupid idea; take your licks and leave it in the dustbin of unimplemented tech.

CSAM is far different - some poor souls had the privilege of viewing all that filth, feeding it through machine learning processes, and fine-tuning the recipe to detect illegal images. They are not hashing these images - they are applying ML analysis. We have no idea what standards they are using to label images as bad/illegal - is it Tim Cook’s standards? The United States? The State of California? This is being used to identify criminal activity so you damn well better be concerned about the information that Apple is collecting, how it is being portrayed, and what exactly they are giving to a prosecutor. If/when you get a knock on your door for flagged images, Apple is acting as an expert witness against you. Good luck with that.

I disagree. I have no insight into the actual process, but it sounds to me like these are still hashes. Imagine this:

The image in question is taken and broken up into different sections. Each section on its own is “averaged” out, simplifying the content to grayscale, checking for contrast lines, stuff like that.

Each averaged section is then given an individual value, and all the values are hashed as a single string.

This would explain how a basic hash could match resized/cropped images without using a neural network that could trigger false positives.
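The scheme described above closely resembles a simple, well-known perceptual hash usually called an “average hash”. The sketch below is an illustration under assumptions, not Apple's algorithm: the image is a plain grid of grayscale numbers instead of a decoded photo, the grid is 8x8, and each section is reduced to one bit (brighter or darker than the image-wide mean). Turning the averages into bits, rather than feeding the exact values into an ordinary hash as the post suggests, is what lets a resized copy still match; hashing the raw averages would bring back the one-changed-value fragility.

```python
from typing import List

Image = List[List[float]]  # grayscale values, one list per row


def block_means(img: Image, grid: int = 8) -> List[float]:
    """Break the image into grid x grid sections and average each one."""
    h, w = len(img), len(img[0])  # assumes h >= grid and w >= grid
    means = []
    for by in range(grid):
        for bx in range(grid):
            y0, y1 = by * h // grid, (by + 1) * h // grid
            x0, x1 = bx * w // grid, (bx + 1) * w // grid
            block = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            means.append(sum(block) / len(block))
    return means


def average_hash(img: Image, grid: int = 8) -> int:
    """One bit per section: 1 if the section is brighter than the overall mean."""
    means = block_means(img, grid)
    overall = sum(means) / len(means)
    bits = 0
    for m in means:
        bits = (bits << 1) | (1 if m > overall else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


big = [[(x + y) * 2 for x in range(64)] for y in range(64)]  # synthetic gradient
small = [row[::2] for row in big[::2]]                      # half-size copy
tweaked = [row[:] for row in big]
tweaked[10][10] += 1                                        # one pixel changed
flipped = [row[::-1] for row in big]                        # a different picture

print(hamming(average_hash(big), average_hash(small)))    # 0
print(hamming(average_hash(big), average_hash(tweaked)))  # 0
print(hamming(average_hash(big), average_hash(flipped)))  # 32
```

Matching is then done by a small Hamming distance rather than exact equality. Production perceptual hashes are considerably more elaborate; this only shows why block averaging can tolerate resizing and tiny edits.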

Anybody read Ben Thompson on this?

stratechery.com

Great piece - I'll post the bulk of the conclusion here

I am not anti-encryption, and am in fact very much against mandated backdoors. Every user should have the capability to lock down their devices and their communications; bad actors surely will. At the same time, it’s fair to argue about defaults and the easiest path for users: I think the iPhone being fundamentally secure and iCloud backups being subject to the law is a reasonable compromise.

Apple’s choices in this case, though, go in the opposite direction: instead of adding CSAM-scanning to iCloud Photos in the cloud that they own and operate, Apple is compromising the phone that you and I own-and-operate, without any of us having a say in the matter. Yes, you can turn off iCloud Photos to disable Apple’s scanning, but that is a policy decision; the capability to reach into a user’s phone now exists, and there is nothing an iPhone user can do to get rid of it.

A far better solution to the “Flickr problem” I started with is to recognize that the proper point of comparison is not the iPhone and Facebook, but rather Facebook and iCloud. One’s device ought be one’s property, with all of the expectations of ownership and privacy that entails; cloud services, meanwhile, are the property of their owners as well, with all of the expectations of societal responsibility and law-abiding which that entails. It’s truly disappointing that Apple got so hung up on its particular vision of privacy that it ended up betraying the fulcrum of user control: being able to trust that your device is truly yours.

Apple’s Mistake

While it’s possible to understand Apple’s motivations behind its decision to enable on-device scanning, the company had a better way to satisfy its societal obligations while preserving…

I'm soooo looking forward to the inevitable 2AM No-knock Raid after sending a diaper rash photo to our kids' pediatrician to triage.

I’m so tired of this. This is not how it works.

Unless that diaper rash photo is part of a national child pornography database, then you have literally nothing to worry about.

RIP Steve & his vision!!!

The downfall has started....

Child Safety Features seem in line with Steve... anyone remember KidSafe?

Apple *is not* scanning your photos.

That’s not how it works.

There’s a one in 1 trillion chance that anyone from Apple will ever see any of your images.

Except that is exactly what they are doing, as part of the normal on-device indexing process. Turning off iCloud sync supposedly stops your phone from sending the results to Apple, but 1) we’re just supposed to take Apple at its word here, though we have no idea what actually goes on in this black box, and 2) just doing this negates one of the biggest selling points of an Apple device: seamless, constant backup of our media to the cloud so it can be shared instantly across all our devices and backed up in case of damage, theft, or loss. That’s one major feature users will need to cripple for the sake of privacy, something Apple literally hangs its hat on publicly.

As for your “1 in a trillion chance” prediction, what exactly are you basing that on? Unless you work for Apple on this project specifically, you just pulled that out of whatever orifice that birthed it. If Amazon or Facebook did something like this would you be so quick to defend with baseless hyperbole?

There are several problems:

1. If I have 60000 images on my phone, there is a 60000 in 1 trillion chance, or 6 in 100 million. So in the US, that is about 20 people with false positives.

2. If you are concerned about having your images caught:

a. They'll just turn iCloud photos off.

b. Apple says that it is based on the CSAM checksum, but a checksum is quite fragile: if a single pixel is changed, the image changes, and so does its checksum. So now a CSAM-detection vendor says "Our artificial intelligence based CSAM detection module is capable of finding and highlighting previously unseen, and hence unknown, material." (https://www.image-analyzer.com/threat-categories/csam-detection/)

The point is that it is self-evident that criminals won't obey the law; similarly, they'll turn off iCloud Photos.
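The arithmetic in point 1 can be checked directly. The sketch assumes, as the post does, an independent 1-in-a-trillion false-match rate per image and 60,000 images per phone, plus an added assumption of roughly 330 million people in the US each holding such a library. Apple's published figure was framed per account rather than per image, so this reproduces the post's reading, not Apple's claim.

```python
import math


def p_any_false_match(images: int, per_image_rate: float) -> float:
    """P(at least one false match) = 1 - (1 - p)^n, computed stably for tiny p."""
    return -math.expm1(images * math.log1p(-per_image_rate))


PER_IMAGE_RATE = 1e-12       # the post's "1 in a trillion", read as per image
IMAGES_PER_PHONE = 60_000    # the post's example library
US_POPULATION = 330_000_000  # rough assumption: one such library per person

p_phone = p_any_false_match(IMAGES_PER_PHONE, PER_IMAGE_RATE)
expected_flags = US_POPULATION * p_phone

print(f"{p_phone:.2e}")         # 6.00e-08, i.e. 6 in 100 million
print(f"{expected_flags:.1f}")  # 19.8, i.e. about 20 people
```

For rates this small, 1 - (1 - p)^n is almost exactly n·p, which is why the post's simple multiplication gives the same answer.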

How long until there is:

1. On device scanning of images?

2. A requirement that iCloud Photos be on?

3. The CSAM database being co-opted or hacked to include images that a government somewhere doesn't like. It will happen, it is just a question of when. Perhaps a guy standing in front of a tank will be inserted? (https://en.wikipedia.org/wiki/Tank_Man)

Think of Snowden, Assange, Twitter, Facebook, etc. for the lengths to which authoritarians around the world will go to stop discussion and dissent, and to monitor those who engage in them.

Child Safety Features seem in line with Steve... anyone remember KidSafe?

Are you seriously comparing website filtering to on-device content scanning and reporting?

As sensitive as this new feature is, I don't think Apple should be opening it up to third-party apps. Big mistake, in my opinion.

So, you have a new function/API in iOS, something like, is_it_CSAM(img). You as some third party app (good or nefarious), give this new function an image and it tells you “true” or “false”, answering the question “does this image match one of the hashes in the CSAM hash database that’s baked into iOS 15?” - you can’t supply anything other than an image, and it only answers yes or no.

Please explain how some nefarious actor, Chinese or otherwise, can do something bad with this. Not hand waving. An actual bad thing they could do. You seem very certain there’s an obvious horrible problem here, so it should be easy to explain.

E.g. in a time not far from now.

Trump Junior, the new president of the United States, signs a few new executive orders demanding that Apple flag photos of oranges 🍊 and hand over details so that a few punishments can be carried out against these state rebels.

I would think that knowing who you are and arresting you would be enough for anyone to think it's bad. (And I'm talking about the U.S., not China, they already could do that just as easily.)

You’re not answering the question. I described how the likely API works and asked how it could be misused, since you were very concerned about its potential for misuse. You said, they could arrest you. They can do that already, and it doesn’t have anything to do with the API.

If it is true and the CSAM image analyzer isn't applied en masse to the library, then (as above):

1. Perps will just crop the image by a row of pixels or use an image editor to change one pixel and the system will be useless.

2. Perps will turn iCloud Photos off.

The people who will be subjected to this are the ones who have nothing to hide and just want convenience. Then it will be useful as a mass surveillance tool where government X can insert items into the CSAM database and find out who among the population has those images on their phone.

Whether that be in China, Egypt, the US, or elsewhere, it makes it trivial to scan everyone's photos for thought-crimes.

If this is a good idea, why shouldn't they use AI to analyze images for child porn? That is the next step. Or perhaps require that cameras and mics on the phone and computer be on continuously so that they can monitor homes for safety. It is for the children.

Every step that empowers this type of surveillance is abuse, and lied about - just ask Clapper and Brennan.

Or you know, just don’t have iCloud photos turned on.

Or be like 99.999% of people, and don’t be worried about features that will not ever apply to you.

Just because you think a feature might not apply to your personal actions, that doesn't mean the feature can't be abused in a negative way towards you.
