No, it’s not based on any evidence, it’s based on your assumptions. You have presented zero proof that the M1 is the same as the A14x (which you can’t do, because there IS no A14x) or a simple revision of the A14. In fact, people have done analyses of both chips, and while they obviously have some similarities, they also have significant differences (such as the M1 including PCIe support, something an A-class processor obviously doesn’t need). You made the claim, so you have to provide the proof. A performance graph (which you’ve never even shared, just alluded to) is not proof; it’s, at best, evidence, and even then correlation and causation are different. Things can have similar performance levels and not be the same.

Let me ask you, if it is exactly the same as everything before, how is it not? What evidence do you have? Mine is based on 10 years of evidence anyone can look at.


Apple's Upcoming AR/VR Headset to Require Connection to iPhone

- Thread starter MacRumors


Unless you have a statement from Apple or evidence showing the M1 was at any point called the A14x, what you have is pure speculation. Declaring it to be true does not make it true.

It’s a rebrand of the X chips and would be called A14X otherwise.

So.....what's it for? What problem does it solve? Or is Apple making a product just because it can?


Google "Augmented / Virtual Reality."

Answers galore.

Hilarious. Mark Facebook spent a billion dollars buying Oculus Rift. Tim Apple probably will end up spending a similar amount on VR or iRealityDistortionField Goggles or whatever it ends up being named. That'll make $2 billion thrown at devices that make a lot of people nauseous. I think I'm going to look for a barf bag maker to buy stock in and skip the goggles/glass/helmet.

100 years ago, people felt nauseous using electric stairs. Do you think you know more about marketing than Google, Apple and FB? Dude, please.

Check out this concept video of what an AR world could look like. Crazy.

So.....what's it for? What problem does it solve? Or is Apple making a product just because it can?

He's clearly still enjoying Apple products.

So...if you said goodbye to Apple, why are you here?

This made me want to NEVER use VR/AR, so if the idea was to turn people off to it, they succeeded wildly. 🙂

Check out this concept video of what an AR world could look like. Crazy.

Ok, that sounds fascinating. But none of these uses seem to be worth the cost and inconvenience of wearing this massively large appendage in front of your eyes. But I guess the early adopters will lead the way, stupid looking or not.

It depends on how they target it really.

Technical fields could benefit, being able to overlay schematics, measurements and info in real time while you're working, for example.

Medicine too, having the glasses feed info to the surgeon.

From a more consumer standpoint there are already apps that do some of this for things like museums, where you can use your phone to get additional information about what you are looking at.

This would be really useful for traveling, especially in countries with different languages. Obviously being able to have signs and such translated to your native language would be incredibly convenient. You could get directions that are displayed in your field of view while you walk around. Points of interest could be displayed. Historical scenes could be overlaid on modern locations. Here in Japan (and lots of other places) there are numerous ruins, it would be fascinating to be able to see models of what they used to look like and be able to switch between those views.

Athletes could use them to display information during their workouts, similar to what the Apple Watch does now but being able to see it without taking their eyes off what they are doing.

In the end, the price point and ease of use of the device will decide which areas make sense for it, but there are many potential options.

Haha… granted. The thing is, it’s inevitable. This AR world will happen (let’s just hope it’s not so crazy). So I might as well be using an Apple product that ensures me quality and privacy (sorry, Facebook). And a nice gain in my AAPL shares.

This made me want to NEVER use VR/AR, so if the idea was to turn people off to it, they succeeded wildly. 🙂

Perhaps they will work this way:

These glasses will have their own limited AR/VR capabilities when on their own.

But. BUT. Whenever you move towards a Mac, the Apple glasses will connect to it, and you'll interact with the Mac through them. Then you pick up the iPhone, and the glasses will now interact with that. Same with Apple TV when you start using it.

In a few words, I think they will work similarly to AirPods.

And it would be great.
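The AirPods-style behaviour guessed at above can be sketched as a simple handoff policy: the headset pairs with whichever in-range Apple device the user touched most recently, and falls back to its limited standalone mode when nothing is in range. This is purely a hypothetical illustration; the `Device` fields and the "most recent interaction wins" rule are assumptions, not anything Apple has described.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Device:
    # HYPOTHETICAL model of a nearby Apple device the headset could pair with.
    name: str
    in_range: bool
    last_interaction: float  # timestamp (seconds) of the user's last input on it


def choose_host(devices: list[Device]) -> Optional[Device]:
    """Pick the in-range device the user used most recently (AirPods-style handoff).

    Returns None when nothing is in range, meaning the headset would stay in
    its limited standalone mode.
    """
    candidates = [d for d in devices if d.in_range]
    if not candidates:
        return None
    return max(candidates, key=lambda d: d.last_interaction)


if __name__ == "__main__":
    devices = [
        Device("Mac", in_range=True, last_interaction=100.0),
        Device("iPhone", in_range=True, last_interaction=250.0),
        Device("Apple TV", in_range=False, last_interaction=300.0),
    ]
    host = choose_host(devices)
    print(host.name if host else "standalone")  # prints "iPhone"
```

The Apple TV was used last but is out of range, so the iPhone wins; a real implementation would presumably weigh signal strength and explicit user choice too.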


"Limiting to just iOS"... They did that many years ago with the Macintosh and lost their privileged market position... just ask IBM and the clones 😉

It's not a conspiracy. If Apple wants to sell more watches, wouldn't it make sense to make it compatible with more OSes instead of limiting it to just iOS? There's a reason it only works in the Apple ecosystem.

It’ll be interesting to see what the killer apps on this headset are. Gaming surely wouldn’t be a focus, based on Apple’s support in the past, so maybe primarily VR videos/experiences?

Agreed on these. I haven’t been too sold on the VR gaming idea at all (I prefer lazy comfy couch mode), but VR concerts have been nice, and the 3D modeling app Medium (now some Adobe thing) was actually well beyond just a gimmick.

Hopefully there's not too much latency with the wireless connection.

There’s one thing for sure though, these chipsets being developed for it will sooner than later be part of the whole ecosystem, maybe enhancing future “clustered” devices.

Pretty much how a bunch of iPhone/iPad stuff made its way to M1 Macs (and back to iPad), all that AirDrop, AirPlay, universal desktop thingy across devices, etc. could be enhanced even more. Got two iMacs next to each other? They could double each other's power.

I hope at least.

I have a PlayStation VR headset. It sometimes got brought out at a party but that's about it. Feel this is going to be very niche and a beta testing ground for proper glasses, which I'm also sceptical of. Using monitors at work all these years has really messed up my eyesight, I'm not sure if having overlays on our vision is a good thing.

My God. It was the fear of something like this that put me off trying LSD.

Check out this concept video of what an AR world could look like. Crazy.

If it looks like that design, I agree, but I don’t think we can assume it will, since basically all we have at this point are speculation, leaks and rumors.

Ok, that sounds fascinating. But none of these uses seem to be worth the cost and inconvenience of wearing this massively large appendage in front of your eyes. But I guess the early adopters will lead the way, stupid looking or not.

My question is: will the limited bandwidth available via Lightning (USB 2.0) be enough, or is USB-C needed (USB 3.1 Gen 2 or above)?

Read the lead article? A significant amount of the custom chip's functionality is devoted to wireless. It may need a Lightning cable to charge (though probably Type-C), but the main operating mode is wireless. No USB data transmission is involved at all.

WiFi 6E is very short range but also relatively high bandwidth and low latency.

" ..., and support for high speed 160MHz channels for multi-gigabit per second speed with superb stability and consistent experiences. .... Clear 6GHz spectrum occupied only by efficient Wi-Fi 6 traffic designed to deliver significant reductions in latency, increasing responsiveness for latency sensitive applications. ... "

https://www.qualcomm.com/wi-fi-6e

You can get about 1.2 Gb/s (per spatial stream) out of a 1024-QAM 160 MHz channel.

https://en.wikipedia.org/wiki/Wi-Fi_6#Rate_set
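The ~1.2 Gb/s figure can be reproduced from the standard 802.11ax (Wi-Fi 6) PHY parameters: a 160 MHz channel carries 1960 data subcarriers, 1024-QAM encodes 10 bits per subcarrier, the top coding rate is 5/6, and each OFDM symbol lasts 12.8 µs plus a 0.8 µs guard interval. This is a back-of-the-envelope sketch of the PHY rate only; real-world throughput is noticeably lower.

```python
from fractions import Fraction


def he_phy_rate_mbps(data_subcarriers: int, bits_per_subcarrier: int,
                     coding_rate: Fraction, guard_interval_us: float,
                     spatial_streams: int = 1) -> float:
    """802.11ax (HE) PHY data rate in Mb/s.

    rate = streams * data_subcarriers * bits_per_subcarrier * coding_rate / symbol_time,
    where the HE OFDM symbol is 12.8 us long plus the guard interval.
    """
    symbol_time_us = 12.8 + guard_interval_us
    bits_per_symbol = spatial_streams * data_subcarriers * bits_per_subcarrier * coding_rate
    # Bits per microsecond is the same as megabits per second.
    return float(bits_per_symbol) / symbol_time_us


# 160 MHz channel, 1024-QAM (10 bits), coding rate 5/6, 0.8 us guard interval, 1 stream.
rate = he_phy_rate_mbps(1960, 10, Fraction(5, 6), 0.8)
print(round(rate))  # prints 1201
```

Halving the channel to 80 MHz (980 data subcarriers) halves the rate to about 600 Mb/s, which is why the 160 MHz channels in the 6 GHz band matter here.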

If they choose to be a short-range spectrum hog, they could use one channel for each screen/lens (left and right) and one channel for each camera feed (left side/right side). That is about 4 Gb/s aggregate (or 2 Gb/s if just primarily uploading video). They would still need some heavyweight compression to make it work (e.g., AV1 4:2:2 Professional), but it's not "impossible" with dedicated, fixed-function logic covering most of the heavy lifting.
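To see why heavyweight compression is still needed even with one ~1.2 Gb/s channel per eye, compare the uncompressed video bitrate against the link. The panel specs below (2000×2000 per eye, 90 Hz, 24 bits per pixel) are HYPOTHETICAL placeholders, not leaked figures; and since real Wi-Fi throughput sits well below the PHY rate, the true ratio would be higher than this minimum.

```python
def raw_video_mbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed video bitrate in Mb/s."""
    return width * height * fps * bits_per_pixel / 1e6


def required_compression(raw_mbps: float, link_mbps: float) -> float:
    """Minimum compression ratio for a raw_mbps stream to fit into link_mbps."""
    return raw_mbps / link_mbps


# HYPOTHETICAL per-eye panel: 2000x2000 pixels at 90 Hz, 8-bit RGB (24 bpp).
per_eye_raw = raw_video_mbps(2000, 2000, 90, 24)
# One 160 MHz Wi-Fi 6 channel per eye at its ~1200 Mb/s PHY rate (optimistic).
ratio = required_compression(per_eye_raw, 1200)
print(f"{per_eye_raw:.0f} Mb/s raw -> needs at least {ratio:.1f}:1 compression")
# prints "8640 Mb/s raw -> needs at least 7.2:1 compression"
```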

Another "workaround" would be a dedicated upscaler on the headset for frames transmitted down to it at lower resolution.
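The upscaler idea trades link bandwidth for local compute: send a smaller frame, then enlarge it on the headset. The simplest possible version is nearest-neighbour upscaling, sketched below on a toy frame; a real dedicated upscaler would use a far better filter, so treat this only as an illustration of the bandwidth arithmetic (a 2× upscale per axis means 4× fewer pixels cross the link).

```python
def upscale_nearest(frame: list[list[int]], factor: int) -> list[list[int]]:
    """Nearest-neighbour upscale: each source pixel becomes a factor x factor block."""
    out = []
    for row in frame:
        # Repeat each pixel horizontally...
        wide = [px for px in row for _ in range(factor)]
        # ...then repeat the widened row vertically (copies, not shared references).
        out.extend([list(wide) for _ in range(factor)])
    return out


# A tiny 2x2 "frame" sent over the link, upscaled 2x on the headset.
sent = [[1, 2],
        [3, 4]]
shown = upscale_nearest(sent, 2)
for row in shown:
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]

pixels_sent = sum(len(r) for r in sent)
pixels_shown = sum(len(r) for r in shown)
print(pixels_shown // pixels_sent)  # prints 4: 4x fewer pixels cross the link
```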

I would be surprised if this works with any iPhone from 2-3+ years ago, or any Macs from back then either. The wireless radio on the base "compute" Apple device will probably need to be at least WiFi 6 class (if not better) for this to work. Two years from now there will be a lot more of those devices out there. I also won't be surprised if this runs down the phone's battery relatively fast when it isn't plugged in. (If the phones don't have the super-compression fixed-function logic, etc., then there is a lot more grunt work in getting things compressed for upload.)

The video compression and radio work is specialized, so much of it can be offloaded from the more general-purpose CPU and/or GPU to fixed-function logic that does it at lower power and faster than generic code could.

In contrast to what some people say, this is primarily not about “pushing the Apple ecosystem”.

Don't own an iPhone (I use Android), so that gift of an Apple Watch I received was worthless and re-gifted. Great idea, Tim! Make products that people can't use unless they're fully invested in Apple's ecosystem. Once I abandoned my iPhone for Android, moving completely away from Apple products became so simple.

As a developer, I know how hard it is - and how many compromises it means!! - to develop cross-platform. Apple doesn’t like compromises. (Me neither, that’s why I am choosing Apple.)

Why not put an M chip inside instead? AR/VR headsets are usually big enough to easily accommodate one, and with Apple's chip design expertise they could make it work easily.

If they can fit the chip that's required to run it in an iPhone, then why not just save time etc. and put the iPhone's chip in the headset? Surely the iPhone is smaller (space-wise) than the AR/VR headset.

The M chip fits inside of an iPad Pro , not an iPhone. Those are substantively different size logic boards and battery sizes.

As for "well, there is a chip in the iPhone it is coupled to"... which iPhone? Does it only work with the iPhone 12 or 13 and up? The radios on the base Apple "compute" device are probably going to have some high minimum requirements (WiFi 6 or 6E).

The phone isn't actually running the two displays in the headset (and the displays are competing for battery capacity too). There is a local GPU doing that. The blending of the camera capture feed and the 'artificial' part could also be done locally. The "stop! don't stumble over that chair" work could be done locally on the device too (you don't need very heavyweight AI for that if you have marked off a play area). Nor does the phone have to worry about eye-focus tracking inference.

The phone can also be plugged in while running the rendering engine; the headset can't. (That is why they go to significant effort to make radio and video en/decompression lower-powered, specialized workloads. The phone may not have those fixed-function augments and would have to use a lot more power to do all of the transmitting and en/decompression.)

If an M-series box has sufficient radio infrastructure to talk to the AR goggles, then it can run a more complicated rendering engine on that box while the power consumption of the goggles stays the same as if they were coupled to an iPhone. (Over time there would be an incentive to buy a better iPhone even if you kept the same goggles.)

It boils down to the question of whether they can get the radio transmission and en/decompression compute costs significantly below what the rendering engine would cost if it ran locally. Radio and en/decompression tasks are specialized and very amenable to fixed-function logic. If the RF transmission distance is 10-12 feet and completely line of sight (no walls or major objects), you don't need relatively high transmission power either (and something like the 6 GHz spectrum will work fine, presuming there are no other 6 GHz spectrum hogs nearby).
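The trade-off described above can be written as a per-frame energy comparison on the headset: offloading rendering pays off when receiving plus decoding a frame costs less than rendering it locally. All energy figures below are HYPOTHETICAL placeholders chosen for illustration, not measurements of any real device.

```python
from dataclasses import dataclass


@dataclass
class FrameCosts:
    # Energy spent ON THE HEADSET per frame, in millijoules (HYPOTHETICAL values).
    local_render_mj: float  # render the frame on the headset's own GPU
    radio_rx_mj: float      # receive the compressed frame over the wireless link
    decode_mj: float        # fixed-function video decode of that frame


def should_offload(c: FrameCosts) -> bool:
    """Offload rendering to the host device if receive + decode is cheaper than local rendering."""
    return c.radio_rx_mj + c.decode_mj < c.local_render_mj


def headset_power_w(energy_mj_per_frame: float, fps: float) -> float:
    """Average power in watts for a given per-frame energy at a given frame rate."""
    return energy_mj_per_frame * fps / 1000.0


costs = FrameCosts(local_render_mj=40.0, radio_rx_mj=10.0, decode_mj=5.0)
print(should_offload(costs))                                      # prints True
print(headset_power_w(costs.radio_rx_mj + costs.decode_mj, 90))   # prints 1.35
```

The point of fixed-function radio and codec blocks is to push `radio_rx_mj + decode_mj` down; a richer host (e.g. an M-series Mac) raises what the remote renderer can do without changing the headset's side of this inequality.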

I guess that explains why there were internal battles in the team between integrated and not integrated.

I think that was as much about wires versus no wires as it was about "integrated" versus "not integrated". One group was looking for heavyweight compute power but didn't want the wires. The other group was more fixated on how to scope down the compute power needed (e.g., use 90% of reality so you don't need to "render" it), using wireless isolation as a constraint.

Apple's AR solution is likely very different in battery size and overall weight, but the scope of its augmented rendering will also be far more limited. A headset going for "no reality, all artificial but looks very real" has a vastly different compute scope.

I have an Oculus 2, and if this rumor is true, I'd pick that over what Apple comes out with every time, just because it's a standalone device that doesn't need to be tied to a separate device.

Except to set it up. 🙂 No phone... doesn't work. It isn't completely standalone.

It's also not standalone when the battery dies. If Apple's device goes 4.5 hours and the Quest 2 goes 2.5 hours... that is a standalone gap (when the latter is back on the charger). If Apple's device only gets about the same battery life at about the same weight, then dropping the onboard compute would not have bought much.
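The battery argument reduces to runtime = capacity / average draw. Using the hypothetical 2.5 h vs 4.5 h runtimes from the post and an ASSUMED 15 Wh battery for both headsets (a placeholder, not a real spec), this sketch works out how much average power dropping the onboard compute would have to save to buy that 2-hour gap.

```python
BATTERY_WH = 15.0  # HYPOTHETICAL battery capacity, assumed equal for both headsets


def avg_draw_w(battery_wh: float, runtime_h: float) -> float:
    """Average power draw (W) that empties battery_wh in runtime_h hours."""
    return battery_wh / runtime_h


def power_saving_needed(battery_wh: float, short_h: float, long_h: float) -> float:
    """Watts that offloading must save to stretch runtime from short_h to long_h."""
    return avg_draw_w(battery_wh, short_h) - avg_draw_w(battery_wh, long_h)


# The post's hypothetical runtimes: 2.5 h standalone vs 4.5 h with compute offloaded.
saving = power_saving_needed(BATTERY_WH, 2.5, 4.5)
print(f"{saving:.2f} W")  # prints "2.67 W"
```

If offloading only saves a fraction of that, the runtimes end up close together and, as the post says, dropping the onboard compute would not have bought much.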

[ Health issues aside of super long exposure of eyes to screens. ]

I don't mind another competitor in the field, but please make it available via a tether to an M1 or higher Mac, or via streaming, as I want higher-fidelity graphics in my VR.

It’ll be interesting to see what the killer apps on this headset are. Gaming surely wouldn’t be a focus, based on Apple’s support in the past, so maybe primarily VR videos/experiences?

The killer app will be the ability to consume AR content on a field of view as wide as your eyes can see, without having to constantly hold up your iPhone.

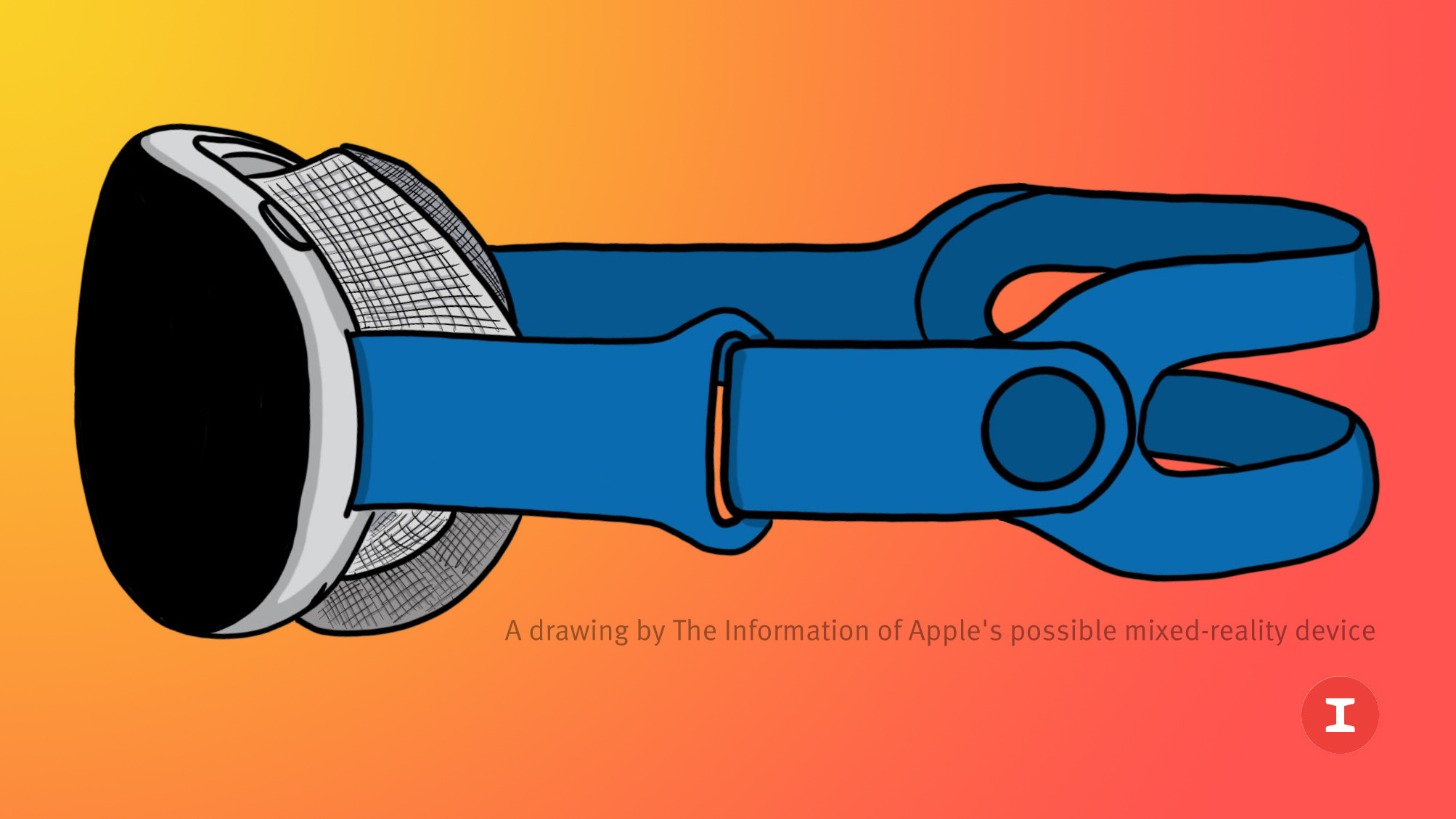

Jony Ive please help. If the final product looks like that, I won’t be wearing it outside.😂😂

The first AR/VR headset that Apple has in development will need to be wirelessly tethered to an iPhone or another Apple device to unlock full functionality, reports The Information.

It will be similar to the WiFi-only version of the Apple Watch, which requires an iPhone connection to work. The headset is meant to wirelessly communicate with another Apple device, which will handle most of the powerful computing.

According to The Information, Apple recently completed work on the 5-nanometer custom chips that are set to be used in the headset, and that's where the connectivity detail comes from.

Apple has completed the key system on a chip (SoC) that will power the headset, along with two additional chips. All three chips have hit the tape-out stage, so work on the physical design has wrapped up and it's now time for trial production.

The chips are not as powerful as those used in Apple's Macs and iOS devices and lack a Neural Engine for AI and machine learning capabilities. The chip is designed to optimize for wireless data transmission, video compression and decompression, and power efficiency for maximum battery life.

Though designed to work with an iOS device, the headset has a CPU and GPU, so there's a chance that it could have a standalone mode with limited functionality.

TSMC is manufacturing the chips that will be used in the headset, and mass production is said to be at least a year away. The first AR/VR headset could be released as soon as 2022, but the launch could also be pushed back if work on the device is not completed in time.

The Information has also heard that work has been completed on the image sensor and display driver for the headset, and TSMC is still working out issues with the image sensor given its large size. TSMC is working to increase the yield that it is getting during trial production.

This AR/VR headset that's in development is separate from a set of augmented reality smart glasses that are in the works. The sleeker, smaller smart glasses will follow the headset and are expected to launch in 2023.

Article Link: Apple's Upcoming AR/VR Headset to Require Connection to iPhone
