Our family already has a code word. Got to, in this day and age.

iOS 17 Will Let You Create a Voice Sounding Like You in Just 15 Minutes

- Thread starter MacRumors

My dad died from ALS and the robot voice speak and spell machine he had was already pretty special for him. This would have been an absolute delight for him. He would have had a lot of fun with it.

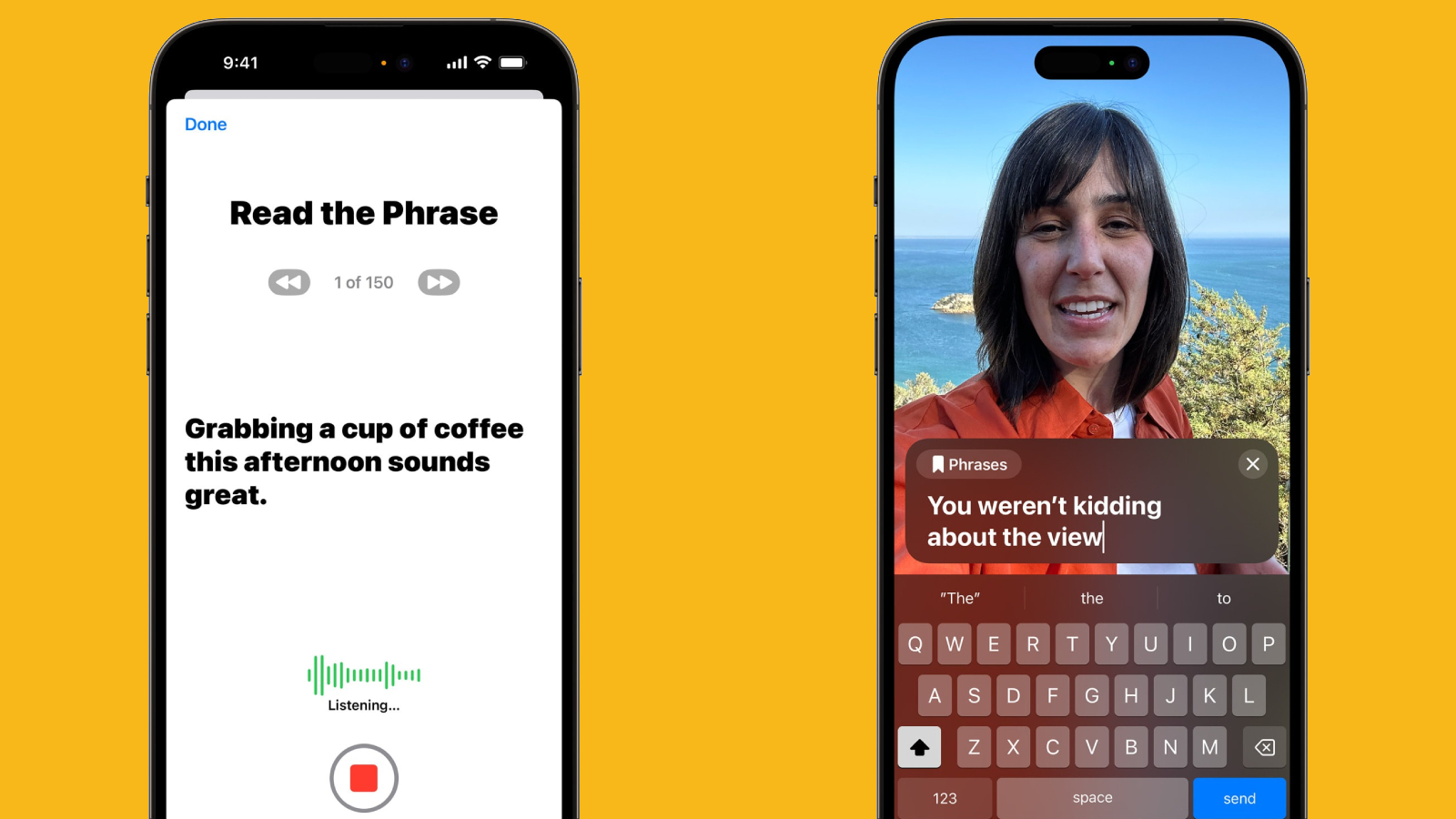

Apple this week previewed new iPhone, iPad, and Mac accessibility features coming later this year. One feature that has received a lot of attention in particular is Personal Voice, which will allow those at risk of losing their ability to speak to "create a voice that sounds like them" for communicating with family, friends, and others.

Those with an iPhone, iPad, or newer Mac will be able to create a Personal Voice by reading a randomized set of text prompts aloud until 15 minutes of audio has been recorded on the device. Apple said the feature will be available in English only at launch, and uses on-device machine learning to ensure privacy and security.

Personal Voice will be integrated with another new accessibility feature called Live Speech, which will let iPhone, iPad, and Mac users type what they want to say to have it be spoken out loud during phone calls, FaceTime calls, and in-person conversations.

Apple said Personal Voice is designed for users at risk of losing their ability to speak, such as those with a recent diagnosis of ALS (amyotrophic lateral sclerosis) or other conditions that can progressively impact speaking ability. Like other accessibility features, however, Personal Voice will be available to all users. The feature will likely be added to the iPhone with iOS 17, which should be unveiled next month and released in September.

"At the end of the day, the most important thing is being able to communicate with friends and family," said Philip Green, who was diagnosed with ALS in 2018 and is a member of the ALS advocacy organization Team Gleason. "If you can tell them you love them, in a voice that sounds like you, it makes all the difference in the world — and being able to create your synthetic voice on your iPhone in just 15 minutes is extraordinary."

Article Link: iOS 17 Will Let You Create a Voice Sounding Like You in Just 15 Minutes

Sure, it can be used for some bad things but I can personally imagine how much joy it would give to people with ALS.

You're coloring my valid criticism as mindless negativity, and that's not good debate.

But people who do happen to be Apple customers will get to use it. Isn't that a win? Must the glass always be half-empty?

I imagine Mom might wonder why you had changed your name and banking details before sending you the 50 quid you asked for.

Scammers will absolutely love this... "Hey Mom I need you send me some money, ASAP Please!"

I am with the person who wants someone who has read the article and understands the tech at play to write out exactly how this could be used for nefarious purposes as described. I mean, provided the EU or some other governing body doesn’t “consumer safety” their way into completely blowing open the SE and forcing Apple to open source everything.

First, bad actors would have to get 15 minutes of you reciting the exact text from a randomized list in the exact order.

Think about that for just a moment. How would they do that? Coercion? Drugs? If they are in a situation to do that, why not just have you read a specific message? How would they get the ML to wash out those stress elements from the sampling? Oh! I know, hire an impersonator, right? Again, if they already have someone or a much easier-to-access tool that sounds “close enough”, why bother with this for nefarious means? Oh! Maybe you can use movie clips to sound like a famous actor. Good luck getting 15 minutes of those specific words in that specific order without some awful background audio.

That's right!

You are absolutely right!

80% of those who commented didn't really read the article (if anything, just the title).

In my opinion a lot of people have assumed that you simply have to talk for 15 minutes and that's it!

If it was 1 minute, maybe someone could easily trick them into doing the training procedure, but 15 minutes means you would really have to tie the person up and make them record under torture... and maybe Face ID will also be active during training to check the speaker in front of the screen (and won't the display tell them what it is for?) 😜

Come on guys, less fantasy...

Anyway, I don't know why it wasn't mentioned in this article, but the voice library created will not be saved to iCloud and the processing will be done exclusively by the NPU, so nothing will be processed server-side!

Moral of the story: The person has to stand in front of the device "physically" and speak random phrases and keywords, so now explain to me how you do Tim Cook's voice? 😜

Apple often builds upcoming new features "in secret" out in the open, both to improve the technology while also spending time to make it socially acceptable before going mainstream.

What I see here is the future of wearable technology. While Extended Reality glasses, Apple Watch and AirPods are going to become a common trinity that make up many of the functions of what people use their smartphone for today, people will continue to use text messaging on iPhones, iPads and Macs well into the future.

But in some cases, voice works better – especially if Apple does XR right and doesn't flood it with distracting visuals like text. Voice is more natural in that context. Having a user's voice read their texts to someone who is following the conversation on AirPods or XR Glasses seems like a major opportunity to make messaging seamless, regardless of which device you're on.

Scenario: Joe is using his iPhone to message Mary who is walking around with her XR glasses and AirPods. Joe texts Mary and she hears his voice rather than reading the texts. She responds with her voice and Joe sees her message as a text, with an option to tap to hear it in her voice (via voice print, not recorded).

The volume of individuals in this thread who failed to read the article or comprehend the purpose of this feature is truly repugnant. It's literally in the first sentence of the post.

I didn’t know US banks use voice authentication over phone calls. Sounds very unreliable.

“my voice is my password. Please authenticate me”….what u have to say to most banking institutions when u call these days in the US

HomePods can’t even properly identify users. And that’s with multiple mics over WiFi.

Then again, I can’t imagine why I would like to manage my bank accounts over a phone like it’s 1992.

Looks like it will be an excellent feature coming to iOS 17 this year.

Recently there has been a lot of discussion how if someone steals your phone after having watched you enter your passcode (i.e. they know your passcode), they can then also take over your iCloud account (and do so in actual practice). If you created a clone of your voice, now they'll also have your voice. Not a very common occurrence for sure, but something to think about.

Anyone saying this can be used for scams should write out all the steps needed for that to work.

Commenter made a self-deprecating joke about his own voice.

Commenter completely ignores the accessibility part.

Everyone commenting about how this can be used by scammers....

You realize there's already 20 different websites that do this and it's ALREADY being used by scammers? These services only cost $5 and will accept any audio source. Your local bank branch probably already has stories of people who were tricked by AI voice scammers already - it's been out that long.

Apple has already considered the scamming aspect which is why they make you read from a card. The technology works fine with any sample of your voice as long as it's a minute or two long. There's zero reason to make you read from a card other than to stop scammers. (if a scammer can pin you down and force you to read a card, they already have other way more effective ways of taking all your money).

I think your fears about this are quite silly, frankly.

Easy-to-use AI fake voice is happening anyway. Even now the hardest thing is the practical way to scam with it, not the creation of a model.

You’ll have to be worried when bots are able to call and have natural conversations in your voice and do this for hundreds of people at the same time. But hey, that’s also surely happening, and it won’t be Apple that enables it.

This technology is already available, see Descript for example. It is used for dubbing videos.

A great use of this technology. Sadly, though, this technology is being used to exploit and scam people as well and probably worse to come. Human nature remains the same: tools can be used for good or ill and usually both. /waxesphilosophical

Whether it will bring anything good or open the gates of hell is the question. It may just show us where technology leads humanity...

You can do that over text messaging already.

Scammers will absolutely love this... "Hey Mom I need you send me some money, ASAP Please!"

And text is arguably easier. If the target responds with a question, the scammer has plenty of time to cook up a response, just because texting is inherently slow/sporadic (“sorry, give me a sec”).

A delayed voice response in a phone call would immediately raise suspicions. Indeed, just the time needed for the scammer to type their response into the voice generator would be suspicious.

Interesting point. Apple could gradually apply an aging algorithm to your synthetic voice - so it gets slower and raspier over the years.

I really don’t get how it’s going to create a voice sounding like me in 15 minutes. You’d think it would make more sense to create a voice sounding like how I sound now.

And, it’s potentially a dangerous paradox. If I try it and it tells me that it CAN’T create my voice… is that because my voice will no longer exist in 15 minutes? Is it because I’m going to die or have my larynx ripped out by a sky leopard? Then, would I be able to take steps to prevent my own demise thus upending the space/time continuum?

Going to enter a bug report imploring them to make it sound like me NOW… just too dangerous otherwise.

Live long enough and you’ll be croaking like a frog (which is better than the other form of croaking).

There are already plenty of voice mimickers out there, whether on the internet or on-device, and I think this is a good thing because of that. Scammers will likely use one of those instead of an on-device system, because Apple devices are really expensive for just making a voice clone.

Apple isn't making a new concept. It's just adapting the concept for people who will soon be unable to speak the way they used to, and I think that's really special. Another win for accessibility :D

Not very useful for people like me who don’t like how they sound… but I guess it’ll appeal to some. Certainly the accessibility part will be great for people with disabilities. This may be helpful, but it’s generally getting to a point with some features where I’m very indifferent to a lot of it. Maybe Apple devices are so good nowadays that I’m not looking forward that much to new devices or features anymore. What more do I need? iPhone 12 Pro is perfectly fine. Maybe their VR thing will be interesting though.

Am I the only one who just wants Majel Barrett-Roddenberry's voice for Siri??

I know that she recorded enough samples prior to her death that we could synthesize her voice.

You won't say that when you get hacked and the scammers ring your bank sounding like you and empty your accounts!

This is a feature with narrow but deep impact on those who need it. If people could not be cynical for five minutes that'd be great.
