Many here aren’t really interested in hearing the truth. Depends on context. I honestly don’t think they did anything nefarious. However, many here are making such accusations.

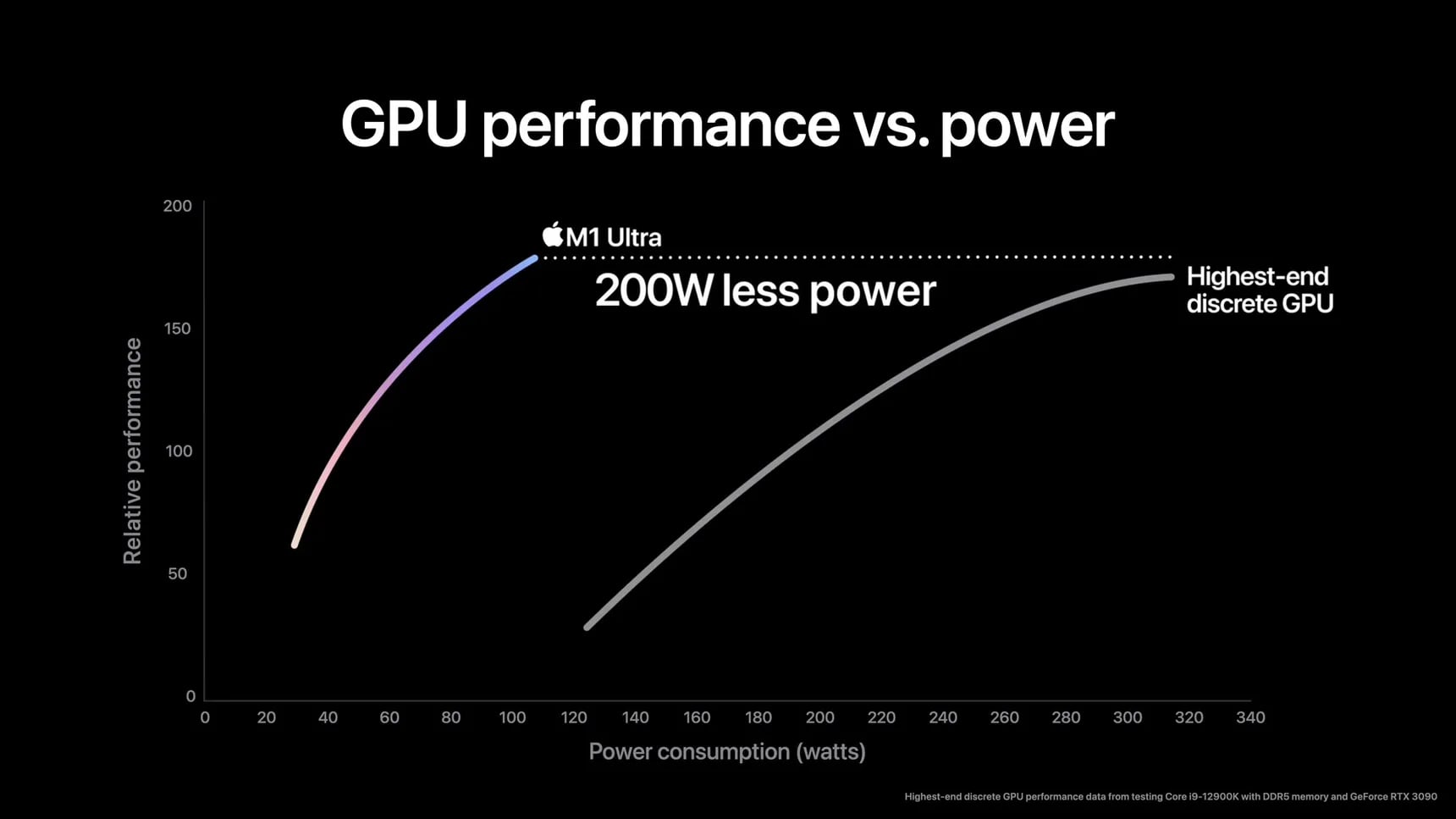

All you have to do is rewatch everything Apple has said when presenting their silicon, from the M1 onward. They pointedly describe Intel CPUs as “power hungry”, “noisy”, and “hot”. Look at the chart The Verge uses. It’s clearly measuring performance per watt, yet somehow they concluded that it was measuring performance at all costs.