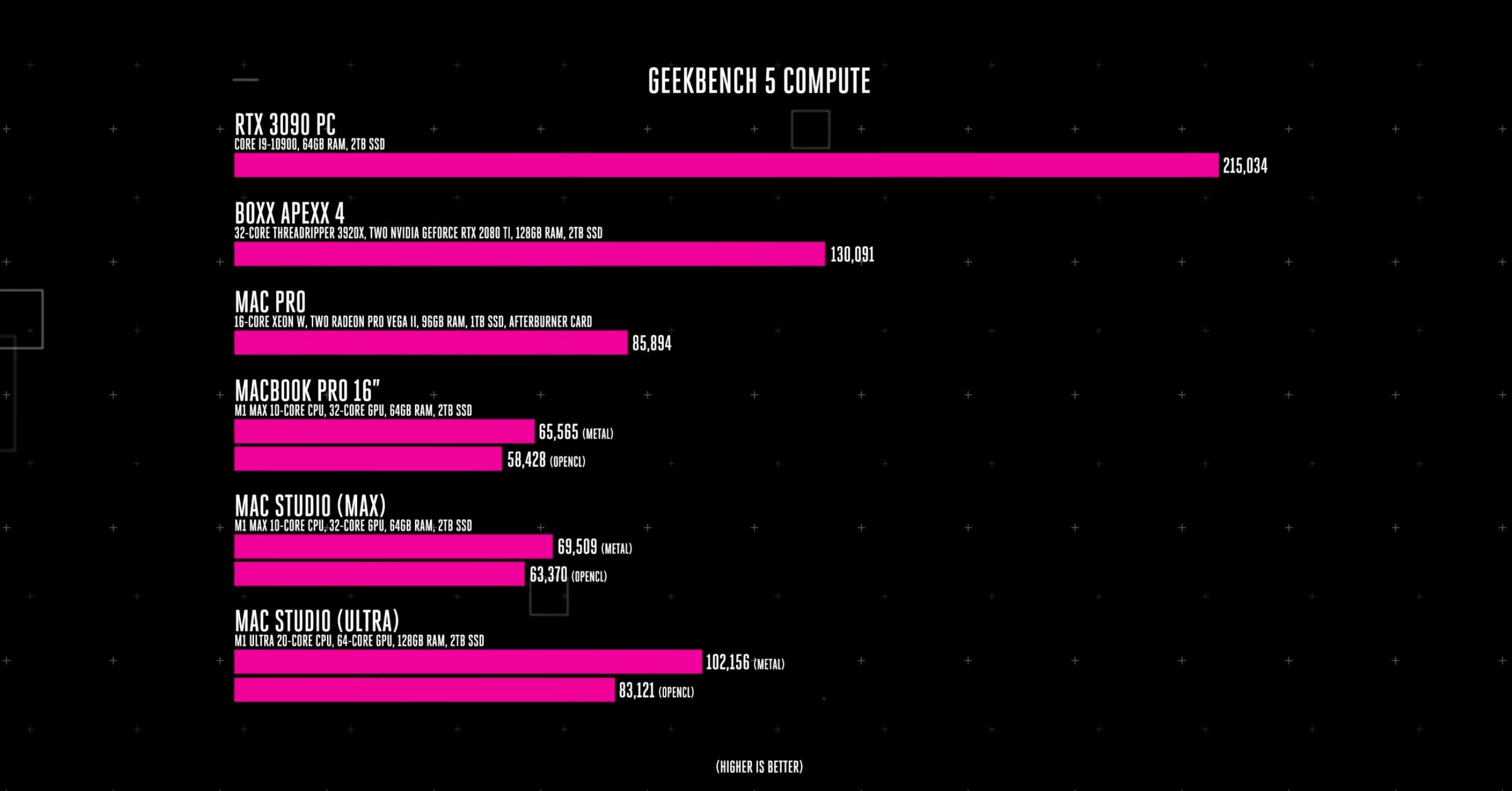

RTX 3090 is on a garbage 8nm Samsung node that was used due to TSMC chip shortages.

The 8nm is an extension of 10nm, per Samsung's own words.

RTX 4000 will be on TSMC 5nm.

If the newest leaks are accurate, Nvidia has absolutely nothing to worry about. The RTX 4000 series will smoke anything that Apple has coming down the pipeline for a while.

This isn't even mentioning that Nvidia has its own chiplet-based GPUs, likely coming with the RTX 5000 series. Those have been on the roadmap for a long time now and are likely well into their design phase.

Nvidia isn't Intel or AMD (in the GPU space). They know how to be agile and respond to market competition.
