I guess that logic works if you assume that Apple's target is people who are already invested in Apple. That's some MacRumors 100 IQ thinking.

I don't think the charts are going to make people who are already predisposed to Apple products think Apple has lost integrity.

M1 Ultra Doesn't Beat Out Nvidia's RTX 3090 GPU Despite Apple's Charts

- Thread starter MacRumors

What a load of bollocks.

An interesting takeaway is that, from a green standpoint, Apple is achieving a lot with far less electricity. Yes, the 3090 can scale a lot higher, but by that logic all you need is the equivalent of two M1 Ultras using 200 watts to roughly compare to a single 3090 using 300 watts. If that were doable, you would have a 40-core CPU and the GPU together, and the comparison becomes even more lopsided when you think about enterprise electricity costs.

Forget about the performance for a sec. A PC with RTX 3090 can do practically anything while a Mac with M1 Ultra can do… Geekbench. 😂

Nvidia also has the 4000 series coming soon using TSMC 5nm like Apple. It’s over. Apple should stick to iOS where they truly have the advantage in software and hardware.

The title of the chart states which comparison they are focused on. The trick is that they didn't show all of the data beyond those power consumption data points. "200 W less power" is the point they were clearly making. This is great click-bait writing. Well done. And also well done to the Apple marketing team for finding the part of the data story where they can talk about what matters to them. I remember them also saying the average home "may save up to $50 a year", and that's a lot of power. That's more than enough for me to respond to anyone who doesn't like me coming to any kind of defense of product marketing for a company that, if you are here, you likely have some respect for. Or you like to be a troll, and that's fair too.

Your comment is the quintessential example of "Limiting Beliefs"! It is not about right now, it is about what is possible in the future! Apple will eventually eclipse NVIDIA! Just wait for the M2 or M3!

The Ultra is a joke. It's not needed; it exists just so they can say it's faster and charge double. The M1 Max is enough for anything Macs can do. The only reason a 3090 is needed is for gaming, and Macs can't game.

They should be. Think about the planet for once, please.

Most people are not concerned with power consumption for non-portable devices.

Have you ever heard of CUDA?

...the only reason 3090 is needed is for gaming...

CUDA Zone - Library of Resources

Matlab GPU computing only works with Nvidia CUDA.

MATLAB GPU Computing Support for NVIDIA CUDA Enabled GPUs

Why buy the Mac Studio now? Why not wait until the M2/M3 Mac Studio? (then actually, the M4/M5 Mac Studio)

This is not their first generation. They've already released several desktop AS chips.

Let me see if I am getting this interpretation right. The competitor, when burning through “unlimited” power, i.e., 500 watts, outperforms the Mac Studio at under 120 watts, and that is hailed as a victory?

How Nvidia could be anything other than concerned or embarrassed by this trend is unclear to me, especially since this is Apple's first generation of desktop SoCs.

Did they test the 48-core or 64-core Ultra option? Something doesn't look right here.

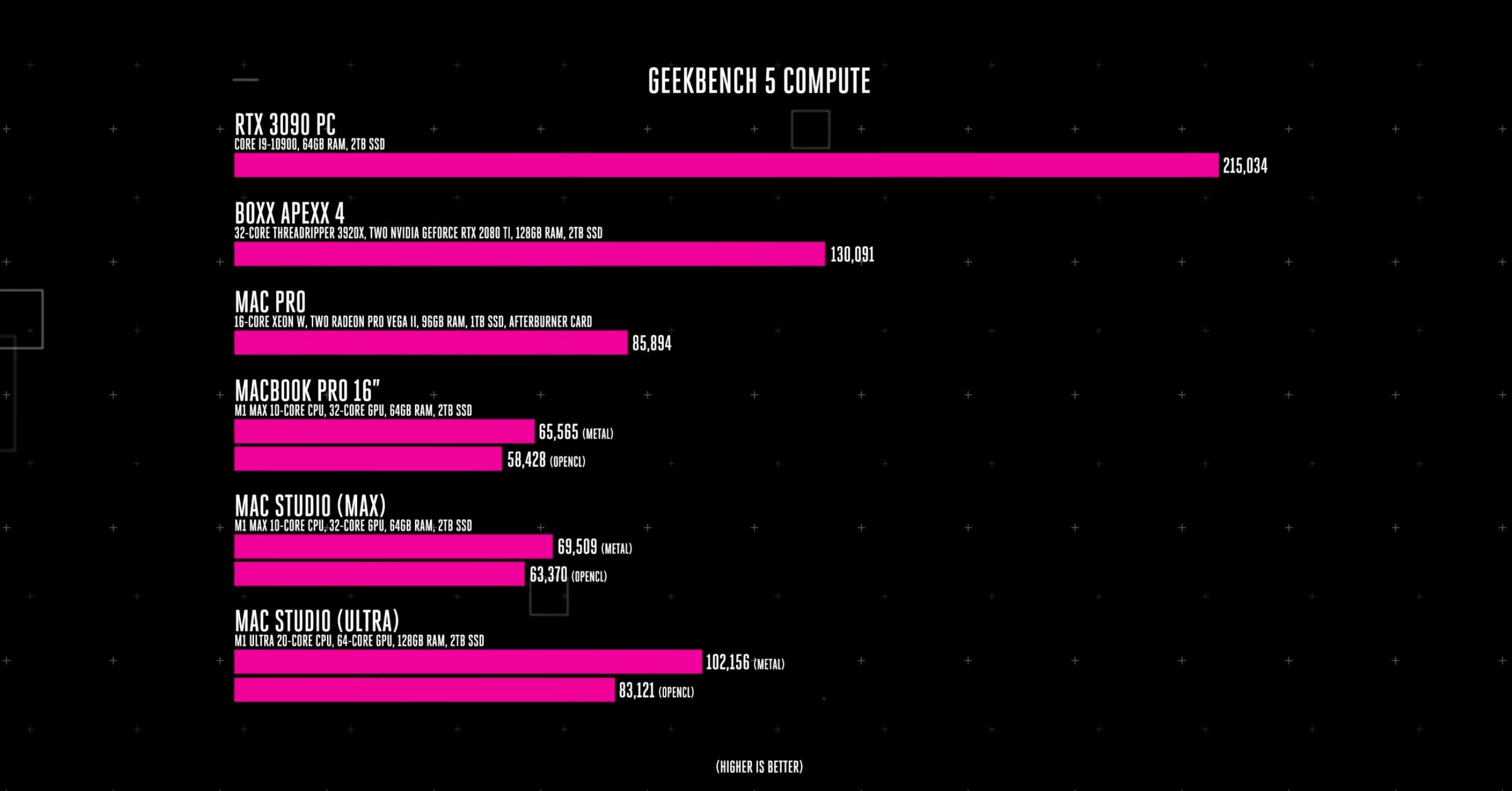

Despite Apple's claims and charts, the new M1 Ultra chip is not able to outperform Nvidia's RTX 3090 in terms of raw GPU performance, according to benchmark testing performed by The Verge.

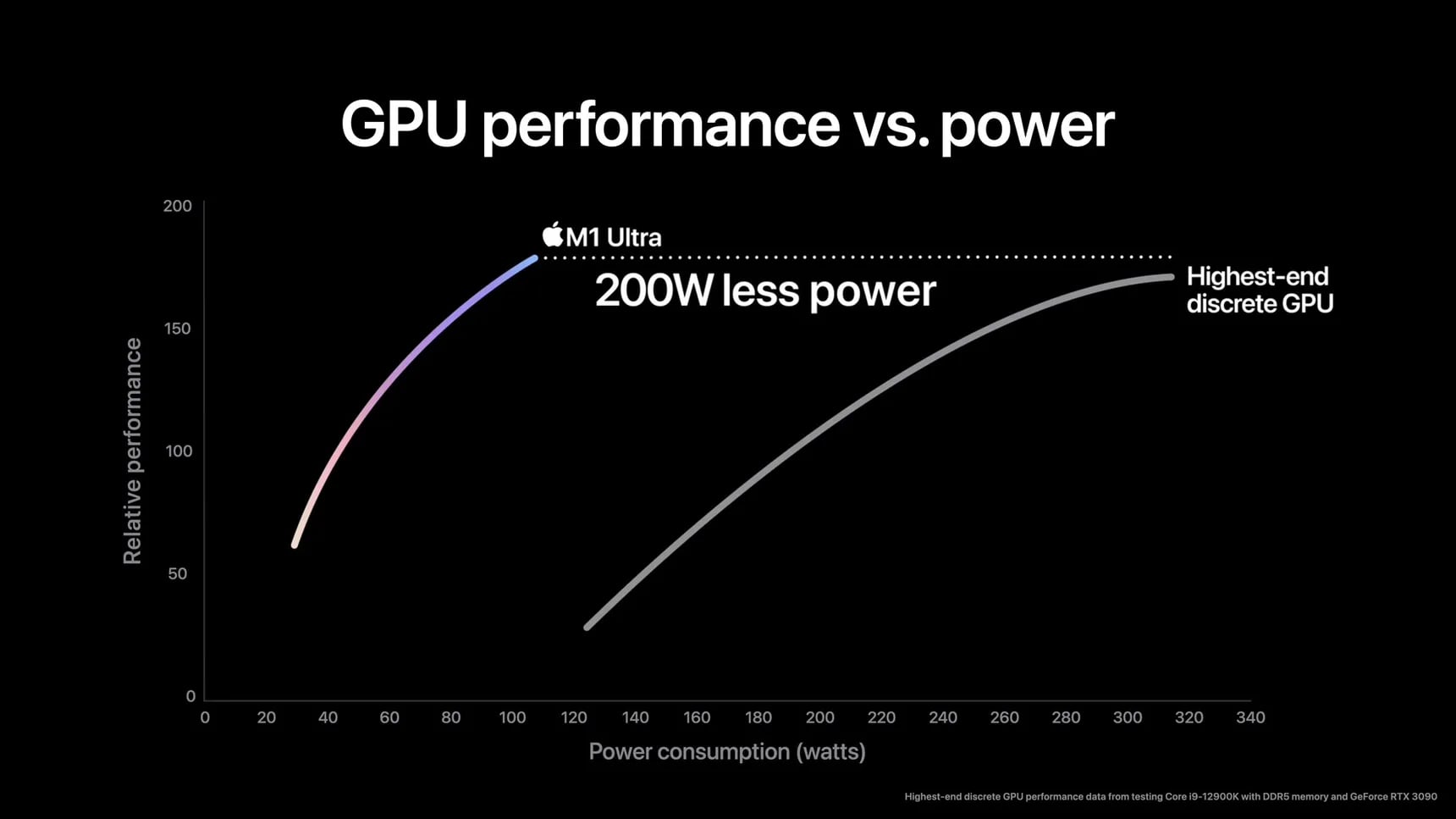

When the M1 Ultra was introduced, Apple shared a chart that had the new chip winning out over the "highest-end discrete GPU" in "relative performance," without details on what tests were run to achieve those results. Apple showed the M1 Ultra beating the RTX 3090 at a certain power level, but Apple isn't sharing the whole picture with its limited graphic.

The Verge decided to pit the M1 Ultra against the Nvidia RTX 3090 using Geekbench 5 graphics tests, and unsurprisingly, it cannot match Nvidia's chip when that chip is run at full power. The Mac Studio beat out the 16-core Mac Pro, but performance was about half that of the RTX 3090.

The M1 Ultra is otherwise impressive, and it is unclear why Apple focused on this particular benchmark, which is somewhat misleading to customers because it does not take into account the full power range of Nvidia's chip.

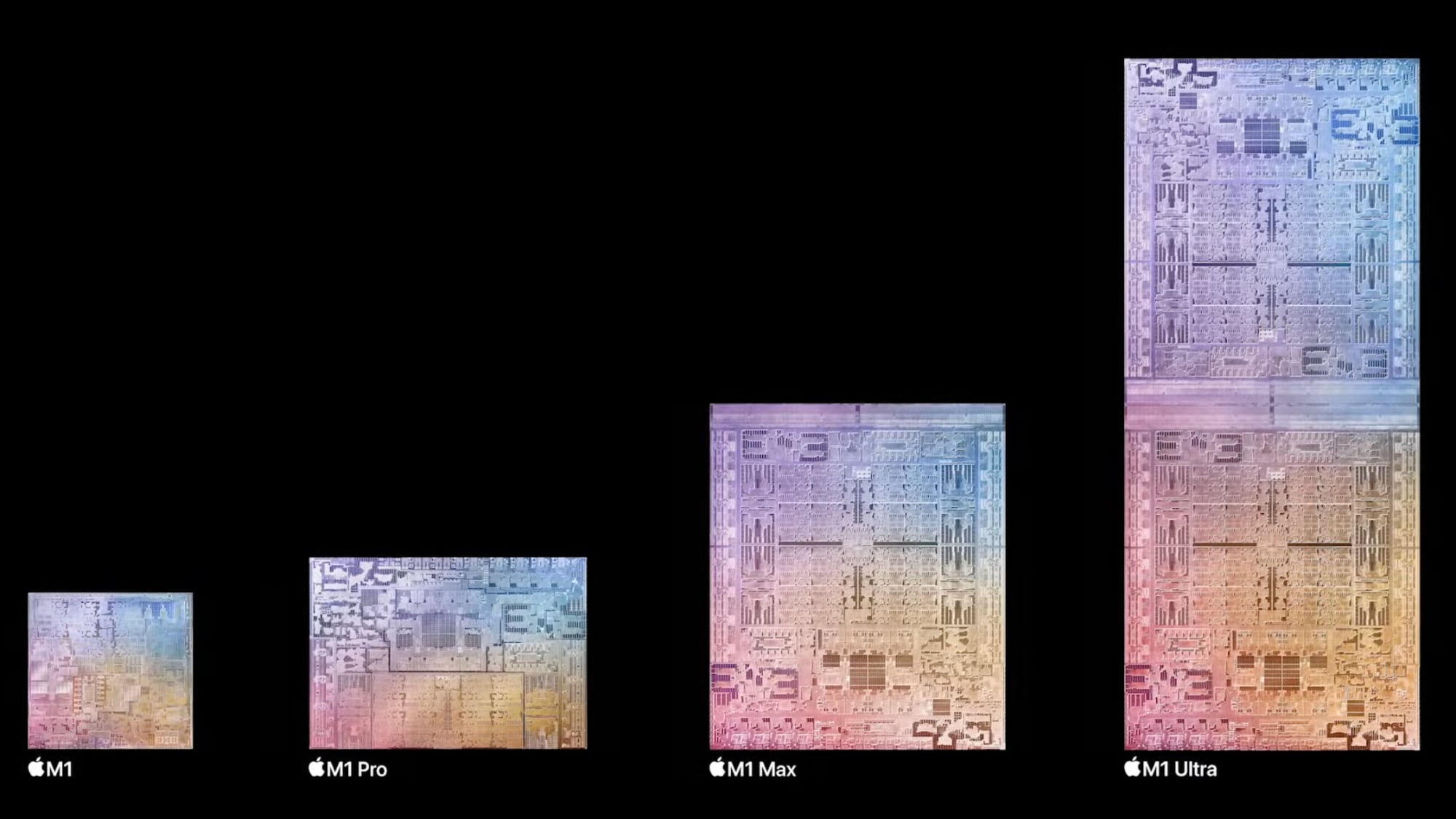

Apple's M1 Ultra is essentially two M1 Max chips connected together, and as The Verge highlighted in its full Mac Studio review, Apple has managed to successfully get double the M1 Max performance out of the M1 Ultra, which is a notable feat that other chip makers cannot match.

Article Link: M1 Ultra Doesn't Beat Out Nvidia's RTX 3090 GPU Despite Apple's Charts

As for the benchmarks, it'll be interesting to see real-world use tests. The benefit of Apple's SoC is the ability to optimise macOS and the rest of the hardware together. A PC built like Frankenstein, by comparison, will never be able to be optimised in this way, and that is exactly what gives the Mac better performance than a PC with ‘better specs’.

Even if it did beat it, Apple isn't compelling the gaming developers at all.

They have decided that melting and/or cooling chips isn't the business they want to be in. So I don't really know why they bother.

The moment they compel triple-A developers to bring new games to Mac in lock-step with PC/console releases, these comparisons will really matter. Until then, Apple can keep saying "we have the fastest car ever that never leaves the garage".

That anyone could have taken that chart seriously, what with a y-axis specifying a nebulous variable of "relative performance", is beyond me. Kudos to Apple's marketing department; anyone who thought that graph showed anything relevant has probably purchased a bridge or two.

It’s relatively relevant!

OK, BUSTED! The graphic above does not show the actual caption used in the Verge article. If you read the article, the 3090 is compared to the M1 Ultra running OpenCL (deprecated on the Mac for years). It's not surprising at all that software that is obsolete on Macs would not perform as well as it does on the Nvidia card. It's also not surprising that people at the Verge couldn't figure that out. It's a bit amazing that the author here did not point that out.

If Apple isn't in the business of cooling chips, then why the heck does the Mac Studio dedicate a substantial portion of volume to air movement?

The graph is actually showing that at 100 watts, the M1 Ultra is more powerful than the 3090 at 320 watts.

I suppose it is possible that in the chart displayed in this article, Apple is saying an M1 Ultra at ~100 watts matches a 3090 at ~300 watts. However, pushing the 3090 to its near-500 W maximum would allow it to pull significantly ahead, as the benchmarks run by The Verge showed.
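The two readings of the chart are not really in conflict, and a little arithmetic shows why. Below is a minimal Python sketch, assuming only the rough figures quoted in this thread and article: equal performance from the M1 Ultra at ~100 W and the RTX 3090 at ~300 W on Apple's chart, and the 3090 scoring about twice the Ultra at full power in The Verge's Geekbench test. The helpers `perf_per_watt` and `efficiency_advantage` are hypothetical names, and performance is normalized to 1.0 because Apple's chart has no absolute units.

```python
def perf_per_watt(relative_perf: float, watts: float) -> float:
    """Relative performance delivered per watt of power draw."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return relative_perf / watts


def efficiency_advantage(perf_a: float, watts_a: float,
                         perf_b: float, watts_b: float) -> float:
    """How many times more performance per watt chip A delivers than chip B."""
    return perf_per_watt(perf_a, watts_a) / perf_per_watt(perf_b, watts_b)


# Apple's chart as read in this thread (assumed figures): the same relative
# performance (1.0) from the M1 Ultra at ~100 W and the RTX 3090 at ~300 W.
chart_advantage = efficiency_advantage(1.0, 100, 1.0, 300)

# The Verge's Geekbench result as summarized in the article: at full power the
# 3090 scores roughly twice the M1 Ultra (Ultra normalized to 1.0).
ultra_score = 1.0
rtx_full_power_score = 2.0
absolute_lead = rtx_full_power_score / ultra_score

print(f"M1 Ultra perf/W advantage at matched performance: {chart_advantage:.1f}x")
print(f"RTX 3090 absolute lead at full power: {absolute_lead:.1f}x")
```

Under those assumptions, the Ultra delivers roughly three times the performance per watt at that point on the curve, while the 3090 still leads by roughly 2x in absolute terms once it is allowed to draw its full power.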

Regardless of what Apple actually meant with this graph, I think one thing is for sure:

Apple needs to step things up on the GPU side… On the CPU side, Apple silicon has been groundbreaking and has sent both Intel and AMD back to the drawing board. But on the GPU side, while the M1 Max and Ultra are certainly good, they don't come anywhere close to doing to Nvidia what Apple has done to Intel.

So while Apple was a generation ahead on the CPU end, it seems to be a generation, or at least half a generation, behind on the GPU end. Hopefully this can change with the M2 or, more likely, the M3 generation.

they were testing it wrong LOL

what really matters is real-life performance and I'm sure the ultra will do just fine for 99% of those who buy it.

And no, you don't buy a Mac to play high-end games ...

I'm glad you said "ARM" is the future rather than Apple Silicon.

I doubt Intel will. Nvidia most likely, but it all depends on how they all adapt, as x86 seems to be reaching the end of the road here.

ARM is the future, so let's find out in a few years' time.

Looking at iPhone and iPad vs competition I think Apple will overtake Nvidia eventually.

One thing is sure - the future is exciting for us customers.

People who use graphics cards like Nvidia RTX 3090 don't really care about performance per watt. They care about performance, period.

Apple Silicon shines when performance per watt matters, e.g., mobile devices & laptops, but it's not significantly better on a desktop all things considered, at least for now.

A dGPU still has its advantages. Apple Silicon is still one to two years behind in graphics performance compared to the highest end dGPU. This MR news article shows that the surge in performance in Apple Silicon is starting to level off...well, unless Apple has something up its sleeves in M2 that we don't know about.

Yah, yah, yah. Also, if you look at the Verge article, the comparison is to OpenCL (you know, the software that has been deprecated for years on Macs; I'm sure it is optimized though, LOL). So a dumb benchmark comparison means every conclusion drawn from it is meaningless. It would be interesting to see a genuinely valid comparison using software optimized for each platform.

Actually, the point, whether valid or not, is the performance you can get UP TO a certain amount of power usage. In that range the Ultra chip has much more performance. So it's more like saying that from 0 to 40 an electric car will beat a McLaren, but above 40 the McLaren obviously blows it away. That doesn't mean an electric car isn't better in "speed-restricted" uses.

So it is misleading but not inaccurate. It's even titled "Performance vs Power."

I wanted to stop reading when I saw it came from The Verge.

Spot-on. People here are so quick to hurl a "gotcha" before even reading and soaking in what's being presented and compared. Another bucket-of-chum-in-the-water-fest.

Based on a series of benchmarks I did comparing the M1 Max 32-core to a Dell workstation laptop with an NVIDIA RTX A2000 in it, this is not the least bit surprising.

The performance-per-watt is very impressive, and against dedicated laptop GPUs the raw performance is also very impressive for nearly every reasonably optimized task (in some 3D gaming-type benchmarks the M1 Max's 32 cores were over three times as fast). But there was no way doubling the GPU cores was going to hold up in absolute terms against a multi-hundred-watt desktop GPU, much less the two dual-GPU cards you can order a Mac Pro with today. (Heck, each Radeon Pro W6800X Duo has 64GB of GDDR6, so the GPUs alone have as much memory as a fully loaded M1 Ultra shares between its GPU and CPU.)

Which is quite reasonably why Apple is still selling the Mac Pro with its monstrous dedicated $12,000 GPUs. Some people have an actual real-world use for that, just like they have an actual real-world use for 1.5TB of RAM, and are quite willing to have their GPUs alone draw 800W to get there. If you need it, at least for the time being, you can most certainly buy it from Apple.

What's disappointing to me is that, at least in Geekbench, the Ultra does not appear to scale linearly from the Max; it has double the GPU cores but not even close to double the performance. Not sure what the cause is, since the CPU seems to scale pretty linearly.

That said, Apple has already managed to equal the best workstation-grade CPUs from Intel. The fact that their GPUs are already on the map in the first generation, with all the thermal headroom in the world available, certainly makes for some interesting speculation about what might be yet to come at the extreme high end.

Just playing with back-of-envelope numbers, if Apple was able to linearly scale GPU performance (which, again, this test indicates isn't the case with the Ultra), a hypothetical M1 Trio (or whatever) with 96 GPU cores would match the RTX 3090, with the GPU drawing under 200W versus the dedicated card's 350W. It still wouldn't compete directly with dual Radeon Pro W6900X or Radeon Pro W6800X Duos, but even more extreme GPUs theoretically could.
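The back-of-envelope reasoning above, and the scaling complaint before it, can be written out as a short Python sketch. It is an illustration under stated assumptions, not a benchmark: the 32-core M1 Max GPU score is normalized to 1.0 as a placeholder, `linear_projection` and `scaling_efficiency` are hypothetical helper names, and the 1.5 Ultra score used below is an illustrative value, not a measured result.

```python
def linear_projection(base_score: float, base_cores: int, target_cores: int) -> float:
    """Score expected if GPU performance scaled perfectly with core count."""
    return base_score * target_cores / base_cores


def scaling_efficiency(base_score: float, base_cores: int,
                       scaled_score: float, scaled_cores: int) -> float:
    """Actual speedup divided by the core-count ratio (1.0 means perfectly linear)."""
    speedup = scaled_score / base_score
    core_ratio = scaled_cores / base_cores
    return speedup / core_ratio


# Placeholder baseline: the 32-core M1 Max GPU score normalized to 1.0.
M1_MAX_SCORE = 1.0
M1_MAX_CORES = 32

# Hypothetical 96-core "M1 Trio" under perfect linear scaling.
trio_projection = linear_projection(M1_MAX_SCORE, M1_MAX_CORES, 96)

# Hypothetical 64-core Ultra score of 1.5x the Max (illustrative, not measured).
ultra_efficiency = scaling_efficiency(M1_MAX_SCORE, M1_MAX_CORES, 1.5, 64)

print(f"96-core linear projection: {trio_projection:.1f}x the M1 Max")
print(f"Scaling efficiency at 1.5x with 64 cores: {ultra_efficiency:.0%}")
```

A scaling efficiency of 1.0 would mean that doubling the cores doubled the score; anything lower is the kind of shortfall the Geekbench results suggest for the Ultra.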

I don't care about games or running a system that adds to climate change (seriously, why do gamers get a free pass on this?)

Does it render videos faster? That's the main benchmark I care about.

There are other things going on in the world besides games. Personally, I've never played and never want to waste my time playing, but for those of you for whom it's a hobby, great. But I have never once heard anyone say they wanted to buy a Mac to play games. Seriously, no one ever.

It's not just OpenCL they're comparing.

Of course the problem is that you can't use Metal on a non AS package.
