From somebody who runs Parallels to get access to multiple native copies of Wintendo (to check, e.g., how my websites look in crappy old MSIE): that needs to get solved before I can move to ARM-based machines, and not just for W10 or Linux, but for everything that can run on a typical non-Apple x86 machine.

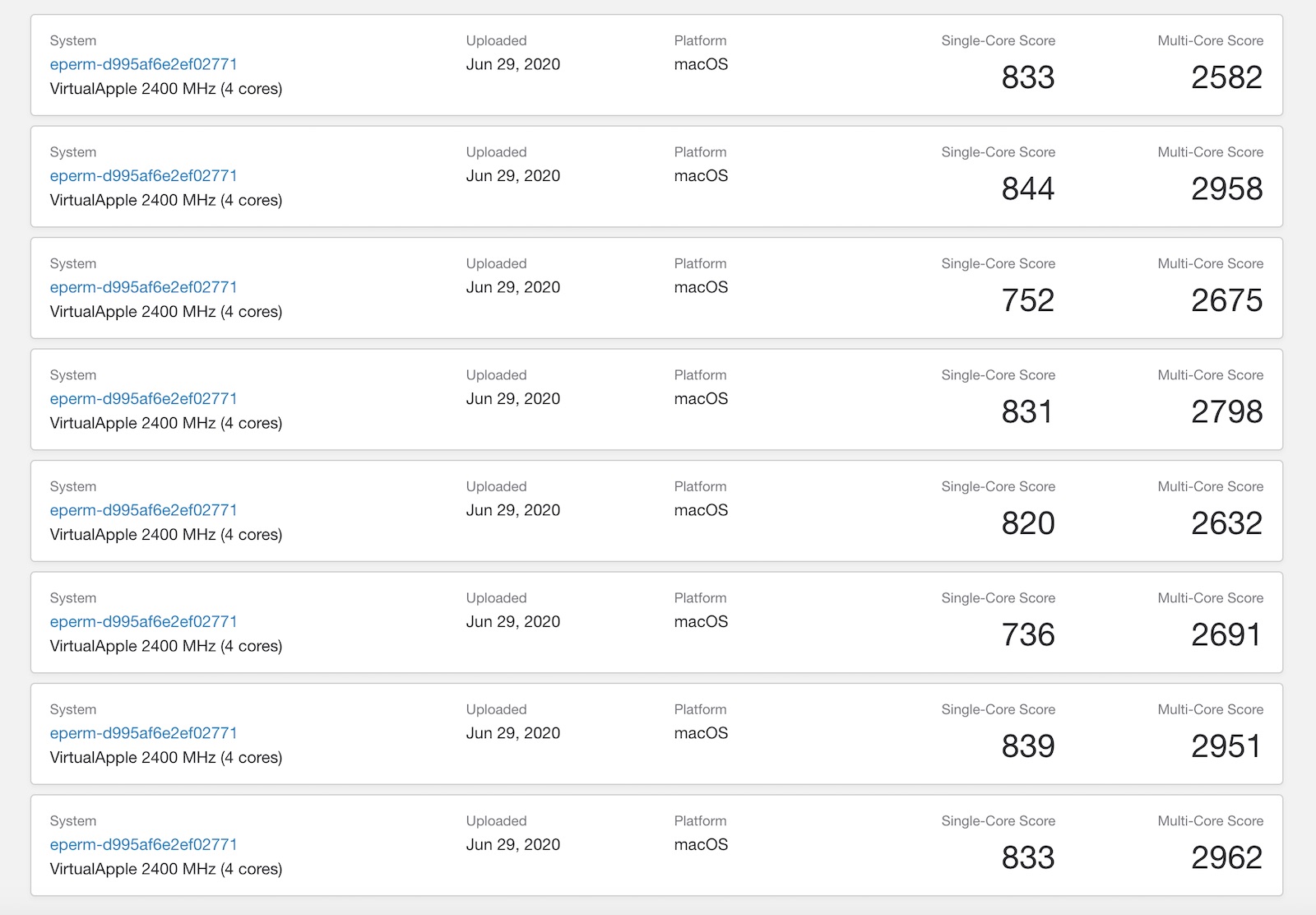

TBH, if even half this kind of performance is possible once optimised virtualisation software comes out, then I think most people who use virtual machines will be just fine. Ask yourself this: how much performance do you actually *need* in your virtual machines? You say you use them to check your websites in different versions of Windows. Do you really need your virtual machine to run with the performance of a top-end, latest-gen i7 processor? Websites aren't exactly processor-intensive, or at least they (largely) shouldn't be.