

Apple Outlines Security and Privacy of CSAM Detection System in New Document

- Thread starter MacRumors



“Tim, they activated a new device on January 6th, near DC!”

Mass surveillance by left-wing Apple, Inc. Later they may let you know they are searching for pirated songs and movies from their service, and pics of MAGA hats... hehe.

“There’s a hash for that.”

+1. Regarding the purchase of future Apple products, I’m taking a “wait and see” approach. I’ll happily buy the new, large MacBook Pro AND an iPhone 13 Pro Max (if there is such a thing) when Apple backtracks and re-embraces its 2019 CES motto: “What happens on your iPhone STAYS on your iPhone.”

I'm currently on my 4th iPhone (had a 3G, 4S, 6 and X) and was planning to pick up a 13 in the fall. But this is making me think twice. As the owner of a device I paid a lot of money for, I decide what spyware runs (or doesn't run) on it. End of story.

Again I ask.

If the only data available for the iOS device to scan is a 1-1 duplicate of the iCloud data, what is the slippery slope, when governments have been demanding cloud data from tech companies, and tech companies have been providing it, since 2005, INCLUDING Apple?

If the CSAM scan occurs on device and the data available to scan is a 1-1 duplicate of iCloud data, what is the difference between scanning on servers and scanning on the iOS device? To the people saying governments will want more information: what NEW information is on the device that isn't already available to governments? And why hasn't that extra information been requested since 2005, when cloud drives, including iCloud, have been handed over to governments all along?

The outrage comes down to two things:

1. Slippery slope. A slippery slope toward what, exactly, if the data is a duplicate of cloud data?
2. Governments will ask to scan for more than CSAM. OK, so why wasn't this raised with similar fervor before August 5, when Apple HAS been giving all of its users' iCloud data to governments all over the world whenever they demand it? And I mean ALL data.

To the users saying this will be exploited: OK, if the data available to scan is a duplicate of iCloud data, why has this exploitation been missing from cloud scanning since 2005?

I mean... think logically. The outrage is illogical.

If you are concerned that NOTHING should be scanned, cloud or device, that's a legitimate and valid opinion. But these slippery-slope arguments and concerns seem wholly manufactured when you realize the only data available to scan on the device is a duplicate of cloud data. Nothing more and nothing less.


The software is capable of scanning more than what is synced with iCloud Photos, and it is capable of sending data whether iCloud is enabled or not. Just because that function isn’t active at launch doesn’t mean it won’t be used.

What exactly is the difference if the scan occurs on device, when the data being scanned is a 1-1 sync with iCloud Photos? Governments have requested data from iCloud, Google Drive, OneDrive, Amazon Drive... since 2005. Where was this slippery-slope theory about cloud data before August 5, 2021, exactly? I’m asking because the only data the device can scan is 1-1 iCloud-synced data.

In simpler terms: what would governments want to scan on the phone that they can't already scan in the cloud? What is different?

Remember when the FBI wanted Apple to create backdoor code, and Apple said it didn’t have the ability even if it wanted to? Now Apple has code that can scan phones remotely, and all it takes is a slight tweak to make it scan for whatever is requested.

You need to convince me and others that Apple would be willing to completely abandon the China market when it is ordered to scan for other items.


No, it's not capable of scanning more, because the non-cloud data is locked in the Secure Enclave, which even Apple does not have the keys to. The only available data is iCloud device data, which is a duplicate of the cloud data on iCloud servers. CSAM scanning cannot scan non-cloud data on user devices, even if Apple wanted it to, unless they remove the Secure Enclave chip. That is the entire purpose of the chip: to secure data that is not cloud-related.

I see where you are coming from with this, and would agree if the only thing this new ‘feature’ did was scan my images and deny the upload to iCloud if it detected something it thought was CSAM, and that was it. Of course, that doesn’t involve law enforcement, sharing data with government agencies, or really anyone other than me, so it doesn’t really invade my privacy. Unfortunately, it doesn’t work like that, and now that the ability to scan users' phones is out there, governments can and will make requests, and Apple will need to comply.

You're not really addressing what I said.

Your earlier argument was that this new CSAM detection gives Apple the ability to upload data if Apple secretly updates its software to do so. This new feature is no worse than Apple's existing capability to back up your entire device: Apple could just as well secretly update its software to force everyone to back up to the cloud.

The code to scan for CSAM photos is built into the OS itself, the very same OS that reads and writes the files you say it cannot access. The Secure Enclave doesn’t provide security against the signed-in user in the OS. The OS can read that data.

For someone who says everyone else doesn't understand how this works, you really don't.

They generate hashes for every image on your device. If you then enable iCloud Photos and upload photos that fail the hash check, it uploads not only the photo but additional data. Fail somewhere around 30 of the hash checks and an Apple employee looks at the photos. If they think it's kiddie porn, you go to jail.

This is different from having your photos scanned only in the cloud.

The code to scan for CSAM photos is built into the OS itself, the very same OS that reads and writes the files you say it cannot access. The Secure Enclave doesn’t provide security against the signed-in user in the OS. The OS can read that data.

The code that makes sure even Apple doesn't read your emails is also built into the OS. This is amateur tech talk: if you think the code in the OS can scan non-iCloud data, then that means Apple can read your private data. 😆 I mean... c'mon, man.

The OS also stores your fingerprints and Face ID data. Do you think Apple has access to them because that's built into the OS? This is hilarious.

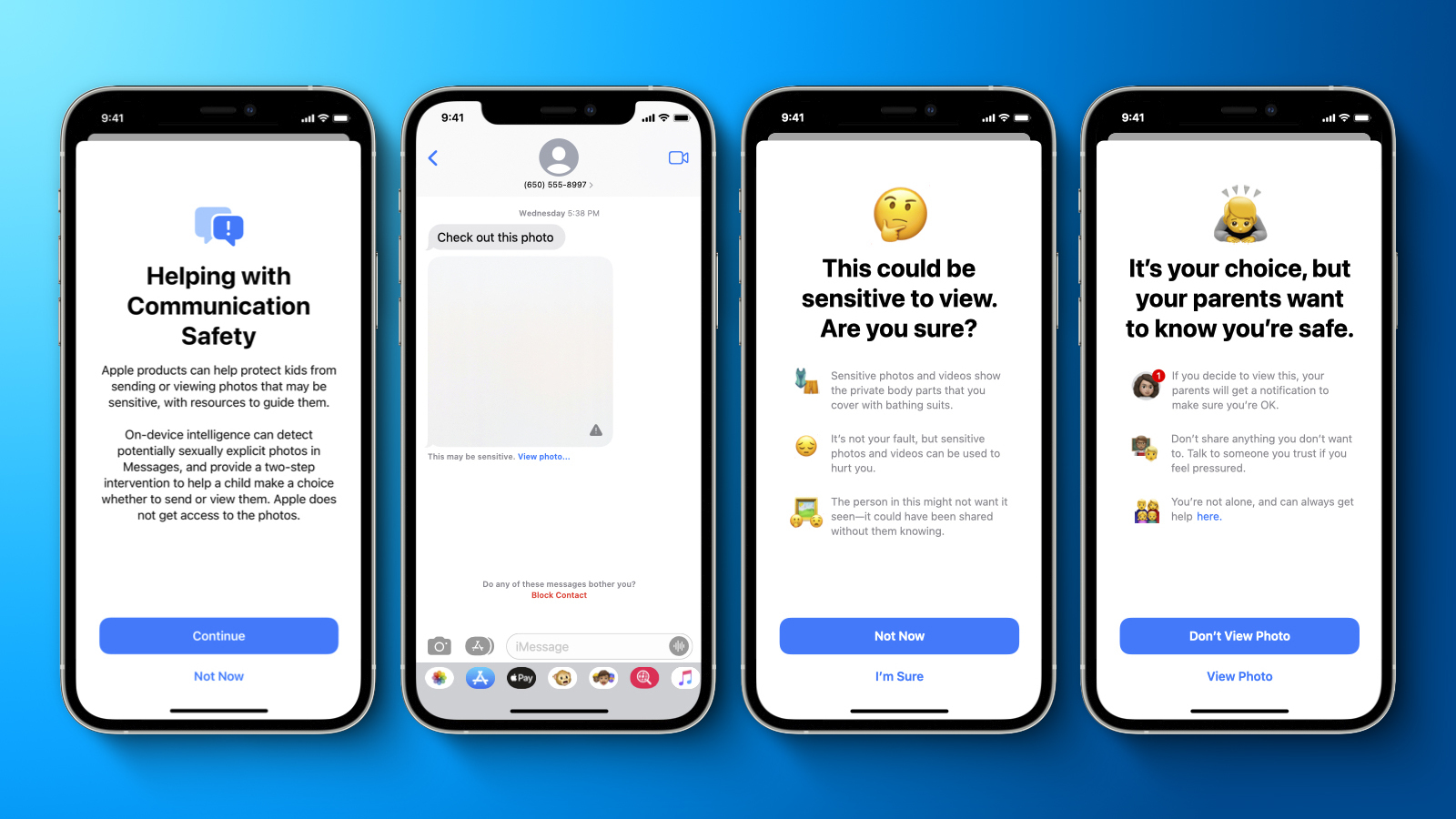

Hey MacRumors... you showed pictures of the new "I'm going to tell your parents you're sending nudes" feature, and then the article was all about the CSAM hash check.

Apple today shared a document that provides a more detailed overview of the child safety features that it first announced last week, including design principles, security and privacy requirements, and threat model considerations.

Apple's plan to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos has been particularly controversial and has prompted concerns from some security researchers, the non-profit Electronic Frontier Foundation, and others about the system potentially being abused by governments as a form of mass surveillance.

The document aims to address these concerns and reiterates some details that surfaced earlier in an interview with Apple's software engineering chief Craig Federighi, including that Apple expects to set an initial match threshold of 30 known CSAM images before an iCloud account is flagged for manual review by the company.
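To make the threshold rule concrete, here is a toy sketch in Python. It assumes plain exact-match lookup of hypothetical hash strings; Apple's actual design relies on perceptual hashing and cryptographic protocols that this sketch does not model at all. `MATCH_THRESHOLD`, `count_matches`, and `should_flag_for_review` are illustrative names, not Apple's; only the value 30 comes from the article.

```python
from __future__ import annotations

# Toy model only: plain set membership stands in for Apple's perceptual
# hashing and cryptographic protocol, neither of which is modeled here.
MATCH_THRESHOLD = 30  # initial threshold Apple said it expects to use


def count_matches(image_hashes: list[str], known_hashes: set[str]) -> int:
    """Count uploaded image hashes that appear in the known-hash set."""
    return sum(1 for h in image_hashes if h in known_hashes)


def should_flag_for_review(
    image_hashes: list[str],
    known_hashes: set[str],
    threshold: int = MATCH_THRESHOLD,
) -> bool:
    """Flag an account for manual review only once matches reach the threshold."""
    return count_matches(image_hashes, known_hashes) >= threshold


if __name__ == "__main__":
    known = {f"known-{i}" for i in range(100)}  # hypothetical hash database
    uploads = [f"known-{i}" for i in range(29)] + ["holiday-photo"]
    print(should_flag_for_review(uploads, known))  # 29 matches -> False
    uploads.append("known-99")
    print(should_flag_for_review(uploads, known))  # 30 matches -> True
```

In this toy, a handful of matches never triggers a review on its own; only the total count relative to `MATCH_THRESHOLD` matters.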

Apple also said that the on-device database of known CSAM images contains only entries that were independently submitted by two or more child safety organizations operating in separate sovereign jurisdictions and not under the control of the same government.

Apple added that it will publish a support document on its website containing a root hash of the encrypted CSAM hash database included with each version of every Apple operating system that supports the feature. Additionally, Apple said users will be able to inspect the root hash of the encrypted database present on their device, and compare it to the expected root hash in the support document. No timeframe was provided for this.
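The two safeguards described above can be sketched in Python under loudly stated assumptions. Each organization's submission is modeled as a hypothetical jurisdiction label plus a set of hash strings, and "separate jurisdictions" is approximated as "two or more distinct labels". The article does not say how the root hash is computed, so `root_hash` here simply assumes SHA-256 over the sorted entries and ignores the database encryption entirely. `build_database`, `root_hash`, and `matches_published` are illustrative names, not Apple's.

```python
from __future__ import annotations

import hashlib
from collections import defaultdict


def build_database(submissions: dict[str, tuple[str, set[str]]]) -> set[str]:
    """Keep only hashes submitted from two or more distinct jurisdictions.

    `submissions` maps a hypothetical organization name to
    (jurisdiction label, set of submitted hashes).
    """
    jurisdictions_per_hash: dict[str, set[str]] = defaultdict(set)
    for jurisdiction, hashes in submissions.values():
        for h in hashes:
            jurisdictions_per_hash[h].add(jurisdiction)
    return {h for h, js in jurisdictions_per_hash.items() if len(js) >= 2}


def root_hash(database: set[str]) -> str:
    """Assumed construction: SHA-256 over sorted, newline-terminated entries."""
    digest = hashlib.sha256()
    for entry in sorted(database):
        digest.update(entry.encode("utf-8") + b"\n")
    return digest.hexdigest()


def matches_published(database: set[str], published_root: str) -> bool:
    """Compare the on-device database's root hash with a published value."""
    return root_hash(database) == published_root.lower()


if __name__ == "__main__":
    subs = {
        "org-a": ("jurisdiction-1", {"h1", "h2", "h3"}),
        "org-b": ("jurisdiction-2", {"h2", "h3", "h4"}),
        "org-c": ("jurisdiction-1", {"h1"}),
    }
    db = build_database(subs)
    print(sorted(db))  # ['h2', 'h3'] (h1 came from only one jurisdiction)
    published = root_hash(db)  # stands in for the value in the support document
    print(matches_published(db, published))  # True
```

Sorting the entries makes the digest independent of set iteration order, so a user and the publisher computing it separately over the same entries get the same value.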

In a memo obtained by Bloomberg's Mark Gurman, Apple said it will have an independent auditor review the system as well. The memo noted that Apple retail employees may be getting questions from customers about the child safety features and linked to a FAQ that Apple shared earlier this week as a resource the employees can use to address the questions and provide more clarity and transparency to customers.

Apple initially said the new child safety features would be coming to the iPhone, iPad, and Mac with software updates later this year, and the company said the features would be available in the U.S. only at launch. Despite facing criticism, Apple today said it has not made any changes to this timeframe for rolling out the features to users.

Article Link: Apple Outlines Security and Privacy of CSAM Detection System in New Document

So... let me point out something that my wife and I were talking about.

Let's say Little Suzie decides she's going to take a nude selfie... Apple intervenes and says, "Are you sure?" Suzie says yes, and Apple sends the nude selfie to Daddy.

Now DADDY is in possession of child porn.

How brilliant is that? Does nobody think this stuff through?

For someone who says everyone else doesn't understand how this works, you really don't.

They generate hashes for every image on your device. If you then enable iCloud Photos and upload photos that fail the hash check, it uploads not only the photo but additional data. Fail somewhere around 30 of the hash checks and an Apple employee looks at the photos. If they think it's kiddie porn, you go to jail.

This is different from having your photos scanned only in the cloud.

What additional data does it upload that isn't already on iCloud? Can it upload your fingerprint data? Can it upload your Face ID data along with the positive hash match? Can it upload your WhatsApp chats? What additional data outside of iCloud data can it upload? Please enlighten me 😂

They would need to write new code to have the iPhone back itself up without user consent. The code doing the scan likely just needs a minor tweak to one or two ‘if else’ statements. There is much higher potential for a government to request or require compliance with demands involving this new built-in scanning tool than for it to ask Apple to create secret backups of devices. None of these changes has to be secretive.

You're not really addressing what I said.

Your earlier argument was that this new CSAM detection gives Apple the ability to upload data if Apple secretly updates its software to do so. This new feature is no worse than Apple's existing capability to back up your entire device: Apple could just as well secretly update its software to force everyone to back up to the cloud.

You are dodging the question. This has nothing to do with distrusting Dr. Fauci, this is merely an attempt to help qualify his vast expertise for the public.

Dr. Fauci wears glasses, yes?

So because of flaws in his vision, he has a prescription for lenses to correct his vision. The effectiveness of his prescription is dependent on the severity of his visual issues, yes?

Since Dr. Fauci cannot see viral matter with his “poor” human vision, he needs another type of glasses with a certain “prescription” to see down that small.

It would, however, be faulty to assume Dr. Fauci’s regular glasses restore his vision to 20/20, because the severity can be greater than what is treatable, and there is also a range of diminishing returns past the point of perfect 20/20 correction.

So how good are his other “glasses”? How well do they work, precisely?

I have no idea where you're going regarding his vision and that he wears glasses. Smells like obfuscation to me.

With respect to Dr. Fauci, he's pretty much an open book. Start with wiki if you have to. Then go deeper. Much deeper. He has a 40 year track record of dealing with various infectious diseases and pandemics. Same with Larry Brilliant.

What are your qualifications that permit you to qualify Fauci's, or Brilliant's background?

Are you an epidemiologist or virologist who has engaged in similar research?

'Cause Apple doesn't want the liability of child porn on its iCloud servers. This is about protecting Apple.

I just don't want Apple to be scanning iCloud, period. It's a way to look over and go through our private data. What if information gets leaked to the government or to criminals? Who's held responsible for that?

Find an alternative way to catch criminals. And why is Apple even getting involved?

MacRumors guide to endless profit

Step 1: Create a new thread every day about Apple’s CSAM filter

Step 2: Wait for inevitable 500 replies and 10,000 ad impressions

Step 3: Repeat Steps 1-2 every day

Step 4: Profit????


I sort of feel like some people are simply determined to defend Apple, no matter what.

I feel like some people have no desire to understand or seek out the larger context, and instead believe Apple execs, whom they apparently think aren't very smart, just decided to do this on a whim one morning, assuming the public would be fine with it and there would be no adverse consequences for the company.


I sort of feel like other people are determined to find something wrong with this perfectly innocent and harmless on-device scanning system that only comes into play when you upload thirty child porn images.

Google and others don't brag about privacy as a selling point.

I am not defending Apple on this one, but how are you better off with Windows and Android privacy-wise?

This is a very slippery slope. I don't like the idea that Apple has the key to our iCloud Photos or that the phones are capable of scanning text messages, even if it is on device. Next thing you know, governments will be demanding that Apple scan for images like Tiananmen Square.

Exactly. People buy Apple b/c it's the privacy-focused option.

Google and others don't brag about privacy as a selling point.

So that big billboard with Apple's ad was missing the "* E"?

As someone else already said, you don't own any server space on iCloud. You're renting it and entered into a legal agreement with Apple regarding it. Here's the document:

Note especially the following paragraph:


Absolutely atrocious decision-making. This follows the steep decline in hardware quality, software quality and customer service quality. This is one step removed from combing through documents stored in iCloud, “scanning” emails and browser history, and listening to voicemails. I’ve already canceled my 2TB plan and moved everything offline. I’m a former fanboy with dozens and dozens of Apple purchases over the last 15 years. I said a year ago I’d never buy another Apple product, and I haven’t. Now, though, I’m actually selling the ones I do have on the secondary market. RIP Apple.

OK, why hasn't China already done that since 2005? Were they waiting for Apple to join other tech companies in scanning for CSAM? 😂

This is a very slippery slope. I don't like the idea that Apple has the key to our iCloud Photos or that the phones are capable of scanning text messages, even if it is on device. Next thing you know, governments will be demanding that Apple scan for images like Tiananmen Square.

Yet here you are on MacRumors, with Apple living rent-free in your head.

Absolutely atrocious decision-making. This follows the steep decline in hardware quality, software quality and customer service quality. This is one step removed from combing through documents stored in iCloud, “scanning” emails and browser history, and listening to voicemails. I’ve already canceled my 2TB plan and moved everything offline. I’m a former fanboy with dozens and dozens of Apple purchases over the last 15 years. I said a year ago I’d never buy another Apple product, and I haven’t. Now, though, I’m actually selling the ones I do have on the secondary market. RIP Apple.
