True, and unfortunately an imperfect example in itself. In any case, it's helpful: this isn't someone sitting in your house looking through your stuff. This is their software running on your phone, acting only on the photos you choose to upload to their servers, which they already have full access to anyway.

An example that is based on previous attempts to deprive people of privacy, and which many already consider a questionable action.


Apple Outlines Security and Privacy of CSAM Detection System in New Document

- Thread starter MacRumors



Which, honestly, is even worse. A hardware shop is gaping at my stuff in certain cases and reports it to the police if the criteria fit. NO hardware shop has to do that. NO ONE. Even if the shop is called "Apple"...

No, your house is searching itself. If it finds that certain criteria are met, then it calls a third party to double-check before calling the cops.

That’s not what Apple wrote on the side of a building.

You mean "What happens on your iPhone stays on your iPhone"? But you know that if you choose to use iCloud to store your photos, you are voluntarily moving them off your iPhone by definition, right?

Heck, you don't have to be at the airport! Your bag just tells someone you will never meet whatever is in it and where you are.

Owning Apple products is like having a bag that starts screaming “my owner is stalking you and is a pedo” if a stranger stands next to it for 5 minutes.

"Stupid" as defined by someone I don't know, analyzed by someone who has no stake in the outcome, and passed on to a group universally known for their persevering lack of morals and boundless corruption. Sounds like a great plan.

Good luck with that! I'd suggest the better, more reasonable alternative is to let go of your paranoia about it and just move on with life. If you're not uploading anything stupid to their servers, Apple doesn't care what you do.

I prefer the strategy of don't let me think a company is a spy and I won't remind everyone every chance I get that they are.

Apple says they care about privacy when designing how you can and cannot use AirTags, but it seems that they weren't really concerned about privacy but rather about controlling privacy. They want to know what people are doing, but they don't want you to know what other people are doing.

Owning Apple products is like having a bag that starts screaming “my owner is stalking you and is a pedo” if a stranger stands next to it for 5 minutes.

How many people do you believe actually think this? One?

Owning Apple products is like having a bag that starts screaming “my owner is stalking you and is a pedo” if a stranger stands next to it for 5 minutes.

So glad I don’t live in the U.S. If Apple ever rolls out this back door to my country I’ll sell all my Apple gear.

This sounds worse and worse the more they explain it.

Awful rollout and it’s still dubious at best.

There's a reason they're releasing this and Craig's interview on a Friday afternoon when no one's paying attention.

Then you haven't been reading the posts. Whatever the aims, this is SURVEILLANCE, and in any event Apple does not have the database of all the crime agencies that are employed fighting child porn! So it's an absolutely ridiculous idea. Then, of course, add to that the fact that, if anything, it will assist those engaged in child porn: Apple has already telegraphed what its software will do, and these criminals will take action to prevent their photos from being scanned, by avoiding iCloud, using a different transmission system, or encrypting their files, thus making it HARDER for the agencies that are AUTHORISED to pursue these criminals.

Weird, because I haven’t seen a single coherent explanation of why it’s a problem if your own device scans your photos for child porn, and only does so if you are trying to upload onto Apple’s servers, and only produces information to Apple if you have at least thirty child porn photos that you are trying to upload.

I don't pay for a car and then have the manufacturer decide they want to drive it!!

I don't pay the electricity bill for Apple or anyone else to introduce SURVEILLANCE on my equipment.

Apple. I absolutely love the company, but for God's sake do not go down this ridiculous route that makes Apple a laughing stock, especially given the massively high profile Apple has made about protecting privacy.

cmaier

If you don't mind them engaging in surveillance of your hardware, then good luck to you, if IT'S YOUR CHOICE. But we are not given a choice; it's being arbitrarily introduced and undermines Apple's philosophy on privacy.

If anyone thinks it would always stay at potential child abuse pictures, I think they are kidding themselves.

Whatever is said to justify it, and in this case it's the emotive subject of child abuse, it is still SURVEILLANCE. Plus, the irony is that it will not help fight the disgusting use of children; if anything, it makes life harder for those seeking to make these people pay for their crimes.

Telegraphing what their software will do alerts criminals to avoid the situation! That will not help put them away; it just sets the precedent for a surveillance culture, ironically perpetrated by an organisation with a wonderful reputation for upholding privacy, which even includes that in its advertisements to set itself apart from other tech companies.

I’m starting to see more and more comments from the limited number of people who seem to be ok with this spying. I smell Apple’s PR team at work with their influencers

But they will permit up to 29 images that are identified on upload as child porn to exist on their servers, so obviously they are either breaking the law themselves or there is no legal prohibition on hosting CSAM on their servers.

They have a legal requirement to not host CSAM on their servers, which they own and you are renting. They get in trouble if they let you store it.

No, it's SURVEILLANCE; the idea that it's not mass surveillance is obfuscation. How many Apple devices in use will have this software? That's not mass surveillance?

People who are into pornography of any type are usually addicted to it and collect hundreds or thousands of images, not just a dozen or so, so 30 seems reasonable to me. Cloud services have already been scanning for CSAM on their servers, so no one has "gotten around" this before or now. They still won't be able to store CSAM collections on iCloud without being detected. They could have 100,000 CSAM images on their phone and not use iCloud for photos, and Apple will never know about that, precisely BECAUSE this is not "mass surveillance" as some people are twisting it.

I'm afraid what comes across is not posters 'twisting things'.

You can't separate it into little surveillance and mass surveillance, and it's ludicrous to suggest that when you consider Apple's user base.


It's your choice to install iOS 15, or not. You could be part of what I'm assuming will be a relatively small, but significant, group of people who protest this with their actions. Apple is pretty happy to publish percentages of upgraders. In short, this is not something that is being introduced without your choice.

cmaier

If you don't mind them engaging in surveillance of your hardware, then good luck to you, if IT'S YOUR CHOICE. But we are not given a choice; it's being arbitrarily introduced and undermines Apple's philosophy on privacy.

You realize that Apple (or insert any other cloud storage provider) is already surveilling your photos when they are uploaded to their servers, right? This just moves where it's done. The only coherent argument for why they might be doing this is if they have plans for full E2EE for iCloud Photos in the future. The alternatives given (government bullying/mandates) are laughable: if this were the case, then we'd either A) not get any announcement that they are doing this and/or B) get a different announcement that the government is attempting to force them to do something and they are doing everything they can to fight it.

... it is still SURVEILLANCE. Plus, the irony is that it will not help fight the disgusting use of children; if anything, it makes life harder for those seeking to make these people pay for their crimes.

Telegraphing what their software will do alerts criminals to avoid the situation! That will not help put them away; it just sets the precedent for a surveillance culture, ironically perpetrated by an organisation with a wonderful reputation for upholding privacy, which even includes that in its advertisements to set itself apart from other tech companies.

"Stupid" as defined by someone I don't know, analyzed by someone who has no stake in the outcome, and passed on to a group universally known for their persevering lack of morals and boundless corruption. Sounds like a great plan.

No, "stupid" as defined by law and/or the terms of service you agreed to with Apple. There's no mystery here.

It shouldn't surprise us if some people here in the forum are on Apple's payroll.

I’m starting to see more and more comments from the limited number of people who seem to be ok with this spying. I smell Apple’s PR team at work with their influencers

It's normal that a company like Apple monitors forums and pays people to post.


I don’t want to be the one in a trillion that suddenly turns out to be one in a million (or less) due to a bug in Apple's OS, firmware, or software. It’s not as if iOS 13, iOS 14, or Big Sur have demonstrated exceptionally high software quality.

You probably have a higher chance of a government getting a warrant to decrypt all of your iCloud data after mistaking you for someone else (which they can do today).

If you don't want that chance, your best bet is to turn off iCloud.

Holy cow. Now apple is saying they are going to use a foreign nation to evaluate my photos for illegal content?

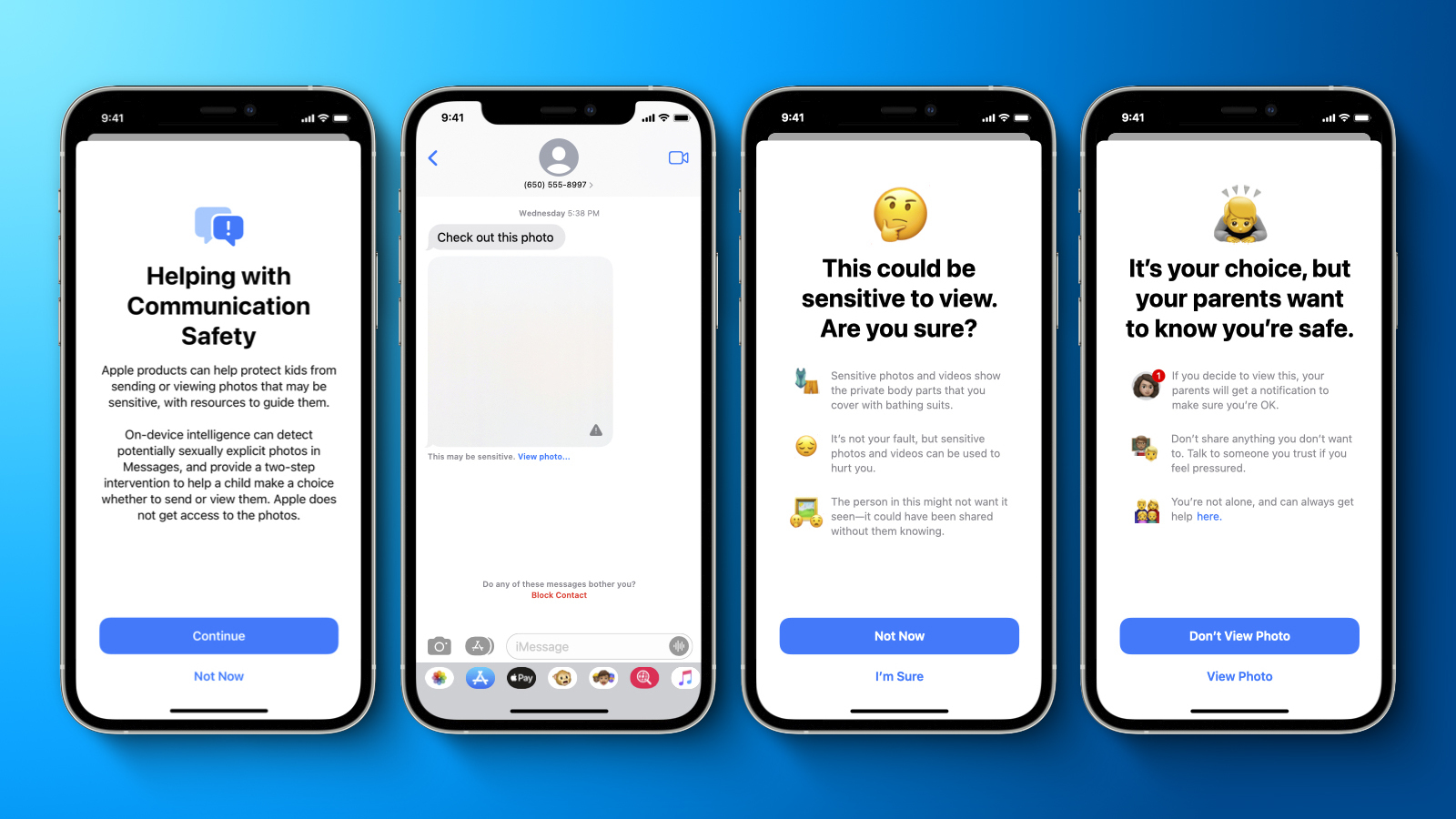

Apple today shared a document that provides a more detailed overview of the child safety features that it first announced last week, including design principles, security and privacy requirements, and threat model considerations.

Apple's plan to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos has been particularly controversial and has prompted concerns from some security researchers, the non-profit Electronic Frontier Foundation, and others about the system potentially being abused by governments as a form of mass surveillance.

The document aims to address these concerns and reiterates some details that surfaced earlier in an interview with Apple's software engineering chief Craig Federighi, including that Apple expects to set an initial match threshold of 30 known CSAM images before an iCloud account is flagged for manual review by the company.

Apple also said that the on-device database of known CSAM images contains only entries that were independently submitted by two or more child safety organizations operating in separate sovereign jurisdictions and not under the control of the same government.

Apple added that it will publish a support document on its website containing a root hash of the encrypted CSAM hash database included with each version of every Apple operating system that supports the feature. Additionally, Apple said users will be able to inspect the root hash of the encrypted database present on their device, and compare it to the expected root hash in the support document. No timeframe was provided for this.
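The article does not say how Apple computes that root hash. As a rough illustration of how a single published digest can commit to an entire database, here is a minimal Python sketch that assumes a plain SHA-256 Merkle tree; `merkle_root`, `database_matches_published`, and the byte-string entries are hypothetical and are not Apple's format.

```python
import hashlib


def merkle_root(entries: list[bytes]) -> str:
    """Hypothetical root hash: a plain SHA-256 Merkle tree over the entries.

    This is NOT Apple's construction (the article does not describe it); it only
    shows how one short digest can commit to every entry in a database.
    """
    if not entries:
        return hashlib.sha256(b"").hexdigest()
    # Leaf level: hash each database entry.
    level = [hashlib.sha256(e).digest() for e in entries]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # naive padding: repeat the last node
        # Hash adjacent pairs to build the next level up.
        level = [hashlib.sha256(level[i] + level[i + 1]).digest()
                 for i in range(0, len(level), 2)]
    return level[0].hex()


def database_matches_published(entries: list[bytes], published_root: str) -> bool:
    """Compare the locally computed root with a value from a (hypothetical) support document."""
    return merkle_root(entries) == published_root.lower()


db = [b"entry-1", b"entry-2", b"entry-3"]
published = merkle_root(db)
print(database_matches_published(db, published))
print(database_matches_published(db + [b"extra"], published))
```

In this toy scheme, changing any entry changes the root, so a user holding the published value could tell that the on-device database differs. The naive padding also means a list and the same list with its last entry repeated share a root, so a real design would need extra domain separation.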

In a memo obtained by Bloomberg's Mark Gurman, Apple said it will have an independent auditor review the system as well. The memo noted that Apple retail employees may be getting questions from customers about the child safety features and linked to a FAQ that Apple shared earlier this week as a resource the employees can use to address the questions and provide more clarity and transparency to customers.

Apple initially said the new child safety features would be coming to the iPhone, iPad, and Mac with software updates later this year, and the company said the features would be available in the U.S. only at launch. Despite facing criticism, Apple today said it has not made any changes to this timeframe for rolling out the features to users.

Article Link: Apple Outlines Security and Privacy of CSAM Detection System in New Document

The first protection against mis-inclusion is technical: Apple generates the on-device perceptual CSAM hash database through an intersection of hashes provided by at least two child safety organizations operating in separate sovereign jurisdictions – that is, not under the control of the same government. Any perceptual hashes appearing in only one participating child safety organization’s database, or only in databases from multiple agencies in a single sovereign jurisdiction, are discarded by this process, and not included in the encrypted CSAM database that Apple includes in the operating system. This mechanism meets our source image correctness requirement.
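The intersection rule quoted above can be sketched in a few lines of Python. This is an illustration only: the organization names, jurisdictions, and hash strings are hypothetical placeholders, and real perceptual hashes would be opaque values rather than short strings. A hash survives only if organizations from at least two different jurisdictions submitted it, so several agencies in the same jurisdiction cannot add an entry on their own.

```python
from collections import defaultdict


def build_on_device_database(submissions: dict[str, tuple[str, set[str]]]) -> set[str]:
    """Keep only hashes submitted from at least two distinct jurisdictions.

    `submissions` maps a (hypothetical) organization name to a pair of
    (jurisdiction, set of perceptual hashes it submitted).
    """
    jurisdictions_per_hash: dict[str, set[str]] = defaultdict(set)
    for jurisdiction, hashes in submissions.values():
        for h in hashes:
            jurisdictions_per_hash[h].add(jurisdiction)
    # Several agencies in one jurisdiction still count as a single jurisdiction.
    return {h for h, js in jurisdictions_per_hash.items() if len(js) >= 2}


submissions = {
    "org_a": ("jurisdiction_1", {"h1", "h2", "h3"}),
    "org_b": ("jurisdiction_1", {"h2", "h4"}),
    "org_c": ("jurisdiction_2", {"h1", "h4", "h5"}),
}
print(sorted(build_on_device_database(submissions)))
```

With this input, `h2` is dropped even though two organizations submitted it, because both sit in `jurisdiction_1`; `h1` and `h4` are kept.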

Darth Tulhu


Exactly. They're breaking into my house to look at my stuff without the authority to do so.

Apple isn’t a cop.

But the issue here is the reverse.

I don’t need a warrant to search your bags when you enter my house.

You would need to

A: actually be a cop

AND

B: have a warrant to enter my house to check my bags.

My problem with this is that Apple (and the industry really) sold us this privacy thing like it was analogous to a safety deposit box: only I look at what's in the box, regardless of where it sits. My hopes that Apple was different are completely dashed in this "privacy" regard.

But the truth is that nothing is hidden really. You're taking their word for it. If we don't like it, just don't buy or use their stuff. You effectively give up the right to privacy when you go online in ANY way.

I don't have to like that this is the way the entire industry is, but I can deal with it.

Thus, this will not change the fact that I'll STILL use Apple to do my storing and syncing and computing. Privacy has NEVER been the primary reason why I use Apple products. It's the sweet hardware, sweet software integration, and the ecosystem.

This is but one drawback/tradeoff in way too many benefits with Apple (at least for now), so bring on that keynote.

This is one place where I am dumbfounded. They could easily get the 1 in 1 trillion false positive rate with only 4 matches if NeuralHash's false positive rate were 1 in 10,000.

But they will permit up to 29 images that are identified on upload as child porn to exist on their servers, so obviously they are either breaking the law themselves or there is no legal prohibition on hosting CSAM on their servers.

My thoughts on this are one of the following:

-- "Craig is lying, and they are flagging with much less"

-- "NeuralHash must be much worse than I could put together in a few weeks' time."

-- "They are being insanely cautious to ensure not a single false positive will ever happen ever to anyone"
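The arithmetic in this post can be checked with a short sketch. It assumes, hypothetically, that false matches are independent with a fixed per-image rate `p` (the 1 in 10,000 from the post) and models an account as a library of `n` photos; Apple's actual NeuralHash rate and its account-level calculation are not given in the thread. Four specific photos all matching falsely has probability p^4 = 10^-16, but across a whole library the chance of reaching `k` false matches grows with `n`, which may be part of why a larger threshold was chosen.

```python
import math


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), assuming independent false matches.

    The tail is summed directly (not as 1 - CDF) so very small probabilities
    stay accurate. Requires 0 < p < 1.
    """
    if k <= 0:
        return 1.0
    if k > n:
        return 0.0
    # Log of the first tail term: C(n, k) * p^k * (1 - p)^(n - k).
    log_term = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                + k * math.log(p) + (n - k) * math.log1p(-p))
    term = math.exp(log_term)
    total = 0.0
    for i in range(k, n + 1):
        total += term
        # Ratio of consecutive binomial terms: C(n, i+1)/C(n, i) * p/(1-p).
        term *= (n - i) / (i + 1) * p / (1 - p)
    return min(total, 1.0)


p = 1e-4          # hypothetical per-image false-match rate from the post
library = 10_000  # hypothetical number of photos in one account
print(prob_at_least(4, 4, p))         # four specific photos: p ** 4
print(prob_at_least(4, library, p))   # threshold of 4 across the library
print(prob_at_least(30, library, p))  # threshold of 30 across the library
```

Under these assumptions, a 10,000-photo library reaches four false matches with a probability of roughly 2 percent, while reaching 30 is far below one in a trillion.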

No, it's SURVEILLANCE; the idea that it's not mass surveillance is obfuscation. How many Apple devices in use will have this software? That's not mass surveillance?

I'm afraid what comes across is not posters 'twisting things'.

You can't separate it into little surveillance and mass surveillance, and it's ludicrous to suggest that when you consider Apple's user base.

The key word that I have a problem with here is not "mass", but "surveillance". "Surveillance" implies a human is directly observing or remotely monitoring an individual to "catch" them doing something wrong. That's not at all what's going on here. No one at Apple knows anything about what's on your phone unless you choose to upload illegal content away from your phone and onto their servers, as has been explained ten thousand times.

"It's your choice, but your parents want to know you're safe."

When did I give Apple the right to speak to my children on my behalf? Don't they have any idea how creepy that is?


But they will permit up to 29 images that are identified on upload as child porn to exist on their servers so obviously they are either breaking the law themselves or there is no legal prohibition on hosting CSAM on their servers.

The chances that someone into child porn has only 29 images is slim to none.

No, they're protecting against, e.g., China trying to find CCP opposition by inserting photos of CCP opposition leaders into the CSAM database, unless the US agrees that those CCP opposition photos are also CSAM.

Holy cow. Now apple is saying they are going to use a foreign nation to evaluate my photos for illegal content?


"It's your choice, but your parents want to know you're safe."

When did I give Apple the right to speak to my children on my behalf? Don't they have any idea how creepy that is?

So, you don't want to know your kids are safe?

It ought to be an axiomatic statement.
